I focus on growth you can measure, not vague promises. When crawl waste clogs indexation, the right fixes feel less like a pressure valve and more like risk reduction. Clean the pipes, show the numbers, and let high-intent pages get seen. That is the spirit of smart crawl-budget work: consistent, accountable, and tied to revenue pages that actually move pipeline.

The first 30–60 days: quick wins that move pipeline

I start with smaller projects that clear obvious waste and free up crawl capacity. Each action maps to outcomes leaders care about: faster discovery of revenue pages, steadier rankings, and lower blended CAC as organic scales. For a deeper primer, see this crawl budget optimization guide.

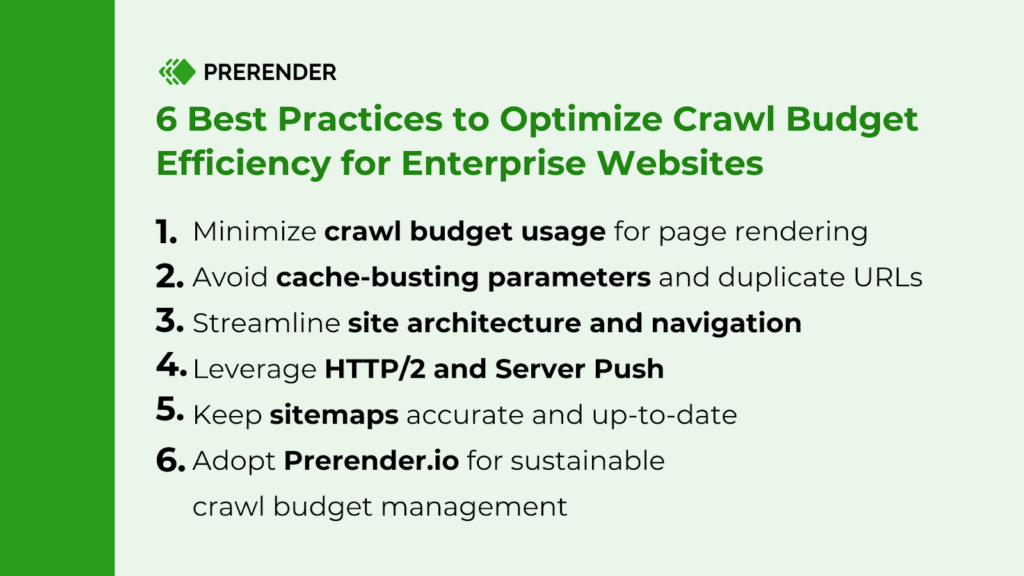

- Consolidate duplicate URLs and parameters. Strip UTM, session IDs, and unnecessary sort/filter variants so one clean URL represents each intent. Expect impact within about a month. Learn how index bloat happens and how to fix it here.

- Fix noisy 4xx and 5xx at scale. Redirect or retire what should go, stabilize what should not fail. Brush up on HTTP return codes and common crawl errors. Healthier crawl stats typically show within 2–6 weeks.

- Refresh and prune XML sitemaps by section. Keep them accurate, remove stale or dead entries, and keep lastmod truthful (Google only relies on it when it consistently matches real content changes) so crawlers prioritize fresh changes.

- Tighten internal linking to revenue pages. Reduce click depth to key templates so they get crawled and recrawled faster.

- Improve server response and caching. Faster, consistent TTFB earns more crawl capacity and improves user speed. Consider modern protocols like HTTP/2 where appropriate; HTTP/2 Server Push has since been removed from Chrome, so treat it as a legacy technique rather than a lever.

- Define rules for facets and filters. Allow a small, pre-defined set of crawlable combinations that have search value; route the rest away from the index while keeping them usable.
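For the sitemap bullet, here is a minimal sketch of "refresh and prune by section" in Python. It assumes you already have a page inventory with a hypothetical `PageRecord` shape (URL, section, last status, noindex flag, declared canonical, last content change); only live, indexable, self-canonical URLs make it into each section's `<urlset>`.

```python
from dataclasses import dataclass
from datetime import date
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class PageRecord:
    # Hypothetical inventory record; adapt fields to your CMS or crawler export.
    url: str
    section: str          # e.g. "services" or "blog"
    status: int           # last HTTP status seen for the URL
    noindex: bool         # True if the page carries a noindex directive
    canonical: str        # canonical URL the page declares
    last_modified: date   # last substantive content change, not last deploy


def is_sitemap_worthy(page: PageRecord) -> bool:
    """Keep only live, indexable, self-canonical URLs."""
    return page.status == 200 and not page.noindex and page.canonical == page.url


def build_section_sitemaps(pages: list[PageRecord]) -> dict[str, str]:
    """Return one pruned <urlset> XML string per section, with lastmod."""
    sections: dict[str, list[PageRecord]] = {}
    for page in pages:
        if is_sitemap_worthy(page):
            sections.setdefault(page.section, []).append(page)

    sitemaps: dict[str, str] = {}
    for section, entries in sorted(sections.items()):
        urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
        for page in sorted(entries, key=lambda p: p.url):
            url_el = ET.SubElement(urlset, "url")
            ET.SubElement(url_el, "loc").text = page.url
            ET.SubElement(url_el, "lastmod").text = page.last_modified.isoformat()
        sitemaps[section] = ET.tostring(urlset, encoding="unicode")
    return sitemaps
```

A section whose pages all fail the filter simply produces no sitemap, which is the pruning step in action.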

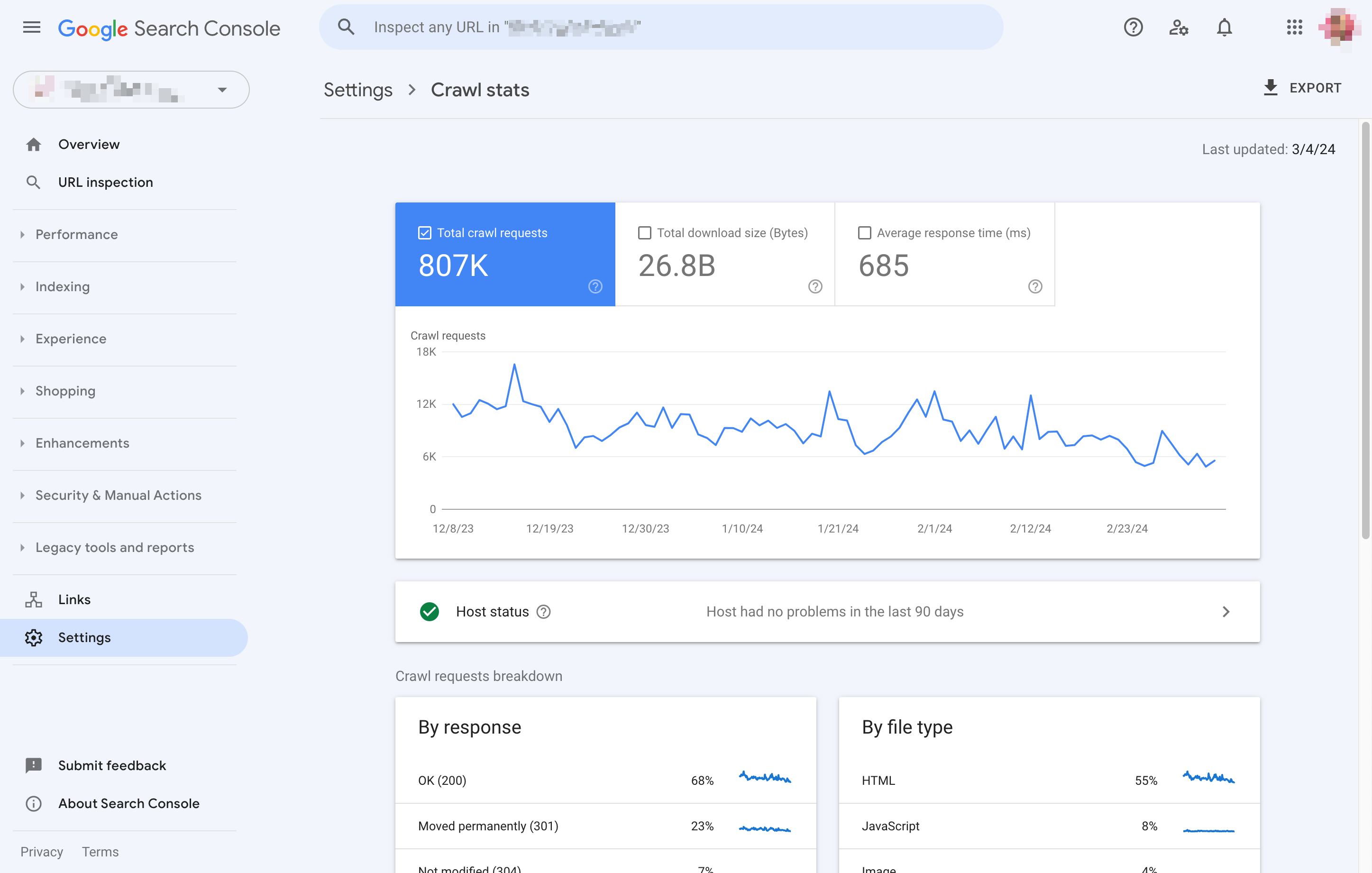

To keep this grounded, I baseline three numbers before any change and track them weekly: average pages crawled per day, the share of priority templates crawled in the last 14 days, and the server error rate on bot traffic. These are the early warning lights for whether the fixes are working. Use Google Search Console and its crawl reports to validate, and cross-check with SEO Log File Analyser to see real bot activity.
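The three baseline numbers can be computed from parsed, verified crawler hits. This is a sketch that assumes a hypothetical `BotHit` record (day, URL path, status) and a known set of priority URLs; "pages crawled per day" is approximated as bot requests averaged over the full calendar span, so quiet days count as zero.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class BotHit:
    # Hypothetical record produced by your log parser (verified crawler hits only).
    day: date
    url: str
    status: int


def baseline(hits: list[BotHit], priority_urls: set[str], today: date, window_days: int = 14) -> dict[str, float]:
    """Average bot requests per day, share of priority URLs crawled recently, and 5xx rate."""
    if not hits:
        return {"pages_crawled_per_day": 0.0, "priority_crawled_share": 0.0, "bot_5xx_rate": 0.0}

    # Average over the whole span so days without hits pull the number down.
    first = min(h.day for h in hits)
    last = max(h.day for h in hits)
    per_day = len(hits) / ((last - first).days + 1)

    # Priority URLs with at least one hit in the last `window_days` days, today included.
    recent = {h.url for h in hits if timedelta(0) <= today - h.day < timedelta(days=window_days)}
    share = len(priority_urls & recent) / len(priority_urls) if priority_urls else 0.0

    server_errors = sum(1 for h in hits if 500 <= h.status <= 599)
    return {
        "pages_crawled_per_day": per_day,
        "priority_crawled_share": share,
        "bot_5xx_rate": server_errors / len(hits),
    }
```

Rerunning the same function weekly on a fresh log window gives the trend lines to compare against the baseline.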

Eliminate waste: duplicates and errors

Duplicate URLs burn crawl capacity and muddy analytics by splitting the same page across variants. I normalize structure (lowercase, a consistent trailing-slash rule), enforce one canonical per cluster, and 301 near duplicates. If a low-value facet helps users but should not rank, I leave it usable and mark it noindex. For faceted navigation, I cap crawlable ranges and canonicalize the rest back to the base view. With Google's legacy URL Parameters tool deprecated, I handle parameter rules at the application or CDN layer rather than hoping a setting will fix it. For a refresher on how crawlers operate, see the explainers on Googlebot and on what it means for a site to be easy to crawl.
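Handling parameter rules "at the application layer" can be as small as one normalization function. The sketch below assumes hypothetical tracking parameters, a hypothetical facet allowlist, and a site whose paths are case-insensitive and always end in a slash; adjust all three before relying on it.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical rules; replace with your own parameter inventory.
TRACKING_PARAMS = {"gclid", "fbclid", "sessionid", "sid"}
TRACKING_PREFIXES = ("utm_",)
# Facet parameter sets that earn their own indexable URL; every other
# combination canonicalizes back to the base view.
CRAWLABLE_FACETS = {frozenset({"color"}), frozenset({"brand"})}


def clean(url: str) -> str:
    """Lowercase scheme/host/path, force a trailing slash, drop tracking params, sort the rest."""
    parts = urlsplit(url)
    path = parts.path.lower() or "/"  # assumption: paths are case-insensitive on this site
    if not path.endswith("/"):
        path += "/"  # assumption: the site's rule is "always a trailing slash"
    params = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key.lower() not in TRACKING_PARAMS and not key.lower().startswith(TRACKING_PREFIXES)
    )
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(params), ""))


def canonical_for(url: str) -> str:
    """Return the URL a variant should 301 to or declare as canonical."""
    cleaned = clean(url)
    parts = urlsplit(cleaned)
    keys = frozenset(key for key, _ in parse_qsl(parts.query))
    if not keys or keys in CRAWLABLE_FACETS:
        return cleaned
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
```

Any request whose raw URL differs from `clean(url)` is a candidate for a 301; allowlisted facets keep their own URL, and everything else points its canonical at the base view.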

Crawlers are patient, but not that patient. Persistent 4xx and 5xx erode trust and waste the allowance you do have. I redirect retired URLs to the most relevant target when intent still exists and return 410 for content that is gone for good. I fix root causes behind 5xx spikes - timeouts under load, memory caps, flaky upstreams - and use 503 with Retry-After only for short, planned maintenance. I also replace "temporary" 302s with 301s when the destination is actually permanent. For thin or expired pages, I remove or noindex at scale; if a seasonal page returns, I keep the URL and metadata intact so it retains context. If you're dealing with temporary inventory or listings, see guidance on how to manage old content and expired listings for ecommerce SEO here.
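The status-code rules in that paragraph reduce to a small decision function. This sketch uses a hypothetical `UrlState` record; the ordering (maintenance first, then permanent moves, then permanent removal) is one reasonable choice, not the only one.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class UrlState:
    # Hypothetical lifecycle record for a URL.
    redirect_to: Optional[str] = None              # most relevant live target when intent still exists
    gone_for_good: bool = False                    # removed with no replacement
    maintenance_retry_after: Optional[int] = None  # seconds; short, planned maintenance only


def response_for(state: UrlState) -> Tuple[int, Dict[str, str]]:
    """Map a URL's lifecycle state to a status code and response headers."""
    if state.maintenance_retry_after is not None:
        return 503, {"Retry-After": str(state.maintenance_retry_after)}
    if state.redirect_to:
        return 301, {"Location": state.redirect_to}  # permanent move, so not a 302
    if state.gone_for_good:
        return 410, {}
    return 200, {}  # live page, including a seasonal URL that has returned
```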

Crawl budget: when it matters and what misuse looks like

Crawl budget is two dials working together. Crawl rate limit (Google now calls it the crawl capacity limit) is how much your host can handle without tipping into errors. Crawl demand is how much a crawler wants to fetch based on quality, popularity, and change rate. This mostly matters for larger sites or stacks that generate many URL variants; Google's own guidance points at sites with roughly 10,000+ pages that change daily, or 1 million+ pages that change weekly. Smaller sites usually do not feel pressure unless filters, large knowledge bases, or frequent updates create extra surface area. For context on bot behavior, read Bot traffic: What it is and why you should care about it.

Signals of misuse show up quickly:

- A wide, persistent gap between total discoverable URLs and indexed pages.

- Frequent bot hits on parameters or infinite spaces that should never rank.

- Priority pages that go weeks without bot visits.

- Server logs that show 5xx spikes when crawlers ramp up.

- Sluggish TTFB during peaks.

- Heavy client-side rendering that delays discovery of content and links.
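The "5xx spikes when crawlers ramp up" signal is easy to check from daily log aggregates. A minimal sketch, assuming hits arrive as hypothetical `(day, status)` pairs and using illustrative thresholds (1.5× the median daily volume, more than 2% server errors) that you should tune to your own traffic:

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, Tuple


def crawl_error_spikes(
    hits: Iterable[Tuple[str, int]],
    ramp_factor: float = 1.5,
    error_threshold: float = 0.02,
) -> List[str]:
    """Return days where bot volume ramps above the norm AND the 5xx share is elevated."""
    volume: Dict[str, int] = defaultdict(int)
    errors: Dict[str, int] = defaultdict(int)
    for day, status in hits:
        volume[day] += 1
        if 500 <= status <= 599:
            errors[day] += 1
    if not volume:
        return []
    typical = median(volume.values())  # median resists a single huge day
    return sorted(
        day
        for day, count in volume.items()
        if count > ramp_factor * typical and errors[day] / count > error_threshold
    )
```

Days flagged here are the ones to line up against deploy notes and server metrics.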

If that sounds familiar, move the quick wins to the top of your list and recheck your baselines two weeks after each change. For Search Console how-tos, use our Search Console guide.

Make logs your source of truth

Screenshots are pretty; server logs are proof. Logs show what a crawler asked for, what the server returned, and how often the crawler comes back. I collect 60–90 days to smooth out odd weeks, then parse by user agent, URL, template, depth, and status code. I tag priority templates - services, case studies, category hubs - and look for two patterns: waste and misses. Waste looks like parameter storms, infinite calendars, duplicate sort orders, or soft 404s (empty results returning 200). Misses are revenue pages with no bot hits in the last two weeks.
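Here is a sketch of that parsing pass for logs in the common Apache/Nginx "combined" format. The template patterns and the soft-404 byte threshold are hypothetical; a tiny 200 response is only a suspect, not proof. It also filters on the user-agent string alone, which can be spoofed, so in production you would verify Googlebot with a reverse DNS lookup as Google recommends.

```python
import re
from collections import Counter
from datetime import datetime, timedelta, timezone
from typing import Iterable, List, Optional, Tuple
from urllib.parse import urlsplit

# Apache/Nginx "combined" log format.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] "(?P<method>\S+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<bytes>\S+) "[^"]*" "(?P<ua>[^"]*)"'
)

# Hypothetical priority templates; map these to your own URL scheme.
TEMPLATES = [
    ("service", re.compile(r"^/services/")),
    ("case_study", re.compile(r"^/case-studies/")),
    ("category", re.compile(r"^/category/")),
]
SOFT_404_MAX_BYTES = 2000  # hypothetical: tiny 200 responses are soft-404 suspects

Hit = Tuple[datetime, str, int, int]


def parse(line: str) -> Optional[Hit]:
    """Return (timestamp, url, status, bytes) for Googlebot lines, else None."""
    m = LOG_LINE.match(line)
    if not m or "Googlebot" not in m["ua"]:
        return None
    ts = datetime.strptime(m["ts"], "%d/%b/%Y:%H:%M:%S %z")
    size = 0 if m["bytes"] == "-" else int(m["bytes"])
    return ts, m["url"], int(m["status"]), size


def template_of(path: str) -> str:
    for name, pattern in TEMPLATES:
        if pattern.match(path):
            return name
    return "other"


def audit(lines: Iterable[str], priority_paths: Iterable[str],
          now: Optional[datetime] = None, window_days: int = 14):
    """Tally hits by (template, status), count waste patterns, list missed priority pages."""
    now = now or datetime.now(timezone.utc)
    by_template_status: Counter = Counter()
    waste: Counter = Counter()
    recent = set()
    for hit in map(parse, lines):
        if hit is None:
            continue
        ts, url, status, size = hit
        parts = urlsplit(url)
        by_template_status[(template_of(parts.path), status)] += 1
        if parts.query:
            waste["parameter_url"] += 1
        if status == 200 and size < SOFT_404_MAX_BYTES:
            waste["possible_soft_404"] += 1
        if now - ts <= timedelta(days=window_days):
            recent.add(parts.path)
    misses: List[str] = sorted(set(priority_paths) - recent)
    return by_template_status, waste, misses
```

The three return values map to the paragraph: status mix per template, waste counts, and misses (priority pages with no bot hit in the window).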

I also compare spike days to deploy notes or incidents; error storms often line up with a release or a vendor hiccup. When I can quantify the waste and its sources, decisions get easier, and it becomes straightforward to estimate how much crawl capacity a fix will free up. Pairing what logs show with the official crawl-stats report closes the loop between what crawlers try to do and what they actually achieve. If you need a workflow, consider SEO Log File Analyser and read more about crawl errors.
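Both ideas in that paragraph fit in a few lines: a back-of-the-envelope estimate of freed fetches and a check for deploys near spike days. The numbers in the usage note are illustrative, and freed fetches are not guaranteed to land on priority pages; the crawler still decides where to spend them.

```python
from datetime import date, timedelta
from typing import Dict, List


def freed_fetches_per_day(avg_bot_fetches_per_day: float, waste_share: float,
                          expected_reduction: float) -> float:
    """Rough estimate of daily fetches a fix could release for other URLs."""
    return avg_bot_fetches_per_day * waste_share * expected_reduction


def spikes_near_deploys(spike_days: List[date], deploy_days: List[date],
                        tolerance_days: int = 1) -> Dict[date, List[date]]:
    """Pair each error-spike day with deploys that landed within `tolerance_days`."""
    tolerance = timedelta(days=tolerance_days)
    return {spike: [d for d in deploy_days if abs(spike - d) <= tolerance] for spike in spike_days}
```

For example, 10,000 bot fetches a day with 30% spent on parameter URLs, and a fix expected to remove 80% of those, works out to roughly 2,400 fetches a day that could go elsewhere.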

Earn more crawl attention the right way

I cannot force crawlers to fetch everything, but I can make fetching my site easy and worthwhile. Speed and stability come first: enable modern protocols, compress assets, cache smartly, and trim heavy templates that get frequent bot hits. Smaller, faster pages mean more URLs fetched per day and better user experience. See these explainers on HTTP/2 and the now largely deprecated Server Push, and this media files optimization guide for JS-heavy sites.

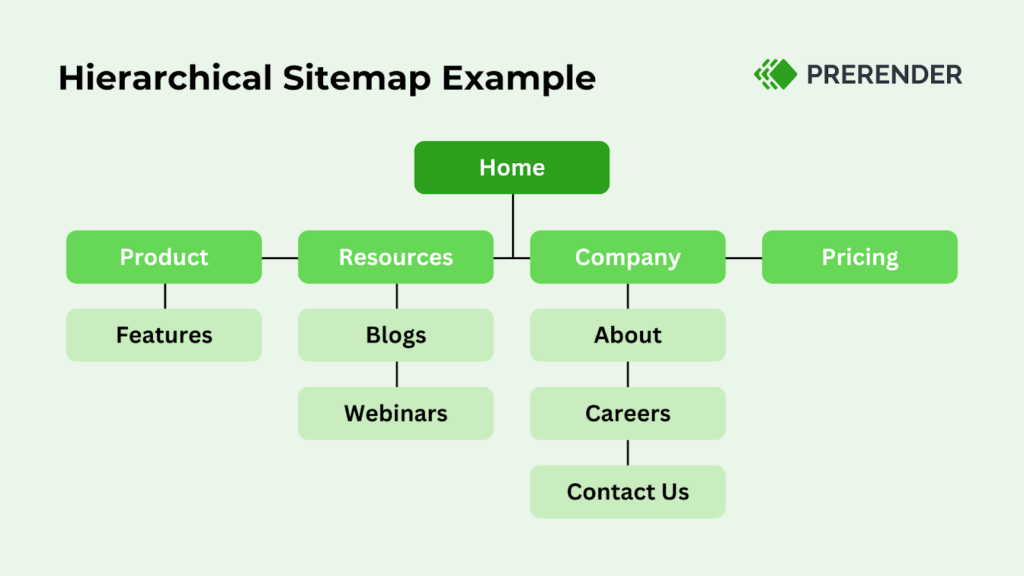

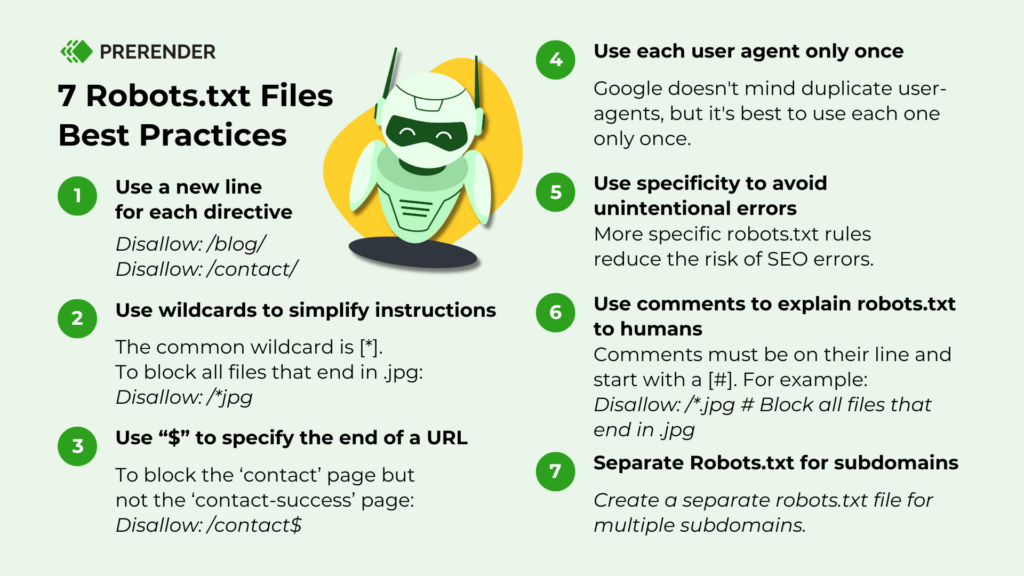

Structure is the next lever. I keep click depth shallow - two to three clicks from hubs to priority templates - and build topic hubs that funnel link equity and give crawlers clear pathways. Orphaned URLs that should rank get linked back into relevant clusters. Sitemaps stay accurate by section, with correct lastmod values; dead or duplicate URLs come out quickly so crawlers do not waste cycles. I use robots.txt to guide crawl paths (for example, keeping bots out of admin or staging) but not to remove previously indexed pages. Assets needed for rendering (CSS and JS) remain crawlable so bots can see layout and links. Because mobile-first indexing is the default, I make sure content, links, and structured data on mobile match desktop; desktop greatness will not save a thin mobile version.
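Click depth and orphans can be measured with a breadth-first search over the internal link graph. This sketch assumes a hypothetical `links` mapping (page to the pages it links to) exported from a crawler, and treats "orphan" as "not reachable from the start page by links".

```python
from collections import deque
from typing import Dict, Iterable, List, Tuple


def click_depths(links: Dict[str, List[str]], start: str) -> Dict[str, int]:
    """Breadth-first search: minimum number of clicks from `start` to each reachable URL."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def structure_report(links: Dict[str, List[str]], start: str, priority: Iterable[str],
                     max_depth: int = 3) -> Tuple[List[str], List[str]]:
    """Return (orphaned priority pages, priority pages deeper than max_depth)."""
    priority = list(priority)
    depth = click_depths(links, start)
    orphans = sorted(p for p in priority if p not in depth)
    too_deep = sorted(p for p in priority if p in depth and depth[p] > max_depth)
    return orphans, too_deep
```

Running it from each hub, not just the homepage, shows whether a hub actually keeps its cluster within two to three clicks.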

Modern stacks deserve special care. Heavy client-side apps can slow discovery, so I use server-side rendering or pre-rendering for sections where content must be visible immediately. After major changes, I look for higher hits on priority templates, lower error rates, and faster recrawl times in both logs and crawl-stats.

Pitfalls and practical answers

These traps look harmless until the graphs turn ugly:

- Overusing robots.txt for index management instead of noindex/canonicals. Learn the differences between directives such as noindex and nofollow, and when to use robots.txt.

- Ignoring mobile-first parity on important templates. See mobile-first indexing.

- Leaving 302s and 503s in place for long stretches. Review correct return codes.

- Blocking CSS or JS required to build links and content.

- Allowing infinite URL spaces (calendars, uncapped facets) to run wild. Avoid spider traps that keep generating new URLs with no search value.

- Putting session IDs in URLs or shipping stale, bloated sitemaps. Fix index bloat causes here.
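Two of these traps (blocked CSS/JS, and robots.txt rules that hide pages you want deindexed, since a crawler cannot see a noindex on a URL it is not allowed to fetch) can be caught with a quick check before deploying robots.txt changes. This sketch uses Python's standard `urllib.robotparser`; note that it does simple prefix matching and applies the first matching rule, without Google's `*`/`$` wildcards or most-specific-match precedence, so treat it as an approximation.

```python
from typing import List
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, must_be_crawlable: List[str],
                 user_agent: str = "Googlebot") -> List[str]:
    """Return the URLs from `must_be_crawlable` that the robots.txt rules would block."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in must_be_crawlable if not parser.can_fetch(user_agent, url)]
```

Feed it the CSS/JS files your templates load plus any URLs carrying noindex; the result should be an empty list.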

How long until fixes show results? Early wins typically appear within 30–60 days: fewer errors in crawl stats, more frequent hits on revenue pages, and steadier rankings as recrawl cadence improves.

Do smaller B2B sites need this? Usually not, until filters, large knowledge bases, or frequent updates expand the surface area. Tight hygiene still shortens time to value.

What is a healthy crawl-to-index ratio? There is no universal magic number. As a gut check, I want steady bot hits on priority templates every one to two weeks and a shrinking gap between submitted and indexed URLs.

How do I "see my budget"? There is no exact budget number; use the crawl-stats report for volumes and response types, then validate with logs and sitemaps to judge efficiency.

Should I block parameters or rely on canonicals/noindex? Prefer canonicals and noindex for consolidation and removal; use robots.txt to steer bots away from infinite spaces that do not need crawling.

Do JavaScript-heavy pages hurt capacity? They can, especially when content requires execution to appear; server-side rendering or pre-rendering helps crawlers fetch clean HTML and reduces wasted processing.

Week to week, I monitor indexed pages in priority sections, bot hits on revenue pages, 4xx/5xx rates on bot traffic, average response time and TTFB for bot requests, and the share of crawl wasted on parameters versus core templates. Those five metrics keep the work honest. If traffic suddenly dips, start here: Experienced a Sudden Drop in Traffic? Here’s How to Fix It.
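To keep those five metrics honest week to week, a small comparison against the baseline can flag anything moving the wrong way. The metric names and the 10% relative tolerance below are hypothetical; each metric carries a direction because more indexed pages is good while a higher error rate is bad.

```python
from typing import Dict, List

# +1: higher is better; -1: lower is better. Names are hypothetical labels
# for the five weekly metrics.
METRIC_DIRECTION = {
    "indexed_priority_pages": +1,
    "bot_hits_revenue_pages": +1,
    "bot_4xx_5xx_rate": -1,
    "bot_avg_ttfb_ms": -1,
    "parameter_crawl_share": -1,
}


def regressions(baseline: Dict[str, float], current: Dict[str, float],
                tolerance: float = 0.10) -> List[str]:
    """Return metrics that moved the wrong way by more than `tolerance` (relative change)."""
    flagged = []
    for name, direction in METRIC_DIRECTION.items():
        before, after = baseline[name], current[name]
        if before == 0:
            continue  # relative change is undefined; inspect these by hand
        change = (after - before) / before
        if direction * change < -tolerance:
            flagged.append(name)
    return flagged
```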

A final thought: crawl hygiene does not win deals by itself, yet it decides whether your most persuasive pages get a fair shot. Keep it simple, map fixes to outcomes, and let the compounding effect do the quiet work in the background. For adjacent opportunities, see how to improve AI search visibility: How to Get Your Website ‘Indexed’ By ChatGPT and Other AI Search Engines.
