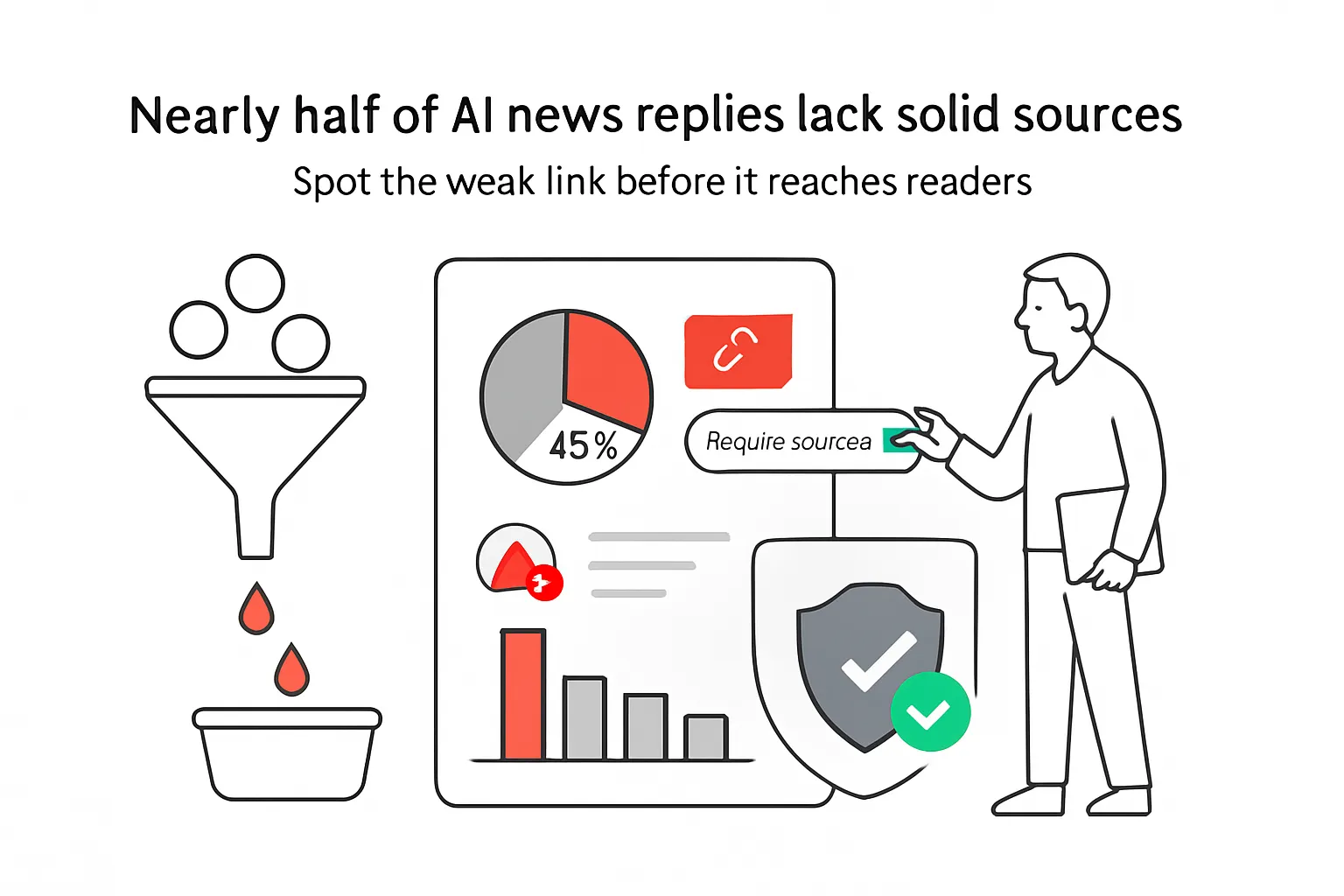

Consumer AI assistants frequently mishandled or misrepresented news in a large cross-market audit: 45% of 2,709 evaluated answers contained at least one significant issue. Findings come from a European Broadcasting Union (EBU) and BBC study spanning four leading assistants across 18 countries.

Executive snapshot

- 45% of 2,709 AI-assistant news answers contained at least one significant issue; 81% had some issue [S1].

- Sourcing was the main failure mode: 31% of responses had significant sourcing problems such as missing, misattributed, or misleading citations [S1].

- Platform spread: Gemini had significant issues in 76% of its responses, driven by 72% with sourcing problems. The other assistants were at or below 37% for significant issues and below 25% for sourcing problems [S1].

- Scope: Free/consumer versions of ChatGPT, Copilot, Gemini, and Perplexity were tested in 14 languages by 22 public-service media organizations across 18 countries [S1].

- Implication: Treat assistant-generated news answers as unverified summaries and cross-check against original sources before planning or publishing.

Method and source notes

The study assessed the integrity of news answers from consumer-grade assistants, measuring accuracy, sourcing, and related issues across 2,709 core responses. Responses were generated from May 24 to June 10, 2025, using a shared set of 30 core questions, with optional local prompts. Participating public-service media organizations temporarily lifted the technical blocks that restrict assistant access to their content for the test window and reinstated them afterward [S1].

Primary sources: the EBU/BBC 2025 report and its companion News Integrity in AI Assistants Toolkit for technology vendors, media organizations, and researchers [S1][S2]. Secondary summaries: Search Engine Journal coverage and Reuters reporting on public-trust implications [S3][S4].

Key limitations

- The study covers news Q&A on consumer tiers only, so findings may not generalize to paid or enterprise deployments [S1].

- The audit window was short, and performance can shift with model updates [S1].

- Issue severity thresholds follow the study’s rubric, so comparisons with other studies should be made cautiously [S1].

Findings on sourcing, accuracy, and assistant differences

Error incidence was high and consistent across languages and markets, underscoring reliability challenges for consumer assistants on news tasks [S1]. Sourcing failures dominated: absent citations, misattribution, and links that did not support claims were the most frequent and severe problems [S1].

Platform variance was pronounced. Gemini showed the highest rate of significant issues, largely tied to sourcing. ChatGPT, Copilot, and Perplexity performed comparatively better but still exhibited nontrivial error rates [S1].

The EBU/BBC also released a standardized evaluation and mitigation framework via its News Integrity in AI Assistants Toolkit to guide vendors and media organizations [S2]. EBU leadership warned that growing reliance on assistants for news could erode public trust if reliability gaps persist, a concern echoed in press coverage [S4].

Implications for marketers

- Likely: Treat assistant summaries as unverified. The error rates, especially in sourcing, warrant strict verification before using assistant output for content planning, briefs, ad copy, or client reporting [S1].

- Likely: Monitor how your brand and sources are cited in assistants. Misattribution and missing citations create reputational and compliance risk, particularly in regulated categories. Periodic audits of branded queries and high-value topics help surface issues early [S1].

- Likely: Maintain machine-readable citations. Clear bylines, dates, references, and structured data can reduce misreads and improve attribution when assistants draw from your content. This lowers risk but does not guarantee accuracy [S1][S2].

- Tentative: Favor channels that preserve source context for news-linked content. Prioritize owned properties, newsletters, and search surfaces with prominent links while assistants mature [S1][S4].

- Tentative: For sensitive claims in finance, health, legal, or safety, require dual-source confirmation from original documents before publication to avoid costly corrections [S1].

- Speculative: As vendors adopt the EBU/BBC toolkit, sourcing performance may improve. Re-test quarterly to track model drift and policy changes [S2].
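
As one way to picture the "machine-readable citations" point above, the sketch below builds a schema.org `NewsArticle` description as JSON-LD, with a byline, dates, and cited references. It is a minimal illustration, not something prescribed by the study or the toolkit: the function name `news_article_jsonld`, the sample article, and the `example.com` URLs are all hypothetical, and the study gives no evidence that any particular markup changes how assistants attribute content.

```python
import json


def news_article_jsonld(headline, url, author, publisher,
                        date_published, date_modified, citations):
    """Return a schema.org NewsArticle description as a JSON-LD string.

    `citations` is a list of (name, url) pairs for the works the article cites.
    """
    doc = {
        "@context": "https://schema.org",
        "@type": "NewsArticle",
        "headline": headline,
        "url": url,
        "datePublished": date_published,   # ISO 8601 date strings
        "dateModified": date_modified,
        "author": {"@type": "Person", "name": author},
        "publisher": {"@type": "Organization", "name": publisher},
        "citation": [
            {"@type": "CreativeWork", "name": name, "url": ref_url}
            for name, ref_url in citations
        ],
    }
    return json.dumps(doc, indent=2, ensure_ascii=False)


# Hypothetical values, for illustration only.
example = news_article_jsonld(
    headline="Example headline",
    url="https://example.com/news/example-headline",
    author="Jane Example",
    publisher="Example Media",
    date_published="2025-10-22",
    date_modified="2025-10-23",
    citations=[("Example source report", "https://example.com/report")],
)
print(example)
```

The resulting string would typically be embedded in a page inside a `<script type="application/ld+json">` element. As noted above, this may lower the risk of misattribution but does not guarantee accurate citation.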

Contradictions and gaps in the evidence

- Paid or enterprise models were not tested; providers claim stronger reliability and citation controls in paid tiers, so results may understate best-case performance [S1].

- The rubric separating "significant" issues from "some" issues is study-specific; without a public confusion matrix or inter-rater reliability metrics, comparability with other benchmarks is limited [S1].

- The short window and rapidly updating models mean results can age quickly; longer replications would strengthen confidence [S1].

- Findings apply to news Q&A and should not be generalized to other tasks without additional evidence [S1].

Data appendix

- Sample: 2,709 core assistant responses; 30 core questions; optional local questions [S1].

- Coverage: 14 languages; 18 countries; 22 public-service media organizations [S1].

- Tools: ChatGPT, Copilot, Gemini, Perplexity (consumer/free versions) [S1].

- Key rates: 45% significant issues; 81% some issue; 31% significant sourcing issues [S1].

- Platform spread: Gemini at 76% significant issues and 72% sourcing issues; others ≤37% significant and <25% sourcing [S1].
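
To give the headline rates a sense of scale, the sketch below converts them into approximate response counts out of the 2,709-response sample. These counts are back-of-envelope estimates derived here from the rounded percentages, not figures taken from the report, so each may be off by several responses. The names `RATES_PERCENT` and `approx_count` are illustrative.

```python
TOTAL_RESPONSES = 2709  # core responses in the sample [S1]

# Headline rates as whole percentages, as listed in the appendix above.
RATES_PERCENT = {
    "significant_issue": 45,
    "some_issue": 81,
    "significant_sourcing_issue": 31,
}


def approx_count(percent, total):
    """Return percent% of total, rounded to the nearest integer.

    Uses integer arithmetic to avoid floating-point rounding surprises.
    """
    return (percent * total + 50) // 100


approx_counts = {
    name: approx_count(percent, TOTAL_RESPONSES)
    for name, percent in RATES_PERCENT.items()
}

for name, count in approx_counts.items():
    print(f"{name}: roughly {count} of {TOTAL_RESPONSES}")
```

Under these assumptions, roughly 1,219 responses had a significant issue, about 2,194 had some issue, and about 840 had a significant sourcing issue.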

Sources

- [S1] EBU/BBC - News Integrity in AI Assistants (cross-market study; 2025).

- [S2] EBU/BBC - News Integrity in AI Assistants Toolkit (2025).

- [S3] Search Engine Journal - Coverage of EBU/BBC study (Matt G. Southern; Oct 2025).

- [S4] Reuters - Reporting on EBU perspective and public-trust implications (Oct 2025).
