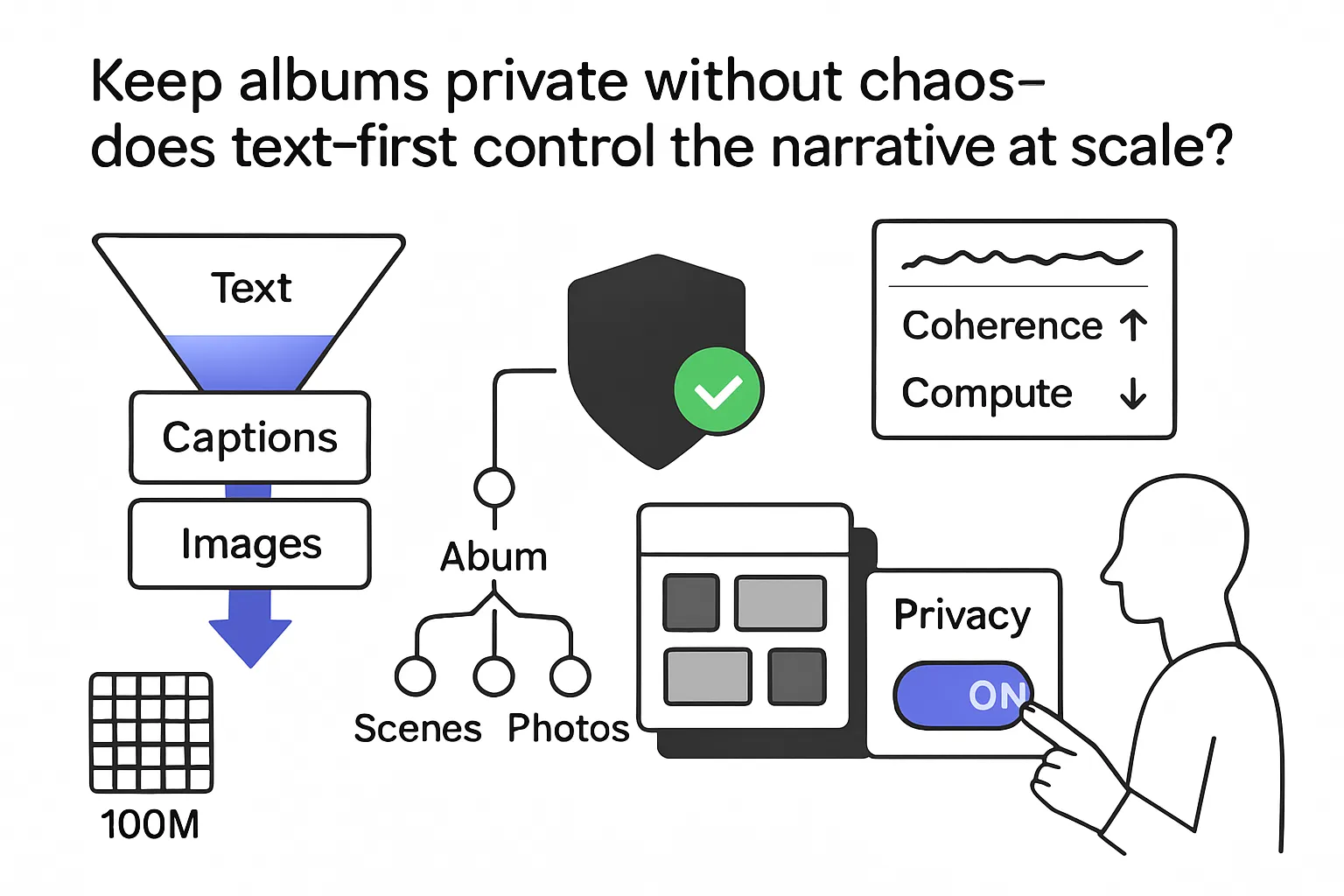

Google Research outlines a hierarchical text-to-image pipeline that trains language models under differential privacy to generate coherent, multi-image photo albums: it first creates an album summary and per-photo captions, then renders images with a text-to-image model. The study asks whether album-level coherence can be preserved under formal privacy guarantees while enabling downstream, non-private analysis on synthetic substitutes. On albums derived from YFCC100M, it evaluates three things: semantic similarity of the structured text (via the MAUVE score), topic overlap, and visual coherence. Positioned against long-context training, the text-first hierarchy aims to be both privacy-preserving and compute-efficient. Most DP synthetic data to date has emphasized simple outputs such as short text or single images, so album-level structure is a meaningful step forward for differential privacy, a field that began nearly two decades ago.

Differentially private synthetic photo albums

The method converts each real album to text (one album summary plus detailed per-photo captions), privately fine-tunes two LLMs with DP-SGD (one for summaries, one for captions conditioned on the summary), generates synthetic structured representations hierarchically, and finally renders images with a text-to-image model. Album-level coherence is encouraged by conditioning every caption on the same album summary. Separately, contribution bounding limits each user to at most one training example, which supports the DP guarantee. Evaluation compares semantic similarity between real and synthetic text using MAUVE, topic distributions in summaries, and qualitative visual consistency across rendered albums. Three benefits are reported: lossy text descriptions reduce memorization risk at the image layer, hierarchical generation shortens contexts (which helps both cost and tolerance to DP noise), and text is cheaper to sample and filter before expensive image generation. For benchmarking, albums are formed by grouping photos from the same user within a one-hour window on YFCC100M.

Executive snapshot

The study tests whether DP-trained LLMs can produce coherent synthetic albums that preserve dataset-level semantics after rendering.

- Scale: evaluated on a public dataset with approximately 100M images (YFCC100M)

- Hierarchy: two DP-fine-tuned LLMs (summary model and caption model) condition all captions on a shared album summary

- Album construction: photos grouped by the same user within a one-hour window to form the “real” albums

- Cost rationale: self-attention scales roughly quadratically with context length (O(L^2)); two shorter-context stages can be cheaper than one long-context model
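The cost rationale in the last bullet can be made concrete with a little arithmetic. The sketch below is illustrative only: the token counts are hypothetical, the cost is counted as L^2 up to a constant, and it assumes each caption is generated with only the summary as context, a detail the blog post does not spell out.

```python
def attention_cost(context_len: int) -> int:
    """Relative self-attention cost of one sequence, up to a constant: L^2."""
    return context_len ** 2


def single_stage_cost(summary_len: int, caption_len: int, photos: int) -> int:
    # One long sequence holding the summary followed by every caption.
    return attention_cost(summary_len + photos * caption_len)


def two_stage_cost(summary_len: int, caption_len: int, photos: int) -> int:
    # Stage 1: the summary alone.
    # Stage 2: one short sequence per photo (summary + that photo's caption).
    per_caption = attention_cost(summary_len + caption_len)
    return attention_cost(summary_len) + photos * per_caption


# Hypothetical sizes: 200-token summary, 100-token captions, 10 photos.
long_context = single_stage_cost(200, 100, 10)  # (200 + 1000)^2
hierarchical = two_stage_cost(200, 100, 10)     # 200^2 + 10 * 300^2
print(long_context, hierarchical)
```

Under these assumed sizes the single long context costs 1,440,000 units and the two-stage design 940,000. The gap widens as the number of photos per album grows, because the long-context cost is quadratic in the photo count while the two-stage cost is linear in it.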

Implication for marketers: DP-synthetic albums can offer a safer proxy for multi-image creative testing and modeling workflows when direct user imagery is off-limits.

Method and source notes

What was measured: semantic similarity between real and synthetic structured representations (MAUVE on album summaries and captions), overlap of common topics in summaries, and qualitative visual coherence across rendered album images.
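The blog does not say how topics are extracted or how overlap is scored. As one plausible reading, the sketch below assumes each summary has already been assigned a single topic label and scores overlap as the Jaccard similarity of the top-k most frequent labels. The function names and the sample labels are hypothetical.

```python
from collections import Counter
from typing import Iterable, Set


def top_topics(topic_labels: Iterable[str], k: int) -> Set[str]:
    """Return the k most frequent topic labels."""
    return {topic for topic, _ in Counter(topic_labels).most_common(k)}


def topic_overlap(real_labels, synthetic_labels, k: int = 3) -> float:
    """Jaccard similarity between the top-k topics of the two sets of summaries."""
    real = top_topics(real_labels, k)
    synthetic = top_topics(synthetic_labels, k)
    return len(real & synthetic) / len(real | synthetic)


# Made-up labels for illustration; these are not results from the study.
real = ["travel"] * 5 + ["wedding"] * 3 + ["food"] * 2 + ["pets"]
synthetic = ["travel"] * 4 + ["food"] * 3 + ["sports"] * 2 + ["wedding"]
score = topic_overlap(real, synthetic, k=3)
```

A score of 1.0 would mean the most frequent topics are identical in both sets. Rare topics never enter the top-k, so this kind of measure says little about the long tail.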

Generative pipeline: DP fine-tuning via DP-SGD; contribution bounding (each user contributes at most one example per training set); hierarchical text generation followed by text-to-image rendering. Privacy parameters (epsilon, delta), model sizes, the image backbone, and sample counts are not disclosed in the blog post.
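For readers unfamiliar with DP-SGD, here is a minimal pure-Python sketch of one update step in the style of Abadi et al. (2016): clip each example's gradient, sum, add Gaussian noise scaled to the clipping norm, then average. It is not the blog's training code. The parameter names (`clip_norm`, `noise_multiplier`) and the toy gradients are illustrative, and a real run would also need a privacy accountant to turn the noise level into an (epsilon, delta) guarantee.

```python
import math
import random
from typing import List


def clip_gradient(grad: List[float], clip_norm: float) -> List[float]:
    """Scale a per-example gradient so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_step(params, per_example_grads, clip_norm, noise_multiplier, lr, rng):
    """One DP-SGD update: clip per example, sum, add Gaussian noise, average."""
    clipped = [clip_gradient(g, clip_norm) for g in per_example_grads]
    summed = [sum(column) for column in zip(*clipped)]
    sigma = noise_multiplier * clip_norm  # noise std is tied to the clipping norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    batch_size = len(per_example_grads)
    return [p - lr * n / batch_size for p, n in zip(params, noisy)]


# Toy example: with one example per user, clipping bounds each user's influence.
rng = random.Random(0)
grads = [[3.0, 4.0], [0.0, 0.5]]  # the first gradient has norm 5 and gets clipped
updated = dp_sgd_step([0.0, 0.0], grads, clip_norm=1.0,
                      noise_multiplier=1.1, lr=0.1, rng=rng)
```

Clipping only bounds a user's influence if that user appears in a single example, which is why contribution bounding matters here.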

Adjacent work: text intermediaries have also been used for DP single-image synthesis, including Private Evolution and Wang et al.

Key caveats: blog-level reporting without a peer-reviewed paper, no disclosed epsilon/delta, figures not numerically tabulated, and no downstream image-task benchmarks.

Findings

The approach introduces a hierarchical, text-mediated strategy for DP album synthesis and presents evidence of semantic and thematic preservation at the album level, alongside practical benefits for training cost and privacy hygiene.

Method and pipeline design under DP

- Structured intermediary: replace each album’s images with a text summary and detailed captions; train two separate LLMs under DP-SGD. Contribution bounding limits any single user’s influence.

- Hierarchical conditioning: for each synthetic album, first sample a summary, then generate all captions with that summary as shared context to promote intra-album thematic consistency.

- Efficiency emphasis: text generation is cheaper to filter and sample, and two shorter-context models can be more robust to DP noise than one long-context model.
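The control flow described in these bullets can be sketched as follows. The two "models" are hypothetical stand-in functions, not the DP-fine-tuned LLMs from the study, and the `keep` filter is an assumed hook showing where cheap text-level filtering could sit before any image is rendered.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SyntheticAlbum:
    summary: str
    captions: List[str]


def generate_album(
    sample_summary: Callable[[], str],
    sample_caption: Callable[[str, int], str],
    num_photos: int,
    keep: Callable[[SyntheticAlbum], bool] = lambda album: True,
    max_tries: int = 5,
) -> SyntheticAlbum:
    """Sample a summary first, then every caption conditioned on that summary."""
    for _ in range(max_tries):
        summary = sample_summary()
        captions = [sample_caption(summary, i) for i in range(num_photos)]
        album = SyntheticAlbum(summary, captions)
        if keep(album):  # text-level filter, applied before any image rendering
            return album
    raise RuntimeError("no sampled album passed the text filter")


# Stand-ins for the summary and caption models (hypothetical).
def fake_summary_model() -> str:
    return "A weekend hiking trip in the mountains"


def fake_caption_model(summary: str, index: int) -> str:
    return f"Photo {index + 1}, in the context of: {summary}"


album = generate_album(fake_summary_model, fake_caption_model, num_photos=3)
prompts = album.captions  # these strings would be passed to a text-to-image model
```

Because every caption sees the same summary, thematic consistency comes from the shared context rather than from one long sequence containing the whole album.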

Evaluation outcomes on YFCC100M

- Semantic similarity: MAUVE on text compares real vs synthetic summaries and captions; the blog presents curves rather than numeric tables.

- Topic overlap: frequent topics in synthetic summaries closely match those in real summaries, indicating preservation of dataset-level themes.

- Visual coherence: qualitative inspection shows album-level consistency after rendering (e.g., trips, couples, events).

- Construction rule: albums grouped by same-user photos within one hour; each user contributes at most one example per training set to support DP assumptions.
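The construction rule can be sketched in a few lines. The blog does not define the one-hour window precisely, so this version assumes an album is closed once a photo falls more than one hour after the album's first photo. The record layout `(user_id, taken_at, photo_id)` and the random choice of one album per user are also assumptions made for illustration.

```python
import random
from collections import defaultdict
from datetime import datetime, timedelta


def group_into_albums(photos, window=timedelta(hours=1)):
    """Group (user_id, taken_at, photo_id) records into per-user albums."""
    by_user = defaultdict(list)
    for user_id, taken_at, photo_id in photos:
        by_user[user_id].append((taken_at, photo_id))

    albums = {}
    for user_id, items in by_user.items():
        items.sort()  # chronological order within each user
        user_albums, current, start = [], [], None
        for taken_at, photo_id in items:
            if start is None or taken_at - start > window:
                if current:
                    user_albums.append(current)
                current, start = [], taken_at  # open a new album
            current.append(photo_id)
        user_albums.append(current)
        albums[user_id] = user_albums
    return albums


def bound_contributions(albums_by_user, rng):
    """Keep one album per user so each user yields at most one training example."""
    return {user: rng.choice(albums) for user, albums in albums_by_user.items()}


photos = [
    ("u1", datetime(2020, 1, 1, 10, 30), "b"),
    ("u2", datetime(2020, 1, 1, 9, 0), "d"),
    ("u1", datetime(2020, 1, 1, 10, 0), "a"),
    ("u1", datetime(2020, 1, 1, 12, 15), "c"),
]
albums = group_into_albums(photos)
training_examples = bound_contributions(albums, random.Random(0))
```

Here user `u1` ends up with two albums (`a`, `b` and then `c`), and bounding keeps only one of them for training.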

Compute and privacy considerations

- DP training: DP-SGD bounds any single user’s influence. A larger privacy budget (epsilon) means weaker protection, yet the blog reports neither epsilon/delta nor how the privacy cost composes across the two models.

- Context length vs cost: self-attention cost grows with sequence length, motivating a two-stage design that shortens contexts.

- Adjacent corroboration: text intermediaries for DP image synthesis have been explored in Private Evolution and Wang et al.
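For reference, the standard definitions behind these considerations are given below. The symbols $M_1$, $M_2$, $\varepsilon_i$, and $\delta_i$ are placeholders, since the blog discloses no values, and basic composition is only the simplest bound; the authors may have used a tighter accountant. A mechanism $M$ is $(\varepsilon, \delta)$-differentially private if, for all neighboring datasets $D, D'$ and all output sets $S$:

$$
\Pr[M(D) \in S] \le e^{\varepsilon} \, \Pr[M(D') \in S] + \delta
$$

If the summary model is trained by an $(\varepsilon_1, \delta_1)$-DP mechanism $M_1$ and the caption model by an $(\varepsilon_2, \delta_2)$-DP mechanism $M_2$ on the same users' data, basic composition gives, for releasing both:

$$
(M_1, M_2) \text{ is } (\varepsilon_1 + \varepsilon_2,\ \delta_1 + \delta_2)\text{-DP}
$$

Sampling synthetic text from the trained models and rendering it to images is post-processing, so it adds no privacy cost, provided the text-to-image model itself was not trained on the private albums.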

Interpretation and implications

Likely

- For organizations constrained by user-image privacy, DP-synthetic albums are a viable substitute dataset for non-private analytics and prototyping where album-level themes matter. Text-layer preservation of topics and MAUVE-measured similarity supports this use.

- Hierarchical conditioning provides album coherence under DP while containing compute, since shorter contexts reduce attention cost and sensitivity to DP noise.

Tentative

- Two-stage modeling may offer better privacy-utility balance than single long-context training for multi-image coherence, though the evidence provided is qualitative rather than ablation-based.

- Topic alignment suggests coverage of frequent patterns, but rare or sensitive concepts may be underrepresented due to DP noise and the text bottleneck.

Speculative

- DP-synthetic albums could support theme-level A/B testing without touching user data; any impact on campaign performance would need separate validation on non-private assets.

- With clearer privacy parameters and downstream benchmarks, similar pipelines could extend to synthetic video sessions via shot-level captions and scene summaries.

Contradictions and gaps

- Missing privacy specifics: epsilon/delta, sampling rates, clipping norms, and composition across the two LLMs are not disclosed, preventing comparison to prior DP image or text synthesis.

- Metrics centered on text: similarity is assessed at the text layer, not directly on rendered images or downstream image tasks.

- No numeric tables: MAUVE curves and topic lists are shown without tabulated scores.

- Dataset construction: one-hour grouping may under- or over-segment sessions and may not generalize beyond Flickr-style content.

- External validation: no third-party replication, memorization audits at the image layer, or comparisons to non-hierarchical DP baselines are provided.

Sources

- Google Research Blog (Oct 20, 2025) — “A Picture’s Worth a Thousand (Private) Words: Hierarchical Generation of Coherent Synthetic Photo Albums”

- YFCC100M — Thomee et al. (2016)

- DP-SGD — Abadi et al. (2016)

- Wang et al. (2025) — Text-based intermediaries for DP single-image generation

- Private Evolution — Sim et al. (2024)

- Efficient Transformers survey — Tay et al. (2022)

- MAUVE score — Pillutla et al. (2021)

- Calibrating Noise to Sensitivity — Dwork et al. (2006)
