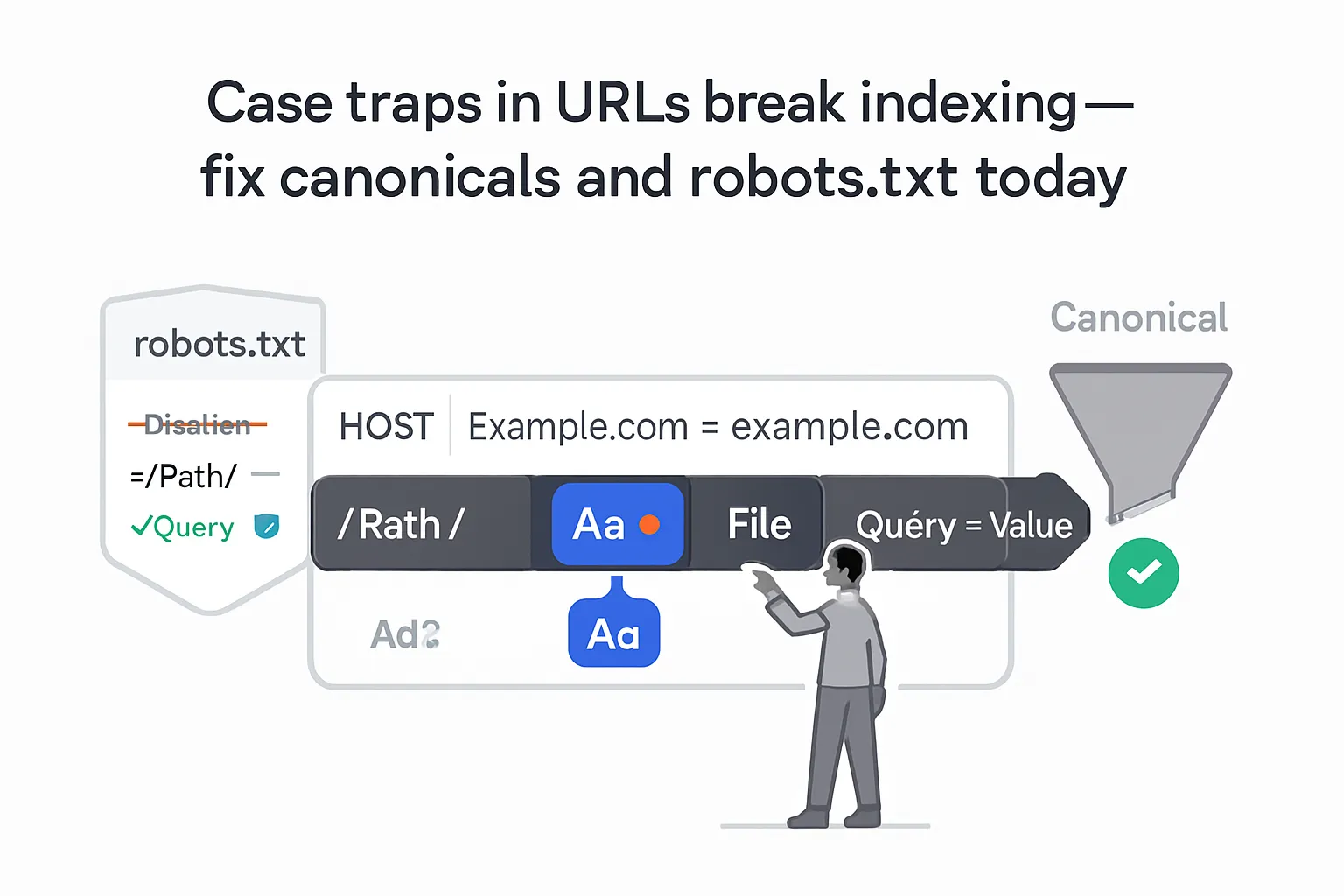

Google Search Advocate John Mueller clarified how URL case affects canonicalization and robots.txt handling. In a public Reddit reply, he said that paths, filenames, and query parameters are case-sensitive, while hostnames are not. He advised keeping URL casing consistent to avoid unexpected canonicalization and crawling outcomes.

Google’s advice on canonicals: they’re case sensitive

Mueller said case sensitivity matters for canonicalization and recommended consistent URL casing across a site. Paths, filenames, and query parameters are case-sensitive, while the hostname is not. He responded to a scenario where live URLs and rel=canonical tags used different casing.

"Hope should not be part of an SEO strategy."

For managing duplicates, Google documents how to consolidate signals and use rel=canonical effectively. See Google Search Central’s guidance on consolidating duplicate URLs.

Robots.txt handling

Mueller also noted that URL case affects robots.txt processing. Googlebot matches rules to paths as written, so uppercase and lowercase can be treated differently. Keep rule paths aligned with the exact URLs you intend to allow or block. Reference: Create a robots.txt file.

Key details

- Speaker: John Mueller, Google Search Advocate.

- Platform: Reddit - public comment in r/SEO.

- Statement: Path, filename, and query parameters are case-sensitive; the hostname is not.

- Canonicalization: Case sensitivity influences Google’s canonical selection.

- Robots.txt: Case sensitivity impacts path matching in robots.txt.

- Related docs: Google’s guidance to consolidate duplicate URLs and use rel=canonical.

- Robots.txt docs: Google’s robots.txt implementation guidance on rule matching behavior.

Background

Google evaluates multiple signals when selecting a canonical page. The rel=canonical link element helps indicate a preferred URL among duplicates, but consistency in URL casing strengthens signals.

Robots.txt controls crawler access at the path level. Since rule matching follows the file’s syntax, inconsistent casing between rules and URLs can lead to unintended crawling or blocking.

.svg)