When production gets wobbly, I do not want heroics. I want a calm hand and clear change rollback procedures that put revenue, data, and customers first. I think in terms of triggers, a small decision tree, and named owners. Simple, fast, repeatable. This guide lays out the patterns I use with modern B2B teams that care about uptime, cost, and clean accountability. The goal is not just to reverse a bad release; it is to reduce the odds I ever need to.

Change rollback procedures

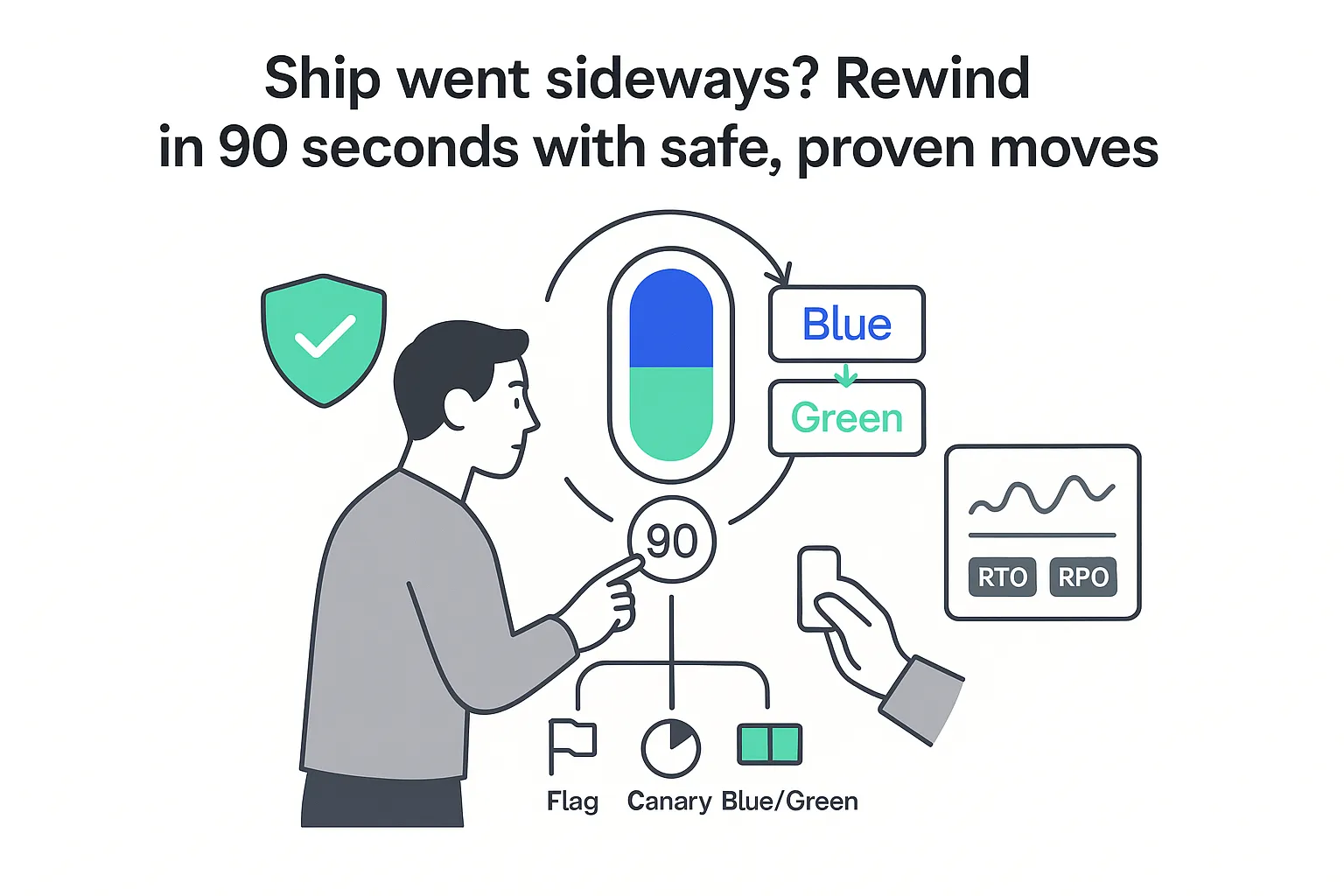

Here is a ninety-second executive playbook I keep at hand. I tune it to minimize downtime and revenue risk across code, infrastructure, and data.

- Triggers. Any SLO breach (latency, error rate, saturation), failed smoke tests, or missing heartbeats in critical services. If the blast radius touches billing, authentication, scheduling, or customer integrations, I treat it as urgent.

- Decision tree. First, I decide whether a roll-forward is faster and safer than a rollback. If yes, I ship the smallest verified fix. If no, I choose the smallest safe reversal in this order: feature flag off, canary stop, blue/green traffic flip back, prior image redeploy. I avoid database rollbacks except as a last resort. This aligns with modern Continuous Delivery.

- Who does what. I appoint an Incident Commander to run the call and time-box decisions. The dev lead confirms code state. The SRE or DevOps owner executes the traffic plan. QA validates the target state. A comms lead updates executives, customer success, and status-page users.

- When. I make the call within five minutes of a confirmed trigger. I start the reversal inside ten minutes. I aim for recovery inside the stated RTO for the affected service.
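The decision tree and time boxes above are small enough to write down as code. Below is a minimal Python sketch. It assumes the Incident Commander can answer each yes/no question on the call. `Situation`, `choose_action`, and `escalate` are hypothetical names and are not part of any tool.

```python
from dataclasses import dataclass
from enum import Enum

DECIDE_BY_MIN = 5   # make the call within five minutes of a confirmed trigger
START_BY_MIN = 10   # start the reversal inside ten minutes


class Action(Enum):
    ROLL_FORWARD = "ship the smallest verified fix"
    FLAG_OFF = "turn the feature flag off"
    STOP_CANARY = "stop the canary"
    FLIP_TRAFFIC = "flip blue/green traffic back"
    REDEPLOY_PRIOR_IMAGE = "redeploy the prior image"
    RESTORE_DATABASE = "restore the database (last resort, needs sign-off)"


@dataclass
class Situation:
    # Hypothetical inputs the Incident Commander confirms on the call.
    fix_verified: bool            # fix is written, reviewed, and passed tests
    fix_eta_min: float            # minutes to ship that fix
    rollback_eta_min: float       # minutes to finish the cheapest reversal
    flag_available: bool
    canary_running: bool
    idle_stack_warm: bool
    prior_image_available: bool


def choose_action(s: Situation) -> Action:
    # Roll forward only when the fix is both verified (safer) and quicker.
    if s.fix_verified and s.fix_eta_min < s.rollback_eta_min:
        return Action.ROLL_FORWARD
    # Otherwise take the smallest safe reversal, in the playbook's order.
    if s.flag_available:
        return Action.FLAG_OFF
    if s.canary_running:
        return Action.STOP_CANARY
    if s.idle_stack_warm:
        return Action.FLIP_TRAFFIC
    if s.prior_image_available:
        return Action.REDEPLOY_PRIOR_IMAGE
    return Action.RESTORE_DATABASE


def escalate(minutes_since_trigger: float, decided: bool, started: bool) -> bool:
    """True when the call has run past the playbook's time boxes."""
    return (not decided and minutes_since_trigger > DECIDE_BY_MIN) or (
        not started and minutes_since_trigger > START_BY_MIN
    )
```

The inputs come from people on the call, not from telemetry. Writing the logic down is still useful, because everyone agrees on the order before an incident starts.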

Quick-start essentials I turn into a runbook:

- Define RTO and RPO with business owners, plus SLOs and SLIs for each service tier.

- Add pre-deployment validation gates that fail fast on config drift, secret mismatches, or missing migrations.

- Set a snapshot and backup plan for infra and data. Include point-in-time recovery and environment snapshots for app images.

- Prepare a traffic-shifting plan using your load balancer or service mesh, with documented switch-back steps for blue/green and canary.

- Create verification gates: health checks, synthetic tests, key user journeys, and data checks.

- Write a comms template for status-page copy, exec summaries, and customer-success guidance.

- Run a post-incident review tied to DORA metrics (MTTR, change fail rate), with action items on test gaps, change size, and observability.
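The pre-deployment validation gate can start as a few plain set and dictionary comparisons. This sketch assumes the pipeline can load four things: the declared config, the live config, the secret names, and the applied migration IDs. `predeploy_gate` and its parameters are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class GateResult:
    passed: bool
    failures: list[str] = field(default_factory=list)


def predeploy_gate(
    declared_config: dict[str, str],
    live_config: dict[str, str],
    required_secrets: set[str],
    present_secrets: set[str],
    required_migrations: set[str],
    applied_migrations: set[str],
) -> GateResult:
    """Fail fast on config drift, missing secrets, or unapplied migrations."""
    failures: list[str] = []
    # Config drift: a declared key is missing in prod or holds a different value.
    for key, value in sorted(declared_config.items()):
        if live_config.get(key) != value:
            failures.append(f"config drift: {key}")
    # Secret mismatch: the release needs a secret the environment does not have.
    for name in sorted(required_secrets - present_secrets):
        failures.append(f"missing secret: {name}")
    # Missing migration: the code expects schema changes not yet applied.
    for migration in sorted(required_migrations - applied_migrations):
        failures.append(f"missing migration: {migration}")
    return GateResult(passed=not failures, failures=failures)
```

The gate returns every failure rather than stopping at the first one. That way the person fixing the environment sees the whole list in a single run.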

I treat change rollback procedures as a product. I version the docs, rehearse them, and measure outcomes. Teams that prepare, verify quickly, and communicate well recover faster and lose less customer trust.

Rollback strategy

I choose among several patterns based on blast radius, risk tolerance, and RTO targets.

- Blue/green. Two production stacks, one active. Deploy to the idle stack, verify, then switch traffic. Rollback is a traffic flip that takes seconds. I use this when RTO is tight and the app can run two versions in parallel (remember the cost of duplicate capacity).

- Canary. I send a small slice of traffic to the new version and watch golden signals. If anything tips, I stop the canary or step back. I use this when I need real user signals but want to limit impact.

- Feature flags. I ship the code dark, toggle it on for a small cohort, then widen the cohort. If anything goes wrong, I toggle it off. I use flags for risky features and UI changes, pairing them with blue/green or canary.

- Immutable images. I keep releases as versioned images. Rollback is simply redeploying the previous known-good image. I use this for predictable redeploys and clean audits.

- Environment freeze with roll-forward. For major data changes, I freeze deploys, apply backward-compatible changes first (expand and contract), then ship code. If a release fails, I roll forward quickly with a one-line fix rather than roll back. I prefer this when DB risk is high.
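For the feature-flag pattern, the property that matters during a rollback is stable cohorts. At a given percentage, the same account must always get the same answer. Flag vendors such as LaunchDarkly do their own bucketing. The sketch below is a generic hash-based version with hypothetical names, not any vendor's algorithm.

```python
import hashlib


def bucket(flag_key: str, account_id: str) -> int:
    """Map an account to a stable bucket in [0, 100) for one flag."""
    digest = hashlib.sha256(f"{flag_key}:{account_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def flag_enabled(flag_key: str, account_id: str, rollout_percent: int,
                 kill_switch: bool = False) -> bool:
    # The kill switch overrides everything: this is the "toggle off" path.
    if kill_switch:
        return False
    # An account's bucket never changes, so raising rollout_percent only adds
    # accounts; nobody bounces between old and new behavior as the cohort grows.
    return bucket(flag_key, account_id) < rollout_percent
```

Raising `rollout_percent` from 10 to 50 keeps the first 10 percent enabled and adds more accounts on top. Passing `kill_switch=True` turns the feature off for everyone immediately.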

I map these to common recovery speeds:

- Ten-minute recovery. Redeploy the previous app version while skipping database steps. Safer when migrations were small or only additive. Octopus users often pair this with Calculate Deployment Mode to detect rollback mode.

- Three-minute recovery. Decouple database and code. Apply backward-compatible data changes earlier. Then code deploys become low risk, and rollback is quick. Share configuration via library variable sets to keep releases consistent.

- Immediate rollback. Blue/green or a staging path in prod lets me flip traffic back in seconds. Use Runbooks and call them during a deploy with Run Octopus Deploy Runbook.
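The ten-minute path depends on knowing whether a deployment is moving forward or backward. Calculate Deployment Mode exposes that information to Octopus steps. The sketch below only illustrates the same idea in Python, assuming plain dotted numeric version strings. `deployment_mode` and `should_run_migrations` are hypothetical helpers, not the step template's code.

```python
from __future__ import annotations

from enum import Enum


class Mode(Enum):
    DEPLOY = "Deploy"
    ROLLBACK = "Rollback"
    REDEPLOY = "Redeploy"


def parse_version(version: str) -> tuple[int, ...]:
    # Assumes dotted numeric versions like "2024.3.1" with no pre-release tags.
    return tuple(int(part) for part in version.split("."))


def deployment_mode(incoming: str, deployed: str | None) -> Mode:
    """Classify a deployment by comparing it with what the environment runs now."""
    if deployed is None:
        return Mode.DEPLOY  # first release to this environment
    new, current = parse_version(incoming), parse_version(deployed)
    if new > current:
        return Mode.DEPLOY
    if new < current:
        return Mode.ROLLBACK
    return Mode.REDEPLOY


def should_run_migrations(mode: Mode) -> bool:
    # Only forward deployments touch the schema; rollbacks and redeploys skip DB steps.
    return mode is Mode.DEPLOY
```

The comparison is numeric, so "1.10.0" correctly sorts after "1.9.0". This is where naive string comparison would go wrong.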

Decision guidance:

- Small blast radius, medium risk, moderate RTO: canary or feature flag.

- Large blast radius, low tolerance for risk, tight RTO: blue/green.

- Data-heavy change: environment freeze, expand then contract, and favor roll-forward.

B2B SaaS examples:

- Multi-tenant. I prefer canary by tenant cohort, plus feature flags per account tier. Blue/green at the edge can also work for stateless services.

- Single-tenant. Blue/green per tenant gives clean isolation. Recovery is a traffic switch for that tenant only.
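Canary by tenant cohort needs two things: a wave plan, and a clear answer to "who already has this release?" when a wave fails. This is a minimal sketch. It assumes each tenant maps to a tier label and that lower-risk tiers (for example, internal or free accounts) go first. The tier names and functions are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


def plan_waves(tenant_tiers: dict[str, str], tier_order: list[str]) -> list[list[str]]:
    """Group tenants into canary waves, one wave per tier, in tier_order."""
    by_tier: dict[str, list[str]] = defaultdict(list)
    for tenant, tier in tenant_tiers.items():
        by_tier[tier].append(tenant)
    # Skip tiers with no tenants so every wave has someone in it.
    return [sorted(by_tier[tier]) for tier in tier_order if by_tier.get(tier)]


def tenants_to_revert(waves: list[list[str]], failed_wave: int) -> list[str]:
    """Tenants that already received the release when wave `failed_wave` went bad."""
    return [tenant for wave in waves[: failed_wave + 1] for tenant in wave]
```

If the second wave trips a threshold, only the tenants returned by `tenants_to_revert(waves, 1)` need a rollback or a flag change. Later waves never saw the release.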

Rollback risks

Frequent rollbacks are a symptom of upstream problems: oversized changes, weak tests, or thin observability. When I fix the pipeline, rollbacks get rarer and faster.

Key risks:

- State drift. Config in prod does not match code. A rollback may not restore the exact prior state.

- Config mismatches. Secrets or environment variables differ across nodes. Partial reversals show odd behavior.

- Data migrations. Backward-incompatible changes paint me into a corner. Dropping a column takes seconds; recovering the user data it held may not be possible.

- Toggle debt. Many flags with unclear owners or expiry dates create confusing states.

- Partial rollbacks. Services move back at different speeds. Contracts break.

Mitigation:

- Ship smaller changes. Small batches ship faster and roll back faster.

- Use progressive delivery. Canary, feature flags, and staged rollouts reduce blast radius.

- Strengthen health checks. Golden-signal dashboards and alerts must be live when the deploy starts, not added after it.

- Budget for errors. I use error budgets to control release pace and avoid risky deploys late in a cycle.
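Error budgets turn "avoid risky deploys late in a cycle" into a number. This sketch assumes a request-based SLO, for example 99.9 percent of requests succeeding over the window. It also assumes a hypothetical policy that pauses risky releases once less than a quarter of the budget remains. The 25 percent threshold is illustrative, not a standard.

```python
def error_budget_remaining(slo_target: float, total_requests: int, failed_requests: int) -> float:
    """Share of the window's error budget still unspent (1.0 = untouched, below 0 = overspent)."""
    if not 0.0 < slo_target < 1.0:
        raise ValueError("slo_target must be strictly between 0 and 1")
    if total_requests == 0:
        return 1.0  # no traffic yet, so nothing has been spent
    allowed_failures = (1.0 - slo_target) * total_requests
    return 1.0 - failed_requests / allowed_failures


def risky_release_allowed(budget_remaining: float, freeze_below: float = 0.25) -> bool:
    # Hypothetical policy: hold risky releases once under 25% of the budget is left.
    return budget_remaining >= freeze_below
```

With a 99.9 percent SLO and one million requests, the budget is about 1,000 failed requests. After 500 failures, roughly half of that budget is left.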

Backout plan

A backout plan and a rollback are close, but not the same. A backout plan stops a change in progress and reverts a configuration or infra action before it completes. A rollback reverses a change that already reached users. See Backout vs Rollback Plan for a concise comparison.

When I choose a backout plan:

- Infrastructure or config changes. The Terraform plan shows unexpected changes, or Kubernetes manifests fail validation. I back out immediately.

- External dependency failure. The payment gateway is timing out. I back out the integration toggle or routing change.

- Security gates fail. Secrets, certs, or policies show an issue. I stop, back out, and reassess.

When I choose a rollback:

- Application code defects in production that I cannot patch in minutes.

- Performance regressions visible at the edge.

- User-facing bugs that block logins, orders, or key workflows.

Simple backout template I paste into change tickets:

- Trigger criteria. Exact metrics or checks that fire the backout.

- Steps. The commands or console actions, including traffic switches.

- Owner. The primary and a backup by name.

- Verification. Health checks and a quick acceptance script.

- Comms. One short note for execs and one for customer-facing teams with clear timeframes.
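The template is easiest to enforce when a change ticket cannot move forward with empty sections. This is a minimal sketch, assuming the ticket fields map one-to-one to the template. `BackoutPlan` and `missing_sections` are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass
class BackoutPlan:
    # Hypothetical field names mirroring the template's sections.
    trigger_criteria: str = ""
    steps: str = ""
    primary_owner: str = ""
    backup_owner: str = ""
    verification: str = ""
    exec_comms: str = ""
    customer_comms: str = ""


def missing_sections(plan: BackoutPlan) -> list[str]:
    """Names of template sections that are still empty or only whitespace."""
    return [f.name for f in fields(plan) if not getattr(plan, f.name).strip()]


def ready_for_approval(plan: BackoutPlan) -> bool:
    return not missing_sections(plan)
```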

Deployment rollback

I treat deployment rollback as a first-class path in the CI/CD pipeline, not an afterthought.

Technical steps that make rollbacks boring:

- Pre-flight checks. Confirm secrets, environment variables, and DB schema compatibility. Validate contracts between services with a quick smoke test.

- Artifact immutability. Store images with content hashes. Pin versions. Rollback is a redeploy of a known artifact.

- Traffic shift. Use load-balancer rules, an ingress controller, or a service mesh to drain, shift, and pin traffic. Keep the previous pool warm for a set window.

- Health checks and auto-halts. Fail fast on startup errors, latency spikes, or elevated error rates. Integrate this with the deploy controller.

- Rollback runbooks. Add a rollback job to the pipeline that redeploys the last good release and flips traffic back.
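The core decision in a rollback job is which artifact to redeploy. This sketch assumes the pipeline keeps a release history ordered oldest to newest. Each entry records the image's content digest and a flag set by post-deploy verification. The `Release` type, the helpers, and any registry name you pass in are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Release:
    version: str
    image_digest: str  # content hash such as "sha256:<hex>", never a mutable tag
    verified: bool     # True once post-deploy health checks passed


def last_good_release(history: list[Release], bad_version: str) -> Release:
    """Most recent verified release other than the one being rolled back.

    `history` is ordered oldest to newest.
    """
    for release in reversed(history):
        if release.version != bad_version and release.verified:
            return release
    raise LookupError("no verified release to roll back to")


def image_ref(repo: str, release: Release) -> str:
    # Pin by digest so the redeploy pulls exactly the bytes that were verified.
    return f"{repo}@{release.image_digest}"
```

The digest-pinned reference is what the rollback stage hands to the deploy step. A tag like `latest` could have moved since that release was verified.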

Model examples:

- Octopus Deploy. Add a rollback runbook that redeploys the previous release. Use Calculate Deployment Mode and Runbooks to streamline traffic flips.

- Azure DevOps. Create a Release pipeline with a manual-intervention gate and a rollback stage that deploys the prior artifact and runs a traffic-switch script.

- GitHub Actions. Keep a reusable workflow that, on rollback, pulls the previous image, updates the Kubernetes deployment, and calls the load-balancer runbook.

Blue/green swap example: Deploy new code to the idle color. Run smoke tests and a set of user journeys. If all green, switch traffic. If not, stay on the old color and mark the new build as failed.
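The swap logic is a guarded pointer change. This in-memory model is only a sketch. In production, `live` would be a load-balancer rule, an ingress rule, or a service-mesh route. `BlueGreen` is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass
class BlueGreen:
    live: str = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def promote(self, smoke_ok: bool, journeys_ok: bool) -> bool:
        """Switch traffic to the idle color only if every check passed."""
        if smoke_ok and journeys_ok:
            self.live = self.idle  # the cut-over is one pointer change
            return True
        return False  # stay on the current color; caller marks the build failed

    def roll_back(self) -> None:
        # Same flip in reverse; only safe while the previous color is still warm.
        self.live = self.idle
```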

Canary rollback thresholds: If p95 latency rises by 20 percent for five minutes or error rate crosses 2 percent, I stop the canary. If health does not recover in ten minutes, I roll back to the prior version for that service.
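Those thresholds are specific enough to code directly. This sketch makes three assumptions: one sample per minute, p95 latency in milliseconds, and error rate as a fraction. Each sample is compared against a baseline p95 from the stable version. The names are hypothetical. Tools like Argo Rollouts or Spinnaker express similar rules in their own analysis configuration.

```python
from __future__ import annotations

from dataclasses import dataclass

LATENCY_RISE = 1.20      # p95 more than 20% above baseline...
LATENCY_MINUTES = 5      # ...for five consecutive one-minute samples
MAX_ERROR_RATE = 0.02    # or error rate above 2 percent
RECOVERY_MINUTES = 10    # how long a stopped canary gets before full rollback


@dataclass
class Sample:
    p95_ms: float
    error_rate: float  # fraction of requests, so 0.02 means 2 percent


def should_stop_canary(baseline_p95_ms: float, samples: list[Sample]) -> bool:
    """Evaluate the newest per-minute samples against the stop thresholds."""
    if not samples:
        return False
    if samples[-1].error_rate > MAX_ERROR_RATE:
        return True
    window = samples[-LATENCY_MINUTES:]
    return len(window) == LATENCY_MINUTES and all(
        s.p95_ms > baseline_p95_ms * LATENCY_RISE for s in window
    )


def should_roll_back(minutes_since_stop: float, healthy_now: bool) -> bool:
    # After stopping the canary, give the service ten minutes to recover.
    return not healthy_now and minutes_since_stop >= RECOVERY_MINUTES
```

Requiring five consecutive slow minutes keeps a single latency blip from stopping the canary. The error-rate rule trips on the latest minute alone, because errors hurt users immediately.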

Database rollback

My stance is simple: I do not roll back the database unless I am dealing with corruption or a disaster. Data loss and long recovery times are too likely.

Safer patterns:

- Forward-only migrations. Every migration moves forward. No destructive steps without expand then contract.

- Expand then contract. Add new structures first. Write to both old and new fields. Migrate data in the background. Flip reads to the new field. Remove the old field later.

- Read replicas. Offload risky reads during updates. Promote only when safe.

- Feature-flagged writes. Gate new writes or new shapes of writes. Toggle off if needed without touching schema.

- Backfills. Run backfills idempotently in small batches with clear progress markers.
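Expand-then-contract and idempotent backfills fit in a few lines. This sketch uses Python's built-in `sqlite3` and a hypothetical `users` table that gains an `email_lower` column. The expand step checks the current schema before altering anything. The backfill's `IS NULL` filter doubles as its progress marker, so a rerun picks up where the last run stopped.

```python
import sqlite3


def expand(conn: sqlite3.Connection) -> None:
    """Expand step: add the new column as nullable so old code keeps working."""
    columns = {row[1] for row in conn.execute("PRAGMA table_info(users)")}
    if "email_lower" not in columns:  # check current state first: safe to re-run
        conn.execute("ALTER TABLE users ADD COLUMN email_lower TEXT")
        conn.commit()


def backfill(conn: sqlite3.Connection, batch_size: int = 500) -> int:
    """Populate email_lower in small batches; returns rows updated this run."""
    updated = 0
    while True:
        # A NULL email_lower marks "not yet done", so a rerun only touches
        # the remaining rows.
        rows = conn.execute(
            "SELECT id, email FROM users "
            "WHERE email_lower IS NULL AND email IS NOT NULL "
            "ORDER BY id LIMIT ?",
            (batch_size,),
        ).fetchall()
        if not rows:
            return updated
        conn.executemany(
            "UPDATE users SET email_lower = ? WHERE id = ?",
            [(email.lower(), row_id) for row_id, email in rows],
        )
        conn.commit()  # commit per batch to keep locks short and progress durable
        updated += len(rows)
```

The later steps stay separate releases: switching reads to `email_lower`, then dropping or ignoring the old field. Each one can be reversed on its own.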

Last-resort restore: If I must restore, I use point-in-time recovery. I expect data loss between the restore point and the failure unless I capture a change log and replay. I communicate this risk clearly to leadership and any affected customers.

Helpful scripts and habits:

- Idempotent migrations with checks for current state.

- Data-fix scripts kept in version control with clear ownership.

- Automated checks that confirm referential integrity and row counts after deploys.
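The automated checks can be plain SQL run right after a deploy. This sketch uses `sqlite3` with hypothetical `customers` and `orders` tables. It also assumes minimum row counts captured just before the deploy. It flags any table that lost rows and any order that points at a missing customer.

```python
from __future__ import annotations

import sqlite3


def integrity_problems(conn: sqlite3.Connection, min_counts: dict[str, int]) -> list[str]:
    """Post-deploy data checks: row counts and orphaned foreign keys."""
    problems: list[str] = []
    # Row counts: a deploy should never lose rows compared with the pre-deploy snapshot.
    # Table names come from our own config, never from user input.
    for table, minimum in min_counts.items():
        actual = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
        if actual < minimum:
            problems.append(f"{table}: expected at least {minimum} rows, found {actual}")
    # Referential integrity: every order must point at an existing customer.
    orphans = conn.execute(
        "SELECT COUNT(*) FROM orders o "
        "LEFT JOIN customers c ON c.id = o.customer_id "
        "WHERE c.id IS NULL"
    ).fetchone()[0]
    if orphans:
        problems.append(f"orders: {orphans} orphaned row(s) with no matching customer")
    return problems
```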

Automated rollback

Automation closes the gap between detection and action. Done well, it takes pressure off the team and reduces the blast radius.

I define automated triggers:

- SLO breaches for latency, error rate, and saturation.

- Failed smoke tests in production.

- Startup failures or unhealthy pod counts after a deploy.

- Anomalies from synthetic checks or canary analysis.

I wire triggers to my pipeline gates:

- Datadog, New Relic, or Prometheus can feed alerts to the deploy controller.

- For canaries, I use Argo Rollouts or Spinnaker to pause or roll back traffic automatically based on thresholds.

- I pair with feature flags, such as LaunchDarkly, to kill a feature instantly while I investigate.

I keep humans in the loop for high-risk moves:

- I require approvals for full production rollbacks except during a declared incident.

- I capture audit logs and change tickets automatically.

- I limit automatic rollback to a short window, usually under thirty minutes after release, to avoid confusing users who already saw the change.
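The human-in-the-loop rules combine into one small policy. This sketch assumes a 30-minute auto-rollback window. An in-memory list stands in for the audit log; in a real pipeline, each line would go to the change ticket. `rollback_decision` is a hypothetical name.

```python
from __future__ import annotations

from datetime import datetime, timedelta

AUTO_WINDOW = timedelta(minutes=30)


def rollback_decision(released_at: datetime, now: datetime, trigger_fired: bool,
                      incident_declared: bool, audit_log: list[str]) -> str:
    """Decide who may roll back, and record the decision for the change ticket."""
    if not trigger_fired:
        decision = "none"
    elif now - released_at < AUTO_WINDOW:
        decision = "automatic"               # inside the window: the pipeline acts alone
    elif incident_declared:
        decision = "manual, no approval"     # declared incident: the IC acts directly
    else:
        decision = "manual, needs approval"  # later rollbacks go through a human gate
    audit_log.append(f"{now.isoformat()} trigger={trigger_fired} decision={decision}")
    return decision
```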

Rollback metrics

I measure how rollback procedures perform and publish the results to leadership monthly, emphasizing trends instead of snapshots. Using DORA definitions keeps the conversation consistent across engineering and the business.

I track and report:

- MTTR from trigger to stable state.

- Change fail rate over the past month.

- Rollback frequency and the percentage of deploys covered by feature flags or canary.

- Time to detect, both manual and automated.

- Percent of rollbacks that were automated versus manual.

- Recovery success rate on the first attempt.

- RTO target versus actual for each incident.
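Most of these numbers come from a simple per-rollback record. Each record is assumed to carry the trigger and stable timestamps, whether automation performed the rollback, whether the first attempt worked, and the service's RTO. Change fail rate follows the DORA framing: failed deployments divided by total deployments. All names are hypothetical, and the sketch assumes at least one deploy and one rollback in the period.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class RollbackRecord:
    triggered_at: datetime
    stable_at: datetime
    automated: bool
    first_attempt_ok: bool
    rto: timedelta


def rollback_metrics(deploys: int, failed_deploys: int,
                     records: list[RollbackRecord]) -> dict[str, float]:
    """Monthly roll-up for the leadership report."""
    recovery = [r.stable_at - r.triggered_at for r in records]
    n = len(records)
    return {
        "mttr_minutes": mean([d.total_seconds() / 60 for d in recovery]),
        "change_fail_rate": failed_deploys / deploys,
        "pct_automated": sum(r.automated for r in records) / n,
        "first_attempt_success": sum(r.first_attempt_ok for r in records) / n,
        "rto_met": sum(d <= r.rto for d, r in zip(recovery, records)) / n,
    }
```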

I make it business-fluent:

- SLA adherence by tier.

- Error-budget burn and how release pace adjusted.

- SLA credits avoided due to quick recovery.

- Churn risk avoided when incidents stayed under target thresholds.

FAQ

Q: What is the difference between a rollback and a backout plan?

A: A rollback reverses a change that reached users. A backout plan stops and undoes a change still in progress, often for infra or config, before it fully lands. See Backout vs Rollback Plan.

Q: When should I roll forward instead of rolling back?

A: If the fix is small, tested, and can ship faster than a rollback, I roll forward. This is common with feature flags and minor config mistakes. Roll-forward is also safer after irreversible database changes.

Q: How fast should a rollback be for enterprise apps?

A: Many teams set an RTO of five to fifteen minutes for customer-facing services. Blue/green traffic flips can beat that. Large data changes often need longer, so I plan for a three-stage path with a fast toggle, a quick app redeploy, and a slower data remediation.

Q: Should I ever roll back the database?

A: Only as a last resort. Prefer forward-only migrations, expand then contract, and data backfills. If I must restore, I use point-in-time recovery and communicate the data window at risk.

Q: How do feature flags help with rollbacks?

A: Flags let me switch off risky features in seconds without redeploying. They support gradual exposure and safer tests in production. I clean up flags quickly to avoid debt.

Q: What metrics prove rollback effectiveness to executives?

A: MTTR trends, change fail rate, percent automated, and how RTO targets compare to actuals. I tie those to SLA adherence, credits avoided, and improved pipeline stability.

Q: Which tools support automated rollbacks?

A: Octopus Deploy, Argo Rollouts, and Spinnaker handle versioned releases and traffic control. Azure DevOps and GitHub Actions can run rollback workflows. Pair them with Datadog, New Relic, or Prometheus for signals, and LaunchDarkly for flags. For Octopus-specific patterns, explore Runbooks and rollback templates like Calculate Deployment Mode.

A final word. Change rollback procedures are more than a safety net. They are a sign of accountable engineering. I keep the playbook short. I rehearse often. I measure everything. Then I let the team ship with confidence, knowing recovery is quick, clean, and calm.
