I want AI to pull its weight, not create more noise. If you run a B2B service company, you’re likely juggling long sales cycles, smart buyers, and a slammed team. You don’t need another dashboard. You need a clear way to track what AI changes, when it shows up in revenue, and how to hold teams accountable without micromanaging. This AI measurement framework delivers a practical ladder from data to dollar impact, with fast signals and CFO-friendly math.

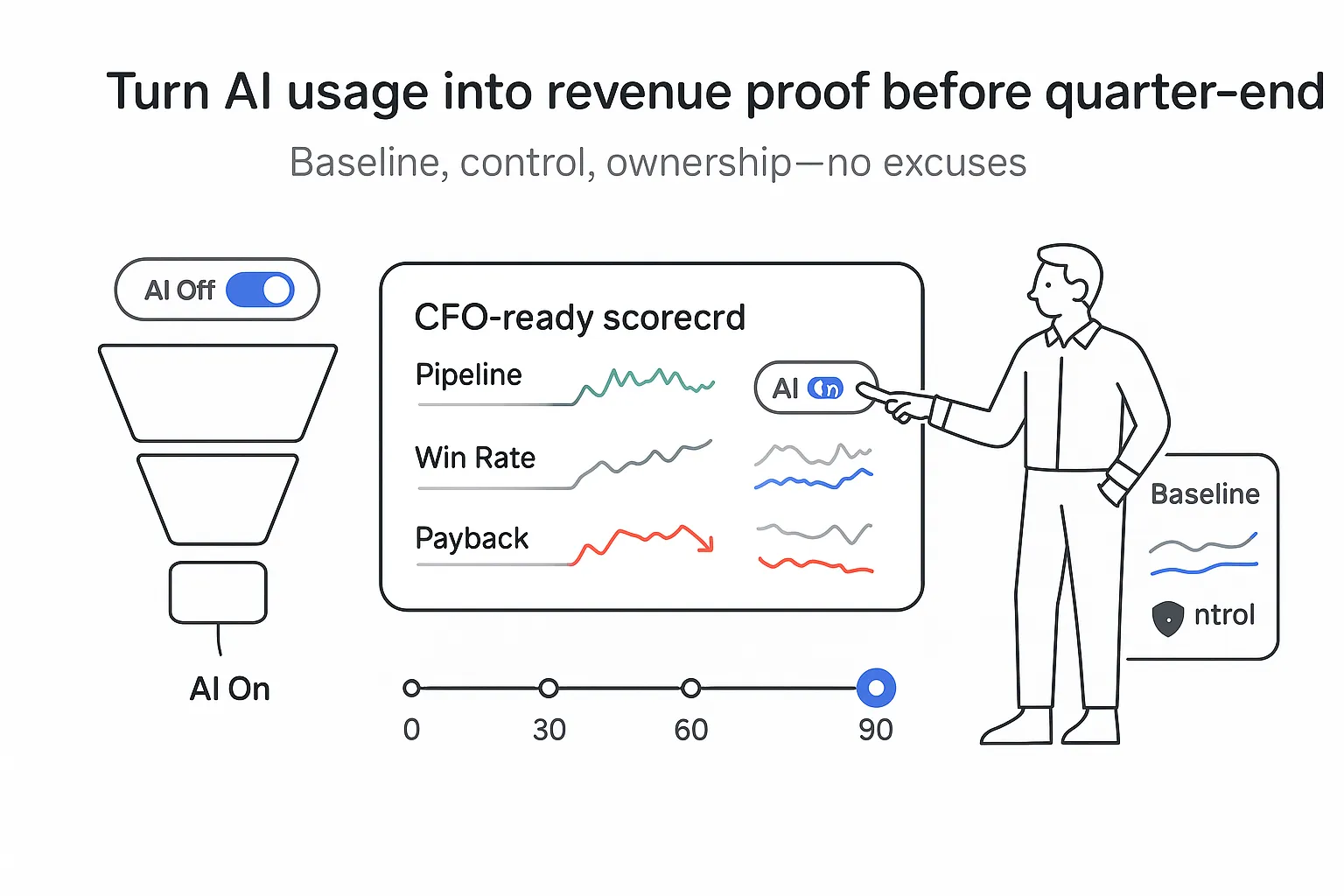

At a glance: the AI measurement framework

Timeline and expectations

- 4 to 6 weeks to baseline core usage and outcome metrics per team

- 90 days to a stable adoption baseline (leading indicators you can trust)

- Around 180 days to an early, defensible ROI signal

Early outcome ranges to watch (validate in a controlled test)

- Qualified pipeline added: 8 to 15% lift from better routing, cleaner notes, and faster follow-ups

- Sales cycle reduction: 10 to 20% via quicker proposals, clearer next steps, fewer handoffs

- Meeting no-show reduction: 15 to 25% via reminders, recap emails, and calendar checks

- Win rate change: 5 to 12 points when AI guides stage moves, next actions, and objection handling

- CAC payback: target under 9 months by redeploying time saved and improving conversion in typical B2B service motions

Framework summary

- Inputs: data quality, access, governance

- Enablement: training, prompts, playbooks

- Usage: adoption and AI-assisted actions in the flow of work

- Outcomes: pipeline, win rate, cycle time, cost to acquire

Sample scorecard snapshot

- Data readiness score: 78/100

- Daily active users on AI: 62%

- AI-assisted email send rate: 55%

- Forecast accuracy delta: +6 points

- Win rate change vs baseline: +4.2 points

- Cycle time reduction: 13%

The ladder: from data to business impact

I run the same model across sales, marketing, and customer success to keep the math clean and the narrative simple. Move from data to enablement to usage to outcomes - with clear owners and a steady cadence.

Data

- Systems: CRM, meeting recordings, email and calendar, billing

- Hygiene: quality checks, deduplication, required fields, aligned stage definitions

- Controls: permissions, PII guardrails, audit trails

Enablement

- Training, prompt libraries, playbooks

- User provisioning and access

- Process documentation and governance acknowledgement

Usage

- AI-assisted actions embedded in daily workflows

- Assisted call notes, email drafts, next-step recommendations, proposal drafts

Outcomes

- First efficiency wins (time to notes, proposal turnaround)

- Then revenue lift and cost efficiency (win rate, cycle time, CAC)

Ownership and cadence

- RevOps: metric definitions, baselines, dashboards, A/B test setup

- PMO: rollout plan, risks, change management

- Sales leadership: adoption targets, coaching, process adjustments

- Rhythm: weekly usage check, monthly outcome review, quarterly ROI readout

Common pitfalls to avoid

- Weak data foundations: fix required fields, dedupe accounts, and align stage definitions before you automate

- No control group: always hold out a team or territory to avoid chasing shadows

- Correlation vs causation: tie AI-assisted actions to stage moves and wins via attribution rules, not guesses

Leading indicators: readiness, enablement, and use

I track two buckets early: preparedness and enablement, then use and engagement. If the groundwork is shaky, the fun stuff won’t stick. If people don’t use AI in the flow of work, nothing moves.

Preparedness and enablement metrics

- Data readiness score: standardized across CRM, calls, emails, calendar

- Percent CRM fields AI-enabled: clear definitions and validation rules in place

- Percent reps or CSMs provisioned: licenses assigned, permissions set

- Prompt library completeness: core prompts for research, summaries, emails, proposals

- Process documentation: AI-related workflows published in the playbook

- Governance compliance: policy acknowledgment, PII guardrails, escalation paths

Use and engagement metrics

- Daily active users (DAU): percent of licensed users active each day, tracked alongside the weekly active share

- Assisted call notes percent: calls with AI summaries captured to CRM

- AI-assisted email send rate: messages drafted or checked by AI

- Next-step acceptance rate: AI-recommended actions accepted and logged

- AI-generated proposals used: proposals drafted with assistance and sent to buyers

- Time to CRM hygiene: minutes from meeting end to updated opportunity next steps

30/60/90-day micro-goals

- Day 30: data readiness 70/100; 80% provisioned; 40% DAU

- Day 60: data readiness 75/100; 60% assisted call notes; 50% AI-assisted email send rate

- Day 90: data readiness 80/100; 65% next-step acceptance; 55% AI-generated proposals used

Adoption heatmap example

- Team A: DAU 68% | Notes 72% | Proposals 58%

- Team B: DAU 51% | Notes 63% | Proposals 49%

- Team C: DAU 77% | Notes 81% | Proposals 66%

- Team D: DAU 34% | Notes 29% | Proposals 21%

Tip: set thresholds where managers get alerts if adoption drops below 40% for a week.
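As a rough sketch of that alert rule, the snippet below flags any team whose adoption stays under the 40% floor for a full week. It assumes "for a week" means seven consecutive daily DAU readings. The function name `teams_to_alert` and the daily readings are hypothetical illustrations, not part of any specific tool.

```python
from typing import Dict, List

ALERT_THRESHOLD = 0.40  # 40% adoption floor from the tip
ALERT_DAYS = 7          # assumption: "a week" = 7 consecutive daily readings


def teams_to_alert(daily_dau: Dict[str, List[float]],
                   threshold: float = ALERT_THRESHOLD,
                   days: int = ALERT_DAYS) -> List[str]:
    """Return teams whose last `days` readings are all below `threshold`."""
    flagged = []
    for team, readings in daily_dau.items():
        recent = readings[-days:]
        # Require a full window so a new team with sparse data is not flagged.
        if len(recent) == days and all(r < threshold for r in recent):
            flagged.append(team)
    return sorted(flagged)


# Hypothetical daily DAU shares, loosely echoing the heatmap levels.
sample = {
    "Team A": [0.68] * 7,
    "Team D": [0.36, 0.35, 0.34, 0.33, 0.34, 0.35, 0.34],
}
print(teams_to_alert(sample))
```

Requiring every reading in the window to be below the floor keeps a single bad day from paging a manager. Averaging over the week would be a reasonable alternative.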

Lagging indicators: experiments and outcome signals

This is where activity turns into outcomes. Keep it clean and time-bound: capture at least 6 weeks of baseline per team, then run treatment vs control for 8 to 12 weeks. If seasonality is strong, reference the last 12 months.

Outcome metrics to track

- Meetings booked: total and per rep

- Qualified opportunities: count and percent of meetings that convert

- Pipeline velocity: (qualified pipeline value × win rate) ÷ cycle length in days

- Proposal turnaround time: hours from request to first draft sent

- Forecast accuracy delta: improvement in absolute forecast error for the quarter, in points versus baseline

- Stage progression rate: share of deals moving from stage to stage

- Win rate: closed-won deals divided by qualified opportunities

- Average deal size: average ACV in period

- Sales cycle length: median days from qualification to close
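Two of the definitions above reduce to one-line formulas. Here is a minimal sketch, assuming pipeline value is the sum of qualified opportunity amounts and cycle length is measured in days. The function names and the input figures are illustrative only.

```python
def win_rate(closed_won: int, qualified: int) -> float:
    """Closed-won deals divided by qualified opportunities."""
    if qualified <= 0:
        raise ValueError("qualified must be positive")
    return closed_won / qualified


def pipeline_velocity(qualified_pipeline_value: float,
                      rate: float,
                      cycle_days: float) -> float:
    """Expected bookings per day: pipeline value x win rate / cycle length."""
    if cycle_days <= 0:
        raise ValueError("cycle_days must be positive")
    return qualified_pipeline_value * rate / cycle_days


# Illustrative inputs: 400 opportunities at 25,000 each, 88 wins, 74-day cycle.
rate = win_rate(closed_won=88, qualified=400)
velocity = pipeline_velocity(400 * 25_000, rate, 74)
print(f"win rate {rate:.0%}, velocity {velocity:,.0f} per day")
```

Holding these definitions in one place makes it easier to keep treatment and control teams on the same math.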

Experiment design notes

- Split: A/B by team, region, or segment; keep territories stable during the window

- Baseline window: minimum 6 weeks; for strong seasonality, use 12-month bands for context

- Minimal detectable effect: define the smallest lift worth acting on (for example, 5-point win-rate lift at 80% power, 5% significance); size your sample based on average opportunity count
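To make the sample-sizing step concrete, here is a hedged sketch using the standard normal-approximation formula for comparing two proportions (two-sided test). It relies only on the Python standard library. The 22% baseline win rate is an assumed input for illustration, and `sample_size_per_arm` is a hypothetical helper name.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_arm(p_control: float, p_treatment: float,
                        alpha: float = 0.05, power: float = 0.80) -> int:
    """Opportunities needed in each arm for a two-sided two-proportion z-test."""
    if p_control == p_treatment:
        raise ValueError("lift must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_power = NormalDist().inv_cdf(power)          # critical value for power
    p_bar = (p_control + p_treatment) / 2          # pooled rate under the null
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_power * sqrt(p_control * (1 - p_control)
                                  + p_treatment * (1 - p_treatment))) ** 2
    return ceil(numerator / (p_treatment - p_control) ** 2)


# Assumed baseline of 22% and a 5-point minimal detectable effect.
n = sample_size_per_arm(0.22, 0.27)
print(n)
```

If the required count is larger than what the pilot window will produce, the usual options are a longer window, a larger minimal detectable effect, or a faster-moving metric such as stage progression.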

Simple calculator example

Inputs

- 400 qualified opportunities per quarter

- Average deal size 25,000

- Current win rate 22%

- Cycle length 74 days

- Proposed win-rate lift 6 points on AI-assisted deals

- Share of deals with AI assistance 60%

Projected lift

- Baseline wins: 400 × 0.22 = 88 deals

- AI wins: 400 × 0.60 × 0.28 = 67.2

- Non-AI wins: 400 × 0.40 × 0.22 = 35.2

- New total wins: 102.4

- Added wins: 14.4 deals or 360,000 in bookings

- If gross margin is 65%, gross profit lift ≈ 234,000 for the quarter
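The same arithmetic can be sketched as a small function so the inputs are easy to vary. The function and field names below are illustrative. The numbers are the ones from the calculator above.

```python
def projected_lift(opps: float, deal_size: float, base_win_rate: float,
                   lift_points: float, ai_share: float, gross_margin: float) -> dict:
    """Project added wins, bookings, and gross profit from a win-rate lift."""
    baseline_wins = opps * base_win_rate
    ai_wins = opps * ai_share * (base_win_rate + lift_points)
    non_ai_wins = opps * (1 - ai_share) * base_win_rate
    added_wins = ai_wins + non_ai_wins - baseline_wins
    added_bookings = added_wins * deal_size
    return {
        "baseline_wins": baseline_wins,
        "new_total_wins": ai_wins + non_ai_wins,
        "added_wins": added_wins,
        "added_bookings": added_bookings,
        "gross_profit_lift": added_bookings * gross_margin,
    }


result = projected_lift(opps=400, deal_size=25_000, base_win_rate=0.22,
                        lift_points=0.06, ai_share=0.60, gross_margin=0.65)
for name, value in result.items():
    print(f"{name}: {value:,.1f}")
```

Because the lift only applies to the AI-assisted share, adoption is a direct multiplier on the result. Halving `ai_share` halves the added wins.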

Seasonality normalization

- Build a simple monthly index using the last two years of bookings and meetings

- Apply the index to expected volumes during the test window

- Report raw and seasonally adjusted numbers side by side
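One simple way to build that index, sketched under the assumption that each month's index is its average volume divided by the average across all months. The history values below are made up for illustration, and the function names are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def monthly_index(history: Dict[int, List[float]]) -> Dict[int, float]:
    """Map month number to (that month's average) / (average of all months)."""
    month_avg = {month: mean(values) for month, values in history.items()}
    overall = mean(month_avg.values())
    return {month: avg / overall for month, avg in month_avg.items()}


def expected_volume(typical_month: float, month: int, index: Dict[int, float]) -> float:
    """Apply the index to a typical monthly volume to get the expectation."""
    return typical_month * index[month]


def seasonally_adjust(raw: float, month: int, index: Dict[int, float]) -> float:
    """Remove the seasonal effect from an observed value."""
    return raw / index[month]


# Made-up bookings for three months across two years (month -> [year 1, year 2]).
history = {1: [80.0, 100.0], 2: [100.0, 120.0], 3: [110.0, 130.0]}
index = monthly_index(history)
raw_january = 90.0
print(f"raw {raw_january:.1f} | adjusted {seasonally_adjust(raw_january, 1, index):.1f}")
```

Printing raw and adjusted values together mirrors the side-by-side reporting suggested above.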

Implementation in 90 days: start small, measure, scale

Where to start isn’t a mystery. Pick one high-leverage use case, wire the data, define success up front, and hold a standing review. A steady 90-day plan beats a sprawling rollout.

Suggested 90-day roadmap

- Weeks 1-2: pick one use case

  - Meeting summaries to CRM, proposal drafting, or account research briefs

- Weeks 2-3: secure data and governance

  - CRM field map, call-recording permissions, role access, PII checks

- Weeks 3-4: define success and guardrails

  - Target metrics, acceptance criteria, red lines for human approval

- Weeks 4-5: train the team

  - Short live sessions, quick videos, prompt library in your wiki

- Weeks 5-6: instrument tracking

  - Instrument events in your CRM, connect to your warehouse, build a BI view

- Weeks 6-10: launch pilot

  - Two teams in treatment, one in control; weekly usage review, coaching, and fixes

- Weeks 10-12: review and scale

  - Compare against baselines and control; keep what works, pause what doesn’t, plan the next use case

RACI for clarity

- Responsible: RevOps

- Accountable: CRO

- Consulted: Sales managers, Legal, Security

- Informed: Finance, IT

Pilot readiness checklist

- Data: required fields validated; meeting and email data accessible

- Governance: AI policy signed; PII redaction on call transcripts

- Enablement: users provisioned; prompt library live

- Measurement: baseline captured; control group locked; dashboards built

- Review: weekly usage standup; monthly results readout

Roadmap swimlanes

- Enablement: provisioning, training, prompts

- Data and ops: integrations, field mapping, redaction

- Usage: summaries, emails, proposals

- Results: pipeline, cycle, wins

Agentic AI: speed with guardrails

Agentic AI can run autonomous workflows - drafting outreach, sequencing follow-ups, summarizing calls, logging notes, and updating CRM fields. That speed is great; guardrails keep it safe.

Workflow examples

- After a call, the agent summarizes outcomes, sets next steps, updates the opportunity, and drafts a recap email for approval

- For a new lead, the agent enriches the account, runs a fit score, places the lead in a sequence, and schedules the first touch if calendars align

- For late-stage deals, the agent builds a proposal draft from templates and pricing rules, then routes it to the owner for review

Safeguards to track

- Handoff quality: percent of drafts accepted without edits

- Intervention rate: percent of actions requiring human correction

- Error rate: percent of actions that created a data error or missed a policy rule

- Time to correction: minutes from flag to fix

- Precision thresholds by action type

  - Low-risk (call summaries, next steps): target >95% precision before automation

  - Medium-risk (email sends): keep a human in the loop until >90% precision

  - High-risk (pricing, contractual language): keep human approval at all times
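The precision thresholds can be expressed as a small gate that decides whether an action type may run without approval. This is a sketch only. The `Risk` enum and `can_automate` function are hypothetical names, and how precision is measured (for example, share of sampled actions judged correct by a reviewer) is left as an assumption.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"        # call summaries, next steps
    MEDIUM = "medium"  # email sends
    HIGH = "high"      # pricing, contractual language


# Precision a workflow must exceed before the human approval step is removed.
AUTOMATION_THRESHOLDS = {Risk.LOW: 0.95, Risk.MEDIUM: 0.90}


def can_automate(risk: Risk, measured_precision: float) -> bool:
    """Return True only if the action may run without human approval."""
    if risk is Risk.HIGH:
        return False  # high-risk actions always keep human approval
    return measured_precision > AUTOMATION_THRESHOLDS[risk]


print(can_automate(Risk.LOW, 0.97))     # above the 95% bar
print(can_automate(Risk.MEDIUM, 0.88))  # below the 90% bar
print(can_automate(Risk.HIGH, 0.99))    # never automated
```

Keeping the thresholds in one table makes it straightforward to tighten or loosen a single action type without touching the rest.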

Agentic flow with control points

- Listen to call → summarize → extract fields → update CRM → draft recap email → human approve

- Plan next step → recommend action → schedule task or send sequence → human approve for medium risk

- Proposal draft → pull template → fill pricing → flag exceptions to manager → human approve

Measurement twist

- Tie agentic actions to stage progression (for example, when recap emails go out within two hours and next steps are logged, stage-two-to-stage-three conversion rises from 41% to 49%)

- Set weekly thresholds: if intervention rate exceeds 15% for two weeks, pause that workflow and retrain prompts or templates
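The pause rule is easy to automate. A minimal sketch, assuming intervention rate is logged once per week as a fraction and that "for two weeks" means the two most recent consecutive weeks. The name `should_pause` and the sample rates are illustrative.

```python
from typing import List


def should_pause(weekly_intervention_rates: List[float],
                 threshold: float = 0.15,
                 weeks: int = 2) -> bool:
    """True if the last `weeks` weekly rates all exceed `threshold`."""
    recent = weekly_intervention_rates[-weeks:]
    return len(recent) == weeks and all(rate > threshold for rate in recent)


# Illustrative weekly intervention rates for two workflows.
recap_emails = [0.09, 0.12, 0.17, 0.18]    # two straight weeks above 15%
proposal_drafts = [0.16, 0.11, 0.17, 0.14]  # spikes, but never two in a row at the end

print(should_pause(recap_emails))
print(should_pause(proposal_drafts))
```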

ROI: CFO-grade math without the fluff

Finance wants math, not magic. Use a simple model: AI-attributed gross profit gains minus all-in costs over 6 to 12 months. Only count realized gains (for example, time actually redeployed to revenue-generating work), not theoretical time saved.

Core formula

AI ROI = (Incremental gross profit − Total AI costs) ÷ Total AI costs

Where incremental gross profit equals

- Added bookings from win-rate lift × gross margin

- Plus added bookings from cycle-time improvements × gross margin

- Plus retained bookings from reduced no-shows or churn × gross margin

- Plus capacity redeployed × average productivity × gross margin

Total AI costs include

- Licenses and usage fees

- Enablement time for managers and reps

- RevOps and IT hours for setup and data work

- Ongoing administration and monitoring

Fast signals vs slower signals

Fast (weeks)

- Proposal time saved: hours saved per rep × number of reps × hourly productivity value

- CRM hygiene speed: time from meeting end to updated next step; expect fewer stalled deals

Slower (months)

- Win-rate uplift: controlled test results over at least one full cycle

- Forecast accuracy: smaller misses enable better resource allocation

Worked example

Inputs

- 40 sellers

- Two hours saved per week per seller from summaries and email drafts

- Productivity value per hour 120

- Quarter length 13 weeks

- Gross margin 65%

- AI subscription and ops costs per quarter 180,000

- Win-rate lift adds 360,000 bookings (as in the earlier calculator)

Time-savings gross profit

- Time saved value = 40 × 2 × 13 × 120 = 124,800

- Gross profit on time savings = 124,800 × 0.65 = 81,120

Revenue-lift gross profit

- 360,000 × 0.65 = 234,000

Total gross profit lift

- 81,120 + 234,000 = 315,120

AI ROI

- Net = 315,120 − 180,000 = 135,120

- ROI = 135,120 ÷ 180,000 ≈ 75% for the quarter

- CAC payback view: if AI reduces blended cost per opportunity by ~12% and lifts conversion, payback often shortens by 1-2 quarters in B2B service motions
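For anyone who wants to rerun the worked example with their own inputs, the calculation can be sketched as follows. Variable and function names are illustrative. The figures are the ones used above.

```python
def ai_roi(incremental_gross_profit: float, total_ai_costs: float) -> float:
    """(Incremental gross profit - total AI costs) / total AI costs."""
    if total_ai_costs <= 0:
        raise ValueError("total_ai_costs must be positive")
    return (incremental_gross_profit - total_ai_costs) / total_ai_costs


sellers = 40
hours_saved_per_week = 2
weeks_in_quarter = 13
value_per_hour = 120
gross_margin = 0.65
quarterly_ai_costs = 180_000
added_bookings = 360_000  # from the win-rate calculator

time_saved_value = sellers * hours_saved_per_week * weeks_in_quarter * value_per_hour
gp_time_savings = time_saved_value * gross_margin
gp_revenue_lift = added_bookings * gross_margin
total_gp_lift = gp_time_savings + gp_revenue_lift
roi = ai_roi(total_gp_lift, quarterly_ai_costs)

print(f"time saved value: {time_saved_value:,}")
print(f"total gross profit lift: {total_gp_lift:,.0f}")
print(f"quarterly ROI: {roi:.0%}")
```

Only feed in hours that were actually redeployed to revenue work. Otherwise the time-savings term overstates the return.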

From leading to lagging to dollars

- Leading indicators show adoption: daily active users, assisted notes, next-step acceptance

- Lagging indicators show outputs: faster proposals, tighter forecasts, higher stage conversion

- Dollars follow: more wins at the same or lower cost, shorter cycles, higher gross profit

Scorecard template to keep everyone aligned

- Inputs and enablement: data readiness 80+; provisioned users 95% in pilot teams; policy compliance 100%

- Usage: DAU 60% (weekly average); assisted notes on 65% of calls; next-step acceptance 60%+

- Outcomes: proposal turnaround down 30%; forecast accuracy +5 to +10 points; stage-two-to-three conversion +6 to +10 points; win rate +5 to +12 points; sales cycle down 10 to 20%

- Financials: bookings lift tracked and attributed; gross profit lift tracked; all-in AI costs captured; ROI reported quarterly and on a trailing 12-month basis

Final thought for CEOs who want ownership without extra meetings

- Keep the framework tight and repeatable: one ladder, four layers, clear owners

- Hold a 15-minute weekly usage huddle with RevOps and managers - no slides, just adoption, blockers, and quick wins

- Run a monthly results review with Finance and Sales leadership - decisions only; keep what works, pause what doesn’t, set the next test

- Publish a two-page quarterly summary that maps leading indicators to lagging indicators to gross profit; that’s a story everyone can follow

Keep your tool choices simple and your definitions strict. When the basics are tight and the cadence is steady, the framework does the heavy lifting.
