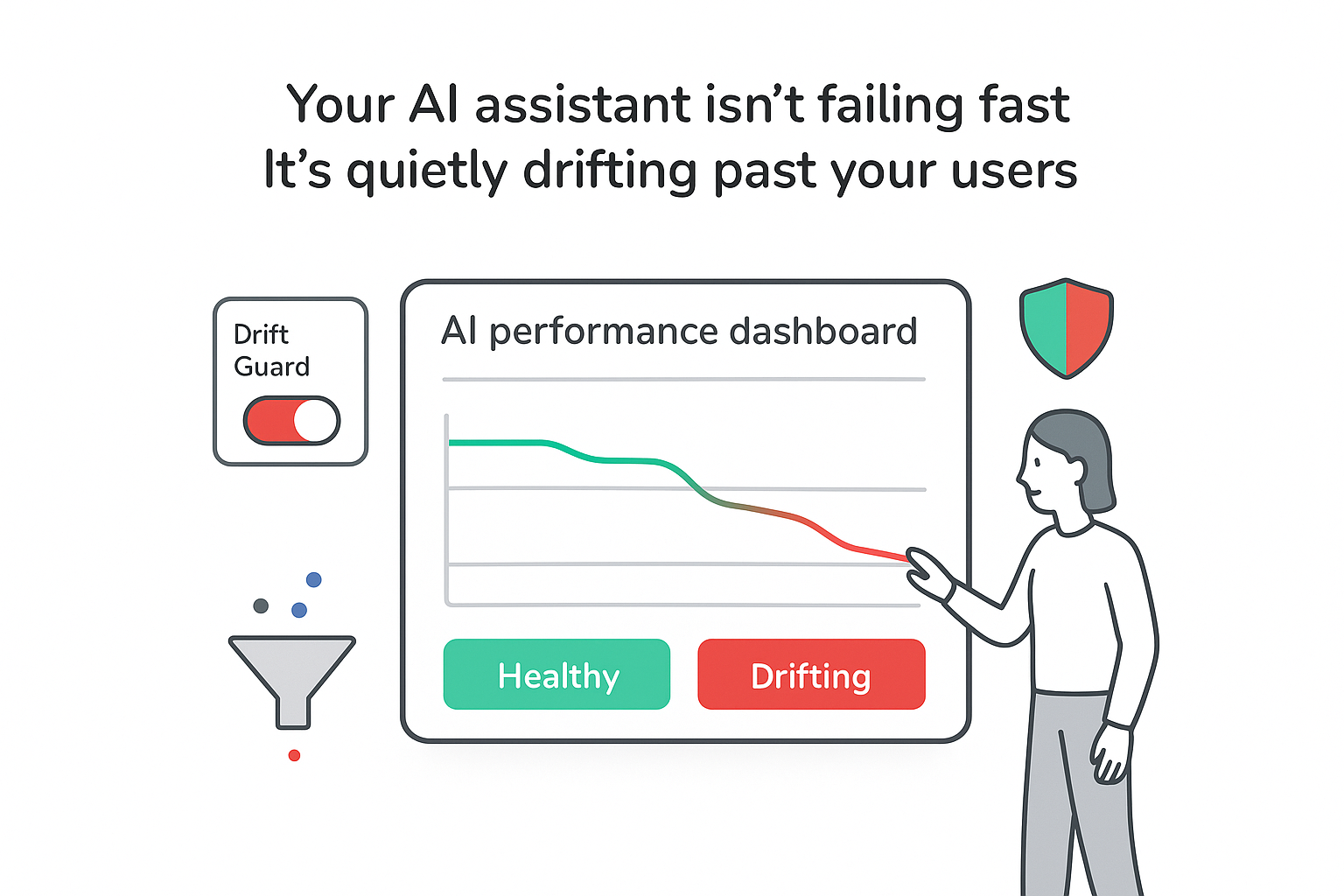

Your AI agents rarely fail overnight. In my experience, they fade. The support bot that once handled tickets cleanly starts giving odd answers. The sales assistant that used to qualify leads well begins to miss intent or recommend the wrong tier. Internally, a copilot starts sounding slightly “off,” quoting old policies or outdated pricing. On paper, the model looks unchanged. In production, performance slides.

That slow decay has a name: data drift.

Why data drift quietly breaks LLMs and AI agents

The pattern is predictable. In staging, an LLM setup looks great: demo calls are smooth, sample chats are accurate, and everything sounds on-brand. Then it ships to real users, and a few weeks later the cracks show:

- A support assistant suggests legacy SLAs that no longer exist.

- A sales bot keeps recommending a package retired last quarter.

- An internal copilot points people to a process doc that was archived months ago.

Nothing “broke” in an obvious way. The base model still responds. Infrastructure looks fine. But the system your team trusted now creates friction with customers, annoys staff, and quietly chips away at revenue.

I separate this from one-off hallucinations. Hallucinations are isolated made-up facts that can happen even in a stable environment. Data drift is systemic: inputs, documents, APIs, or business rules change, and the AI stack does not keep up. For a B2B service business, that usually translates into lower-quality leads, workflow failures when integrations change, and (most damaging) internal teams losing trust because they do not know when the agent will be wrong. If you are using chat as a conversion surface, drift becomes even more expensive; see “Do AI chatbots increase conversions on small business sites?”

What data drift is (and how it differs from concept drift)

Data drift happens when the data your model or agent sees in production no longer resembles the data it was trained on or evaluated against. You validated the system on one snapshot of reality, then customers, processes, and tools moved on.

In practice, drift can appear in structured sources (CRM fields, ticket properties, billing data, pipeline stages) and unstructured sources (playbooks, SOW templates, knowledge base articles, chat logs). For LLMs and agentic systems, it also shows up in the surrounding stack: retrieval indexes fall out of date, prompts still reflect old product language, and tool schemas change without the agent understanding the new contract. This is one reason teams investing in marketing data lakes that serve LLM use cases tend to recover faster when systems change.

It also helps to draw a clean line between data drift and concept drift. Data drift is about how inputs change (for example, your pipeline shifts from SMB-heavy to enterprise-heavy). Concept drift is about how the definition of “correct” changes (for example, “qualified lead” used to mean “demo booked,” and now it means “budget confirmed plus NDA signed”). Concept drift is why an agent can behave consistently and still be wrong for the business.
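To make the concept-drift case concrete, here is a minimal sketch in which the same lead is judged by two versions of a qualification rule. The field names (`demo_booked`, `budget_confirmed`, `nda_signed`) and both rule functions are hypothetical, chosen only to mirror the example above.

```python
from dataclasses import dataclass

@dataclass
class Lead:
    demo_booked: bool
    budget_confirmed: bool
    nda_signed: bool

# Hypothetical v1 definition: a booked demo was enough.
def is_qualified_v1(lead: Lead) -> bool:
    return lead.demo_booked

# Hypothetical v2 definition: budget confirmed plus NDA signed.
def is_qualified_v2(lead: Lead) -> bool:
    return lead.budget_confirmed and lead.nda_signed

lead = Lead(demo_booked=True, budget_confirmed=False, nda_signed=False)
# The input is identical; only the business meaning changed.
print(is_qualified_v1(lead), is_qualified_v2(lead))  # True False
```

An agent still applying the v1 logic behaves perfectly consistently, yet every lead like this one is now misclassified. Keeping definitions like this in versioned code or config, rather than buried in prose inside a prompt, is one way to make the change visible when it happens.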

The three drift types I see most in production

When I diagnose drift, I usually reduce it to three questions: did the structure change, did patterns change, or did meaning change?

Schema drift (structure changes): fields are renamed, added, removed, or their types shift; API payloads change shape; required parameters appear. Agents may keep responding, but with missing context or wrong assumptions.

Statistical drift (pattern changes): distributions shift as new regions, currencies, ticket topics, jargon, or deal sizes become common. The schema looks the same, but what flows through it is different.

Concept drift (meaning changes): the relationship between inputs and the “right” output changes - lead qualification rules tighten, priority definitions evolve, or SLAs start depending on customer tier instead of issue type.

Here’s the quick view I use to make this concrete for LLM and agent setups:

| Drift type | Plain description | LLM/agent example |
|---|---|---|
| Schema drift | Structure or field names change | Tool expects plan_tier, source now uses subscription_level |
| Statistical drift | Value ranges or patterns shift | New regions/currencies appear; lead scoring skews high or low |
| Concept drift | Business meaning or rules change | “Qualified lead” definition changes; routing logic stays old |

In real systems, I almost never see these in isolation. That’s why one-time tuning is not enough for a live assistant.
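Schema drift is usually the cheapest of the three to catch, because it can be checked mechanically. A minimal sketch, assuming the tool's expected fields are kept as a simple mapping of field name to Python type (a hypothetical format; a JSON Schema validator would be a more complete option). The `account_id` and `seats` fields are illustrative additions to the `plan_tier` example from the table.

```python
from typing import Any

# Hypothetical contract for the tool from the table above.
EXPECTED_FIELDS: dict[str, type] = {
    "account_id": str,
    "plan_tier": str,
    "seats": int,
}

def schema_drift(payload: dict[str, Any], expected: dict[str, type]) -> dict[str, list[str]]:
    """Report missing, unexpected, and wrongly typed fields in one payload."""
    missing = sorted(k for k in expected if k not in payload)
    unexpected = sorted(k for k in payload if k not in expected)
    wrong_type = sorted(
        k for k, t in expected.items()
        if k in payload and not isinstance(payload[k], t)
    )
    return {"missing": missing, "unexpected": unexpected, "wrong_type": wrong_type}

# The source system renamed plan_tier and started sending seats as a string.
incoming = {"account_id": "A-17", "subscription_level": "pro", "seats": "25"}
print(schema_drift(incoming, EXPECTED_FIELDS))
# {'missing': ['plan_tier'], 'unexpected': ['subscription_level'], 'wrong_type': ['seats']}
```

A missing field paired with an unexpected one is often a rename. The check can flag it, but a person still has to decide whether `subscription_level` really means the same thing as `plan_tier`.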

Why drift happens (especially in B2B service companies)

Drift is not a sign your team built something “bad.” It’s a sign the business is alive.

The causes I see most often are product and service changes (new packages, renamed tiers, pricing updates), shifting customer behavior and language (a new vertical brings new terms and priorities), data pipeline or integration refactors (fields consolidated, sources merged, event names updated), knowledge base churn (docs updated, archived, reorganized), and API or tooling changes (new versions, deprecations, altered error behavior). When these changes break downstream expectations, you often get the AI equivalent of data downtime: the system is “up,” but its outputs are no longer reliable.

The important point is this: most of these changes are healthy business progress. Drift is what happens when the AI layer trails behind that progress.

How I detect drift before users complain

Detection does not have to be expensive or complicated. What you want is a small set of signals that tell you the system is sliding before it becomes a customer-visible incident.

Prompt and intent shift: sample and cluster anonymized prompts over time. If a growing share of new prompts sits far from historical clusters, user intent has changed (new industry, new service line, new terminology).
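A minimal sketch of the intent-shift check, assuming you already have an embedding vector for each anonymized prompt and a set of historical cluster centroids (how you embed and cluster is left open). It reports the share of new prompts whose best cosine similarity to any historical centroid falls below a threshold; the 0.75 value is an arbitrary placeholder to tune on your own data.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def novel_share(new_vecs: list[list[float]], centroids: list[list[float]],
                threshold: float = 0.75) -> float:
    """Fraction of new prompts that sit far from every historical cluster."""
    if not new_vecs:
        return 0.0
    if not centroids:
        return 1.0  # no history yet, so everything counts as new
    far = sum(
        1 for v in new_vecs
        if max(cosine(v, c) for c in centroids) < threshold
    )
    return far / len(new_vecs)

# Toy 2-D vectors; real embeddings have hundreds of dimensions.
historical_centroids = [[1.0, 0.0], [0.0, 1.0]]
this_week = [[0.9, 0.1], [0.1, 0.95], [0.7, 0.7], [0.6, 0.6]]
print(novel_share(this_week, historical_centroids))  # 0.5
```

The number to watch is the trend: a share that keeps rising week over week suggests new intents, not noise.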

Structured-field stability: track simple distributions on a few high-leverage fields (industry, region, deal size, customer tier, ticket priority). When those move sharply, downstream behaviors tend to move with them. Metrics such as the population stability index (PSI) can quantify “how much changed” between time windows.
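PSI compares the share of records in each category between a baseline window and a current window, summing (current share − baseline share) × ln(current share / baseline share) over all categories. Here is a minimal stdlib sketch for a categorical field. The small epsilon for empty categories is an assumption to avoid division by zero, and the commonly quoted cut-offs (below 0.1 stable, 0.1 to 0.25 moderate, above 0.25 significant) are rules of thumb rather than standards.

```python
import math
from collections import Counter

def psi(baseline: list[str], current: list[str], eps: float = 1e-4) -> float:
    """Population stability index between two samples of a categorical field."""
    categories = set(baseline) | set(current)
    b_counts, c_counts = Counter(baseline), Counter(current)
    total = 0.0
    for cat in categories:
        # Share of records in this category, floored at eps for empty buckets.
        b = max(b_counts[cat] / len(baseline), eps)
        c = max(c_counts[cat] / len(current), eps)
        total += (c - b) * math.log(c / b)
    return total

last_quarter = ["SMB"] * 80 + ["Enterprise"] * 20
this_month = ["SMB"] * 50 + ["Enterprise"] * 50
print(round(psi(last_quarter, this_month), 3))  # 0.416
```

On this toy data, a pipeline moving from SMB-heavy to an even mix scores about 0.42, well into the “significant” band by that rule of thumb. For numeric fields such as deal size, you would bin the values first and apply the same formula to the bins.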

Retrieval quality in RAG setups: log what gets retrieved and how often the retrieved content actually supports the answer. If relevant documents stop appearing near the top of the results, or answers stop using the retrieved documents, performance will decay even if the base model is strong.
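A minimal sketch of two retrieval metrics computed from logs, assuming each log record holds the ranked retrieved document IDs, the IDs a reviewer marked as relevant, and the IDs the answer cited. The record shape, field names, and document IDs are hypothetical; citation is used here as a simple proxy for “the answer used the document.”

```python
from dataclasses import dataclass, field

@dataclass
class RetrievalLog:
    retrieved: list[str]                          # ranked document IDs
    relevant: set[str]                            # IDs judged relevant for the prompt
    cited: set[str] = field(default_factory=set)  # IDs the answer referenced

def hit_rate_at_k(logs: list[RetrievalLog], k: int = 3) -> float:
    """Share of prompts with at least one relevant doc in the top k results."""
    hits = sum(1 for r in logs if r.relevant & set(r.retrieved[:k]))
    return hits / len(logs) if logs else 0.0

def grounding_rate(logs: list[RetrievalLog]) -> float:
    """Share of answers that cite at least one retrieved document."""
    grounded = sum(1 for r in logs if r.cited & set(r.retrieved))
    return grounded / len(logs) if logs else 0.0

logs = [
    RetrievalLog(["kb-12", "kb-7", "kb-3"], {"kb-7"}, {"kb-7"}),
    RetrievalLog(["kb-old-1", "kb-old-2", "kb-9", "kb-4"], {"kb-4"}),
]
print(hit_rate_at_k(logs, k=3), grounding_rate(logs))  # 0.5 0.5
```

In the second record the relevant document is retrieved but ranked fourth, behind outdated sources, which is exactly the kind of quiet slide these two numbers are meant to surface.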

Tool-call reliability: for agents that call tools, watch tool usage rate and tool failure rate. A spike in errors (or a sudden drop in tool usage) is often the earliest sign that a schema or API contract changed.
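A minimal sketch of that comparison, assuming each agent turn is logged with whether it called a tool and whether that call failed. It compares the current window to a baseline window and flags either a failure-rate spike or a usage drop; the ratio thresholds (2x and 0.5x) and the 1% floor are placeholders, not recommendations.

```python
from dataclasses import dataclass

@dataclass
class Turn:
    called_tool: bool
    tool_failed: bool = False

def rates(turns: list[Turn]) -> tuple[float, float]:
    """Return (tool usage rate across turns, failure rate across tool calls)."""
    calls = [t for t in turns if t.called_tool]
    usage = len(calls) / len(turns) if turns else 0.0
    failure = sum(t.tool_failed for t in calls) / len(calls) if calls else 0.0
    return usage, failure

def tool_alerts(baseline: list[Turn], current: list[Turn],
                failure_ratio: float = 2.0, usage_ratio: float = 0.5) -> list[str]:
    """Flag a failure-rate spike or a usage drop relative to the baseline window."""
    base_usage, base_fail = rates(baseline)
    cur_usage, cur_fail = rates(current)
    alerts = []
    # The 1% floor gives a zero-failure baseline a non-zero threshold.
    if cur_fail > max(base_fail * failure_ratio, 0.01):
        alerts.append(f"failure rate {base_fail:.0%} -> {cur_fail:.0%}")
    if cur_usage < base_usage * usage_ratio:
        alerts.append(f"usage rate {base_usage:.0%} -> {cur_usage:.0%}")
    return alerts

last_week = [Turn(True)] * 18 + [Turn(True, True)] + [Turn(False)]
this_week = [Turn(True)] * 5 + [Turn(True, True)] * 4 + [Turn(False)] * 11
print(tool_alerts(last_week, this_week))
# ['failure rate 5% -> 44%', 'usage rate 95% -> 45%']
```

Watching both directions matters: an agent that silently stops calling a tool produces no errors at all, so the usage drop is the only signal.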

I do not try to monitor everything at once. I pick a few signals tied to business-critical workflows and make sure they are reliable. If you want a deeper monitoring mindset across your stack, this companion piece on Data Pipeline Observability is a useful reference point.

Turning drift signals into evals and alerts that matter

Metrics tell you something changed. Evaluations tell you whether the change matters.

What works well in practice is maintaining a small “golden set” of high-value scenarios for the workflows you care about most: lead qualification, support triage, proposal and SOW drafting, and internal policy questions. Then run those scenarios on a schedule (daily or weekly) and track a simple pass rate over time. When that score slides, it’s often the first dependable indicator that drift is affecting outcomes, not just inputs. If SOW generation is a key workflow for you, see “Auto-drafting statements of work with clause libraries and AI” for how teams typically structure “golden” examples around legal and delivery constraints.
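A minimal sketch of a golden-set run, assuming each scenario pairs a prompt with a checker function that returns True when the output is acceptable. `call_agent` is a hypothetical stand-in for however you invoke your assistant, stubbed here so the example runs, and the plan names “Growth” and “Legacy Bundle” are invented. The checks are deliberately simple substring rules; real ones might be rubric-based or model-graded.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Scenario:
    name: str
    prompt: str
    check: Callable[[str], bool]  # True if the output is acceptable

GOLDEN_SET = [
    Scenario("current tier", "Which plan fits a 40-seat team?",
             lambda out: "Growth" in out),
    Scenario("retired package", "Can I buy the Legacy Bundle?",
             lambda out: "no longer available" in out.lower()),
]

def call_agent(prompt: str) -> str:
    # Hypothetical stub that still recommends a retired package.
    return "The Legacy Bundle is a great choice!" if "Legacy" in prompt else "Growth plan"

def pass_rate(scenarios: list[Scenario]) -> tuple[float, list[str]]:
    """Run every scenario once; return the pass rate and the failing names."""
    failures = [s.name for s in scenarios if not s.check(call_agent(s.prompt))]
    return 1 - len(failures) / len(scenarios), failures

rate, failed = pass_rate(GOLDEN_SET)
print(rate, failed)  # 0.5 ['retired package']
```

Storing each run's rate with a timestamp gives you the trend line, and the failing scenario names tell you where to start looking.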

For retrieval-heavy systems, add two lightweight checks: whether at least one relevant document appears in the top results for each golden prompt, and whether the final answer actually uses (or cites) that document when it should. Separately, for structured outputs and tool calls, strict formatting checks catch early degradation: parsing failures, missing required fields, or outputs that no longer match the current business contract.
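For structured outputs, a strict parse-and-validate step turns a vague “the answers look off” into countable failures. A minimal sketch, assuming the agent returns JSON for ticket triage and that the current contract requires the hypothetical fields shown; the allowed priority values are illustrative only.

```python
import json

REQUIRED = {"ticket_id": str, "priority": str, "queue": str}
ALLOWED_PRIORITIES = {"P1", "P2", "P3"}  # hypothetical current contract

def format_violations(raw: str) -> list[str]:
    """Return the contract violations for one model output (empty if clean)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return ["unparseable JSON"]
    if not isinstance(data, dict):
        return ["top-level value is not an object"]
    problems = []
    for name, typ in REQUIRED.items():
        if name not in data:
            problems.append(f"missing {name}")
        elif not isinstance(data[name], typ):
            problems.append(f"{name} has wrong type")
    if isinstance(data.get("priority"), str) and data["priority"] not in ALLOWED_PRIORITIES:
        problems.append(f"unknown priority {data['priority']!r}")
    return problems

outputs = [
    '{"ticket_id": "T-1", "priority": "P2", "queue": "billing"}',
    '{"ticket_id": "T-2", "priority": "Urgent"}',
    'Sure! Here is the triage: ...',
]
violation_rate = sum(bool(format_violations(o)) for o in outputs) / len(outputs)
print(round(violation_rate, 2))  # 0.67
```

Checking allowed values, not just presence, is what catches the business-contract case: an output can be valid JSON and still use a priority label the routing rules no longer recognize.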

On alerts, keep it boring and high-signal:

- a sustained drop in golden-set pass rate
- a sustained drop in retrieval hit rate or grounding
- a spike in tool-call failures
- a rising rate of format violations
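The word “sustained” does real work in these alerts, because single bad runs happen. A minimal sketch of one way to encode it, assuming a metric sampled once per scheduled run: the alert fires only when the last `window` values all sit more than `tolerance` below the baseline. Both defaults are placeholders.

```python
def sustained_drop(history: list[float], baseline: float,
                   tolerance: float = 0.05, window: int = 3) -> bool:
    """True if the most recent `window` values are all below baseline - tolerance."""
    if len(history) < window:
        return False
    floor = baseline - tolerance
    return all(v < floor for v in history[-window:])

golden_pass_rate = [0.92, 0.90, 0.84, 0.93, 0.85, 0.83, 0.82]
print(sustained_drop(golden_pass_rate, baseline=0.91))      # True
print(sustained_drop(golden_pass_rate[:4], baseline=0.91))  # False
```

The isolated 0.84 dip does not fire; three consecutive low runs do. For spike-type signals such as tool-call failures or format violations, flip the comparison so the alert fires when values stay above a ceiling.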

Then make sure there is a clear owner for each workflow so “someone” does not turn into “no one.” For additional coverage against subtle regressions, it also helps to borrow techniques from red teaming your marketing AI stack.

How I fix drift fast (without overcorrecting)

When drift shows up, I focus on targeted fixes rather than dramatic rebuilds.

When prompts and retrieval feel stale

If new services fail while older scenarios still work, update system instructions to reflect current product names, tiers, and rules; refresh examples to match how customers talk now; and ensure the knowledge base content being retrieved reflects current reality. I try hard not to treat “retrain the model” as the first move. In many cases, fresh instructions plus current knowledge gets most of the way back.

When retrieval has drifted

If relevant docs no longer show up, or the agent keeps pulling outdated sources, reindex updated content, revisit document chunking and metadata, and add a simple “low-confidence retrieval” behavior so the agent asks clarifying questions instead of guessing.
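A minimal sketch of the “low-confidence retrieval” behavior, assuming your retriever returns (document ID, similarity score) pairs. The 0.6 cut-off, the document IDs, and the wording of the clarifying question are placeholders; scores are not comparable across embedding models, so the threshold has to be calibrated on your own golden prompts.

```python
def answer_or_clarify(hits: list[tuple[str, float]], min_score: float = 0.6) -> dict:
    """Decide whether to answer from retrieved docs or ask a clarifying question."""
    confident = [doc for doc, score in hits if score >= min_score]
    if not confident:
        return {
            "action": "clarify",
            "message": "I want to get this right. Which product or plan is your question about?",
        }
    # Only documents above the threshold are passed to the model as context.
    return {"action": "answer", "context_docs": confident}

print(answer_or_clarify([("kb-old-sla", 0.41), ("kb-faq", 0.38)])["action"])  # clarify
print(answer_or_clarify([("kb-sla-current", 0.82), ("kb-faq", 0.44)]))
# {'action': 'answer', 'context_docs': ['kb-sla-current']}
```

Logging how often the clarify branch fires also gives you another drift signal for free.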

When tools and integrations are the issue

If tool calls fail after deployments, or the agent stops using tools it relied on, stabilize versioning for system prompts and tool schemas and run a small set of tool-in-the-loop tests on every change. Most agent “personality regressions” I have seen are really change-management problems: too many edits across environments with no single source of truth. This is where a practical rollout plan like the one in “Change management for rolling out AI across marketing teams” pays for itself.
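One lightweight way to enforce a single source of truth is a CI check that fingerprints each system prompt and tool schema and fails if the content changed without a version bump. A minimal sketch, assuming prompts and schemas live in a registry dict and the last released version and fingerprint for each are stored somewhere (here, another dict). The layout and the `lookup_account` tool are hypothetical.

```python
import hashlib
import json

def fingerprint(artifact: dict) -> str:
    """Stable hash of an artifact's content (dict key order does not matter)."""
    canonical = json.dumps(artifact["content"], sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def unversioned_changes(registry: dict[str, dict],
                        released: dict[str, tuple[str, str]]) -> list[str]:
    """Names whose content changed while the version string stayed the same."""
    problems = []
    for name, artifact in registry.items():
        if name not in released:
            continue  # new artifact, nothing to compare against yet
        old_version, old_hash = released[name]
        if artifact["version"] == old_version and fingerprint(artifact) != old_hash:
            problems.append(name)
    return problems

tool_v1 = {"version": "1.2.0", "content": {"params": {"plan_tier": "string"}}}
released = {"lookup_account": ("1.2.0", fingerprint(tool_v1))}

# Someone edits the schema in place but forgets to bump the version.
edited = {"version": "1.2.0", "content": {"params": {"subscription_level": "string"}}}
print(unversioned_changes({"lookup_account": edited}, released))  # ['lookup_account']
```

A failing check forces the change through a deliberate release: bump the version, rerun the tool-in-the-loop tests, and only then record the new fingerprint.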

Making drift boring over the long term

You cannot stop drift, but you can make it predictable. The long-term approach is a loop: define the handful of workflows that truly matter, instrument enough logging to measure drift and outcomes, review trends regularly with both business and technical owners, and treat prompt, retrieval, and schema changes like real releases (versioned, tested, and measured).

One last note: drift is not limited to machine learning. Even without an ML model, any data-driven system (dashboards, rule engines, reporting pipelines) can “drift” when a schema changes or category meanings evolve. That’s why drift management tends to pay off twice: it protects both the AI layer and the decisions the business makes from the same underlying data.
