If you run a B2B service company, you want speed, predictability, and healthy margins. An AI prompt library turns your team’s know-how into repeatable building blocks for today’s leading models. The result is less rework, more consistency, and measurable ROI that I can show on a dashboard instead of just sensing it in my gut. Quality and speed can look like a tradeoff, and without structure they often are. With prompt standardization and clear ownership, I get both.

What I mean by an AI prompt library

An AI prompt library is a shared, searchable hub of approved prompt templates with context, usage rules, and owners. It becomes my source of truth for how to talk to AI systems to get on-brand, reliable outputs in less time. For CEOs and operators in service firms, it pays off in three ways: it saves hours on repeat tasks, it locks in standard tone and structure, and it cuts errors by making every prompt traceable and testable.

TL;DR

- What it is: A central, approved set of prompts and context blocks, tracked with metadata and version control, so teams get consistent, high-quality outputs from AI.

- Who it’s for: B2B service teams across sales, marketing, client delivery, ops, and finance.

- Fast wins in 30–60 days: 30–50% less time on repeat tasks, fewer revisions, faster onboarding, cleaner compliance (ranges vary by task and baseline).

- What this guide covers: Benefits, prompt categories, how I build a prompt library, governance, tooling approach, and FAQs.

A strong library adds accountability that many leaders crave after rough agency experiences. Each prompt has an owner, an approval workflow, and a scorecard. That means reliable outputs and a way to point to where things improved. Instead of guessing which prompt helped a proposal convert or why campaign copy drifted off voice, I can trace it.

Benefits I can measure quickly

The benefits are simple to state and simple to measure. I anchor them in baselines so improvements are visible and defensible.

- Time saved on repeat tasks: 30–50% for activities like briefs, emails, summaries, ad variants, and weekly reports. I compare baseline minutes per task with minutes per task after the library goes live.

- Consistent brand voice: A shared tone pack and prompt templates make copy feel the same across channels and teams.

- Fewer errors: Standard “do” and “don’t” rules reduce compliance misses and factual slip-ups.

- Faster onboarding: New hires learn from the best prompts and see how to use them with examples and context blocks.

- Compliance-ready outputs: Prompts include disclaimers, sensitivity checks, and banned content rules.

- Higher throughput: More tasks completed per person per week with less micromanagement.

Suggested KPIs for an executive view

- Average minutes per task before vs. after

- Usage rate by prompt: unique users, runs per week

- Top performers: prompts linked to conversion or engagement gains

- Retirement rate: underperformers removed or revised each month

- Revision count: how often outputs need edits to reach final

- Coverage: percent of common tasks with approved prompts

These metrics make the prompt library a managed asset, not a pile of ad-hoc chats. With a light dashboard, I can see where the library drives results and where it needs work.
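Once runs are logged, the before-and-after KPIs come down to simple arithmetic. The Python sketch below assumes a hypothetical log in which each run records the task, minutes spent, revision count, and whether it used the library. The field names, helper functions, and sample numbers are illustrative and not taken from any specific tool.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskRun:
    """One logged run of a repeat task (hypothetical log schema)."""
    task: str
    minutes: float
    revisions: int
    used_library: bool  # False = baseline period, True = after rollout


def _split(runs: list[TaskRun], task: str) -> tuple[list[TaskRun], list[TaskRun]]:
    """Separate baseline runs from post-rollout runs for one task."""
    before = [r for r in runs if r.task == task and not r.used_library]
    after = [r for r in runs if r.task == task and r.used_library]
    if not before or not after:
        raise ValueError(f"need baseline and post-rollout runs for {task!r}")
    return before, after


def time_saved_pct(runs: list[TaskRun], task: str) -> float:
    """Percent reduction in average minutes per task."""
    before, after = _split(runs, task)
    base = mean(r.minutes for r in before)
    return round(100 * (base - mean(r.minutes for r in after)) / base, 1)


def revision_change(runs: list[TaskRun], task: str) -> tuple[float, float]:
    """Average revisions per output, before vs. after."""
    before, after = _split(runs, task)
    return mean(r.revisions for r in before), mean(r.revisions for r in after)


def coverage_pct(common_tasks: set[str], tasks_with_prompts: set[str]) -> float:
    """Share of common tasks that have at least one approved prompt."""
    if not common_tasks:
        return 0.0
    return round(100 * len(common_tasks & tasks_with_prompts) / len(common_tasks), 1)


# Illustrative numbers only.
runs = [
    TaskRun("weekly report", 60, 3, False),
    TaskRun("weekly report", 40, 3, False),
    TaskRun("weekly report", 30, 1, True),
    TaskRun("weekly report", 20, 1, True),
]
print(time_saved_pct(runs, "weekly report"))   # 50.0
print(revision_change(runs, "weekly report"))  # (3, 1)
print(coverage_pct({"brief", "email", "report", "summary"}, {"brief", "email"}))  # 50.0
```

The same functions can feed a light dashboard. Usage and retirement rates would need their own fields in the log.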

How I organize prompts so people can find them

To keep a B2B prompt library useful, I organize it the way teams actually work.

By function

- Sales outreach

- Demand gen

- Content and SEO

- Client delivery

- Customer success

- HR and recruiting

- Finance and ops

- Compliance

By task

- Briefs

- Emails

- Reports

- Summaries

- Ideation

- QA

- Research

By modality

- Text

- Tables

- Code snippets

- Slides

Tagging model

- Format: [Function] - [Task] - [Audience] - vX - Owner

- Example: Content and SEO - Brief - CFOs - v1.3 - A.Singh

Why this layout works: functions mirror the org chart, tasks mirror how people search, and modalities reveal output style. Tags make search and reuse fast. If I’m starting from a messy set of notes, I tag the top 50 prompts using this model. The payoff shows up when someone finds the right prompt in seconds.
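Because the tag is a fixed five-field string, it can be parsed and validated mechanically, which keeps names consistent as the library grows. This is a minimal Python sketch. It assumes the " - " separator never appears inside a field, which holds for the functions listed above, and that "vX" means either a major version or a major.minor version. `PromptTag`, `parse_tag`, and `find` are hypothetical names.

```python
import re
from dataclasses import dataclass

SEP = " - "
VERSION_RE = re.compile(r"v(\d+)(?:\.(\d+))?")


@dataclass(frozen=True)
class PromptTag:
    function: str
    task: str
    audience: str
    version: tuple[int, int]  # (major, minor)
    owner: str

    def __str__(self) -> str:
        major, minor = self.version
        return SEP.join([self.function, self.task, self.audience,
                         f"v{major}.{minor}", self.owner])


def parse_tag(tag: str) -> PromptTag:
    """Parse '[Function] - [Task] - [Audience] - vX - Owner' into fields."""
    parts = [p.strip() for p in tag.split(SEP)]
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {tag!r}")
    function, task, audience, version, owner = parts
    match = VERSION_RE.fullmatch(version)
    if match is None:
        raise ValueError(f"bad version field: {version!r}")
    major, minor = int(match.group(1)), int(match.group(2) or 0)
    return PromptTag(function, task, audience, (major, minor), owner)


def find(tags: list[PromptTag], **filters: str) -> list[PromptTag]:
    """Return tags whose fields equal every given filter, e.g. task='Brief'."""
    return [t for t in tags if all(getattr(t, k) == v for k, v in filters.items())]


tag = parse_tag("Content and SEO - Brief - CFOs - v1.3 - A.Singh")
print(tag.version)                                                # (1, 3)
print(find([tag], function="Content and SEO", audience="CFOs") == [tag])  # True
```

A bare major version such as "v2" is normalized to "v2.0" when the tag is written back out.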

How I build and roll out a prompt library

Here is the step-by-step plan I use. It works for B2B teams and respects how leaders track ROI.

- Identify repetitive use cases with clear ROI. I pull the top 10 recurring tasks from the last 60 days (proposal sections, prospecting emails, monthly performance summaries, keyword briefs, QBR decks, onboarding messages). I estimate time per task and pick five where time savings or fewer errors matter most.

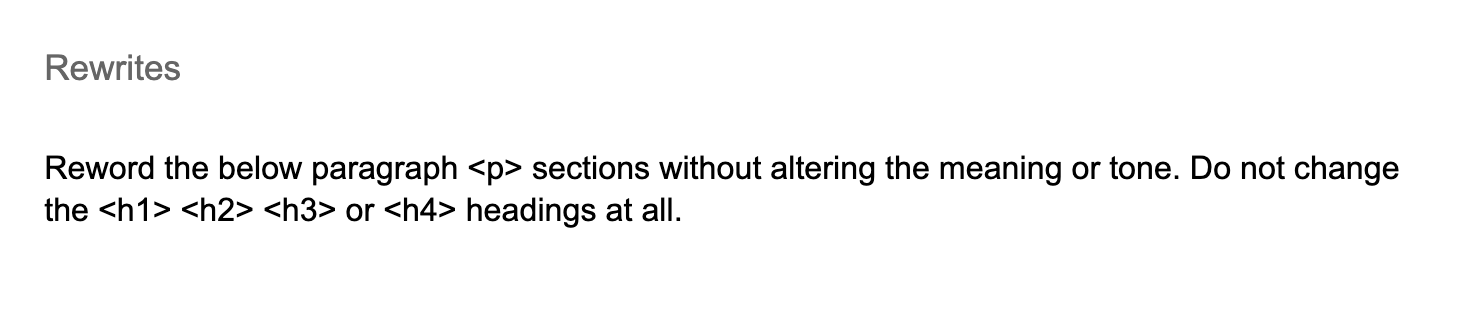

- Draft prompts plus context blocks. For each use case, I write a base prompt and separate context blocks. I include role, audience, constraints, variables, tone, and specific “do or don’t” rules. I keep context reusable (brand voice summary, buyer personas, compliance notes, template structures). Separating prompts from context multiplies reuse and avoids duplication.

- Test across target models and score results. I run the same prompt against my main models, capture inputs and outputs, and score quality with a simple rubric: relevance, tone match, factual checks, and edit effort. I record which model and parameters gave the best output for that task.

- Add prompt metadata. Each prompt carries key fields: owner, version, model, parameters, length range, tone, variables, “do or don’t,” target audience, and related assets. Metadata is how I get search, audit, and training right - and it becomes the backbone of performance tracking.

- Naming convention and version control. I use a clear naming pattern that matches the tagging model (e.g., [Function] - [Task] - [Audience] - v1.1 - Owner) and record the changes between versions in a change log. That is version control in plain language.

- Centralize in a single source of truth. I pick one system and stick with it. Search, tags, and relations connect prompts with context blocks, owners, and examples. I avoid shadow libraries in personal docs so everyone uses the same hub.

- Permissions and approvals. I define who can propose, edit, approve, and publish. Editors draft. Approvers review for quality and compliance. Publishing moves a prompt to the approved folder and marks it as current.

- Roll out with lightweight training. I record a short screen video that shows how to find, use, and adapt prompts. I share a one-pager on naming, tags, and owners. I ask managers to pick two prompts and use them in their next task so adoption starts immediately.

- Measure and iterate. I set a review cadence - weekly for high-use prompts, monthly for others. I track usage, edit rates, and outcomes. I retire stale prompts and refresh context blocks when brand voice or product messaging shifts.
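The drafting, metadata, and versioning steps above map naturally onto a small data structure. The Python sketch below shows one way to model it, using `$variable` placeholders from the standard library's `string.Template`. The record fields follow the metadata list. The model name, block names, rule format, and the `render` and `bump_version` helpers are all hypothetical.

```python
from dataclasses import dataclass, field
from string import Template


@dataclass
class PromptRecord:
    name: str
    owner: str
    version: str                  # "vMAJOR.MINOR"
    model: str                    # hypothetical model identifier
    parameters: dict
    audience: str
    template: str                 # body with $variable placeholders
    context_blocks: list[str]     # names of reusable blocks to prepend
    dos: list[str] = field(default_factory=list)
    donts: list[str] = field(default_factory=list)
    change_log: list[str] = field(default_factory=list)


def render(record: PromptRecord, blocks: dict[str, str], variables: dict[str, str]) -> str:
    """Assemble context blocks, do/don't rules, and the filled-in template."""
    context = "\n\n".join(blocks[name] for name in record.context_blocks)
    rules = "\n".join([f"Do: {d}" for d in record.dos] +
                      [f"Don't: {d}" for d in record.donts])
    # substitute() raises KeyError if any placeholder is left unfilled.
    body = Template(record.template).substitute(variables)
    return "\n\n".join(part for part in (context, rules, body) if part)


def bump_version(record: PromptRecord, author: str, note: str, major: bool = False) -> None:
    """Increment the version and append a plain-language change-log entry."""
    major_n, minor_n = (int(x) for x in record.version.lstrip("v").split("."))
    record.version = f"v{major_n + 1}.0" if major else f"v{major_n}.{minor_n + 1}"
    record.change_log.append(f"{record.version} by {author}: {note}")


blocks = {"brand-voice": "Voice: plain, confident, no jargon."}
email = PromptRecord(
    name="Sales outreach - Emails - CFOs",
    owner="A.Singh", version="v1.1", model="model-a",
    parameters={"temperature": 0.4}, audience="CFOs",
    template="Write a $length-word prospecting email to a $persona about $offer.",
    context_blocks=["brand-voice"],
    dos=["End with one clear call to action"],
    donts=["Mention client names"],
)
print(render(email, blocks, {"length": "120", "persona": "CFO", "offer": "audit prep"}))
bump_version(email, "A.Singh", "Tightened the call to action")
print(email.version)  # v1.2
```

Keeping context blocks in their own dictionary means a brand-voice update flows into every prompt that references it. Nothing needs to be copied.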

I often pilot fast: choose one function and one repeat task (like outreach emails), draft 10 prompts with context blocks, ship to three users, track runs and edit time for a week, keep the top five, revise the rest, and publish v1.
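The rubric scores from the testing step can drive the pilot's decision to keep the top five prompts and revise the rest. This minimal sketch assumes each rubric dimension is scored 1 to 5, with higher always better, so a 5 on edit effort means almost no edits. It also uses average edit minutes to break ties. Both the scale and the tiebreak are illustrative assumptions rather than a fixed standard, and `triage` and `best_model` are hypothetical names.

```python
from dataclasses import dataclass
from statistics import mean

# Rubric from the testing step; each dimension scored 1-5, higher is better.
RUBRIC = ("relevance", "tone_match", "factual", "edit_effort")


@dataclass
class Trial:
    prompt: str
    model: str
    scores: dict[str, int]
    edit_minutes: float


def trial_score(t: Trial) -> float:
    """Average rubric score for one run; rejects incomplete scorecards."""
    missing = [k for k in RUBRIC if k not in t.scores]
    if missing:
        raise ValueError(f"{t.prompt}: missing scores for {missing}")
    return mean(t.scores[k] for k in RUBRIC)


def triage(trials: list[Trial], keep: int = 5) -> tuple[list[str], list[str]]:
    """Rank prompts by average score (ties: fewer edit minutes wins)."""
    scores: dict[str, list[float]] = {}
    edits: dict[str, list[float]] = {}
    for t in trials:
        scores.setdefault(t.prompt, []).append(trial_score(t))
        edits.setdefault(t.prompt, []).append(t.edit_minutes)
    ranked = sorted(scores, key=lambda p: (-mean(scores[p]), mean(edits[p])))
    return ranked[:keep], ranked[keep:]


def best_model(trials: list[Trial], prompt: str) -> str:
    """Model that produced the highest-scoring run for a prompt."""
    return max((t for t in trials if t.prompt == prompt), key=trial_score).model


def scored(prompt: str, model: str, score: int, minutes: float) -> Trial:
    """Helper for the example: same score on every rubric dimension."""
    return Trial(prompt, model, dict.fromkeys(RUBRIC, score), minutes)


# Illustrative pilot runs, not real results.
trials = [
    scored("email-a", "model-a", 5, 2),
    scored("email-b", "model-a", 4, 6),
    scored("email-c", "model-b", 4, 3),
    scored("email-b", "model-b", 3, 8),
]
keep, revise = triage(trials, keep=2)
print(keep, revise)                   # ['email-a', 'email-c'] ['email-b']
print(best_model(trials, "email-b"))  # model-a
```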

Governance that actually sticks

Governance sounds heavy. It does not need to be. What matters is clear ownership, controls that match your risk profile, and a cadence that keeps content fresh.

Ownership and roles (RACI-style)

- Prompt owner: drafts, maintains, and updates the prompt

- Approver: senior lead who checks quality and compliance

- Contributors: team members who suggest changes

- Informed: stakeholders who rely on outputs

Permissions and workflow

- Viewer: can use approved prompts

- Editor: can draft and propose changes

- Approver: can move drafts to approved

- Admin: manages structure, tags, and users

- Workflow: propose → peer review (optional) → quality gate → publish → monitor
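Together, the roles and the workflow amount to a small state machine in which each move between stages requires a minimum role. This is a minimal Python sketch. It assumes roles are cumulative, so an approver can do anything an editor can and an admin can do both, which the list above does not state outright. Monitoring is treated as ongoing after publish rather than as a separate state. `State`, `ROLE_RANK`, `TRANSITIONS`, and `advance` are hypothetical names.

```python
from enum import Enum


class State(Enum):
    PROPOSED = "proposed"
    PEER_REVIEW = "peer_review"
    QUALITY_GATE = "quality_gate"
    PUBLISHED = "published"


# Assumption: roles are cumulative, so a higher rank inherits lower-rank rights.
ROLE_RANK = {"viewer": 0, "editor": 1, "approver": 2, "admin": 3}

# Allowed moves and the minimum role each one requires.
TRANSITIONS = {
    (State.PROPOSED, State.PEER_REVIEW): "editor",
    (State.PROPOSED, State.QUALITY_GATE): "editor",     # peer review is optional
    (State.PEER_REVIEW, State.QUALITY_GATE): "editor",
    (State.QUALITY_GATE, State.PUBLISHED): "approver",
    (State.QUALITY_GATE, State.PROPOSED): "approver",   # sent back for rework
}


def advance(current: State, target: State, role: str) -> State:
    """Move a prompt to the next stage if the transition and role allow it."""
    required = TRANSITIONS.get((current, target))
    if required is None:
        raise ValueError(f"no transition {current.value} -> {target.value}")
    if ROLE_RANK[role] < ROLE_RANK[required]:
        raise PermissionError(f"{role} cannot move {current.value} -> {target.value}")
    return target


state = advance(State.PROPOSED, State.QUALITY_GATE, "editor")  # skip optional review
state = advance(state, State.PUBLISHED, "approver")
print(state.value)  # published
```

Viewers have no transitions at all, which matches their read-only role.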

Model usage rules and data policy

- Approved models and endpoints by task type

- Parameters and temperature ranges per task

- When to use system messages vs. user prompts

- Data rules: no client names or confidential details unless anonymized and permitted; store outputs and logs per retention policy
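Model usage rules are easiest to enforce when they live in a config that every run is checked against. The Python sketch below assumes a hypothetical policy table. The task types, the model names `model-a` and `model-b`, and the temperature ranges are placeholders for your own approved list. The substring check for confidential terms is a deliberately simple stand-in for a real data-protection control.

```python
# Hypothetical policy table: approved models and temperature range per task type.
POLICY = {
    "client_email": {"models": {"model-a", "model-b"}, "temperature": (0.2, 0.5)},
    "ideation": {"models": {"model-a"}, "temperature": (0.7, 1.0)},
}


def check_run(task_type: str, model: str, temperature: float,
              prompt_text: str, confidential_terms: list[str]) -> list[str]:
    """Return policy violations for a planned run (empty list = allowed)."""
    rules = POLICY.get(task_type)
    if rules is None:
        return [f"no approved policy for task type {task_type!r}"]
    problems = []
    if model not in rules["models"]:
        problems.append(f"model {model!r} is not approved for {task_type}")
    low, high = rules["temperature"]
    if not low <= temperature <= high:
        problems.append(f"temperature {temperature} is outside {low}-{high}")
    # Naive case-insensitive match; these terms should be anonymized first.
    text = prompt_text.lower()
    problems += [f"confidential term present: {term!r}"
                 for term in confidential_terms if term.lower() in text]
    return problems


print(check_run("client_email", "model-a", 0.3, "Draft a renewal note", ["Acme Corp"]))  # []
```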

Quality gates and bias checks

- Accuracy: passes factual checks or cites sources

- Tone: matches brand voice and audience

- Compliance: meets policy rules

- Efficiency: reduces edit time by an agreed percentage

- Performance: meets baseline conversion or engagement where relevant

- Red-team high-impact prompts and test with a small “golden” dataset of edge cases to catch unintended bias
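Writing the quality gates down as explicit pass/fail checks helps approvers apply the same bar every time. This sketch makes three assumptions. First, a reviewer has already judged accuracy, tone, and compliance and recorded each as true or false. Second, 20% stands in as a placeholder for the agreed edit-time reduction. Third, a prompt passes the golden dataset only if every edge case clears every gate. `GateResult`, `failed_gates`, and `passes_golden_set` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GateResult:
    """Reviewer judgments and measurements for one output (hypothetical schema)."""
    accurate: bool
    on_tone: bool
    compliant: bool
    baseline_edit_minutes: float
    edit_minutes: float
    conversion: Optional[float] = None          # only where relevant
    baseline_conversion: Optional[float] = None


def failed_gates(r: GateResult, min_edit_reduction_pct: float = 20.0) -> list[str]:
    """Return the names of the gates this output fails (empty list = pass)."""
    if r.baseline_edit_minutes <= 0:
        raise ValueError("baseline edit minutes must be positive")
    fails = []
    if not r.accurate:
        fails.append("accuracy")
    if not r.on_tone:
        fails.append("tone")
    if not r.compliant:
        fails.append("compliance")
    reduction = 100 * (r.baseline_edit_minutes - r.edit_minutes) / r.baseline_edit_minutes
    if reduction < min_edit_reduction_pct:
        fails.append("efficiency")
    if (r.conversion is not None and r.baseline_conversion is not None
            and r.conversion < r.baseline_conversion):
        fails.append("performance")
    return fails


def passes_golden_set(results: list[GateResult], min_edit_reduction_pct: float = 20.0) -> bool:
    """Approve only if every golden edge case clears every gate."""
    return all(not failed_gates(r, min_edit_reduction_pct) for r in results)


print(failed_gates(GateResult(True, False, True, 10, 9)))  # ['tone', 'efficiency']
```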

Cadence, change logs, and deprecation

- High-use prompts: weekly checks; medium-use: monthly; full sweep: quarterly

- Keep change logs: who changed what and why

- Flag outdated prompts as deprecated, keep them available for 30 days in case a rollback is needed, then archive them
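The review cadence and the 30-day rollback rule come down to date arithmetic. This minimal sketch assumes three interpretations that the rule itself does not specify. "Monthly" means 30 days. The quarterly sweep is about 91 days. Prompts outside the high- and medium-use tiers are reviewed only in the quarterly sweep. `next_review` and `lifecycle_status` are hypothetical names.

```python
from datetime import date, timedelta
from typing import Optional

# Review intervals by usage tier; "monthly" approximated as 30 days.
REVIEW_INTERVAL = {"high": timedelta(weeks=1), "medium": timedelta(days=30)}
QUARTERLY_SWEEP = timedelta(days=91)   # assumed catch-all for low-use prompts
ROLLBACK_WINDOW = timedelta(days=30)


def next_review(last_review: date, usage_tier: str) -> date:
    """Date the prompt is next due for a check."""
    return last_review + REVIEW_INTERVAL.get(usage_tier, QUARTERLY_SWEEP)


def lifecycle_status(deprecated_on: Optional[date], today: date) -> str:
    """'active', 'deprecated' (still restorable), or 'archive'."""
    if deprecated_on is None:
        return "active"
    if today - deprecated_on <= ROLLBACK_WINDOW:
        return "deprecated"
    return "archive"


print(next_review(date(2024, 1, 1), "high"))                  # 2024-01-08
print(lifecycle_status(date(2024, 1, 1), date(2024, 2, 15)))  # archive
```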

Risks and quick mitigations

- Prompt sprawl: enforce one source of truth and tags

- Stale content: schedule reviews and auto-expire old versions

- Shadow libraries: restrict private folders and surface the main hub inside daily tools

- Compliance breaches: add explicit “do or don’t” blocks and require checks before publish

Tooling architecture without vendor lock-in

I use two layers: a knowledge hub for documentation and approvals, and an insertion layer that gets prompts into daily workflows fast. I avoid over-indexing on any single vendor and make sure everything is exportable.

Selection criteria

- Strong search and filtering, including tags and full text

- Relations and templates for repeat fields

- Variables and placeholders for quick customization

- Approvals, versioning, and audit trails

- Analytics for prompt performance tracking

- Access control and SSO

- Browser-level surfacing inside email, CRM, CMS, and support tools

- Integrations with team chat, browsers, and core data sources

- Easy export so nothing is locked in

Practical tool examples

Knowledge hubs: Notion, Coda, Confluence, Slab, Guru, Airtable. Insertion layer and text expanders: Text Blaze, Briskine, Typedesk, Magical (see G2: Text expanders category for comparisons).

Common pitfalls

- Tool sprawl that splits the source of truth

- No standard fields for metadata

- Weak permissions and unclear ownership

- No analytics, so value cannot be demonstrated

FAQs

How often should I update a prompt library?

I tie updates to usage and performance. High-use prompts get a weekly check, others monthly. I schedule a quarterly sweep to retire stale content and refresh context blocks. That cadence keeps quality high without adding churn.

Can a prompt library help a small team?

Yes. Small teams feel the gains fast because time saved on repeat tasks compounds. Even a lightweight hub with shared templates increases reuse rates and reduces edit time within a few weeks.

How do I test prompts in a prompt library?

I run A/B tests with a small golden dataset and score outputs for accuracy, tone, and edit effort. I track results by model and parameters in metadata. Prompts that hit the bar get approved; others get revised or retired.

How do I keep a prompt library organized over time?

Clear naming, tags, and owners; one source of truth; defined permission levels; and a deprecation rule. The taxonomy and governance sections above outline a setup that scales.

Conclusion

Standardization, speed, and accountability are the heart of a healthy AI program in a service business. An AI prompt library delivers all three by trimming the drag of repetitive work, locking in a consistent voice, and tying outcomes to clear owners. I spend less time checking copy and more time on higher-value projects - while knowing exactly which prompts moved the needle.
