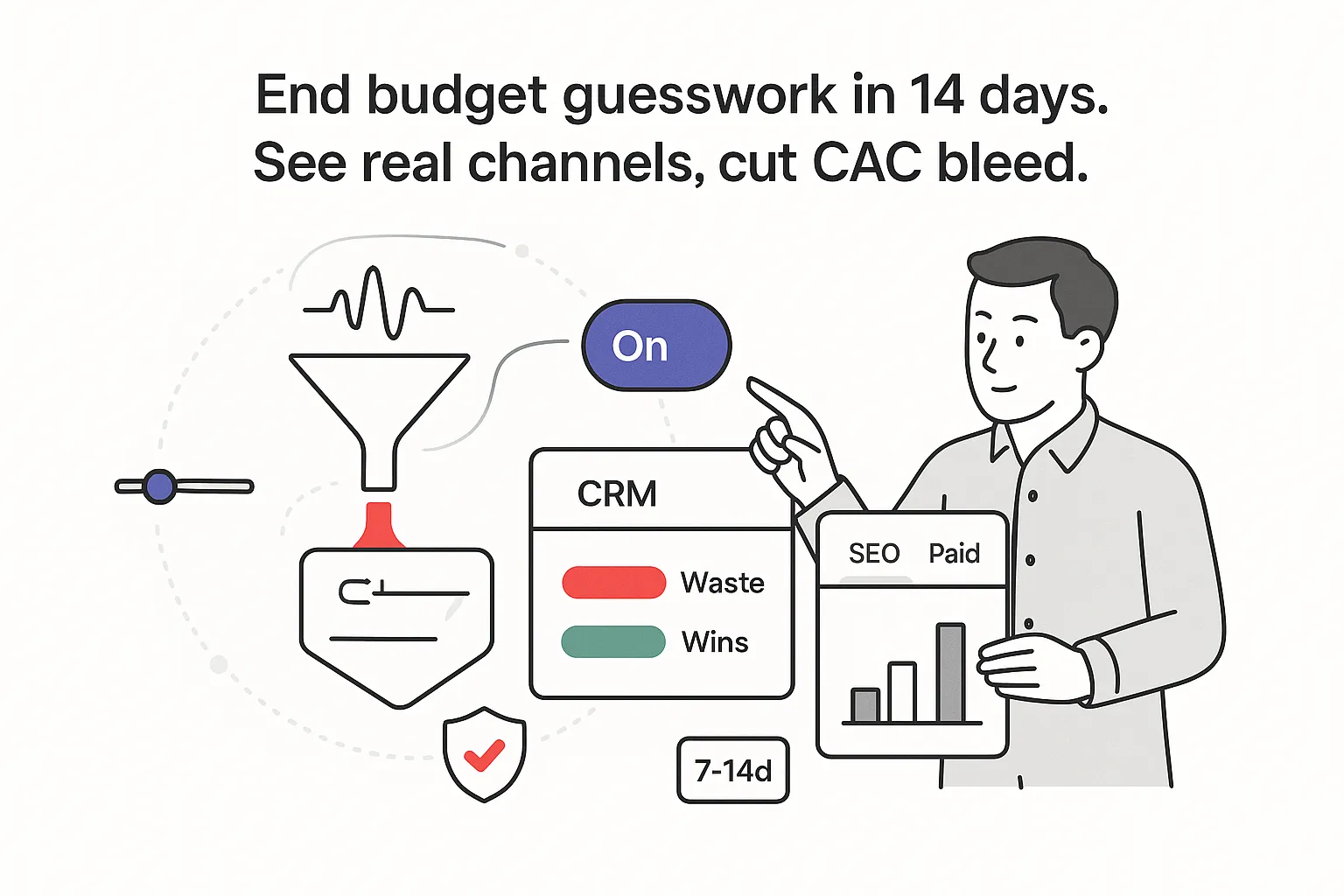

If my gut tells me the marketing mix is off, it usually is. The fastest way I’ve found to fix it is to listen to what buyers actually say - not in a brand survey, but in real conversations. When I pull call recordings into marketing attribution, I bridge the gap between instinct and clean numbers. That one shift turns fuzzy channel debates into crisp decisions: less CAC waste, more pipeline from the channels that actually move deals.

From gut feel to grounded attribution

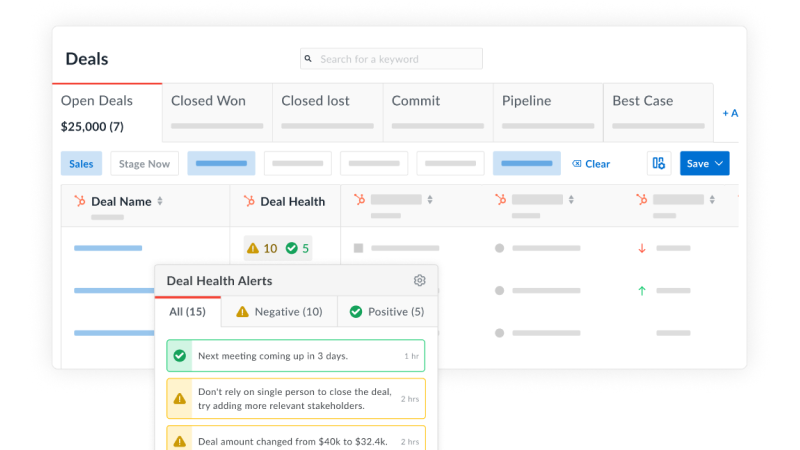

CEOs want the story tied to revenue. A simple way I get there is by capturing “How did you hear about us?” (HDYHAU) moments from sales calls and piping them into CRM fields and dashboards. It’s quick to stand up and it drives signal early. Within 7–14 days, I typically start seeing patterns that guide spend across SEO, paid search, partner, referral, podcast, and community. It isn’t perfect on day one. It is directional and concrete, and it sharpens with a week of tuning and QA.

Before I route call recordings into attribution, channel arguments feel endless. After I route them, the picture steadies. Here’s how the numbers usually shift once the HDYHAU loop is live and trustworthy (after basic guardrails and a week of QA):

- SQLs by channel: clearer splits, far fewer “unknowns,” and real visibility into “dark social” mentions (word-of-mouth via social, communities, and podcasts that rarely show up in click data)

- Pipeline dollars: mapped to first touch based on actual buyer language rather than assumed last-click

- Win rate: lift where message and audience match, often on SEO and referral

- CAC: trimmed by cutting spend on channels that drive clicks but not conversations

One early pattern keeps repeating: SEO vs. paid gets real. When answers flag “Googled you” or “found your guide,” that signals organic discovery, not a branded click. In the first month, I often reallocate 10–20% away from expensive clicks toward durable demand, with CAC stepping down as the mix shifts.

For a quick walkthrough of the workflow, see Stack & Scale.

The AI-driven workflow at a glance

I keep the system simple and observable:

- 1) Trigger by schedule or webhook

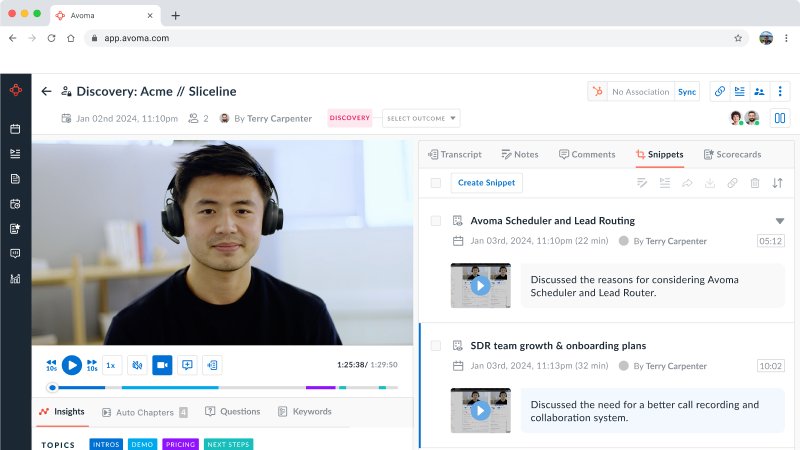

- 2) Fetch recent calls from the conversation intelligence platform

- 3) Filter by tracker or meeting type (discovery, demo)

- 4) Loop over each call with rate-limit awareness

- 5) Pull transcript with timestamps and speaker tags

- 6) Use an LLM to extract the verbatim HDYHAU Q and A

- 7) Use an LLM to classify the answer into a channel taxonomy

- 8) Sanitize JSON and validate against a strict schema

- 9) Upsert fields to the database and CRM

- 10) Activate insights through dashboards and alerts

- 11) Log errors for retries and QA sampling

I use the stack I already trust: my recorder, my iPaaS or workflow tool, my warehouse and BI layer, and my CRM. No rip-and-replace. The pattern scales cleanly from one team to many.

Making sales call recordings reliable and compliant

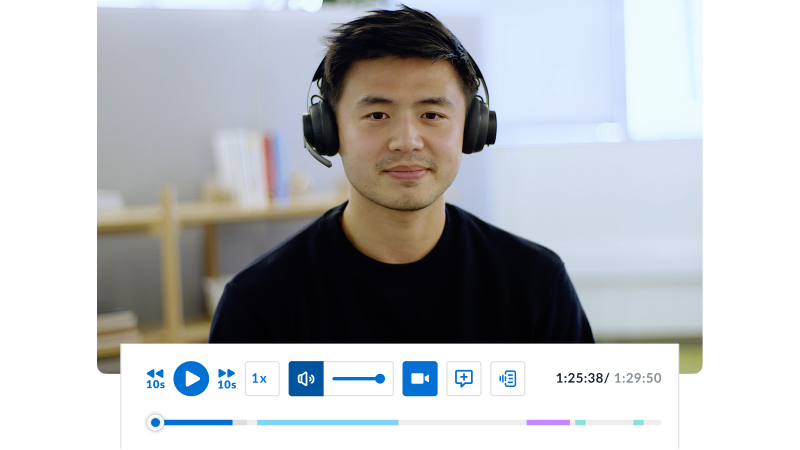

Recording and transcription should feel boring and safe - that’s the point. I lock down a few basics so the rest of the system hums.

Policy and consent:

- Enable auto-recording for inbound discovery and demos, with a clear voice prompt at the start.

- Respect two-party consent laws. Add a visual cue in the invite so nothing catches people off guard.

Storage and retention:

- Store transcripts with role-based access and a retention window aligned to policy.

- If audio must be stored, encrypt it at rest and in transit; align with standards like SOC 2 and ISO/IEC 27001.

PII handling:

- Redact emails, phone numbers, and credit card details; mask before sending text to an LLM.

- Keep a redaction step so downstream systems never see raw PII.
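A minimal sketch of such a redaction step, assuming simple regular expressions are acceptable as a first pass. The patterns and the `[EMAIL]`, `[CARD]`, and `[PHONE]` placeholders are illustrative, not a complete PII solution; a vetted redaction library or service will catch more.

```python
import re

# Illustrative patterns only. They will miss some formats and can
# over-match other digit runs (for example, ISO dates).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")         # 13 to 16 digits
PHONE_RE = re.compile(r"(?<!\w)\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Mask emails, card numbers, and phone numbers before text leaves the pipeline."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = CARD_RE.sub("[CARD]", text)    # cards first: both patterns match digit runs
    text = PHONE_RE.sub("[PHONE]", text)
    return text


print(redact("Reach me at jane.doe@example.com or +1 (415) 555-0100."))
```

Running redaction as its own function makes it easy to call once, right after the transcript is fetched, so every later step only ever sees masked text.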

Accuracy:

- Aim for a low word error rate; external mics improve accuracy more than many expect.

- Turn on speaker diarization to pinpoint exact buyer quotes.

Coverage:

- Record 100% of inbound discovery and demos to make the HDYHAU signal sturdy.

- If selling across regions, test accents and languages and tune settings accordingly.

Integrations:

- Confirm the recorder connects to the CRM for contact matching and meeting logging.

- Confirm the workflow tool can call the recorder’s API on a schedule.

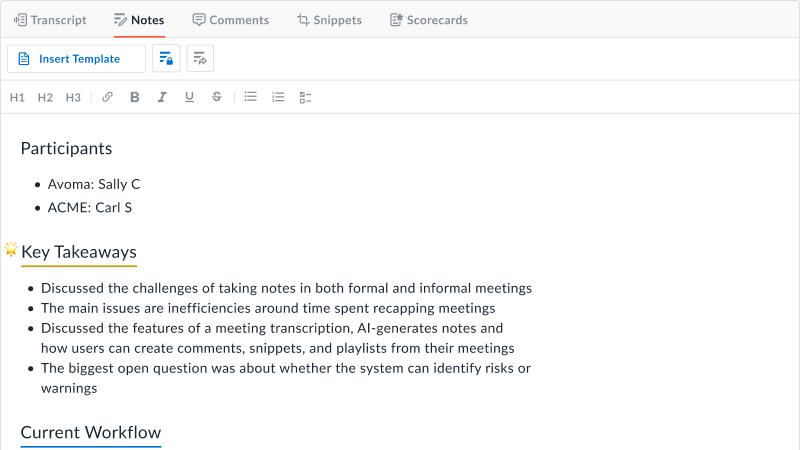

This is not busywork. Auto-recordings and AI notes reduce frantic typing. Reps listen better, follow-ups improve, and the data quality rises. The right toolset even removes the need to manually write notes so sellers can stay present.

The implementation loop, step by step

Here’s the concrete build I use to turn call recordings into marketing attribution. It mirrors standard ops patterns with a focus on HDYHAU.

1) Trigger with variables

I accept JSON like:

{"gong_tracker_id": "TRACKER_ID","from": "YYYY-MM-DDTHH:mm:ssZ","to": "YYYY-MM-DDTHH:mm:ssZ"}

These set the topic to track and the date range.
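Parsing that payload up front catches bad date ranges before any API call is made. The sketch below assumes timestamps arrive in UTC in exactly the format shown; the `Trigger` class and `parse_trigger` function are hypothetical names, and requiring a tracker id is my assumption.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone

TS_FORMAT = "%Y-%m-%dT%H:%M:%SZ"  # the YYYY-MM-DDTHH:mm:ssZ shape, UTC assumed


@dataclass(frozen=True)
class Trigger:
    tracker_id: str
    start: datetime
    end: datetime


def parse_trigger(raw: str) -> Trigger:
    """Parse the trigger JSON and reject missing ids or inverted date ranges."""
    data = json.loads(raw)
    if not data.get("gong_tracker_id"):
        raise ValueError("gong_tracker_id is required")
    start = datetime.strptime(data["from"], TS_FORMAT).replace(tzinfo=timezone.utc)
    end = datetime.strptime(data["to"], TS_FORMAT).replace(tzinfo=timezone.utc)
    if start >= end:
        raise ValueError("'from' must be earlier than 'to'")
    return Trigger(data["gong_tracker_id"], start, end)


trigger = parse_trigger(
    '{"gong_tracker_id": "abc", "from": "2024-01-01T00:00:00Z", "to": "2024-01-08T00:00:00Z"}'
)
print(trigger.tracker_id, trigger.end - trigger.start)
```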

2) Fetch recent calls via API

Call the recording platform’s endpoint for calls between from and to. Request fields for trackers, participants, titles, and links. Handle pagination beyond 100 calls. See Gong’s v2/calls/extensive for a reference endpoint.
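Pagination is where calls quietly go missing, so a sketch helps. This one assumes cursor-style paging: `fetch_page` is a hypothetical callable that wraps the HTTP request and returns a dict with a `calls` list plus a `cursor` when more pages remain. Adjust the key names to the real response.

```python
from typing import Any, Callable, Dict, Iterator, Optional

Page = Dict[str, Any]


def iter_calls(fetch_page: Callable[[Optional[str]], Page],
               max_pages: int = 1000) -> Iterator[dict]:
    """Yield every call across pages, following the cursor until it runs out."""
    cursor: Optional[str] = None
    for _ in range(max_pages):            # hard cap so a bad cursor cannot loop forever
        page = fetch_page(cursor)
        yield from page.get("calls", [])
        cursor = page.get("cursor")
        if not cursor:
            return
    raise RuntimeError("pagination exceeded max_pages")


# Usage with a stubbed two-page response (no network needed):
fake_pages = {
    None: {"calls": [{"id": "1"}, {"id": "2"}], "cursor": "p2"},
    "p2": {"calls": [{"id": "3"}]},
}
print([c["id"] for c in iter_calls(lambda cur: fake_pages[cur])])
```

Passing the fetcher in as a function keeps the paging logic testable without credentials.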

3) Filter by tracker or meeting type

Select calls that match the HDYHAU tracker or that are discovery or demo. Shape a minimal payload with id, title, start time, and URL.

4) Loop over matching calls

Process one call at a time, throttled for rate limits.
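One way to sketch the throttled loop is a small generator that pauses between items. The interval and jitter values are placeholders, not the platform's documented limits, and `throttled` is a hypothetical helper name.

```python
import random
import time
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


def throttled(items: Iterable[T], min_interval: float = 0.5, jitter: float = 0.2,
              sleep: Callable[[float], None] = time.sleep) -> Iterator[T]:
    """Yield items one at a time, pausing between them to stay under a rate limit."""
    first = True
    for item in items:
        if not first:
            # Random jitter keeps parallel workers from hitting the API in lockstep.
            sleep(min_interval + random.uniform(0, jitter))
        first = False
        yield item


for call_id in throttled(["c1", "c2", "c3"], min_interval=0.01, jitter=0.01):
    print("processing", call_id)
```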

5a) Get transcript

Pull the transcript with timestamps and speaker tags. Keep the call id tied to the text.

Example request body:

{ "filter": { "callIds": ["{{call_id}}"] } }

Reference: Gong /v2/calls/transcript.
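Before the transcript goes to a model, it helps to flatten it into one line per sentence with readable timestamps. The sketch below assumes the transcript arrives as a list of speaker blocks, each with a `speakerId` and `sentences` carrying `start` and `end` in milliseconds. That shape is my assumption, so check it against the actual response.

```python
from typing import Dict, List


def fmt_ts(ms: int) -> str:
    """Convert milliseconds from call start into HH:MM:SS."""
    total = ms // 1000
    return f"{total // 3600:02d}:{total % 3600 // 60:02d}:{total % 60:02d}"


def flatten_transcript(blocks: List[dict], speakers: Dict[str, str]) -> str:
    """Render one line per sentence as: [start-end] role: text."""
    lines = []
    for block in blocks:
        role = speakers.get(block["speakerId"], "unknown")
        for s in block["sentences"]:
            lines.append(f"[{fmt_ts(s['start'])}-{fmt_ts(s['end'])}] {role}: {s['text']}")
    return "\n".join(lines)


sample = [
    {"speakerId": "s1", "sentences": [
        {"start": 724000, "end": 728000, "text": "How did you hear about us?"}]},
    {"speakerId": "s2", "sentences": [
        {"start": 729000, "end": 738000, "text": "I found your guide on Google."}]},
]
print(flatten_transcript(sample, {"s1": "rep", "s2": "prospect"}))
```

Keeping the role and timestamp on every line is what lets the extraction step return exact quotes with start and end times.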

5b) LLM prompt to extract verbatim HDYHAU with timestamps

I use a long-context model and a tight prompt.

You will receive a full call transcript with timestamps and speaker tags.

Task:

1) Find where the salesperson asks how the prospect heard about the company.

2) Return only JSON with keys:

{"sales_rep": {"text": "verbatim question","start_ts": "00:12:04","end_ts": "00:12:08"},"prospect": {"text": "verbatim answer","start_ts": "00:12:09","end_ts": "00:12:18"}}

If not found, return:

{"not_found": true}

No extra text.

5c) LLM classify into channel taxonomy

Keep the taxonomy simple at first:

["SEO","Paid Search","Social Organic","Referral","Community","Event","Podcast","Partner","Direct or Brand","Dark Social","Email","N/A"]

Classification prompt:

Input JSON contains the prospect’s verbatim HDYHAU answer. Classify it into one or two labels from the list.

Rules:

- Do not infer channels not stated or clearly implied.

- If the answer mentions Google or finding a guide, with no mention of an ad, return SEO.

- If unclear, return N/A.

Output only:

{"excerpt": "verbatim answer","labels": ["SEO"],"confidence": 0.92,"rationale": "one short sentence"}

5d) Sanitize JSON and validate schema

LLMs sometimes return extra tokens. Strip code fences and parse with a strict schema.
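That parse-then-validate step might look like the sketch below, which uses only the standard library rather than a JSON Schema validator. It checks the fields from the classifier output. Two checks are my own assumptions: it rejects labels outside the taxonomy, and it requires confidence to sit between 0 and 1. `parse_classification` is a hypothetical name.

```python
import json
import re
from typing import Optional

TAXONOMY = {"SEO", "Paid Search", "Social Organic", "Referral", "Community", "Event",
            "Podcast", "Partner", "Direct or Brand", "Dark Social", "Email", "N/A"}

FENCE = "`" * 3  # built indirectly so the marker never appears literally here
FENCE_RE = re.compile(rf"^{FENCE}\w*\s*|\s*{FENCE}$")


def parse_classification(raw: str) -> Optional[dict]:
    """Return the validated classification dict, or None if any check fails."""
    text = FENCE_RE.sub("", raw.strip())
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    labels, conf = data.get("labels"), data.get("confidence")
    ok = (
        isinstance(data.get("excerpt"), str)
        and isinstance(labels, list)
        and 1 <= len(labels) <= 2          # the prompt asks for one or two labels
        and all(isinstance(x, str) and x in TAXONOMY for x in labels)
        and isinstance(conf, (int, float)) and not isinstance(conf, bool)
        and 0.0 <= conf <= 1.0
    )
    return data if ok else None


print(parse_classification('{"excerpt": "Googled you", "labels": ["SEO"], "confidence": 0.92}'))
```

Returning None instead of raising lets the loop log the failure and move on to the next call.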

Minimal JSON schema:

{
  "type": "object",
  "properties": {
    "excerpt": {"type": "string"},
    "labels": {"type": "array", "items": {"type": "string"}},
    "confidence": {"type": "number"},
    "rationale": {"type": "string"}
  },
  "required": ["excerpt", "labels", "confidence"]
}

5e) Upsert to DB and CRM

Map fields to Contact, Company, and Deal:

- Contact.hdyhau_excerpt

- Contact.hdyhau_labels

- Deal.first_touch_channel

- Deal.hdyhau_confidence

- Company.last_reported_channel
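The mapping above can be sketched as a function that turns one validated classification into per-object updates. Treating the first label as the primary channel and joining multiple labels with a semicolon are assumptions; how a given CRM stores multi-select values will differ.

```python
from typing import Dict


def build_crm_updates(classification: dict) -> Dict[str, dict]:
    """Map one validated classification onto Contact, Deal, and Company fields."""
    labels = classification["labels"]
    primary = labels[0]  # assumption: the first label is the primary channel
    return {
        "Contact": {
            "hdyhau_excerpt": classification["excerpt"],
            "hdyhau_labels": ";".join(labels),  # assumption: stored as a ;-joined string
        },
        "Deal": {
            "first_touch_channel": primary,
            "hdyhau_confidence": classification["confidence"],
        },
        "Company": {"last_reported_channel": primary},
    }


updates = build_crm_updates(
    {"excerpt": "Heard you on a podcast, then Googled", "labels": ["Podcast", "SEO"],
     "confidence": 0.9}
)
print(updates["Deal"])
```

In practice it is worth writing `first_touch_channel` only when the field is empty, so a later call does not overwrite the original first touch.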

5f) Optional activations

- Send a notification to marketing when “SEO” or “Podcast” is detected with confidence above 0.85. Consider automated alerts tied to keywords.

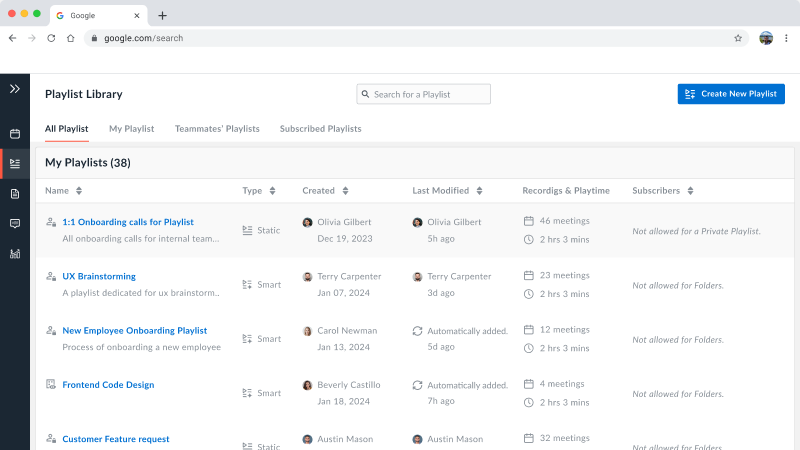

- Create a Touchpoint record for the channel with the call timestamp. You can share snippets of phone calls or compile playlists for enablement.

5g) Global error logging and retries

Log every failure with call_id, step, and error. Retry transient errors with backoff. Send items that fail three times to a dead-letter queue for review.
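A sketch of that retry policy, assuming transient failures (timeouts, 429s, 5xx responses) are raised as a dedicated exception type. `TransientError`, `run_with_retries`, and the in-memory `dead_letters` list are hypothetical stand-ins for whatever the workflow tool provides.

```python
import logging
import random
import time
from typing import Callable, List

log = logging.getLogger("hdyhau")


class TransientError(Exception):
    """Hypothetical marker for errors worth retrying."""


def run_with_retries(step: str, call_id: str, fn: Callable[[], object],
                     dead_letters: List[dict], max_attempts: int = 3,
                     base_delay: float = 1.0,
                     sleep: Callable[[float], None] = time.sleep):
    """Run fn; retry transient failures with exponential backoff, then dead-letter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError as exc:
            record = {"call_id": call_id, "step": step,
                      "error": str(exc), "attempt": attempt}
            log.warning("step failed: %s", record)
            if attempt == max_attempts:
                dead_letters.append(record)   # park for manual review
                return None
            # Delays grow roughly 1s, 2s, 4s, plus jitter.
            sleep(base_delay * 2 ** (attempt - 1) + random.uniform(0, base_delay / 2))
```

Non-transient errors are deliberately left to propagate here, since retrying a malformed request only burns quota.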

Minimal Python snippet for sanitize and upsert:

import json
import re

import psycopg2  # the caller opens the connection, e.g. conn = psycopg2.connect(dsn)


def clean_json(raw):
    """Strip Markdown code fences from LLM output and parse it as JSON."""
    if isinstance(raw, dict):
        return raw
    text = str(raw).strip()
    text = re.sub(r"^```\w*\s*|\s*```$", "", text).strip()
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        # Flag the failure for the caller instead of raising mid-loop.
        return {"parse_error": True}


def upsert_touchpoint(conn, call_id, contact_id, labels, excerpt, confidence, ts):
    """Insert or update one touchpoint per call (needs a unique index on call_id)."""
    with conn.cursor() as cur:
        # psycopg2 adapts a Python list to a Postgres array for the labels column.
        cur.execute("""
            insert into touchpoints(call_id, contact_id, labels, excerpt, confidence, occurred_at)
            values (%s, %s, %s, %s, %s, %s)
            on conflict (call_id) do update set
                labels = excluded.labels,
                excerpt = excluded.excerpt,
                confidence = excluded.confidence,
                occurred_at = excluded.occurred_at
        """, (call_id, contact_id, labels, excerpt, confidence, ts))
    conn.commit()

I keep scope small and write to CRM early so the value shows up fast.

Timeline:

- Day 1: wire the trigger, fetch calls, extract HDYHAU, classify to a temp table.

- Day 2: sanitize JSON, upsert to CRM custom fields, build a basic dashboard.

- Week 1: add guardrails, alerts, QA sampling, retries, and cost controls.

Roles:

- RevOps: create CRM fields and rules, confirm object mapping, own dashboards.

- Sales Ops: ensure recording coverage, consent language, and meeting types.

- Data or Engineer: build ETL, LLM steps, schema validation, and retries.

- Marketing: define the channel taxonomy, set acceptance criteria, and review QA samples.

Dependencies:

- Access to the call platform and its API.

- API keys for the workflow tool and the CRM.

- A data store (even a simple relational database) for audit trails.

Budget:

- SaaS fees already carried.

- LLM inference at a few cents per processed call with trimmed context.

- A small weekly block for QA and tuning.

KPIs:

- Percent of calls processed out of total discovery and demos.

- Extraction precision and recall on labeled samples.

- Attribution coverage rate at the deal level.

- Incremental pipeline tagged to SEO after reallocation decisions.
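For the precision and recall KPI, a micro-averaged calculation over label sets is one reasonable reading. The sketch assumes each call's prediction and hand label are sets of channel names, with an empty set standing in for "not found" or N/A.

```python
from typing import List, Set, Tuple


def precision_recall(predicted: List[Set[str]],
                     truth: List[Set[str]]) -> Tuple[float, float]:
    """Micro-averaged precision and recall across calls, comparing label sets."""
    true_pos = sum(len(p & t) for p, t in zip(predicted, truth))
    n_pred = sum(len(p) for p in predicted)
    n_true = sum(len(t) for t in truth)
    precision = true_pos / n_pred if n_pred else 0.0
    recall = true_pos / n_true if n_true else 0.0
    return precision, recall


pred = [{"SEO"}, {"Podcast"}, set(), {"Referral"}]
gold = [{"SEO"}, {"Podcast", "SEO"}, {"Partner"}, {"Community"}]
print(precision_recall(pred, gold))
```

In this toy sample, two of three predicted labels are right and two of five true labels are found. That is the gap the two metrics are meant to expose separately.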

Guardrails that keep the signal trustworthy

Good guardrails keep call-recording attribution reliable at scale and keep costs in check:

- Confidence thresholds: accept classifications ≥0.85; below 0.70, send to review.

- Few-shot exemplars: include 2–3 short examples for edge cases (e.g., branded search vs. SEO).

- Logprob gating: if the model exposes token probabilities, require a minimum average for label tokens.

- PII redaction: run redaction before any model call; keep a reversible token map if rehydration is needed.

- No guessing: ban inferring channels not stated; “I saw you online” returns N/A.

- Fallback flows: if extractor returns not_found, try a second pass with a relaxed prompt; if still empty, mark as no_data.

- Rate-limit handling: throttle loops and add jitter so APIs aren’t tripped.

- Cost estimation: target a few cents per call by trimming transcripts to relevant chunks.

- QA sampling: spot-check 10% weekly; track precision and recall separately.

- Governance: version prompts, store changelogs, and A/B test classification prompts before shipping updates.

- Acceptance criteria: >90% precision for channel labels on a labeled sample; ≥70% recall in month one, then improve.

- Bias note: HDYHAU is self-reported; treat it as directional. Calibrate with complementary signals (e.g., branded query trends, referral codes, community membership) when available.
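The confidence thresholds and the QA sampling guardrail can be sketched together. The two cutoffs come from the list above. What happens between them is not specified, so the `hold` state here is an assumption, as are the function names and the fixed seed.

```python
import random
from typing import List

ACCEPT_AT = 0.85      # accept at or above this
REVIEW_BELOW = 0.70   # below this, send to human review


def route(confidence: float) -> str:
    """Return 'accept', 'review', or 'hold' for a classification confidence."""
    if confidence >= ACCEPT_AT:
        return "accept"
    if confidence < REVIEW_BELOW:
        return "review"
    # Assumption: keep the record but flag it until a QA sample covers it.
    return "hold"


def qa_sample(call_ids: List[str], rate: float = 0.10, seed: int = 0) -> List[str]:
    """Pick a reproducible random sample of calls for the weekly spot-check."""
    if not call_ids:
        return []
    k = max(1, round(len(call_ids) * rate))
    return random.Random(seed).sample(call_ids, k)


print(route(0.92), route(0.75), route(0.5))
print(len(qa_sample([f"c{i}" for i in range(50)])))
```

A fixed seed makes the weekly sample repeatable, which helps when two reviewers need to look at the same calls.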

Forecasting, examples, and edge cases

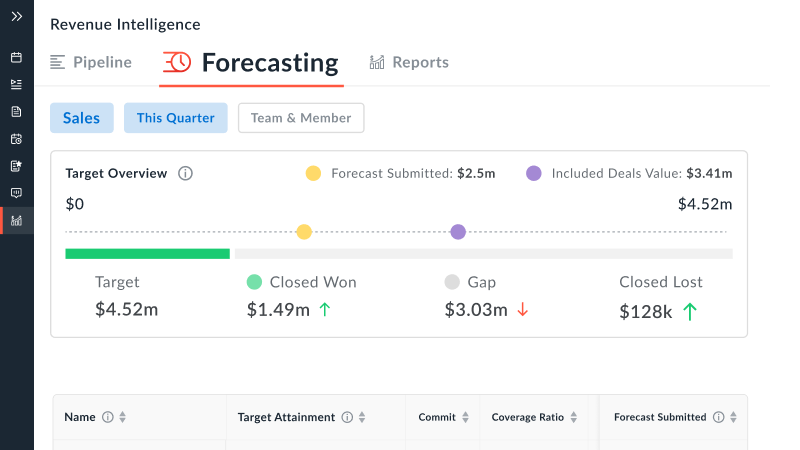

Once call-to-attribution flows, I tie the HDYHAU label to first touch on the deal and measure conversion from MQL to SQL to Opportunity to Closed Won by channel. A simple forecasting loop:

- 1) Take trailing 90-day HDYHAU volume per channel.

- 2) Apply observed conversion rates by stage and channel.

- 3) Multiply by average deal size by channel, and use cycle length by channel to time when the revenue lands.

- 4) Compare projected pipeline and expected revenue across SEO, paid, partner, referral, podcast, and community.
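The loop above reduces to a few multiplications per channel. In this sketch the stage names, field names, and numbers are illustrative. It treats cycle length as a timing offset rather than a multiplier, which is my reading of the steps.

```python
from dataclasses import dataclass


@dataclass
class ChannelStats:
    hdyhau_90d: int        # calls in the trailing 90 days that named this channel
    sql_to_opp: float      # observed conversion rate, SQL to Opportunity
    opp_to_won: float      # observed conversion rate, Opportunity to Closed Won
    avg_deal_size: float
    cycle_days: int        # average sales cycle length


def forecast(stats: ChannelStats) -> dict:
    """Project pipeline and expected revenue for one channel from trailing volume."""
    opps = stats.hdyhau_90d * stats.sql_to_opp
    wins = opps * stats.opp_to_won
    return {
        "projected_pipeline": opps * stats.avg_deal_size,
        "expected_revenue": wins * stats.avg_deal_size,
        # Assumption: cycle length shifts when revenue lands; it does not scale it.
        "revenue_lands_in_days": stats.cycle_days,
    }


seo = ChannelStats(hdyhau_90d=40, sql_to_opp=0.5, opp_to_won=0.25,
                   avg_deal_size=20000.0, cycle_days=60)
print(forecast(seo))
```

Running the same function per channel gives the side-by-side comparison in step 4.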

This answers simple questions with confidence: How much revenue next quarter should I expect from SEO if content velocity holds; what happens to meetings and pipeline if I trim paid search by 20% and shift to partner; which channels produce higher win rates without driving CAC up. I connect this to CRM first-touch fields and the BI layer for trending. One dashboard with channel mix, pipeline contribution, and three lines that matter - volume, conversion, deal size - turns attribution from a quarterly debate into a weekly input for planning. For deeper pipeline reporting context, see how to forecast the revenue.

Two quick examples show how this lands even when the starting point is messy:

- Example 1: At a B2B services firm, I needed to prove search was doing more than branded clicks. I shipped an MVP that tagged HDYHAU answers to SEO when buyers said they Googled a problem or mentioned finding a guide. Within three weeks, SEO accounted for 31% of qualified demos while paid looked overcounted. I shifted 28% of budget from paid search to search content and technical fixes. CAC for that segment dropped 19% the next quarter, and leadership refocused on the content buyers actually named.

- Example 2: At a mid-market tech provider, dark social and podcasts were suspected but invisible in reports. Once HDYHAU snippets were captured and classified, I saw steady mentions of a niche podcast and a private community. Marketing mirrored the language heard there; sales used snippets for openers and proof points. Win rate lifted 12% in the segment that named those channels, and the reporting stack finally counted word of mouth without guesswork.

Edge cases are normal. What about multi-source answers like “I saw a LinkedIn post, then searched your brand,” or branded search that started with a podcast mention? I record both the verbatim excerpt and the classification with confidence. Rules stay plain: if the buyer names two sources, I store two labels with a split weight or keep the secondary as a note. The pipeline math is cleaner later.
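A split weight can be as simple as dividing the deal amount across the channels the buyer named. An even split is an assumption here, and other weightings (for example, favoring the first-named source) fit the same shape. `split_credit` is a hypothetical helper.

```python
from typing import Dict, List


def split_credit(labels: List[str], amount: float) -> Dict[str, float]:
    """Divide a deal's pipeline amount evenly across the named channels."""
    named = list(dict.fromkeys(x for x in labels if x != "N/A"))  # dedupe, keep order
    if not named:
        return {"N/A": amount}
    share = amount / len(named)
    return {label: share for label in named}


# "I saw a LinkedIn post, then searched your brand"
print(split_credit(["Social Organic", "Direct or Brand"], 50000.0))
```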

Turning call recordings into marketing attribution doesn’t have to be complex; it has to be intentional. I extract the exact words customers use to explain how they found us, keep the loop tight, store it in the CRM, watch the first two weeks of signal, tune, and then let the numbers guide the next dollar. If you want to layer this into coaching and enablement, explore hit quota faster, agenda adherence, and custom topics for a lightweight VOC program you can socialize with an easy-to-manage VOC cadence.
