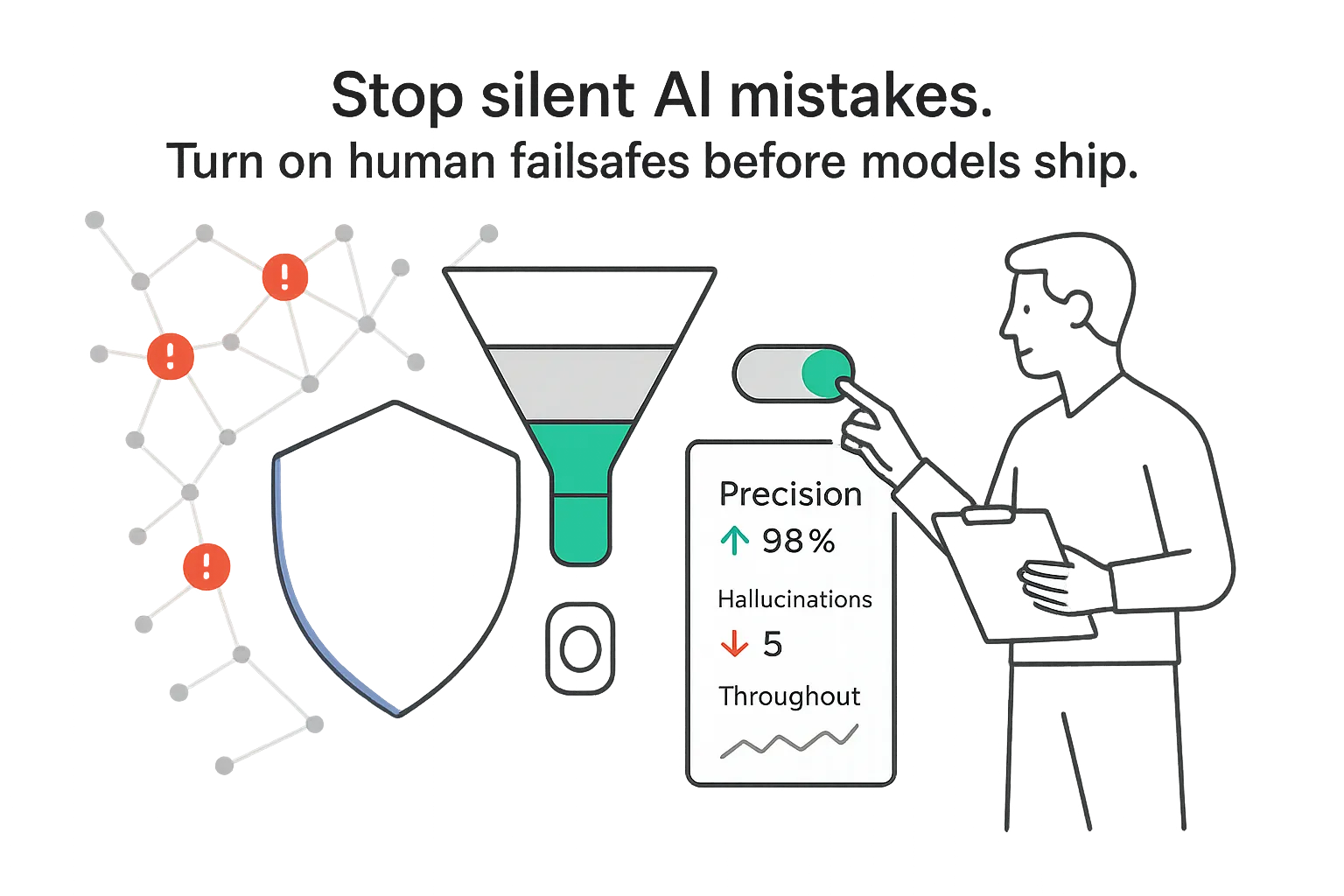

Your models can write, see, and even act. Yet one small miss can snowball into churned users, audit headaches, or a very public oops (see the drive-through that ordered 18,000 waters). That is why I put human-in-the-loop (HITL) back in the spotlight. It is not nostalgia for manual work. It is a pragmatic layer that keeps AI safe, accurate, and profitable without grinding operations to a halt. If you run a B2B service business and want steady pipeline gains without babysitting, this is the operating system you have been missing. If you are experimenting with autonomous agents, you have likely seen disappointing results on messy real workflows, and even on basic office tasks, when the right human oversight is missing.

What I mean by human-in-the-loop

Human-in-the-loop means I intentionally place people inside AI workflows to review, label, correct, approve, and teach models. The point is a tight feedback loop so the system learns from real decisions, not guesswork.

How it differs from other oversight modes:

- Human-in-the-loop: Humans act before a result is final. I use this for high-risk or high-ambiguity tasks. Triggers include low confidence, sensitive content, or named entities in regulated contexts. Risk profile is low to moderate because errors are caught pre-release.

- Human-on-the-loop: Humans supervise after results or in batches. I use this for medium-risk tasks where audits matter. Triggers include random sampling, drift alerts, or periodic QA. Risk is moderate because issues get caught later.

- Human-out-of-the-loop: Full automation. I only use this for low-risk, fully repeatable tasks with stable environments. Risk rises if the environment shifts because no review occurs until metrics degrade.

Two concrete examples I lean on:

- LLM content review and approval: For a RAG assistant that drafts client emails or summaries, I route outputs with low confidence, policy mentions, or sensitive entities to a reviewer. The person approves, edits, or rejects. Corrections become training examples for the next update.

- Computer vision annotation and QA: For defect detection, I have humans annotate ambiguous frames and validate flags near the decision boundary. A gold set keeps label quality consistent across shifts and versions.

Why HITL matters - and how I measure it

HITL is not just safer; it is measurably better when you track the right signals. Directionally, here is what I see across deployments and public case studies (your ranges will vary - treat these as hypotheses to validate; frameworks like the NIST AI RMF, ISO/IEC 23894, and the Stanford AI Index offer helpful context):

- Accuracy lift: 5-20% in precision and 3-15% in recall once feedback loops stabilize.

- Reduced hallucinations: 40-80% fewer flagged hallucinations when low-confidence outputs fall back to human review.

- Bias mitigation: 20-60% drop in flagged bias cases after targeted audits and corrective fine-tuning.

- Safety and compliance: Policy violations trend down with four-eyes review on sensitive outputs.

- Time-to-value: Controlled workflows ship in weeks, not months, while the model improves in production.

Quick wins I use without a big rebuild:

- Confidence thresholds with human fallback. If confidence is below a threshold or a sensitive topic is detected, route to a reviewer.

- Guardrail rules. If output contains PII, legal terms, or medical claims, pause and send to a domain specialist.

- Disagreement sampling. When the model and heuristic checks disagree, send to review. These are high-value training cases.
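As a rough illustration, the three quick wins above can live in one small routing function. This is a minimal sketch, not a production guardrail: the `0.75` threshold, the regex patterns, the route names, and the `route_output` signature are all assumptions made for the example, and real PII or policy detection needs far more than a few regular expressions.

```python
import re
from dataclasses import dataclass, field

# Hypothetical threshold; tune it per task and risk level.
CONFIDENCE_THRESHOLD = 0.75

# Illustrative guardrail patterns only; real detection needs dedicated tooling.
SENSITIVE_PATTERNS = {
    "pii": re.compile(r"\b\d{3}-\d{2}-\d{4}\b|[\w.+-]+@[\w-]+\.[\w.]+"),
    "legal": re.compile(r"\b(indemnif\w*|liabilit\w*|lawsuit)\b", re.IGNORECASE),
    "medical": re.compile(r"\b(dosage|diagnos\w*|contraindicat\w*)\b", re.IGNORECASE),
}


@dataclass
class Decision:
    route: str  # "auto", "reviewer", or "specialist"
    reasons: list = field(default_factory=list)


def route_output(text, confidence, model_says_ok, heuristic_says_ok):
    """Decide whether an output passes through or goes to a human."""
    # Guardrail rules: any sensitive hit pauses the output for a specialist.
    hits = [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(text)]
    if hits:
        return Decision("specialist", [f"guardrail:{name}" for name in hits])

    reasons = []
    # Confidence threshold with human fallback.
    if confidence < CONFIDENCE_THRESHOLD:
        reasons.append("low_confidence")
    # Disagreement sampling: model and heuristic checks do not agree.
    if model_says_ok != heuristic_says_ok:
        reasons.append("disagreement")

    return Decision("reviewer" if reasons else "auto", reasons)
```

Returning the reasons alongside the route keeps every escalation explainable in the logs, and the disagreement cases can be tagged for the next training batch.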

KPIs I track in plain numbers:

- Precision and recall by task and segment

- Cost per corrected error and trend over time

- Turnaround time per review and per batch

- Approved-without-edit rate (should rise week over week)

- Production drift rate (e.g., change in error patterns or embedding distributions)
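Most of these KPIs fall out of the review log directly. The sketch below assumes a hypothetical `ReviewedItem` record in which the reviewer's final label is treated as ground truth and `edited` marks a corrected error; the field names and the cost unit are illustrative, not a prescribed schema.

```python
from dataclasses import dataclass


@dataclass
class ReviewedItem:
    predicted_positive: bool  # what the model flagged
    actually_positive: bool   # reviewer's final label, treated as ground truth
    edited: bool              # True if the reviewer had to correct the output
    review_cost: float        # cost of this review, in any consistent unit


def precision_recall(items):
    tp = sum(i.predicted_positive and i.actually_positive for i in items)
    fp = sum(i.predicted_positive and not i.actually_positive for i in items)
    fn = sum(not i.predicted_positive and i.actually_positive for i in items)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def approved_without_edit_rate(items):
    return sum(not i.edited for i in items) / len(items) if items else 0.0


def cost_per_corrected_error(items):
    corrected = sum(i.edited for i in items)
    total_cost = sum(i.review_cost for i in items)
    # None signals "no corrections in this window" rather than a zero cost.
    return total_cost / corrected if corrected else None
```

Computing these per task and per segment is a matter of filtering `items` before calling each function; tracking the same functions week over week gives the trend lines.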

Use cases where HITL pays off fast

I prioritize high-impact, compliance-heavy areas first. They deliver visible confidence and ROI.

- LLM applications

  - RAG answer review for low similarity to sources, citation mismatches, or policy terms

  - Summarization QA when outputs include legal, medical, or financial sections

  - Red teaming with adversarial prompts; feed findings back to training. Avoid letting models judge themselves blindly - see LLM-as-judges and a 2024 study on evaluator limits.

- CX and chatbots

  - Smart handoffs if sentiment turns negative, intent is unclear, or VIP accounts appear

  - Escalation on claims and refunds based on value thresholds or repeat contact

- Content moderation

  - Human checks for borderline content on hate, self-harm, or election topics

  - Daily samples per model version to catch regressions

- Contract and medical document extraction

  - SME validation for fields like indemnity, jurisdiction, dosage, or contraindications

  - Triggers on missing fields, redlines, or unusually long clauses

- Fintech fraud review

  - Human review for high-risk transactions with novel device fingerprints, impossible travel, or sudden pattern shifts

- Computer vision labeling

  - Edge cases like occlusions, rare defects, or new packaging

  - Adjudication to expand and maintain the gold set

- Safety-critical oversight

  - Manufacturing: Inspectors verify defects that could trigger recalls; sampling scales with severity

  - Healthcare: Radiology-assist tools route uncertain readings to specialists and log rationales for audit

Each use case needs simple triggers: low confidence threshold, high-risk topic, novelty detection, or disagreement between independent checks.

A workflow that keeps you fast and accountable

I keep the flow boring, observable, and reversible across LLM and vision projects.

- Data intake and routing: Collect inputs/outputs with metadata; attach policy labels like low risk, regulated, high impact.

- Confidence scoring: Combine model scores with rules (e.g., log probabilities, retrieval relevance, guardrail checks).

- Triage rules: If below threshold or sensitive, route to human; else pass through with logging.

- Assignment to reviewers: Route by domain and seniority using skills tags; protect PII with role-based permissions.

- Annotation and review: Approve, correct, or reject; require concise rationales for corrections.

- Adjudication: A second reviewer or lead resolves tough items; track agreement rates to monitor clarity.

- Feedback capture: Store original, correction, and rationale as structured data; treat it as training gold.

- Model update: Batch fine-tune or update prompts; record versions and experiment IDs.

- Deployment: Roll out behind a flag; use canary traffic; test on holdout gold sets.

- Monitoring: Watch SLAs/SLOs; measure precision, recall, turnaround time, review backlog, and drift alerts.
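The feedback capture step is easiest to keep honest when the record shape enforces the rules. Here is a minimal sketch of one possible record, assuming a JSON Lines log; `FeedbackRecord`, its field names, and the `to_jsonl_line` helper are hypothetical and would need to match whatever store you actually use.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional

ALLOWED_ACTIONS = {"approve", "correct", "reject"}


@dataclass
class FeedbackRecord:
    item_id: str
    model_version: str
    original: str
    action: str                       # one of ALLOWED_ACTIONS
    correction: Optional[str] = None  # reviewer's fixed output, if any
    rationale: Optional[str] = None   # concise reason for the change
    reviewer_id: str = ""
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self):
        if self.action not in ALLOWED_ACTIONS:
            raise ValueError(f"unknown action: {self.action!r}")
        # Corrections are only useful as training data with text and a reason.
        if self.action == "correct" and not (self.correction and self.rationale):
            raise ValueError("a correction needs both corrected text and a rationale")


def to_jsonl_line(record):
    """Serialize one record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), ensure_ascii=False)
```

Storing the model version with every record is what later lets a fine-tune or prompt change be tied back to the exact feedback that motivated it.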

I bring evaluation inside the same loop:

- Training phase: Inter-annotator agreement (aim for ≥0.8 on key labels); fixed gold sets for weekly comparisons; label quality audits (spot-check 5-10% by a senior reviewer). Also, avoid over-relying on models to judge models - see LLM-as-judges and a 2024 study highlighting where human evaluation still wins.

- Production phase: Drift detection (input mix and embedding space); prioritize disagreement sampling; set SLA by risk (e.g., 15 minutes for high risk, 4 hours for standard).
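Inter-annotator agreement can be measured in several ways, and the text above does not name one. As one common option for two reviewers, the sketch below computes Cohen's kappa, which corrects raw agreement for the agreement expected by chance; treating the 0.8 target as a kappa value is an assumption of this example.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two reviewers labeling the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")

    n = len(labels_a)
    # Observed agreement: share of items where both reviewers chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: product of each reviewer's marginal label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    categories = count_a.keys() | count_b.keys()
    expected = sum(count_a[c] * count_b[c] for c in categories) / (n * n)

    if expected == 1.0:
        # Both reviewers used a single identical label; agreement is trivially perfect.
        return 1.0
    return (observed - expected) / (1 - expected)


reviewer_1 = ["ok", "ok", "defect", "defect"]
reviewer_2 = ["ok", "ok", "defect", "ok"]
kappa = cohens_kappa(reviewer_1, reviewer_2)  # 0.5 for this toy sample
```

A value like the 0.5 in this toy sample would fall well short of a 0.8 target and is a signal to revisit the labeling guidelines before trusting the labels as training data.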

Accountability and escalation I rely on:

- Responsible: Assigned reviewer completes the task

- Accountable: Domain lead signs off on adjudications and weekly metrics

- Consulted: Legal, compliance, or safety teams on flagged categories

- Informed: Product and data stakeholders via dashboards/alerts

- Escalation: Auto-route high-severity policy hits to a lead; if a regression appears, pause rollout and revert to last known good

The people: selecting, training, and staffing reviewers

The right human depends on the job and the stakes.

What I look for:

- Technical fluency: Comfortable with prompts, confidence scores, and annotation interfaces

- Domain expertise: Contracts, healthcare, finance, safety - context is everything

- Cognitive skills: Pattern spotting, careful reading, and calm decision-making with thin context

- Communication: Clear rationales that teach both teammates and the model

- Reliability: Consistent SLAs and steady quality across shifts

How I structure teams:

- In-house SMEs for compliance-heavy or brand-sensitive work (higher cost, higher trust)

- Managed workforce for volume labeling, red teaming, and surge demand with tight QA rubrics

- Hybrid setups pairing SMEs for high risk with a managed team for scale through clear routing rules

Training and calibration I keep light but disciplined:

- Playbooks with examples and policy notes

- Weekly calibration on disagreements to tighten consistency

- QA rubrics that score clarity, correctness, and policy adherence

- Incentives that reward quality and consistency, not just speed; four-eyes review for sensitive tasks

Cost and throughput planning I do up front:

- Estimate minutes per item by task and risk (e.g., 2 minutes for standard summaries, about 7 minutes for specific clause checks)

- Concurrency limits to avoid quality dips

- Coverage hours aligned to user peaks and regulatory deadlines

- Total cost tracked as cost per reviewed item and cost per corrected error; both should drop as the model learns
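The arithmetic behind this planning is simple enough to script. The sketch below uses the per-item minutes from the example above; the daily volumes, the 360 productive minutes per reviewer, and the hourly rate are placeholder assumptions to replace with your own figures.

```python
import math


def plan_capacity(workload, productive_minutes_per_reviewer=360, hourly_rate=30.0):
    """Estimate staffing and cost from (items_per_day, minutes_per_item) pairs.

    The defaults are placeholders, not benchmarks.
    """
    total_minutes = sum(items * minutes for items, minutes in workload)
    total_items = sum(items for items, _ in workload)
    daily_cost = total_minutes / 60 * hourly_rate
    return {
        "review_minutes": total_minutes,
        "reviewers_needed": math.ceil(total_minutes / productive_minutes_per_reviewer),
        "daily_cost": daily_cost,
        "cost_per_reviewed_item": daily_cost / total_items if total_items else 0.0,
    }


# Hypothetical day: 600 standard summaries at 2 minutes, 120 clause checks at 7 minutes.
plan = plan_capacity([(600, 2), (120, 7)])
```

For this hypothetical day the plan comes to 2,040 review minutes and six reviewers. Rerunning it as routing thresholds change shows quickly whether a stricter threshold is affordable.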

Platforms and build-vs-buy tradeoffs to consider

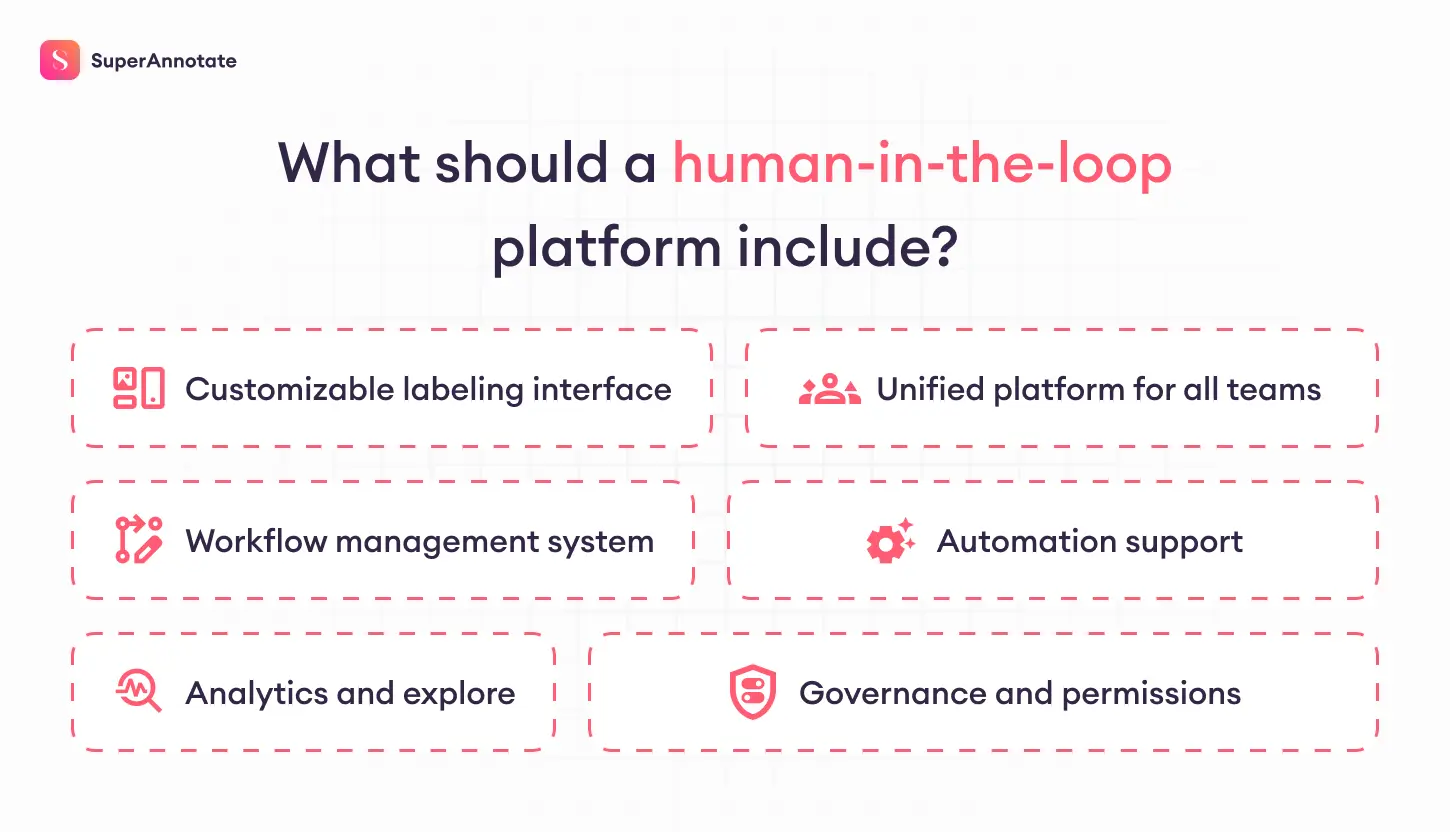

A workable platform does more than annotation; it runs the full feedback loop with governance.

- Task routing by skills, queues, and priorities

- Text and image annotation with versioning and diffs

- Immutable audit trails for who did what, when

- Prompt and log capture for analysis

- Red-team sandboxes for safe stress tests

- RBAC and PII controls, including field-level masks and data residency options

- Compliance modes (e.g., SOC 2, HIPAA-friendly configurations) and retention settings

- Integrations for alerts, issue tracking, version control, and data storage

- Experiment tracking that ties model versions to metrics and feedback data

- Active learning queues that surface the most informative items

For a deeper dive into capabilities and implementation details, see the platform Documentation, and when you need vetted teams to scale human feedback, use the Marketplace.

Build vs. buy:

- Build when deep customization is essential and you can maintain controls, privacy, and uptime

- Buy when speed, audits, and ready integrations matter most; evaluate security, data flows, failover, and pricing by seat/volume

Risks to manage - before they manage you

Skipping oversight or choosing the wrong setup carries obvious and hidden costs. I mitigate them with explicit controls and metrics.

Key risks and controls:

- Accuracy degradation: Control with gold sets, weekly A/B checks, and threshold-based sampling

- Biased outcomes: Run periodic bias audits; use diverse reviewer panels for sensitive topics

- Compliance breaches: Kill switches for policy hits; four-eyes review for high-risk outputs

- Safety incidents: Mandatory human review near risk boundaries and tight SLAs

- Rework costs: Clear instructions and QA rubrics; audit 5-10% of work

- Reduced trust: Publish reviewer presence for sensitive flows; show change logs for fixes

- Model collapse from overusing synthetic data: Limit self-generated training data and keep a human-sourced stream. See discussions of synthetic data, how models can reinforce their own biases, a pragmatic solution, and evidence that mixing human and synthetic data outperforms synthetic-only.

I use a simple risk matrix: high likelihood/high impact items get mandatory review, second approvals, and real-time alerts; low likelihood/low impact items get sampling and weekly audits. I tie each control to metrics so I know if it is working.
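The matrix can be written down as a small lookup so routing code and audits share one definition. Only the two corners described above come from the text; the fallback for mixed cells (high/low and low/high) is an assumption added here so the function is total, and the control names are illustrative labels.

```python
LEVELS = {"low", "high"}

# The two corners described in the text.
CONTROLS = {
    ("high", "high"): ["mandatory_review", "second_approval", "real_time_alerts"],
    ("low", "low"): ["sampling", "weekly_audit"],
}

# Assumption: mixed cells are not specified in the text, so this sketch
# defaults them to a conservative middle ground.
MIXED_DEFAULT = ["mandatory_review", "weekly_audit"]


def controls_for(likelihood, impact):
    """Return the list of controls for a (likelihood, impact) cell."""
    if likelihood not in LEVELS or impact not in LEVELS:
        raise ValueError("likelihood and impact must each be 'low' or 'high'")
    return list(CONTROLS.get((likelihood, impact), MIXED_DEFAULT))
```

Keeping the mapping in data rather than scattered `if` statements makes it easy to attach a metric to each control and to review the whole matrix in one place.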

A practical 90-day starter plan

You do not need to overhaul everything. I start small, prove value, then expand.

What I do:

- Pick the highest-risk slice of one workflow

- Define five core KPIs: precision, recall, calibration error, turnaround time, and cost per corrected error

- Set confidence thresholds; escalate the ambiguous and let the obvious pass

- Maintain a gold set; refresh a small portion monthly to reflect new reality

- Hold weekly calibration sessions; record decisions in a single source of truth

- Log prompts, outputs, corrections, rationales, and version IDs for audit and learning

- Align incentives with quality and speed; reward consistency, not shortcuts

- Validate legal and policy requirements up front; map which outputs need which checks

- Iterate A/B tests on routing rules, prompts, and training data selection

In practice, I ship a pilot on one high-value flow, set thresholds and routes, measure the five KPIs weekly, fix the biggest error class first, expand to the next two flows, then tune the model with the new gold data. This keeps the loop tight, the risks bounded, and the gains compounding.
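Of the five KPIs, calibration error is the one teams most often leave undefined. One common way to measure it is expected calibration error (ECE): bucket outputs by stated confidence and compare each bucket's average confidence to its actual accuracy. The sketch below assumes confidences in the range 0 to 1, reviewer verdicts as ground truth, and ten equal-width bins; all three are choices made for this example.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE with equal-width bins over confidences in [0, 1]."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("need two non-empty sequences of equal length")

    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 goes into the last bin.
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, ok))

    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_confidence = sum(conf for conf, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        # Weight each bin's confidence/accuracy gap by its share of items.
        ece += len(bucket) / total * abs(avg_confidence - accuracy)
    return ece
```

A model that reports 0.9 confidence but is right only three times out of four has a gap of about 0.15 in that bin. A falling ECE means confidence thresholds are becoming more trustworthy as routing signals.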
