If you lead a service business and wonder whether LLMs on top of a data lake actually move the needle, I get it. The idea sounds great, then stalls on ROI, timelines, and control. Here is a practical view of the outcomes you can expect, how fast, and what a responsible setup looks like - without extra complexity.

LLMs on a data lake: what actually moves the needle

I start with the business case, not the model. When I connect an LLM to curated lake data, I shorten response loops, tighten governance, and cut manual research time. That translates into faster answers for prospects and customers and lower cost per interaction. In B2B support and success, realistic 90-day targets look like:

- Lead response time down 40–60%

- Customer satisfaction up 5–10 points

- Self-serve resolution at 25–40% for tier-one issues

- First contact resolution up 10–20%

- Agent handle time down 20–35%

- Support ticket deflection at 20–35% for repeated questions

- Research time saved per rep at 30–50%

Those ranges depend on baseline content quality, channel mix, and your access controls. I always baseline current metrics first so the lift is attributable and defensible.

ROI you can defend in 90 days

I keep ROI simple and explicit. ROI% = (total gains − total costs) / total costs × 100. Gains include deflected tickets, labor saved on research, and revenue lift from faster follow-up. Costs include platform fees, storage/compute, and build or integration work.

Example (monthly):

- 12,000 support tickets at $8 fully loaded per ticket. A 25% deflection saves 3,000 tickets → $24,000.

- 10 agents spend 2 hours/day on knowledge lookup. A 40% cut saves 8 hours/day total. At $45/hour over roughly 22 working days, that is about $8,000/month.

- Faster lead follow-up lifts win rate by 5 percentage points on a $400,000 monthly pipeline. Additional closed revenue ≈ $20,000; at 25% average margin → $5,000 in margin.

Total gains ≈ $37,000/month. If monthly operating plus amortized build costs are $18,000, ROI ≈ (37 − 18) / 18 ≈ 106% per month. Even if I halve the gains to $18,500 to be conservative, ROI stays slightly positive: (18.5 − 18) / 18 ≈ 3%. Interpret the win-rate effect carefully; use your actual pipeline, margin, and baseline conversion. For credibility, also show the payback period (upfront build cost divided by monthly net gain) and a sensitivity range (for example, deflection 10 percentage points above and below the base case).
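To make the arithmetic easy to rerun with your own numbers, here is a minimal sketch of the ROI formula and a deflection sensitivity check. The constants are the example figures from this section; the function names are my own and purely illustrative.

```python
def roi_percent(gains: float, costs: float) -> float:
    """ROI% = (total gains - total costs) / total costs * 100."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (gains - costs) / costs * 100


# Example figures from the text (monthly, USD).
TICKETS_PER_MONTH = 12_000
COST_PER_TICKET = 8.0
RESEARCH_SAVINGS = 8_000.0  # rounded labor saving from the text
MARGIN_LIFT = 5_000.0       # 5 points on a $400,000 pipeline at 25% margin
MONTHLY_COSTS = 18_000.0


def monthly_gains(deflection_rate: float) -> float:
    """Total monthly gains for a given ticket deflection rate (0.0 to 1.0)."""
    deflection_savings = TICKETS_PER_MONTH * deflection_rate * COST_PER_TICKET
    return deflection_savings + RESEARCH_SAVINGS + MARGIN_LIFT


# Sensitivity: base case plus 10 percentage points either side.
for rate in (0.15, 0.25, 0.35):
    gains = monthly_gains(rate)
    print(f"deflection {rate:.0%}: gains ${gains:,.0f}, "
          f"ROI {roi_percent(gains, MONTHLY_COSTS):.0f}%")

# Conservative case: halve the base-case gains.
print(f"halved gains: ROI {roi_percent(monthly_gains(0.25) / 2, MONTHLY_COSTS):.1f}%")
```

Swap in your own ticket volume, cost per ticket, and monthly costs before quoting any of these numbers internally.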

A 90-day plan with clear KPIs

- Days 1–14: Data readiness and pilot plan. Connect lake zones for docs, tickets, FAQs, CRM notes. Clean and tag PII. Define the three highest-volume intents. Baseline KPIs. Aim for quick wins that prove deflection and time saved.

- Days 15–45: Build RAG pipeline and guardrails. Ingest, chunk, embed, and index curated docs. Add citations and confidence scoring. Roll to a controlled user group. Target 20% containment with ≥95% policy-compliant answers.

- Days 46–90: Expand content set and policy checks. Add row/column filters and masking. Soft-launch on a segment. Target 25–35% deflection, 20% handle-time cut, and ≥30% research-time saved. Review costs weekly and trim context to hold median latency near 2 seconds where feasible.

Choosing models for the job

Large Language Models turn natural language into a programmable interface. Different choices materially affect business value.

- Cost versus latency: High-accuracy models often cost more and can be slower. For high-volume chat and search, start with a mid-size model and add reranking to boost quality.

- Safety and guardrails: Combine system prompts, policy checkers, and output filters to enforce privacy and compliance.

- Fine-tuning versus prompt patterns: For style and format, prompt patterns usually suffice. For domain terminology and tone, light fine-tuning can help. For facts, rely on retrieval, not the model’s memory.

Simple guidance by use case

- Internal search and support: Retrieval on your lake with a reliable mid-size model.

- Customer-facing chat with PII risk: A closed model with strong content filters, masking, and auditable logging.

- Summaries and Q&A on long documents: A model with strong long-context performance plus citations.

Validate model choices on a small, representative test set (gold answers, policy traps, and edge cases) before scaling.
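A small test harness for that validation step could look like the sketch below. Everything here is an assumption for illustration: `EvalCase`, `evaluate`, the keyword-matching check, and the `REFUSAL_MARKER` phrase are hypothetical, and `model` stands for whatever callable wraps the model you are comparing.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical phrase the assistant is expected to use when it refuses.
REFUSAL_MARKER = "cannot share"


@dataclass
class EvalCase:
    question: str
    gold_keywords: list[str] = field(default_factory=list)  # must appear in a correct answer
    must_refuse: bool = False                                # True for policy traps


def evaluate(model: Callable[[str], str], cases: list[EvalCase]) -> dict[str, float]:
    """Return pass rates for gold-answer cases and policy-trap cases."""
    results = {"gold": [0, 0], "policy": [0, 0]}  # [passed, total] per bucket
    for case in cases:
        answer = model(case.question).lower()
        if case.must_refuse:
            bucket, ok = "policy", REFUSAL_MARKER in answer
        else:
            bucket = "gold"
            ok = all(keyword.lower() in answer for keyword in case.gold_keywords)
        results[bucket][0] += int(ok)
        results[bucket][1] += 1
    return {name: (passed / total if total else 0.0)
            for name, (passed, total) in results.items()}
```

Keyword matching is a crude proxy for correctness; in practice I would pair it with human review on a sample of answers.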

The lake, the warehouse, and governance that keeps you safe

A data lake stores raw and refined data without forcing a fixed schema at write time. You apply the schema at read time, which keeps storage simple and supports text, PDFs, audio transcripts, images, sensor streams, and logs. Parquet is a common columnar format for efficient storage and fast reads. Table formats like Delta Lake, Apache Iceberg, and Apache Hudi add versioning, time travel, and transactional writes. For a practical view on building a central lake and integrating heterogeneous sources, see Smart production through data integration.

Lake versus warehouse versus lakehouse

- Warehouse: Structured tables with fixed schema and strong SQL performance. Great for BI and finance.

- Lake: Flexible storage for raw and semi-structured data. Great for AI and text-heavy use.

- Lakehouse: Lake flexibility with warehouse-style governance using formats like Delta or Iceberg.

Governance is non-negotiable

- Handle PII with tagging, row/column-level security, masking, and redaction.

- Apply data contracts so models don’t ingest broken fields.

- Maintain lineage so any answer traces to file and version.

Vector stores accelerate retrieval, but they don’t replace the lake. Treat the vector index as a fast lookup layer that points back to governed sources.
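One way to picture the "lookup layer that points back" idea: each index entry carries a pointer to a file, version, and section rather than being the source of truth itself. This is a sketch under stated assumptions; `IndexEntry`, `LAKE`, `resolve`, and `lineage` are hypothetical names, and the in-memory dictionary stands in for governed lake storage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndexEntry:
    chunk_id: str
    vector: tuple[float, ...]  # embedding, assumed to come from your embedding job
    source_path: str           # file or table in the curated zone
    source_version: int        # snapshot/version from the table format
    section: str


# Stand-in for governed lake storage, keyed by (path, version). Sample data only.
LAKE = {
    ("curated/policies/returns.md", 3): {
        "Refunds": "Refunds are issued within 14 days.",
    },
}


def resolve(entry: IndexEntry) -> str:
    """Fetch the chunk text from the governed source, not from the index."""
    return LAKE[(entry.source_path, entry.source_version)][entry.section]


def lineage(entry: IndexEntry) -> str:
    """Human-readable trace of an answer back to file, version, and section."""
    return f"{entry.source_path}@v{entry.source_version}#{entry.section}"


entry = IndexEntry("c-001", (0.1, 0.9), "curated/policies/returns.md", 3, "Refunds")
print(resolve(entry), "<-", lineage(entry))
```

Because the text is read from the versioned source at answer time, access rules and corrections applied in the lake take effect without rebuilding trust in the index.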

Private-cloud pattern without lock-in

- Network and identity: Keep traffic inside your cloud via private endpoints; integrate with your identity provider for role-based access.

- Storage and indexing: Raw/curated/serving zones in object storage with Delta/Iceberg tables; scheduled embedding jobs into a managed vector index.

- Policy and model layers: Apply masking and access rules before prompts; use content filters, rate limits, and versioned models.

- Observability and cost: Central logs, traces, cost dashboards, and alerting; caching and batching to control spend.

Azure (OpenAI + private networking), AWS (Bedrock + VPC endpoints), and Google Cloud (Vertex AI + private services) all support this pattern. Choose based on where the core data lives and the simplest path to private networking and identity. For sensor and machine data ingestion patterns, see Edge-to-Cloud Telemetry: Data Transfer with MQTT and Azure IoT.

Retrieval-augmented integration, end to end

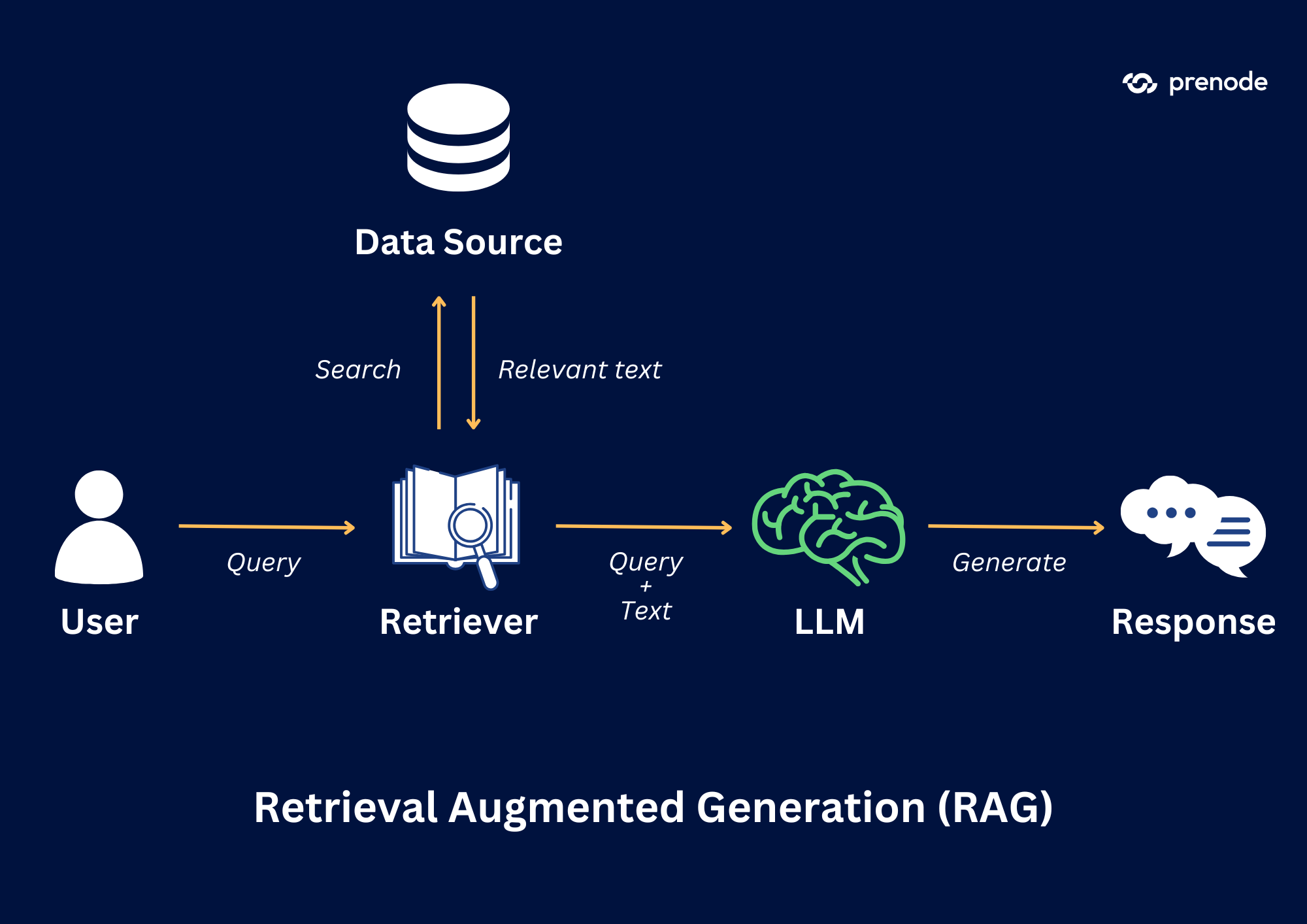

The magic happens when the model doesn’t guess. It retrieves facts from the lake and composes answers with citations - that’s retrieval-augmented generation (RAG). Standards like the Model Context Protocol (MCP), a bridge between AI and the world of data, help LLMs access governed tools and data safely.

Data integration and processing

- Land raw files, then transform to curated tables (ETL/ELT). Tag purpose, owner, freshness, and sensitivity.

- Convert text into embeddings and store vectors in a managed index. Tune chunk size to preserve meaning while fitting context.

- Use an LLM to draft SQL or Spark code, then validate with tests and small samples.
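For the last point, validating LLM-drafted SQL against a small sample can be as simple as the sketch below. It uses Python's built-in `sqlite3` with an in-memory table; the `tickets` schema, the sample rows, and `validate_drafted_sql` are assumptions for illustration, and the read-only check is deliberately conservative rather than a full SQL parser.

```python
import sqlite3


def validate_drafted_sql(sql: str, expected_rows: list[tuple]) -> bool:
    """Run a drafted query on a tiny in-memory sample and compare the result."""
    # Accept only a single SELECT statement (conservative string check).
    statement = sql.strip().rstrip(";")
    if not statement.lower().startswith("select") or ";" in statement:
        return False

    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE tickets (id INTEGER, region TEXT, status TEXT)")
        conn.executemany(
            "INSERT INTO tickets VALUES (?, ?, ?)",
            [(1, "EU", "open"), (2, "EU", "closed"), (3, "US", "open")],
        )
        try:
            rows = conn.execute(statement).fetchall()
        except sqlite3.Error:
            return False  # the drafted SQL does not even run on the sample
        return rows == expected_rows
    finally:
        conn.close()


drafted = ("SELECT region, COUNT(*) FROM tickets "
           "WHERE status = 'open' GROUP BY region ORDER BY region;")
print(validate_drafted_sql(drafted, [("EU", 1), ("US", 1)]))
```

The same pattern carries over to Spark: run the drafted job on a handful of known rows and compare against a hand-computed answer before it touches curated tables.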

Contextualized querying

- Semantic search retrieves meaning beyond keywords; hybrid search blends keywords, filters, and vectors.

- Query planning maps a natural-language question to retrieval steps, applies filters (for example, business unit or region), and narrows candidates.
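The two bullets above can be sketched as a filter-then-score step. This is a toy version under stated assumptions: the vectors are hand-written stand-ins for real embeddings, `alpha` is a hypothetical blending weight, and the keyword score is simple term overlap rather than a production ranking function such as BM25.

```python
import math
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    text: str
    vector: list[float]  # assumed to come from an embedding model
    region: str          # metadata used for filtering


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms that appear in the text."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def hybrid_search(query: str, query_vector: list[float], docs: list[Doc],
                  region: str, alpha: float = 0.5, top_k: int = 3) -> list[Doc]:
    """Filter by metadata first, then blend vector and keyword scores."""
    candidates = [d for d in docs if d.region == region]
    scored = [
        (alpha * cosine(query_vector, d.vector)
         + (1 - alpha) * keyword_score(query, d.text), d)
        for d in candidates
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]
```

Filtering before scoring keeps out-of-scope documents from ever reaching the prompt, which matters more for governance than for speed.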

Business policy enforcement

- Access control ties user identity to entitlements; only retrieve what the user is allowed to see.

- Masking and redaction remove PII before prompting; never write PII to logs.

- Scope and style guardrails set allow/deny lists and enforce answer boundaries.
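As a rough illustration of the first two rules, the sketch below drops chunks the user is not entitled to see and masks obvious PII before anything reaches the prompt. The role names, `RetrievedChunk`, and both regular expressions are assumptions; regex masking catches only simple patterns and is not a substitute for a proper PII detection service.

```python
import re
from dataclasses import dataclass

# Illustrative patterns only; real PII detection needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def mask_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


@dataclass
class RetrievedChunk:
    text: str
    allowed_roles: frozenset[str]  # entitlements attached at indexing time


def build_context(chunks: list[RetrievedChunk], user_roles: set[str]) -> str:
    """Keep only chunks the user may see, then mask PII before prompting."""
    visible = [c for c in chunks if c.allowed_roles & user_roles]
    return "\n".join(mask_pii(c.text) for c in visible)


chunks = [
    RetrievedChunk("Contact jane.doe@example.com or +1 (555) 010-2000 about order 42.",
                   frozenset({"support"})),
    RetrievedChunk("Internal margin notes.", frozenset({"finance"})),
]
print(build_context(chunks, {"support"}))
```

The order matters: entitlement filtering runs first so restricted text is never processed at all, and masking runs on whatever remains.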

Retrieval quality controls

- Chunking on semantic boundaries (headings/sections).

- Reranking to lift the best candidates.

- Citations with file names and sections so users can verify.
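Here is a minimal sketch of those three controls together. It assumes source documents mark headings with a leading `#`, which may not match your content; the term-overlap `rerank` is a stand-in for a real reranking model, and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    file_name: str
    section: str
    text: str


def chunk_by_headings(file_name: str, document: str) -> list[Chunk]:
    """Split a document into one chunk per heading-delimited section."""
    chunks: list[Chunk] = []
    section, lines = "Introduction", []

    def flush() -> None:
        body = "\n".join(lines).strip()
        if body:  # skip empty sections
            chunks.append(Chunk(file_name, section, body))

    for line in document.splitlines():
        if line.startswith("#"):
            flush()
            section, lines = line.lstrip("#").strip(), []
        else:
            lines.append(line)
    flush()
    return chunks


def rerank(query: str, chunks: list[Chunk], top_k: int = 2) -> list[Chunk]:
    """Toy reranker: order candidates by shared terms with the query."""
    terms = set(query.lower().split())
    return sorted(chunks,
                  key=lambda c: len(terms & set(c.text.lower().split())),
                  reverse=True)[:top_k]


def cite(chunk: Chunk) -> str:
    """Citation with file name and section so users can verify."""
    return f"[{chunk.file_name}, section: {chunk.section}]"
```

Chunking on sections instead of fixed character counts keeps each citation pointing at something a reader can actually open and check.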

Observe and improve

- Log prompts, retrieval sets, answers, ratings, and latency.

- Review weekly with a clear rubric: factual accuracy, citation coverage, policy compliance, tone, and cost per session.

- Maintain an offline test set for regression checks; track online signals like citation click-through and human-reviewed escalations.

A pragmatic customer chatbot that measures up

A straightforward use case ships fast and measures clearly. Ingest FAQs, resolved tickets, product guides, order policies, and CRM notes; build a retrieval layer with citations; add policy checks for regulated claims and PII; and provide a clean handoff to a human when confidence drops or access rules block an answer.

What it does in practice

- Understands intent and retrieves the right passages from the lake.

- Produces a concise answer with two or three citations.

- Enforces rules before showing the answer; if content is restricted, it summarizes public info and offers a human handoff.

- Logs every turn with sources and confidence.
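The decision behind the third bullet can be expressed as a small routing function. This is a sketch, not a prescribed design: the `0.7` threshold, the `Draft` fields, and the action names are all hypothetical and should be tuned against your own escalation data.

```python
from dataclasses import dataclass

CONFIDENCE_THRESHOLD = 0.7  # hypothetical cut-off; tune on reviewed sessions


@dataclass
class Draft:
    answer: str
    citations: list[str]
    confidence: float  # assumed to come from your confidence scoring step
    restricted: bool   # True if access rules blocked part of the content


def route(draft: Draft) -> str:
    """Decide what the chatbot does with a drafted answer."""
    if draft.restricted:
        return "summarize_public_and_offer_handoff"
    if draft.confidence < CONFIDENCE_THRESHOLD or not draft.citations:
        return "handoff_to_human"
    return "answer"
```

Treating a missing citation the same as low confidence is a deliberate choice here: an uncited answer cannot be verified, so it goes to a person.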

How I measure

- Containment rate: Percent of sessions solved without human help.

- Escalation accuracy: Percent of escalations the team agrees were needed.

- Cost per session: Model calls plus platform cost versus baseline.

- CSAT: Thumbs up/down or a short rating.
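These four measures are easy to compute from session logs. The sketch below assumes a hypothetical `Session` record and treats "not escalated" as a proxy for "solved without human help", which overstates containment if users simply abandon the chat.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Session:
    escalated: bool
    escalation_needed: Optional[bool]  # reviewer judgment; None if not escalated
    cost_usd: float                    # model calls plus platform cost
    thumbs_up: Optional[bool]          # None if the user gave no rating


def summarize(sessions: list[Session]) -> dict[str, Optional[float]]:
    """Compute containment, escalation accuracy, cost per session, and CSAT."""
    if not sessions:
        raise ValueError("need at least one session")
    total = len(sessions)
    escalated = [s for s in sessions if s.escalated]
    rated = [s for s in sessions if s.thumbs_up is not None]
    return {
        "containment_rate": (total - len(escalated)) / total,
        "escalation_accuracy": (
            sum(s.escalation_needed is True for s in escalated) / len(escalated)
            if escalated else None
        ),
        "cost_per_session": sum(s.cost_usd for s in sessions) / total,
        "csat": sum(bool(s.thumbs_up) for s in rated) / len(rated) if rated else None,
    }
```

Compare `cost_per_session` against the fully loaded cost of a human-handled ticket from your baseline to see whether the system is paying for itself.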

A mini build plan (no buzzwords)

- Start with the top ten intents and the docs that actually answer them.

- Clean obvious junk, tag sensitive fields, and chunk content.

- Build a small RAG stack with citations, policy checks, and human handoff.

- Test with agents first, then open to a small customer segment.

- Tweak chunking and prompts; trim context to keep latency low.

- Expand content only after the first set meets targets.

Practical next steps

- Run a short data readiness review to locate raw and curated content, identify sensitive fields, and pick three high-volume intents.

- Plan a two-week pilot with a small RAG pipeline, citations, and policy checks. Aim for ~15% deflection and ~20% handle-time cut on a controlled group.

- Create an evaluation rubric for factual accuracy, citations, policy compliance, tone, latency, and cost per session; score weekly and track trends.

- Prepare a go-live plan with guardrails, handoff criteria, alerting, logging, and a rollback path; expand reach when targets hold for two weeks.

Known risks and how I mitigate them

There are real risks; most are manageable with simple controls and clear ownership.

- Data quality: Bad source data slips into answers. Mitigate with curated tables, freshness checks, and required citations.

- Hallucinations: The model invents facts. Mitigate with strict retrieval-only prompts, answer length caps, and refusal rules outside scope.

- Prompt injection: Malicious content instructs the model. Mitigate with input filters, allow lists, and a policy layer that rejects unsafe instructions.

- Privacy and PII: Sensitive data leaks. Mitigate with masking before retrieval, field-level redaction, scoped indexes, and encrypted storage.

- Latency and cost: Large prompts and outputs drag. Mitigate with caching, context trimming, and smaller models for reranking/classification.

- Versioning and drift: Content changes. Mitigate with time-travel table formats, index refresh schedules, and model version tags.

- Evaluation gaps: Quality is hard to prove. Mitigate with offline golden sets plus online checks (answer ratings, citation click-rate, human-reviewed escalations).

- Safe fallback: When confidence is low, hand off to a human with retrieved context so they start ahead.

- Audit logs: Keep complete logs for prompts, retrieved chunks, and outputs linked to user identity and time.

When I anchor the investment to clear outcomes, govern the data, and enforce retrieval with citations, the path is repeatable - not magical. The return compounds quickly, and the team feels the difference without babysitting the system.
