If you lead a B2B service firm, you know the RFP grind: long hours, too many reviewers, and the uneasy feeling that speed may be undercutting quality. I want faster responses and better win rates without babysitting the process - and I want measurable gains, not warm promises. This is where LLMs for RFPs start to pay off. Not as a shiny toy, but as a practical engine for time-to-value, consistent messaging, and a steady lift in pipeline quality.

LLMs for RFPs: What They Do and Why It Matters

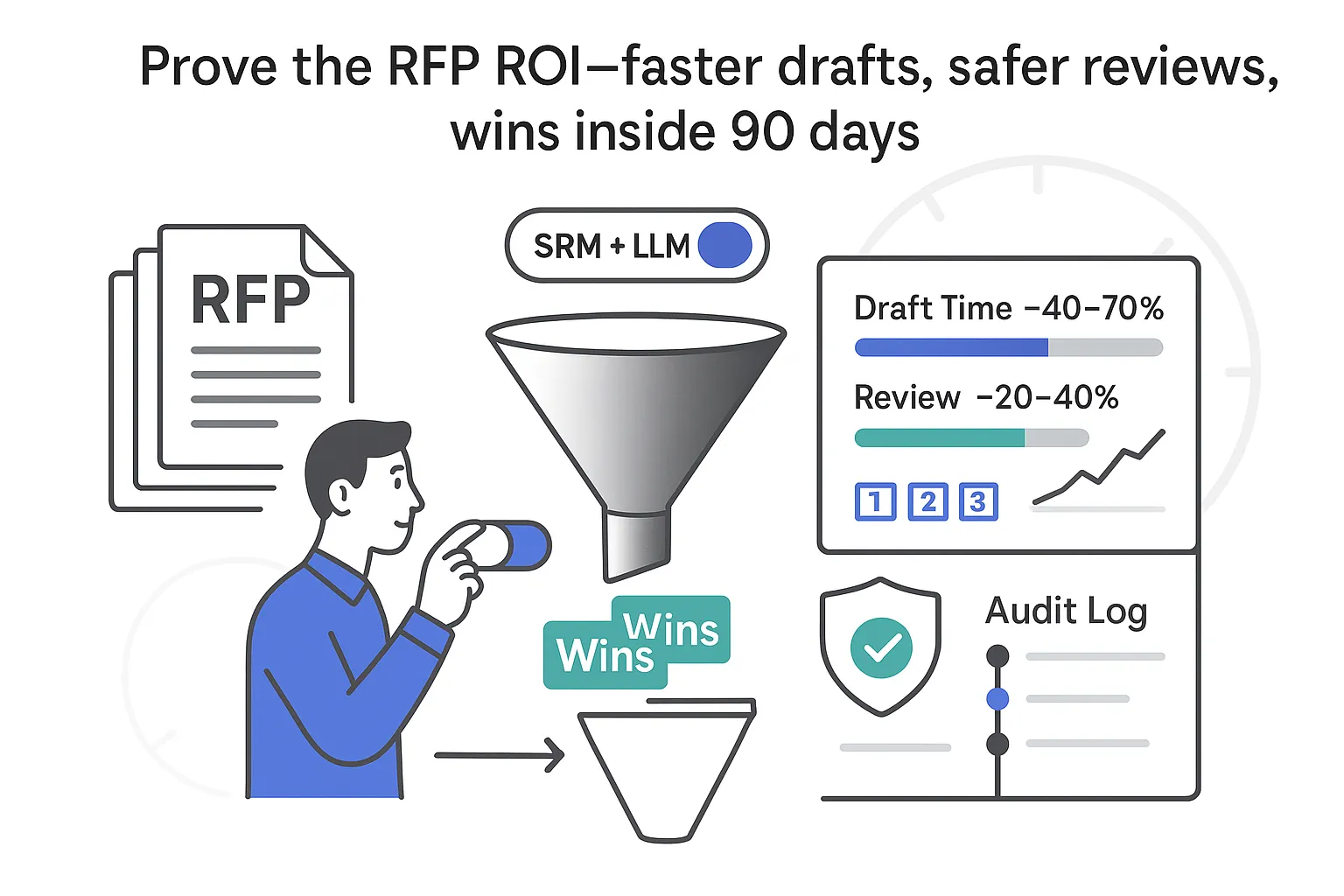

I start with outcomes. Teams using LLMs for RFPs commonly see first-draft creation times drop from days to hours, and sometimes to minutes for smaller question sets. Reviewer hours shrink when prompts, templates, and retrieval live inside a clear workflow. Cost per proposal falls because fewer high-value people get pulled into low-value copying and formatting.

In plain terms, a large language model (LLM) predicts the next word from the surrounding context, which is why its output reads naturally. Trained on large text corpora and guided by your content, it can turn past proposals, product notes, and compliance answers into consistent, on-brand drafts. It does not replace experts; it speeds them up and makes the work more predictable.

Think of Strategic Response Management (SRM) as the system that connects your content library, people, and timelines so hard-won knowledge gets reused across RFPs, RFIs, DDQs, and security questionnaires. LLMs plug into SRM to search, draft, and suggest edits based on sources you approve. The promise is simple: faster cycle times, steadier quality, and less chaos.

Metrics That Prove It

Here are a few numbers I track from week one, with ranges drawn from internal pilots and public benchmarks (APMP 2023, Loopio State of RFP 2024, RFPIO 2024, McKinsey 2023). Actuals vary by complexity, domain, and content maturity.

- First-draft time per section: down 40 to 70 percent

- Reviewer hours per RFP: down 20 to 40 percent with clear review gates

- Cost per proposal: down 25 to 50 percent when templates, retrieval, and style rules are enforced

- Win rate lift: often 5 to 15 percent over two to three quarters as messaging gets consistent and compliance tightens

That is the pain and the promise: less swivel-chair work, more repeatable wins.

Mapping LLMs to the RFP Workflow

Here is how LLM capabilities map to the workflow you already know.

- Intake: Auto-tag by industry, product scope, compliance needs, and due dates; route to the right squad based on a simple matrix to cut thread-hunting.

- Retrieval: Use retrieval-augmented generation (RAG) to pull facts from your approved knowledge base, past proposals, and policy docs; limit queries to verified sources to ground answers and reduce hallucinations.

- Drafting: Generate structured first drafts that fill standard sections with current messaging, pricing notes, and compliance language; insert variable fields for client name, region, SLAs, and integrations; lock these fields to prevent accidental edits.

- Review: Spin up reviewer tasks automatically so SMEs see only the sections they own; run style and tone checks before it hits legal or leadership to reduce late redlines.

- Approval: Enforce version control, change logs, and sign-off rules at final gates; export in the requested format without breaking templates or brand.

I also watch for small, high-leverage details: variable fields for fast customization, verified retrieval paths for accuracy, and review scopes that match SME accountability.
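The intake step above can be sketched in a few lines. This is a minimal illustration, assuming simple keyword rules and a made-up routing matrix; the tag names, keywords, and squad names are all hypothetical, and a production setup would more likely use a classifier or an LLM call for tagging.

```python
# Hypothetical keyword rules: tag -> phrases that suggest the tag applies.
TAG_KEYWORDS = {
    "security": ["soc 2", "encryption", "penetration test"],
    "data_privacy": ["gdpr", "personal data", "data subject"],
    "pricing": ["pricing", "rate card", "discount"],
}

# Hypothetical routing matrix: tag -> squad that owns it.
ROUTING_MATRIX = {
    "security": "security-squad",
    "data_privacy": "legal-squad",
    "pricing": "finance-squad",
}
DEFAULT_SQUAD = "proposal-desk"


def tag_question(text: str) -> list[str]:
    """Return every tag whose keywords appear in the question text."""
    lowered = text.lower()
    return sorted(
        tag for tag, words in TAG_KEYWORDS.items()
        if any(word in lowered for word in words)
    )


def route(text: str) -> list[str]:
    """Map tags to squads; untagged questions fall back to the default squad."""
    squads = {ROUTING_MATRIX[tag] for tag in tag_question(text)}
    return sorted(squads) or [DEFAULT_SQUAD]


print(route("Describe your encryption at rest and GDPR posture."))
print(route("What is your company history?"))
```

Routing to a squad rather than a named person keeps the matrix stable when people change roles.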

Enabling Non-Technical Teams and Trustworthy Retrieval

My sales and proposal teams do not need to be prompt engineers. Plain-language prompts work: “Write a 200-word response explaining our implementation timeline using the enterprise template with security citations.” Combine that with context packs and variable fields. The result is consistent drafts junior staff can assemble while experts focus on precision edits.
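Variable fields are easy to picture with a small sketch. The one below uses Python's standard `string.Template`; the template text, field names, and values are hypothetical, and "locking" is approximated here by passing a read-only mapping and failing loudly when a field is missing.

```python
from string import Template
from types import MappingProxyType

# Hypothetical template text; the $-placeholders are the variable fields.
IMPLEMENTATION_TEMPLATE = Template(
    "For $client_name in $region, we commit to a $sla_uptime uptime SLA "
    "and integrate with $integrations."
)


def fill_fields(template: Template, fields: dict[str, str]) -> str:
    """Fill every variable field; raise KeyError if one is missing."""
    locked = MappingProxyType(dict(fields))  # read-only view of the values
    return template.substitute(locked)


draft = fill_fields(
    IMPLEMENTATION_TEMPLATE,
    {
        "client_name": "Acme Corp",
        "region": "EU",
        "sla_uptime": "99.9%",
        "integrations": "Salesforce and Slack",
    },
)
print(draft)
```

Using `substitute` rather than `safe_substitute` is deliberate: a missing client name should stop the draft, not ship with a raw placeholder in it.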

RAG turns your LLM into a librarian with receipts. When the model only searches verified sources, people can ask targeted questions and get answers with citations. Example: “Show me SOC 2 language updated after June 2024 with references to backups and key management.” Speed matters, but speed with evidence sustains trust.
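To make the "librarian with receipts" idea concrete, here is a rough sketch of the retrieval constraint only. It assumes a tiny in-memory library and plain keyword matching as a stand-in for real search or embeddings, and it skips generation entirely; the `Snippet` fields, document IDs, and sample texts are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Snippet:
    doc_id: str     # hypothetical identifier, reused as the citation
    text: str
    updated: date
    verified: bool  # set by the content librarian, not by the model


def retrieve(terms: list[str], library: list[Snippet], updated_after: date) -> list[Snippet]:
    """Return verified snippets newer than the cutoff that mention every term."""
    hits = [
        s for s in library
        if s.verified
        and s.updated > updated_after
        and all(term.lower() in s.text.lower() for term in terms)
    ]
    return sorted(hits, key=lambda s: s.updated, reverse=True)


def answer(terms: list[str], library: list[Snippet], updated_after: date) -> dict:
    """Return grounded text with citations, or an explicit gap when nothing qualifies."""
    hits = retrieve(terms, library, updated_after)
    if not hits:
        return {"status": "gap", "text": None, "citations": []}
    return {
        "status": "grounded",
        "text": hits[0].text,
        "citations": [s.doc_id for s in hits],
    }


LIBRARY = [
    Snippet("soc2-2024-08", "Backups are encrypted; key management uses an HSM.", date(2024, 8, 1), True),
    Snippet("soc2-2023-02", "Backups run nightly; key management is manual.", date(2023, 2, 1), True),
    Snippet("draft-notes", "Backups and key management, unreviewed notes.", date(2024, 9, 1), False),
]

print(answer(["backups", "key management"], LIBRARY, date(2024, 6, 30)))
print(answer(["quantum"], LIBRARY, date(2024, 6, 30)))
```

The unverified note is newer than the approved snippet but never surfaces, and the second query returns a gap instead of a guess.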

Measurable checkpoints I monitor:

- Draft creation time per section

- Number of reviewer comments per round

- SME hours per proposal

- Approval cycle time

- Percentage of responses with citations

Collaboration, Roles, and Governance

LLMs for RFPs work best when the process is clear and the tools feel familiar. The goal is fewer status meetings and zero micromanagement.

- Roles and ownership: Proposal lead owns timelines and acceptance criteria; a content librarian maintains the knowledge base and archives old content; an SME pool (technical, legal, security, finance) owns specific sections; an approver handles final sign-off; an auditor monitors version history, metrics, and compliance notes.

- Version control and audit trails: Every draft, edit, and comment should be tracked. That trail protects you in security reviews and shows leadership where time goes. Keep a single source of truth for templates and glossaries so your voice stays consistent.

- Tool integrations: Connect to the document editor your team already uses for drafting and redlines, the project management system for due dates and workloads, and the chat platform for notifications and quick huddles. Use SSO for access control by role.

- Governance controls that keep quality high: Style guides embedded in the editor (voice, reading level, sentence length), templates for the industries you serve most, SME review gates triggered by topic tags (security, data privacy, pricing), and content-rot checks that flag outdated or duplicate entries.

- Review gates that scale: Define gate criteria by section and risk level; map each gate to a role, not a person; require citations for claims on security, privacy, or compliance; enforce a two-pass rule for legal and security sections; auto-archive content that fails periodic review.
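The review-gate rules in the last bullet can be expressed as a small lookup plus a check. This is a sketch under assumptions: the tag names, role names, and the `Section` shape are hypothetical, while the two-pass rule for legal and security and the citation requirement for security, privacy, and compliance follow the list above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Gate:
    role: str                        # gates map to roles, not people
    passes_required: int = 1
    citations_required: bool = False


# Hypothetical gate table keyed by topic tag.
GATES = {
    "security": Gate("security_sme", passes_required=2, citations_required=True),
    "legal": Gate("legal_sme", passes_required=2),
    "data_privacy": Gate("privacy_sme", citations_required=True),
    "compliance": Gate("compliance_sme", citations_required=True),
    "pricing": Gate("finance_sme"),
}


@dataclass
class Section:
    title: str
    tags: list[str]
    citations: list[str] = field(default_factory=list)
    approvals: dict[str, int] = field(default_factory=dict)  # role -> completed passes


def blocking_issues(section: Section) -> list[str]:
    """List everything that still blocks this section from final approval."""
    issues = []
    for tag in section.tags:
        gate = GATES.get(tag)
        if gate is None:
            continue  # untagged topics have no gate in this sketch
        if gate.citations_required and not section.citations:
            issues.append(f"{tag}: citation required")
        done = section.approvals.get(gate.role, 0)
        if done < gate.passes_required:
            remaining = gate.passes_required - done
            issues.append(f"{tag}: {gate.role} needs {remaining} more pass(es)")
    return issues


encryption = Section("Encryption", ["security"], approvals={"security_sme": 1})
print(blocking_issues(encryption))
```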

What LLMs Don’t Do - and How I Control Risk

LLMs are powerful, but they are not magic. Risk comes from poor setup and weak controls. I name the risk, then cap it with process and tech.

- Generalized responses lack specificity: Mitigate with domain-tuned prompts, industry templates, and RAG limited to approved sources; add client context packs so drafts speak to their environment and constraints.

- Inconsistent quality and tone: Mitigate with in-editor style and tone guides, brand glossaries, and reading-level checks; use SME gates for high-risk sections.

- Weak collaboration scaffolding: Mitigate by connecting LLM drafting to your document editor, PM system, and chat; enforce version control and approvals with audit logs.

- Manual customization drag: Mitigate with variable fields for names, regions, SLAs, and integrations; constrain retrieval to the latest compliance FAQs and policy snippets; keep human-in-the-loop reviews where risk is high.

This is not about trusting AI blindly. It is about giving it boundaries and giving people clear jobs.

ROI You Can Defend

I prefer a simple, honest model to quantify value.

- Value pillars: industry-specific expertise (anchored by context libraries and compliance FAQs), consistency and cohesion (style rules, glossary, and templates), collaboration and workflow management (roles, SLAs, integrations), tailored customization with automation (templates plus variable fields), and increased efficiency (before/after benchmarks across drafting, review, and approval).

- Simple ROI model: hours saved per RFP (drafting down 40–70 percent, review down 20–40 percent), multiplied by your blended hourly rate across proposal managers and SMEs, minus tool and integration costs.

- Example: Baseline 60 total hours per midsize RFP at a blended 120 dollars per hour is 7,200 dollars per proposal. After implementation, 32–40 total hours brings cost to 3,840–4,800 dollars, a saving of 2,400–3,360 dollars per proposal. At 8 RFPs per month, that is 19,200–26,880 dollars saved monthly, before subtracting tool and integration costs and not counting win-rate lift.

- Time-to-value timeline: Days 1–30 import past proposals, set templates, add context libraries, connect SSO and your PM tool, and run the first two RFPs with guided review gates. Days 31–60 expand SME gates, finalize style rules, roll out variable fields for pricing and SLAs, and set content-rot checks. Days 61–90 publish before/after metrics, tune prompts by industry, and extend to security questionnaires and DDQs.

Even conservative assumptions typically show payback within a quarter.
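The ROI arithmetic above is simple enough to keep in a small script so you can plug in your own hours and rates. The function and parameter names are mine, and the 3,000-dollar monthly tool cost in the last line is a made-up placeholder, not a quoted price.

```python
def monthly_savings(
    baseline_hours: float,
    after_hours: float,
    hourly_rate: float,
    rfps_per_month: int,
    monthly_tool_cost: float = 0.0,
) -> float:
    """Hours saved per RFP times blended rate times volume, minus tool cost."""
    saved_per_rfp = (baseline_hours - after_hours) * hourly_rate
    return saved_per_rfp * rfps_per_month - monthly_tool_cost


# Figures from the example: 60 baseline hours, 32-40 hours after, 120 dollars/hour, 8 RFPs/month.
low = monthly_savings(60, 40, 120, 8)
high = monthly_savings(60, 32, 120, 8)
print(low, high)  # 19200.0 26880.0

# Same range net of a hypothetical 3,000-dollar monthly tool cost.
print(monthly_savings(60, 40, 120, 8, 3000), monthly_savings(60, 32, 120, 8, 3000))  # 16200 23880
```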

Security and Data Privacy Expectations

Security is table stakes. I expect these controls from any AI RFP solution that touches client data or internal knowledge.

- Certifications and controls: SOC 2 Type II, ISO 27001, SSO support, role-based access control with least-privilege defaults, data residency options (US/EU/region of choice), encryption in transit (TLS 1.2+) and at rest (AES-256).

- Data handling: Retention controls by workspace with clear purge options, redaction of PII before storage or model input, zero-retain model choices where prompts and outputs are not used for training, private model or on-prem options when required, and managed key services or HSMs for key management.

- Governance and procurement: NDA workflows, vendor DPA coverage (subprocessors, residency, incident SLAs), annual third-party penetration tests with remediation tracking, audit logs for access/edits/exports/approvals, and legal holds for discovery.

- Practical procurement checks: Validate SOC 2 Type II and ISO 27001 reports with dates and scope; confirm data residency and zero-retain options in writing; review the RBAC model and how permissions map to your org chart; ask for pen-test summaries and remediation timelines; inspect audit log exports and retention policies; verify SSO, provisioning, and MFA; test PII redaction on sample RFPs before rollout.
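For the last procurement check, I find it useful to scan a tool's redacted output for anything that still looks like PII. The sketch below is a rough spot check, not a complete detector: the two regular expressions only cover email addresses and one common phone format, and both patterns are my own assumptions rather than any vendor's rules.

```python
import re

# Assumed patterns for a quick spot check; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def find_pii(text: str) -> dict[str, list[str]]:
    """Return PII-looking strings that remain in the text, grouped by type."""
    found = {}
    for label, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            found[label] = matches
    return found


# Hypothetical output from a redaction step that missed the phone number.
redacted_sample = "Contact [EMAIL] or 555-123-4567."
print(find_pii(redacted_sample))  # {'phone': ['555-123-4567']}
```

An empty result on a sample set is weak evidence, not proof, so I would still ask the vendor how redaction is implemented and tested.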

Ethics That Build Trust

Ethics are not theory here; they protect your brand and your buyers.

- Disclosure in proposals: Include a short note stating that AI-assisted drafting is used with human review.

- Avoid hallucinations: Use retrieval constraints with grounded citations; if there is no source, the model should return a gap, not a guess.

- Bias testing: Review your knowledge base for biased or outdated examples and run spot checks on sensitive topics. For context on model bias risks, see reporting on bias in the Gemini AI model’s responses.

- Human oversight: Require SME sign-off on legal, security, privacy, and pricing sections.

- Record-keeping: Keep citations, prompts, and review notes for audit.

Sample disclosure note: This proposal includes AI-assisted drafting constrained to our approved knowledge base and templates. All responses that reference security, privacy, compliance, or pricing have been reviewed and approved by the appropriate subject matter experts. Citations are provided where applicable. No client data was used to train external models.

That single paragraph heads off questions and builds trust.

Final Thoughts and FAQs

LLMs for RFPs can feel like a contradiction: fast, yet dependent on clear guardrails; powerful, yet only as strong as the process around them. Set boundaries, wire them into your workflow, and measure the results. Mitigate limitations. Enforce review gates. Prove ROI with hours and dollars. Secure the data. Govern the ethics. Many teams start with a small set of live RFPs in the first 30 days, track cycle time, win rate, and reviewer hours saved, then expand once the gains are visible.

Q: How hard is it to switch from the current RFP process?

A: Migration usually takes 2 to 4 weeks. Week 1 covers SSO setup, importing past proposals, and seeding the knowledge base. Week 2 adds templates, variable fields, and style rules. Weeks 3 and 4 roll out reviewer gates and content-rot checks. Most teams keep working during the cutover because drafting and review stay in the document editor and PM system you already use.

Q: What security practices should I expect from an AI RFP solution?

A: Look for SOC 2 Type II, ISO 27001, SSO, RBAC, data residency options, and zero-retain settings. Require a signed DPA, annual pen tests, encryption in transit and at rest, PII redaction, and full audit logs. Confirm provisioning and MFA support, and ask for proof before moving any live data.

Q: How much time will I actually save with LLMs?

A: Typical ranges are 40 to 70 percent faster drafting and 20 to 40 percent less reviewer time, with higher savings on repetitive sections like company overviews, security, and compliance FAQs. Complex, bespoke deals save less on drafting but still gain from verified retrieval, templates, and cleaner reviews. Plug your hours into the ROI model above to quantify monthly impact.
