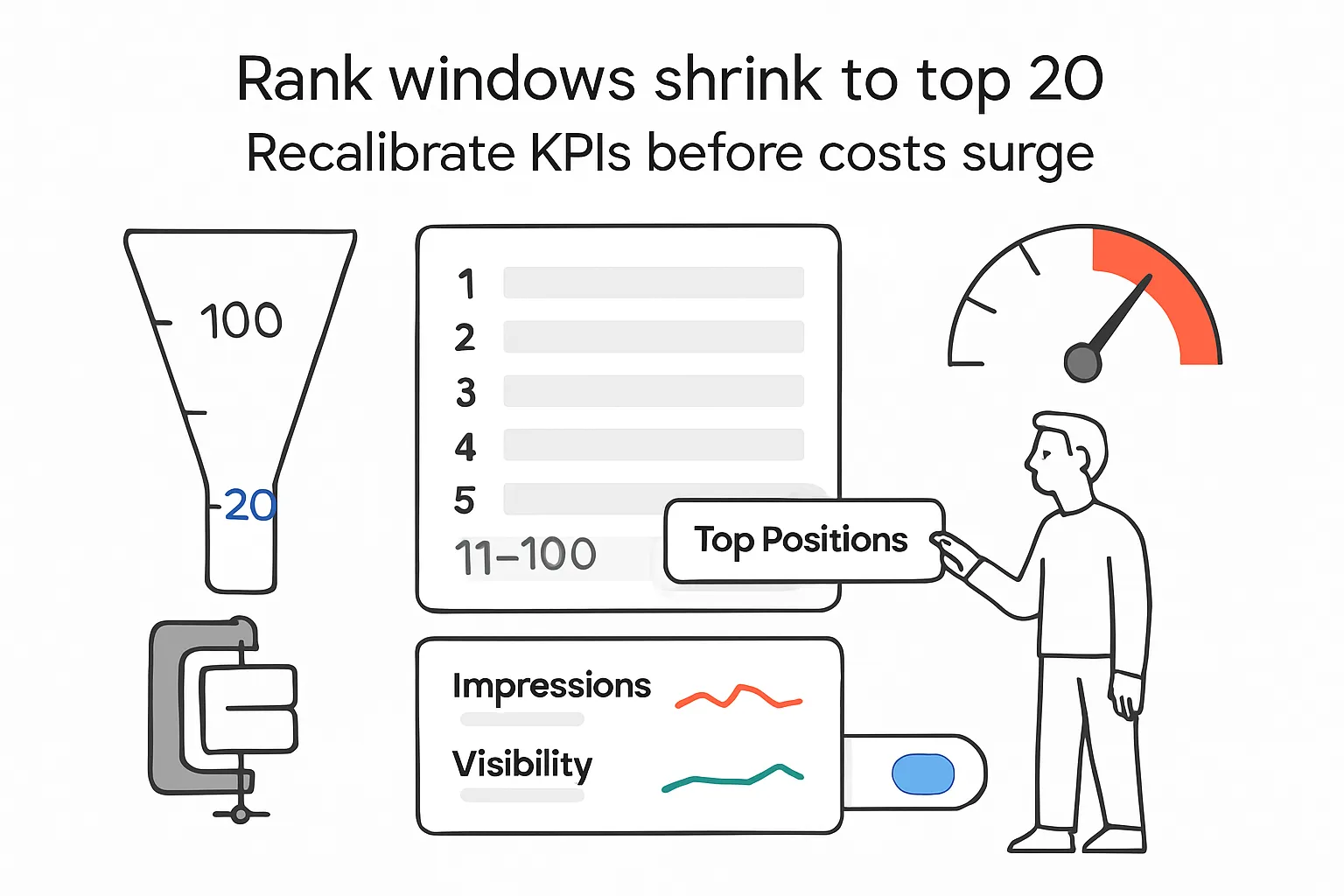

Google’s removal of the num=100 parameter compresses third-party rank tracking to the top 10-20, drives roughly 10x more requests to reconstruct a full top-100 view, and coincides with lower Google Search Console (GSC) impressions. The practical shift: rebase SEO KPIs around position buckets, SERP feature share, and on-site outcomes rather than long-tail rank counts.

Rank tracking after Google disables num=100

Google disabled a query parameter (num=100) that let tools fetch 100 organic results per query. That single change forces rank trackers to either make about 10x more requests to collect the same top-100 view or accept a narrower window focused on the top 10-20. At the same time, practitioners report marked drops in GSC impressions and unique queries around the change, suggesting past measurements overweighted page-3+ visibility that rarely drove clicks.

Net effect: rank tracking concentrates on the parts of the SERP that move traffic and revenue, while deep-SERP visibility becomes a premium add-on instead of table stakes.

Key Takeaways

- Expect a market shift to top-10/20 rank coverage; positions 21-100 become a paid premium tier or disappear. Plan coverage and budgets accordingly.

- Rebase SEO KPIs to position buckets (P1-3, 4-10, 11-20), SERP feature share, and session outcomes; de-emphasize long-tail keyword counts.

- Annotate reporting: mark the num=100 change date on GSC-based charts and dashboards so impression declines are not read as demand loss.

- Concentrate effort on page-2 to page-1 lifts; positions 21-100 carry near-zero traffic and weak signal for prioritization.

- Vendor consolidation likely: tools that fund deep crawling may sell access to others; negotiate API or sampling terms early.

Situation Snapshot

- Event: Google removed the num=100 parameter used by SEO tools to pull 100 organic results per query. To rebuild top-100 coverage, tools now need about 10x more paginated requests per keyword per location and device.

- Vendor reactions: SpyFu says it will try to keep top-100 tracking alive, noting in a LinkedIn post from Mike Roberts that the cost could be unsustainable without ecosystem support. Ahrefs’ CMO Tim Soulo has argued that the future is top-10/20, with positions 21-100 offering limited actionability.

- GSC shifts: An analysis of 300+ properties reports that 87.7% saw impression drops and 77.6% saw fewer unique queries after the change, as shared by Tyler Gargula on LinkedIn.

- Anti-scraping posture: Reporting indicates Google is investing in modeling and countermeasures against SERP scraping.

Undisputed: num=100 is disabled; scraping costs rise; vendors are reassessing; many accounts show lower GSC impressions. Causality between scraping and GSC metrics is suggested in reports but not officially confirmed.

Breakdown & Mechanics

Data economics

- Before: one fetch could retrieve 100 results (num=100). After: default is about 10 per page. To keep top-100, tools must perform 10 paginated fetches per keyword per location and device.

- Cost model (assumption): Cost ≈ Q × P × R × C, where Q = keyword-location-device combinations, P = pages fetched per SERP (moving from one 100-result fetch to 10-result pages raises P from 1 to 10 for top-100 depth), R = refresh rate, and C = marginal cost per fetch (IPs, parsing, anti-bot losses). Even with C at a small fraction of a cent, total opex scales roughly linearly with P and R.
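The cost model above can be sketched as a small calculator. This is a minimal illustration, not any vendor's pricing logic. The portfolio size, refresh cadence, and the `cost_per_fetch` value are hypothetical inputs, and `results_per_page` assumes about 10 organic results per fetch now versus 100 under num=100.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackingPlan:
    """Inputs to the cost model: Cost ≈ Q × P × R × C (all values hypothetical)."""
    keywords: int
    locations: int
    devices: int
    depth: int                  # deepest position to track, e.g. 20 or 100
    refreshes_per_month: int    # R
    cost_per_fetch: float       # C: assumed marginal cost per request
    results_per_page: int = 10  # assumption: ~10 organic results per fetch

    def combos(self) -> int:
        # Q: keyword x location x device combinations
        return self.keywords * self.locations * self.devices

    def pages(self) -> int:
        # P: paginated fetches needed to reach `depth`
        return math.ceil(self.depth / self.results_per_page)

    def fetches_per_month(self) -> int:
        return self.combos() * self.pages() * self.refreshes_per_month

    def monthly_cost(self) -> float:
        return self.fetches_per_month() * self.cost_per_fetch


if __name__ == "__main__":
    # Hypothetical portfolio: 10,000 keywords x 2 locations x 2 devices, daily refresh.
    base = dict(keywords=10_000, locations=2, devices=2,
                refreshes_per_month=30, cost_per_fetch=0.002)
    plans = {
        "legacy top-100 (num=100)": TrackingPlan(depth=100, results_per_page=100, **base),
        "paginated top-100": TrackingPlan(depth=100, **base),
        "top-20": TrackingPlan(depth=20, **base),
    }
    for label, plan in plans.items():
        print(f"{label}: {plan.fetches_per_month():,} fetches, "
              f"${plan.monthly_cost():,.0f}/month")
```

With these inputs, paginated top-100 needs 12,000,000 fetches a month against 1,200,000 under num=100. That is the 10x multiplier. A top-20 window needs 2,400,000.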

Signal quality

- Most clicks happen in the top 10; page-2+ carries very low cumulative CTR. Actionable SEO decisions typically come from movements within P1-20, not P21-100.

- Practical read: a rank of 21 and a rank of 91 usually mean the same thing, "indexed but not competitive", and rarely change prioritization. Positions 11-20, by contrast, signal a near-fit where content, UX, or intent matching can move the needle.
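The sketch below maps a tracked position to the buckets this article uses, assuming simple thresholds. Decimal GSC average positions fall through the same cutoffs, so a value like 10.4 lands in P11-20. That boundary choice is an assumption, not a standard.

```python
from typing import Optional


def position_bucket(position: Optional[float]) -> str:
    """Map an organic position to reporting buckets (thresholds are assumptions).

    `None` means the URL was not found within the tracked depth.
    """
    if position is None:
        return "not found"
    if position < 1:
        raise ValueError("organic positions start at 1")
    if position <= 3:
        return "P1-3"
    if position <= 10:
        return "P4-10"
    if position <= 20:
        return "P11-20"  # near-fit: content, UX, or intent work may move it
    return "P21+"        # "indexed but not competitive"; 21 and 91 look alike


if __name__ == "__main__":
    for pos in (2, 7, 14, 21, 91, None):
        print(pos, position_bucket(pos))
```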

GSC metrics interaction

Reported pattern: Impression counts and unique queries declined after num=100 was removed. Two non-exclusive mechanisms could explain it:

- (A) Widespread scraping with num=100 loaded 100 results per request, so automated queries may have registered impressions for many low-ranking URLs, inflating impression tallies for page-3+ positions.

- (B) Changes to pagination or infinite scroll tied to num=100 altered what GSC counts as an impression for deeper ranks.

Caution: Google has not confirmed causality; treat the correlation as a prompt to re-benchmark, not proof of past data error.
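One way to re-benchmark your own property is to check where its impression decline concentrates. Both mechanisms (A) and (B) predict losses mostly at deep positions, so this check cannot tell them apart or prove causality. It only shows whether your drop fits the reported pattern. The sketch assumes you have exported per-query impressions for equal-length windows before and after the change. `QueryRow` and `deep_share_of_drop` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    query: str
    avg_position: float       # average position in the pre-change window
    impressions_before: int   # equal-length windows before/after the change
    impressions_after: int


def deep_share_of_drop(rows: list[QueryRow], depth_cutoff: float = 20.0) -> float:
    """Share of the gross impression decline (summed over queries that lost
    impressions) that comes from queries ranked deeper than `depth_cutoff`."""
    total_drop = 0
    deep_drop = 0
    for row in rows:
        drop = row.impressions_before - row.impressions_after
        if drop <= 0:
            continue  # queries that held steady or grew are excluded
        total_drop += drop
        if row.avg_position > depth_cutoff:
            deep_drop += drop
    return deep_drop / total_drop if total_drop else 0.0
```

A share close to 1.0 means the decline sits almost entirely below position 20. In that case, clicks from those queries are unlikely to have moved much.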

Summary flow

num=100 removal -> 10x crawl cost -> tools narrow to top-20 -> fewer recorded deep-SERP appearances -> GSC impression and query counts drop -> marketers recalibrate KPIs to position buckets and on-site outcomes.

Impact Assessment

Organic Search

- Direction: Measurement compresses to top-10/20; deep-SERP visibility becomes sparse.

- Effect size: High for teams reliant on top-100 dashboards and keyword volume; moderate for programs already focused on page-1 share.

- Winners: Sites with strong page-1/2 presence; teams geared to 11-20 -> 1-10 lifts.

- Losers: Strategies built on counting thousands of low-rank keywords; vendors selling depth without new economics.

- Actions:

  - Pivot KPIs to position buckets and share-of-page-1, including SERP features.

  - Build a page-2 pipeline: intent match checks, UX gaps, content completeness, internal links.

  - Annotate the change date in analytics; compare clicks and conversions, not impressions, to gauge real impact.
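A minimal sketch of the comparison described in the last action: compute the percent change in each metric over equal windows before and after a change date. The change date is a parameter you supply. None is hard-coded here, and `DailyMetrics` and `pct_change_around` are hypothetical names for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class DailyMetrics:
    day: date
    impressions: int
    clicks: int
    conversions: int


def pct_change_around(rows: list[DailyMetrics], change_date: date,
                      window_days: int = 28) -> dict[str, float]:
    """Percent change per metric: [change - window, change) vs [change, change + window)."""
    before_start = change_date - timedelta(days=window_days)
    after_end = change_date + timedelta(days=window_days)
    before = [r for r in rows if before_start <= r.day < change_date]
    after = [r for r in rows if change_date <= r.day < after_end]
    result: dict[str, float] = {}
    for metric in ("impressions", "clicks", "conversions"):
        b = sum(getattr(r, metric) for r in before)
        a = sum(getattr(r, metric) for r in after)
        # NaN when there is no pre-change baseline to compare against
        result[metric] = (a - b) / b * 100 if b else float("nan")
    return result
```

If impressions fall sharply while clicks and conversions hold roughly flat, that pattern fits a measurement shift more than a loss of demand.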

Paid Search

- Direction: Minimal direct effect; indirect budget pressure is possible if SEO appears to lose impressions in reports.

- Effect size: Low near term; moderate if finance reallocates on misread KPIs.

- Actions:

  - Align narratives: explain that impression declines likely reflect measurement shifts, not demand collapse.

  - Use PPC query insights to inform SEO for high-value intents where organic sits at 11-20.
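The PPC-to-SEO handoff can be sketched as a join between paid conversions per search term and organic positions per query. The result keeps queries that convert in paid search while ranking 11-20 organically. The sketch assumes both exports can be matched after lowercasing and collapsing whitespace. Real exports may need more normalization. Function names are hypothetical.

```python
def normalize(query: str) -> str:
    # Assumption: the two exports differ only in case and whitespace.
    return " ".join(query.lower().split())


def paid_informed_targets(
    paid_conversions: dict[str, float],
    organic_positions: dict[str, float],
    low: float = 11,
    high: float = 20,
) -> list[tuple[str, float, float]]:
    """Queries that convert in paid search while organic sits in [low, high],
    returned as (query, paid_conversions, organic_position), best first."""
    organic = {normalize(q): pos for q, pos in organic_positions.items()}
    targets = []
    for query, conversions in paid_conversions.items():
        key = normalize(query)
        pos = organic.get(key)
        if pos is not None and low <= pos <= high and conversions > 0:
            targets.append((key, conversions, pos))
    return sorted(targets, key=lambda t: t[1], reverse=True)
```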

Analytics & Reporting

- Direction: GSC impression and unique-query counts run lower; rank trackers show fewer deep results or sell them as premium add-ons.

- Effect size: High for dashboarding and goal setting.

- Actions:

  - Freeze pre-change baselines; create new post-change baselines.

  - Standardize metrics: P1-3, P4-10, P11-20 coverage; SERP feature occupancy; organic sessions and revenue by intent cluster.

  - If deeper ranks must be monitored, sample high-value clusters rather than full portfolios to contain cost.
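Sampling high-value clusters for deep monitoring can be framed as choosing clusters under a fetch budget. The sketch below uses a greedy value-per-fetch heuristic, not an optimal knapsack solution. It assumes going from top-20 to top-100 adds 8 paginated fetches per keyword per refresh (2 pages to 10 pages at 10 results each). The `value` score is a hypothetical input, such as revenue at stake per cluster.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    name: str
    keywords: int
    value: float  # hypothetical business value score for the cluster


def pick_deep_clusters(clusters: list[Cluster], extra_fetch_budget: int,
                       extra_pages_per_keyword: int = 8) -> list[str]:
    """Greedily pick clusters for top-100 tracking by value per extra fetch.

    Budget and costs are counted per refresh cycle for one location/device.
    """
    def extra_cost(c: Cluster) -> int:
        return c.keywords * extra_pages_per_keyword

    ranked = sorted(clusters,
                    key=lambda c: c.value / max(extra_cost(c), 1),
                    reverse=True)
    chosen, remaining = [], extra_fetch_budget
    for c in ranked:
        if extra_cost(c) <= remaining:
            chosen.append(c.name)
            remaining -= extra_cost(c)
    return chosen
```

Greedy selection keeps the logic easy to explain in a budget review. When budgets are tight and clusters vary widely in size, an exact knapsack solver may pick a better set.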

Vendor Strategy & Ops

- Direction: Pricing models and APIs shift; some tools form data-sharing alliances.

- Effect size: Moderate to high depending on reliance on top-100.

- Actions:

  - Renegotiate SLAs to specify position coverage and refresh cadence, for example a guaranteed top-20.

  - Evaluate the cost of deep-SERP add-ons vs business impact; cut non-essential depth.
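Once an SLA specifies depth and refresh cadence, you can check deliveries against it. The sketch assumes each delivered record reports the deepest position returned and a fetch timestamp. Vendor exports may not expose these fields under these names, and all names here are hypothetical. A shallow result can be legitimate when a SERP has fewer organic results, so treat depth flags as prompts to review.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class SlaTerms:
    min_depth: int = 20                       # e.g. a guaranteed top-20
    max_age: timedelta = timedelta(hours=24)  # refresh cadence


@dataclass
class Delivery:
    keyword: str
    depth_returned: int   # deepest position the vendor returned
    fetched_at: datetime


def sla_breaches(deliveries: list[Delivery], terms: SlaTerms,
                 now: datetime) -> list[str]:
    """List human-readable depth and freshness breaches."""
    breaches = []
    for d in deliveries:
        if d.depth_returned < terms.min_depth:
            breaches.append(f"{d.keyword}: depth {d.depth_returned} < {terms.min_depth}")
        if now - d.fetched_at > terms.max_age:
            breaches.append(f"{d.keyword}: data older than {terms.max_age}")
    return breaches
```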

Content & CRO

- Direction: Greater focus on meeting intent and UX for terms where you are near page-1.

- Actions:

  - Prioritize updates where you sit 11-20 and the term has commercial value.

  - Pair content refresh with SERP intent audits and CRO experiments to convert incremental rank gains into revenue.
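Prioritizing 11-20 terms by commercial value can be sketched as expected incremental value from a page-2 to page-1 lift. No default CTR curve is assumed. You supply `ctr_by_position` from your own data, such as GSC clicks divided by impressions by position. The candidate tuples and value-per-click figures are hypothetical inputs.

```python
def incremental_clicks(monthly_volume: int, current_pos: int, target_pos: int,
                       ctr_by_position: dict[int, float]) -> float:
    """Extra monthly clicks if a query moves from current_pos to target_pos.

    ctr_by_position must come from your own data; a missing position raises KeyError.
    """
    return monthly_volume * (ctr_by_position[target_pos] - ctr_by_position[current_pos])


def prioritize(candidates: list[tuple[str, int, int, float]],
               ctr_by_position: dict[int, float],
               target_pos: int = 10) -> list[tuple[str, float]]:
    """Rank (query, monthly_volume, current_pos, value_per_click) candidates
    sitting at 11-20 by estimated incremental value, highest first."""
    scored = []
    for query, volume, pos, value_per_click in candidates:
        if 11 <= pos <= 20:
            gain = incremental_clicks(volume, pos, target_pos, ctr_by_position)
            scored.append((query, gain * value_per_click))
    return sorted(scored, key=lambda s: s[1], reverse=True)
```

Targeting position 10 is a conservative default. Raising `target_pos` toward the top 3 increases the estimate but assumes a bigger lift.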

Scenarios & Probabilities

- Base case (Likely): Industry standardizes on top-10/20 rank tracking; deep-SERP data is sold as premium. GSC impressions remain lower vs pre-change baselines. Budgets shift slightly from data collection to analysis and on-site improvements.

- Upside case (Possible): A few vendors absorb cost and resell depth via API; sampling and modeling fill gaps for 21-100 at lower cost. Marketers get optional depth without material price hikes on core plans.

- Downside case (Edge): Google escalates anti-scraping controls, limiting even top-20 depth or freshness. Rank tracking latency rises; reliance on GSC and panel-based estimates grows; reporting gets noisier.

Risks, Unknowns, Limitations

- Causality: The link between num=100 and GSC impression changes is correlational; Google has not published a causal explanation.

- Data scope: The 300+ property analysis is sizable but not universal; verticals with heavy news or topical flux may behave differently.

- Vendor heterogeneity: Tools differ in crawl methods, anti-bot success, and cache use; experiences will vary.

- Policy changes: If Google ships an official ranking distribution API or alters impression definitions, this analysis will need revision.

- Measurement artifacts: Infinite scroll, personalization, and SERP feature density can skew both rank and impression interpretations across devices.

Sources

- Search Engine Journal (R. Montti), Sep 2025 - "The Future Of Rank Tracking Can Go Two Ways."

- Search Engine Journal, Sep 2025 - "Google Modifies Search Results Parameter Affecting SEO Tools."

- Mike Roberts (SpyFu), Sep 2025, LinkedIn post - "Top 100 doesn't have to die..."

- Tim Soulo (Ahrefs), Sep 2025, X/Twitter post - Thoughts on top-20 focus and actionability below 20.

- Tyler Gargula, Sep 2025, LinkedIn update - Analysis of 300+ GSC properties showing impression and query count declines.
