If I want organic growth that feels repeatable instead of random, I start with structure. Website sitemaps may look boring next to splashy campaigns, but they quietly speed up discovery, cut crawl waste, and make reporting unambiguous. For B2B service leaders, that combo turns into pipeline I can forecast. I keep it simple, practical, and yes - human.

Why are website sitemaps important?

If you are a CEO or founder, outcomes matter. Website sitemaps help search engines find and understand your most important pages faster, which means:

- Quicker visibility for high-value service pages and case studies

- Reduced crawl waste so bots spend time on what drives revenue

- Faster discovery of new content, launches, or rebrands

- Clearer accountability in Google Search Console, tied to measurable KPIs

Tie that to pipeline and it gets real. When website sitemaps mirror your portfolio and buyer journey, I can track:

- Pages submitted vs pages indexed for each service line

- Time to index after publishing

- Index coverage by funnel stage

- Impressions and clicks on bottom-of-funnel pages where deals start

Beyond the numbers, website sitemaps bring order to complex sites. They help organize large architectures, improve search engine crawling and visibility, surface indexing issues, and even reinforce UX by acting as a structured directory. They also function as a project artifact teams can agree on. No more guessing which URLs count. No more mystery around what should be discoverable.

Here’s a B2B twist I use a lot: segment sitemaps by revenue-driving themes like service lines, industries, use cases, locations, resources, and case studies. Now you can monitor indexation and growth by pipeline stage. If your bottom-of-funnel sitemap lags on coverage, fix that first. It’s dependable prioritization.

What is a sitemap?

A sitemap is a machine-readable file that lists the canonical URLs you want search engines to discover and understand. It tells crawlers which pages matter, when they changed, and how your site is organized. It helps discovery; it doesn’t grant rankings by itself (per Google, sitemaps are a discovery hint, not a ranking signal).

There are three things people often mix up:

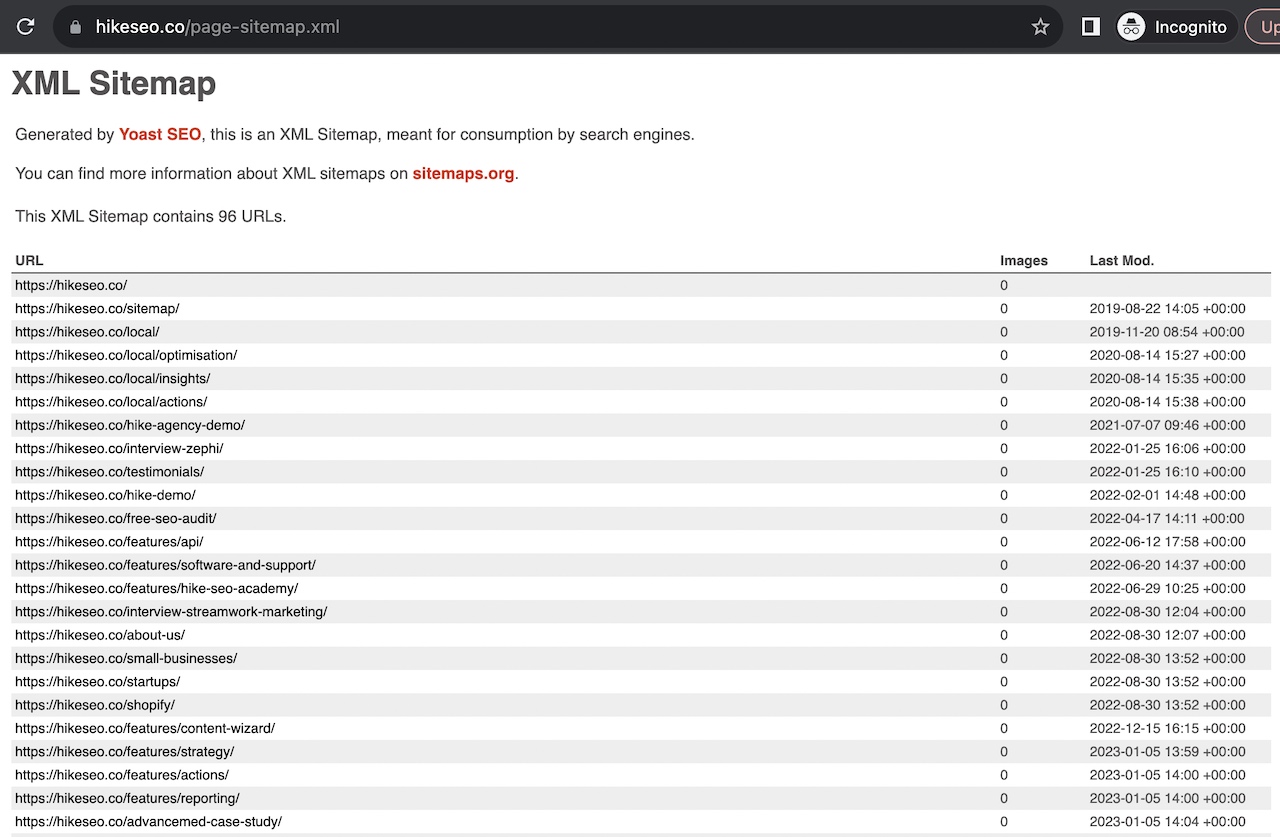

- XML sitemap: made for bots, not humans. This is the primary file that search engines read.

- HTML sitemap: a normal web page that lists links for users. Helpful on large or complex sites as an extra path to content.

- Visual IA map: a planning diagram for your team that shows how content groups connect. It’s not read by search engines, but it should influence how you build your XML files.

Sitemaps matter most when you have lots of URLs, a deep architecture, frequent updates, a new domain with few backlinks, rich media like images or video, multilingual content with hreflang, or multiple subdomains. In B2B, add triggers like multiple service lines, industry pages, a resource library, gated assets, or regional pages. If your site is small and well linked internally, you might be fine without one - but most growing service businesses benefit from the clarity a sitemap brings.

Types of website sitemaps

Each type has a job (see Google’s overview of Sitemaps):

- XML sitemap: the core file for pages you want indexed

- HTML sitemap: a human-friendly page to aid discovery on very large sites

- Image sitemap: points to image URLs and details like captions or licensing; useful for optimizing images on a website

- Video sitemap: adds metadata like duration and age rating

- News sitemap: used by publishers to flag fresh news content

- RSS or Atom feed: not a sitemap per se, but can help discovery on frequently updated blogs

- Sitemap index: a parent file that lists multiple child sitemaps

When to use which:

- Lots of images or a media library: add image sitemaps

- Many videos or webinars: use video sitemaps

- News or near-daily publishing: consider a news sitemap

- More than 50,000 URLs or multiple content families: use a sitemap index to group them cleanly

XML sitemaps

A clean XML setup pays off. I follow these rules to avoid the most common headaches:

- Include only canonical, indexable URLs that return 200 OK

- Use absolute HTTPS URLs and a consistent format

- Cap each sitemap at 50,000 URLs or 50 MB uncompressed and gzip if large

- Use a sitemap index to split by content type or business theme

- Add lastmod only when content meaningfully changes (Google treats it as a hint)

- Skip changefreq and priority (most modern crawlers ignore them)

- Exclude noindex pages, pages blocked by robots.txt, redirects, 4xx and 5xx URLs, duplicate variants, and URLs with UTM or filter parameters

- Normalize trailing slashes and letter case to avoid near duplicates

- Reference your sitemap location in robots.txt with a full URL

- Create separate sitemaps for subdomains when used
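Several of these rules boil down to URL normalization. Here is a minimal Python sketch of that step, under a few assumptions of mine: the site serves everything over HTTPS, the tracking parameters listed are the ones you use, and paths whose last segment contains a dot are files that should not get a trailing slash. The function name `normalize_url` and the constants are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical tracking parameters to strip; extend for your own stack.
TRACKING_PREFIXES = ("utm_",)
TRACKING_KEYS = {"gclid", "fbclid"}

def normalize_url(url: str, trailing_slash: bool = True) -> str:
    """Return an absolute HTTPS URL with one consistent format."""
    parts = urlsplit(url)
    host = parts.netloc.lower()  # hostnames are case-insensitive
    path = parts.path or "/"
    # Apply one trailing-slash policy; leave file-like paths (e.g. .pdf) alone.
    last_segment = path.rsplit("/", 1)[-1]
    if trailing_slash and not path.endswith("/") and "." not in last_segment:
        path += "/"
    elif not trailing_slash and path != "/" and path.endswith("/"):
        path = path.rstrip("/")
    # Drop tracking parameters; keep any other parameter in its original order.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_KEYS
        and not key.lower().startswith(TRACKING_PREFIXES)
    ]
    # Assumption: the site is HTTPS-only, so the scheme is forced to https.
    return urlunsplit(("https", host, path, urlencode(query), ""))
```

The path is left in its original case on purpose, because paths can be case-sensitive on some servers. If your rule is lowercase paths everywhere, enforce it with redirects first and only then in the sitemap.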

Hreflang matters when you sell across regions or languages. You can include hreflang annotations in each URL entry or use a separate hreflang sitemap. Pick one method and be consistent. Match pairs cleanly across languages and regions with correct return links. See Google’s guide on International and multilingual sites.
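To make the "in each URL entry" option concrete, here is a small Python sketch that emits a urlset where every language version lists all of its alternates, itself included. The function name `build_hreflang_urlset` and the input shape are my own assumptions; the `xhtml:link` element with `rel="alternate"` is the format Google documents for hreflang in sitemaps.

```python
import xml.etree.ElementTree as ET

SM_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XHTML_NS = "http://www.w3.org/1999/xhtml"
ET.register_namespace("", SM_NS)          # sitemap elements without a prefix
ET.register_namespace("xhtml", XHTML_NS)  # hreflang links as xhtml:link

def build_hreflang_urlset(clusters):
    """clusters: list of dicts, each mapping hreflang code -> URL of one page."""
    urlset = ET.Element(f"{{{SM_NS}}}urlset")
    for alternates in clusters:
        for url in alternates.values():
            entry = ET.SubElement(urlset, f"{{{SM_NS}}}url")
            ET.SubElement(entry, f"{{{SM_NS}}}loc").text = url
            # Each version lists every version, itself included (return links).
            for lang, href in alternates.items():
                ET.SubElement(
                    entry,
                    f"{{{XHTML_NS}}}link",
                    {"rel": "alternate", "hreflang": lang, "href": href},
                )
    return ET.tostring(urlset, encoding="unicode")
```

Calling it with one cluster such as `{"en": "https://example.com/en/services/", "de": "https://example.com/de/services/"}` produces two url entries with two alternate links each.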

For reporting, I split sitemaps into:

- Services

- Industries or verticals

- Use cases or solutions

- Case studies and references

- Resources and articles

- Locations or regions

- Productized services, if relevant

A minimal example I share to show structure:

<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/services/paid-media/</loc>
    <lastmod>2025-08-15</lastmod>
  </url>
  <url>
    <loc>https://example.com/case-studies/saas-leadgen/</loc>
    <lastmod>2025-07-30</lastmod>
  </url>
</urlset>

And the parent:

<?xml version="1.0" encoding="UTF-8"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <loc>https://example.com/sitemaps/services.xml</loc>
    <lastmod>2025-08-15</lastmod>
  </sitemap>
  <sitemap>
    <loc>https://example.com/sitemaps/case-studies.xml</loc>
    <lastmod>2025-07-30</lastmod>
  </sitemap>
</sitemapindex>
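If you generate these files yourself, the child and parent structure can be sketched in a few lines of Python. This is an illustration under my own assumptions, not a finished generator: the function names, the `/sitemaps/` base URL, and the `name-1.xml` naming for oversized segments are all hypothetical. The one fixed point is the 50,000 URL cap per file.

```python
from xml.sax.saxutils import escape

MAX_URLS = 50_000  # per-file URL limit
NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def render_urlset(entries):
    """entries: list of (loc, lastmod); lastmod is 'YYYY-MM-DD' or None."""
    rows = []
    for loc, lastmod in entries:
        mod = f"<lastmod>{lastmod}</lastmod>" if lastmod else ""
        rows.append(f"  <url><loc>{escape(loc)}</loc>{mod}</url>")
    return (f'<?xml version="1.0" encoding="UTF-8"?>\n<urlset xmlns="{NS}">\n'
            + "\n".join(rows) + "\n</urlset>\n")

def build_sitemaps(segments, base="https://example.com/sitemaps/"):
    """segments: dict of segment name -> list of (loc, lastmod).
    Returns (files, index_xml); files maps filename -> XML text."""
    files, index_rows = {}, []
    for name, entries in segments.items():
        split = len(entries) > MAX_URLS
        for start in range(0, len(entries), MAX_URLS):
            chunk = entries[start:start + MAX_URLS]
            part = start // MAX_URLS + 1
            filename = f"{name}-{part}.xml" if split else f"{name}.xml"
            files[filename] = render_urlset(chunk)
            # ISO dates sort correctly as strings, so max() finds the newest.
            newest = max((m for _, m in chunk if m), default=None)
            mod = f"<lastmod>{newest}</lastmod>" if newest else ""
            index_rows.append(
                f"  <sitemap><loc>{escape(base + filename)}</loc>{mod}</sitemap>")
    index_xml = (f'<?xml version="1.0" encoding="UTF-8"?>\n'
                 f'<sitemapindex xmlns="{NS}">\n'
                 + "\n".join(index_rows) + "\n</sitemapindex>\n")
    return files, index_xml
```

Keeping one segment per business theme means the filenames double as report labels in Search Console.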

How to create a sitemap

Here’s a practical sequence I use for complex B2B sites that avoids noise and keeps the focus on revenue:

- Crawl and inventory: Use a site crawler to collect every reachable URL. Flag orphan pages, canonicals, redirect chains, 4xx and 5xx responses, and parameterized variants. Export lists so you know what truly exists vs what you want to keep.

- Define URL rules: Decide on canonical patterns. Choose trailing slash or not and stick to it. Remove tracking parameters from internal links. Map redirects to a clean target state. Agree on how pagination, filters, and archives should behave.

- Segment by business intent: Group by services, industries, resources, case studies, blog, locations, and productized services if you have them. This is where website sitemaps become a management tool. Each group becomes a child sitemap you can track in isolation.

- Generate the files:

  - WordPress: common SEO plugins handle XML generation and can segment by post type or taxonomy - for example, Google XML Sitemaps

  - Shopify: the platform generates one automatically

  - Webflow: built-in setting to create a sitemap

  - Custom stack: write a script that pulls canonical 200 URLs from your database or CMS and emits static XML on deploy, or use a generator like XML-Sitemaps.com

  Ensure files update when you publish or edit content. Automate it so there’s no manual step to miss during a busy launch.

- Quality gates: Before you ship, validate that each URL meets these checks: 200 OK, indexable, canonical to itself, has a meaningful lastmod, and is not blocked by robots.txt. Sample a few entries from each child sitemap to confirm titles and content match your intent.

- Placement and access: Host your parent index at a predictable path like https://example.com/sitemap.xml and place child maps under a clear folder such as /sitemaps/. Add the full URL of the parent in robots.txt like this:

  Sitemap: https://example.com/sitemap.xml

- Multilingual and hreflang: If you operate across countries or languages, include hreflang in your sitemap entries or maintain a dedicated hreflang sitemap. Check that every language version references the others and that return links match. See Google’s guidance on International and multilingual sites.
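The return-link check in the last step is easy to get wrong by eye, so I like a tiny script for it. Here is a sketch in Python, assuming you have already extracted the hreflang annotations into a dictionary. That input shape and the function name `find_missing_return_links` are hypothetical.

```python
def find_missing_return_links(annotations):
    """annotations: dict of page URL -> dict of hreflang code -> alternate URL.
    Returns sorted (source, target) pairs where target does not link back."""
    missing = []
    for source, alternates in annotations.items():
        for target in alternates.values():
            if target == source:
                continue  # a self-reference needs no return link
            back_links = annotations.get(target, {})
            if source not in back_links.values():
                missing.append((source, target))
    return sorted(missing)
```

An empty result means every pair is reciprocal. Any pair it returns is a page whose alternate is either missing from the sitemap or does not point back.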

Pages to exclude

Keep noise out. Thank-you pages, internal search results, filter or facet URLs, thin tag or author archives, staging or test paths, duplicate URLs with different parameters, and pagination variants that add no standalone value should be left out. If a page is noindex, it does not belong in website sitemaps. Learn more about handling duplicate content and avoiding broken links.
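The quality gates and the exclusion list can be combined into one filter. The Python sketch below assumes your crawl export already gives you status, canonical, and noindex per URL. The `Page` record, the `NOISE_FRAGMENTS` list, and the function name are hypothetical and should be adapted to your own URL scheme; `RobotFileParser` is the standard-library robots.txt parser.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

# Hypothetical path fragments for noise pages; adjust to your own URL scheme.
NOISE_FRAGMENTS = ("/thank-you", "/search", "/tag/", "/author/", "/staging/")

@dataclass
class Page:
    url: str
    status: int
    canonical: str
    noindex: bool = False

def rejection_reasons(page: Page, robots: RobotFileParser) -> list[str]:
    """Return the reasons a page should stay out of the sitemap (empty = keep)."""
    reasons = []
    parts = urlsplit(page.url)
    if page.status != 200:
        reasons.append(f"status {page.status}")
    if page.noindex:
        reasons.append("noindex")
    if page.canonical != page.url:
        reasons.append("canonical points elsewhere")
    if parts.query:
        reasons.append("parameterized URL")
    if any(fragment in parts.path for fragment in NOISE_FRAGMENTS):
        reasons.append("noise path")
    if not robots.can_fetch("*", page.url):
        reasons.append("blocked by robots.txt")
    return reasons
```

Returning reasons instead of a plain yes or no makes the output usable as a fix list for whoever owns the page.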

Governance that keeps it tidy

- Name an owner in marketing ops or SEO who maintains the files

- Review counts after every major publish or redesign

- Log changes in your release notes so teams know what moved

- Report monthly on coverage and time to index for key segments

Submit a sitemap to Google

Google can find your sitemap on its own, but submission gives you control and reporting. Here’s the short path I follow:

- Open Google Search Console and pick your property

- Go to Sitemaps

- Enter the URL of your parent index, for example sitemap.xml

- Submit

- If you use subdomains, submit a sitemap for each verified property

Do the same in Bing Webmaster Tools. Include the parent URL in robots.txt, and make sure the server returns a 200 status with the correct XML content type. Ping endpoints used to be a common extra nudge, but Google has retired its sitemap ping endpoint, so Search Console plus robots.txt is enough. Bing has likewise moved away from anonymous pings, so submit through Bing Webmaster Tools instead.
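A quick way to confirm the robots.txt side is to parse the file and look at its Sitemap lines. This Python sketch uses the standard-library `RobotFileParser` (its `site_maps()` method needs Python 3.8 or later). The helper names are my own, and the robots.txt text is passed in as a string so you can fetch it however you like.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

def sitemap_lines(robots_txt: str) -> list[str]:
    """Return the URLs declared on Sitemap: lines in a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when absent

def relative_sitemap_lines(robots_txt: str) -> list[str]:
    """Return Sitemap values that are not full URLs (scheme plus host)."""
    flagged = []
    for value in sitemap_lines(robots_txt):
        parts = urlsplit(value)
        if not parts.scheme or not parts.netloc:
            flagged.append(value)
    return flagged
```

An empty list from `sitemap_lines` means the parent index is not referenced at all. Anything returned by `relative_sitemap_lines` should be rewritten as a full URL.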

What to expect

Discovery can be quick, but indexing depends on site authority, internal linking, content quality, and server health. New service pages might index in hours or days. Keep internal links strong from your menu, hubs, and recent posts to help crawlers find context. Indexing is not guaranteed, but accurate sitemaps improve the odds that the right pages are seen sooner.

Submit the index first, then let search engines discover each child sitemap listed inside. That keeps the setup clean and easy to maintain.

Checking sitemaps for errors

Healthy website sitemaps are boring. Problems show up fast if something slips. I validate with an XML validator or a crawler, then use Google Search Console for the rest.

What to check:

- Server returns 200 OK and the file is reachable

- XML encoding is valid and URLs are absolute HTTPS

- No redirects, 4xx, or 5xx entries in any child file

- No non-canonical or parameterized URLs

- No pages blocked by robots.txt or meta noindex

- Hreflang pairs match across languages and return links are correct

- lastmod reflects a real content change

- You stay under 50,000 URLs or 50 MB per file and use gzip when needed

- No duplicate URLs across different child sitemaps
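Several of these checks need no network access at all and can run on the files themselves before deploy. Here is a rough Python sketch covering the size limits, absolute HTTPS URLs, parameterized URLs, and duplicates across child files. Function names and message wording are hypothetical. Status codes, canonicals, and noindex still need a crawler.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50 MB, measured uncompressed

def sitemap_locs(xml_bytes: bytes) -> list[str]:
    """Return every <loc> value in a child sitemap."""
    root = ET.fromstring(xml_bytes)  # raises ET.ParseError on malformed XML
    return [(el.text or "").strip() for el in root.findall("sm:url/sm:loc", NS)]

def check_sitemap(xml_bytes: bytes) -> list[str]:
    """Return a list of problems found in one child sitemap."""
    problems = []
    if len(xml_bytes) > MAX_BYTES:
        problems.append("file exceeds 50 MB uncompressed")
    locs = sitemap_locs(xml_bytes)
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the 50,000 limit")
    for loc in locs:
        parts = urlsplit(loc)
        if parts.scheme != "https" or not parts.netloc:
            problems.append(f"not absolute HTTPS: {loc}")
        if parts.query:
            problems.append(f"parameterized: {loc}")
    return problems

def duplicates_across(children: dict[str, bytes]) -> dict[str, list[str]]:
    """Map each URL listed in more than one child file to those filenames."""
    listed = {}
    for filename, xml_bytes in children.items():
        for loc in set(sitemap_locs(xml_bytes)):
            listed.setdefault(loc, []).append(filename)
    return {loc: names for loc, names in listed.items() if len(names) > 1}
```

Running this in the deploy pipeline turns most sitemap mistakes into a failed build instead of a Search Console surprise a week later.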

Use coverage reporting

In Search Console, review the Sitemaps and Page Indexing reports. Compare Submitted vs Indexed counts by segment. Watch for "Indexed, not submitted" URLs that should live in a sitemap. Watch for "Submitted, not indexed" URLs and prioritize fixes on bottom-of-funnel pages first.

Monitoring cadence

Run weekly checks for active publishers or large teams, and monthly checks for stable sites. Set alerts for "Couldn’t fetch" errors or sudden drops in indexed counts. Assign a named owner so this never becomes nobody’s job.

Executive rollup that actually helps

For each child sitemap tied to revenue, I track four numbers: URLs submitted, URLs indexed, time to index for new pages, and clicks to those pages from search. Share deltas month over month. If your services sitemap shows slow indexing, that gets top priority.
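Those four numbers are simple enough to compute from an export. The Python sketch below assumes you keep, per page, a publish date and the date it was first seen as indexed, plus a clicks total per segment. That data shape and the function names are my own. I use the median for time to index so one slow page does not skew the number.

```python
from datetime import date
from statistics import median

def segment_rollup(pages, clicks):
    """pages: list of dicts with 'published' (date) and 'indexed' (date or None).
    clicks: search clicks to this segment for the reporting period."""
    submitted = len(pages)
    indexed = [p for p in pages if p["indexed"] is not None]
    days = [(p["indexed"] - p["published"]).days for p in indexed]
    return {
        "submitted": submitted,
        "indexed": len(indexed),
        "coverage": round(len(indexed) / submitted, 2) if submitted else 0.0,
        "median_days_to_index": median(days) if days else None,
        "clicks": clicks,
    }

def month_over_month(current, previous):
    """Deltas for the count-style metrics between two rollups."""
    return {key: current[key] - previous[key]
            for key in ("submitted", "indexed", "clicks")}
```

For example, three services pages with two indexed after 2 and 4 days give a coverage of 0.67 and a median of 3 days to index.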

Information architecture

Website sitemaps work best when they mirror a clear information architecture. I start by mapping your ecosystem of stakeholders, service lines, industries, and jobs customers need to get done. It looks like a planning exercise - and it is - but it pays dividends in crawling and conversion.

A practical mini-workflow:

- Content audit: list every page and asset, mark owners, and note performance

- Card sorting and tree testing: group topics the way buyers think

- Cluster by topic and service: build hub pages that link to focused spokes

- Set depth limits: keep key pages within three clicks from your main hubs

- Internal linking: ensure every important page is reachable from hubs and body copy, not just the menu

- Routes by buyer stage: education, evaluation, proof, contact
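The depth limit is the one item in this workflow you can measure directly. Here is a minimal Python sketch that runs a breadth-first search over an internal link graph, assuming you have exported links from a crawl as a dictionary of page to linked pages. The function names and the input shape are hypothetical; the default limit of three clicks comes from the rule above.

```python
from collections import deque

def click_depths(links, hubs):
    """links: dict of URL -> list of URLs it links to. hubs: starting URLs.
    Returns the minimum number of clicks from any hub to each reachable page."""
    depths = {hub: 0 for hub in hubs}
    queue = deque(hubs)
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def too_deep(links, hubs, key_pages, limit=3):
    """Return key pages deeper than the limit; unreachable pages map to None."""
    depths = click_depths(links, hubs)
    return {page: depths.get(page) for page in key_pages
            if depths.get(page) is None or depths[page] > limit}
```

Pages that come back as None are not linked from any hub at all, which is usually a bigger problem than being one click too deep.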

Think of your portfolio as both unbundled and bundled. Assets live in clear groups by topic or format, then recombine into guided paths for decision makers. Your sitemap segments should reflect that. One child file for education content, one for case studies, one for primary service pages. Now crawling lines up with the way you sell.

Connect the stack from engagement to outcome. Awareness content brings visitors in. Evaluation content helps them compare. Proof in the form of case studies reduces friction. Service pages and contact convert. Each layer needs representation in your website sitemaps and in your internal links. When that alignment clicks, reporting stops being messy and starts being useful.

Ownership matters here too. Define who updates the IA, who approves new hubs, and how changes flow into your sitemap generation. Add a simple SLA for publishing sitemaps on release days so search engines see the new structure right away. For additional context on the relationship between IA and sitemaps, Nielsen Norman Group has a solid primer.

FAQs

Do I need a sitemap for a small site?

If you have fewer than 500 pages and strong internal links, search engines can usually find everything. Many small sites still use website sitemaps because the reporting helps catch issues early.

How often should I update a sitemap?

Update it whenever content is published or changed. If your CMS auto-generates sitemaps, that happens on its own. For custom stacks, refresh on deploy.

Do priority and changefreq matter?

Most engines ignore them. Focus on accurate URLs and lastmod.

Can I put hreflang in sitemaps?

Yes. You can include hreflang in each URL entry or use a separate hreflang sitemap. Keep pairs consistent across languages and regions.

Should paginated pages be in the sitemap?

Usually not, unless they carry unique value and you want them indexed. For most B2B sites, put the main listing page in website sitemaps and make sure key items are linked directly.

Can subdomains have separate sitemaps?

Yes. Each subdomain can host its own sitemap and should be verified as a separate property in Search Console.

Sitemap vs robots.txt: what’s the difference?

A sitemap lists URLs you want crawled and indexed. robots.txt tells crawlers what they should or should not request. You can reference your sitemap location inside robots.txt.

What are the limits and how do sitemap indexes help?

Each sitemap caps at 50,000 URLs or 50 MB uncompressed. A sitemap index lists multiple child sitemaps so you can split by content type or business theme.

How do I find my sitemap?

Check robots.txt for a Sitemap line, try /sitemap.xml or /sitemap_index.xml, or look in Google Search Console under Sitemaps.

Quick-win tips

- Segment money pages so you can monitor indexation where revenue lives

- Keep lastmod truthful so search engines learn your update rhythm

- Fix coverage issues first on bottom-of-funnel content like services and case studies

A clean structure makes growth calmer. When information architecture, internal links, and website sitemaps work together, crawling gets faster, reporting gets clearer, and the pipeline becomes more predictable.
