If you run a B2B service business, you care about clean pipelines and reliable growth. That is exactly where crawl budget becomes a sneaky lever. It is not flashy and it is easy to overlook. Yet when search engines spend time on the right URLs at the right cadence, new service pages, case studies, and thought leadership pieces get crawled and indexed faster. In my experience, that often leads to more visibility and higher-quality lead flow, which can contribute to lower customer acquisition costs.

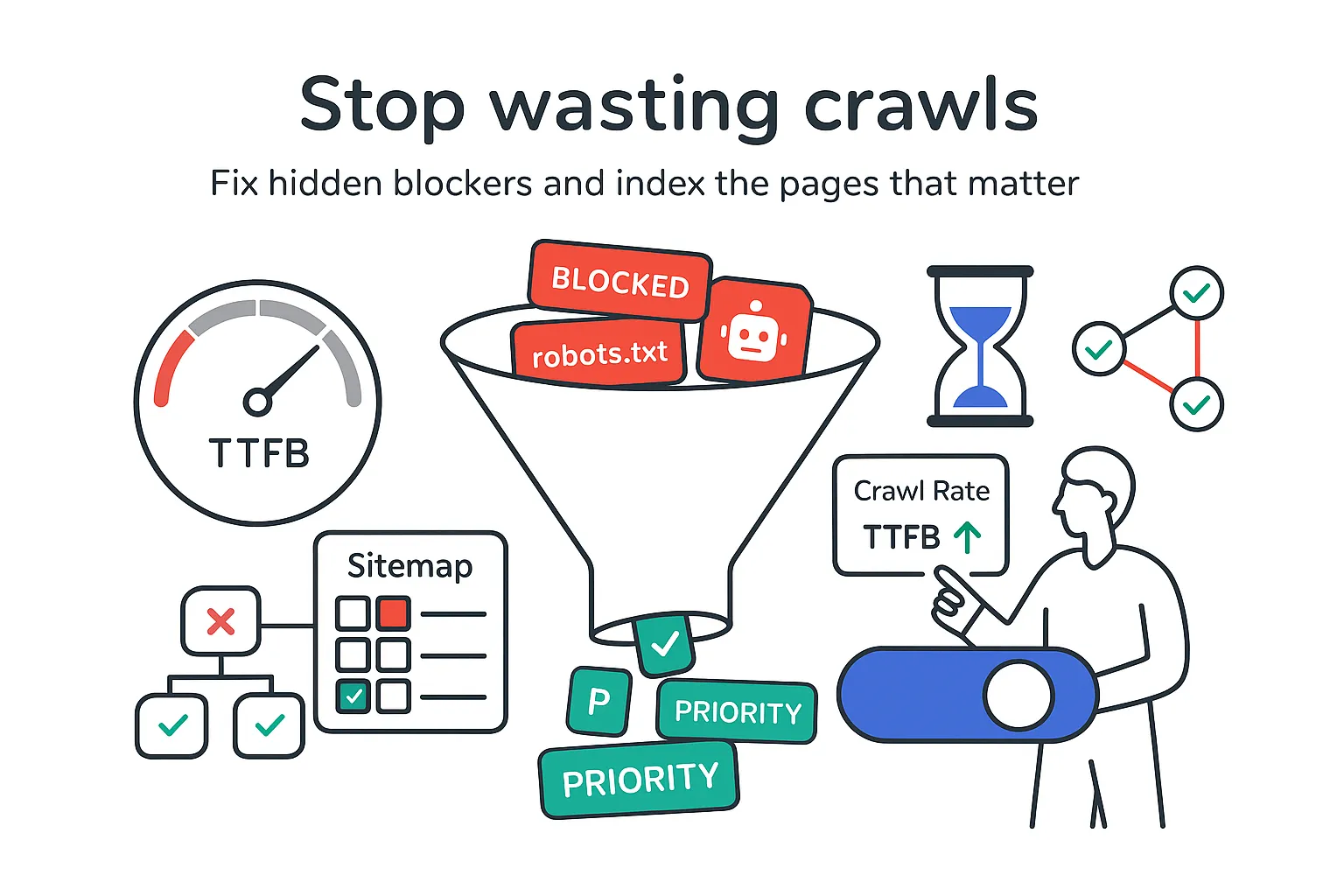

crawl budget quick wins

I look for fixes that move the needle fast. I treat this like a short sprint that clears the biggest clogs first, then buys time for deeper work.

- Verify robots.txt and meta robots. Make sure you are not accidentally blocking important paths. Watch for tricky patterns like Disallow: /services, which blocks every URL whose path starts with /services, or X-Robots-Tag: noindex in HTTP headers on PDFs or other non-HTML files. Keep robots.txt lean, allow primary paths and assets needed for rendering (CSS/JS), and block only true crawl traps like endless filter URLs. See Google’s guidance on the robots.txt file.

- Submit a fresh XML sitemap. Include only indexable 200 URLs. No 3xx, 4xx, or 5xx. If the site is large, split sitemaps by section and reference them in a sitemap index file. Use accurate <lastmod> dates and avoid spammy updates. This guides discovery and sets expectations. Reference: Google’s overview on sitemaps.

- Fix 5xx and 4xx errors, plus long redirect chains. Start with templates that influence revenue: services, pricing, case studies, location pages, and the top blog posts that convert. Keep redirects to a single hop and update internal links to point to the final URL. More on redirects and how to find and fix broken links.

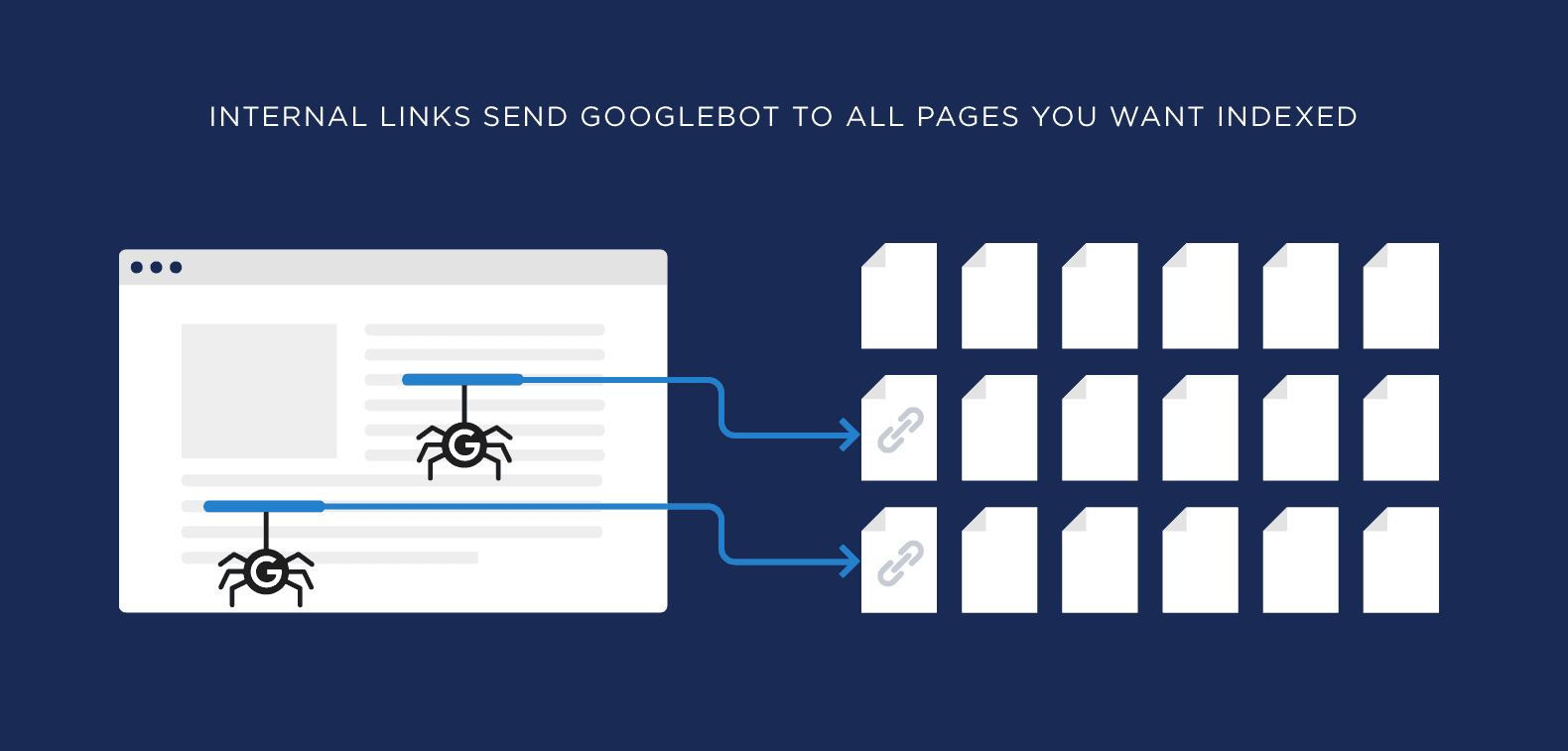

- Internally link orphan money pages. If a page generates leads but sits alone, add links from your homepage, navigation, relevant hubs, and 2-3 strong articles. Crawlers follow links. If it matters, link to it with descriptive anchors. Learn more about internal linking.

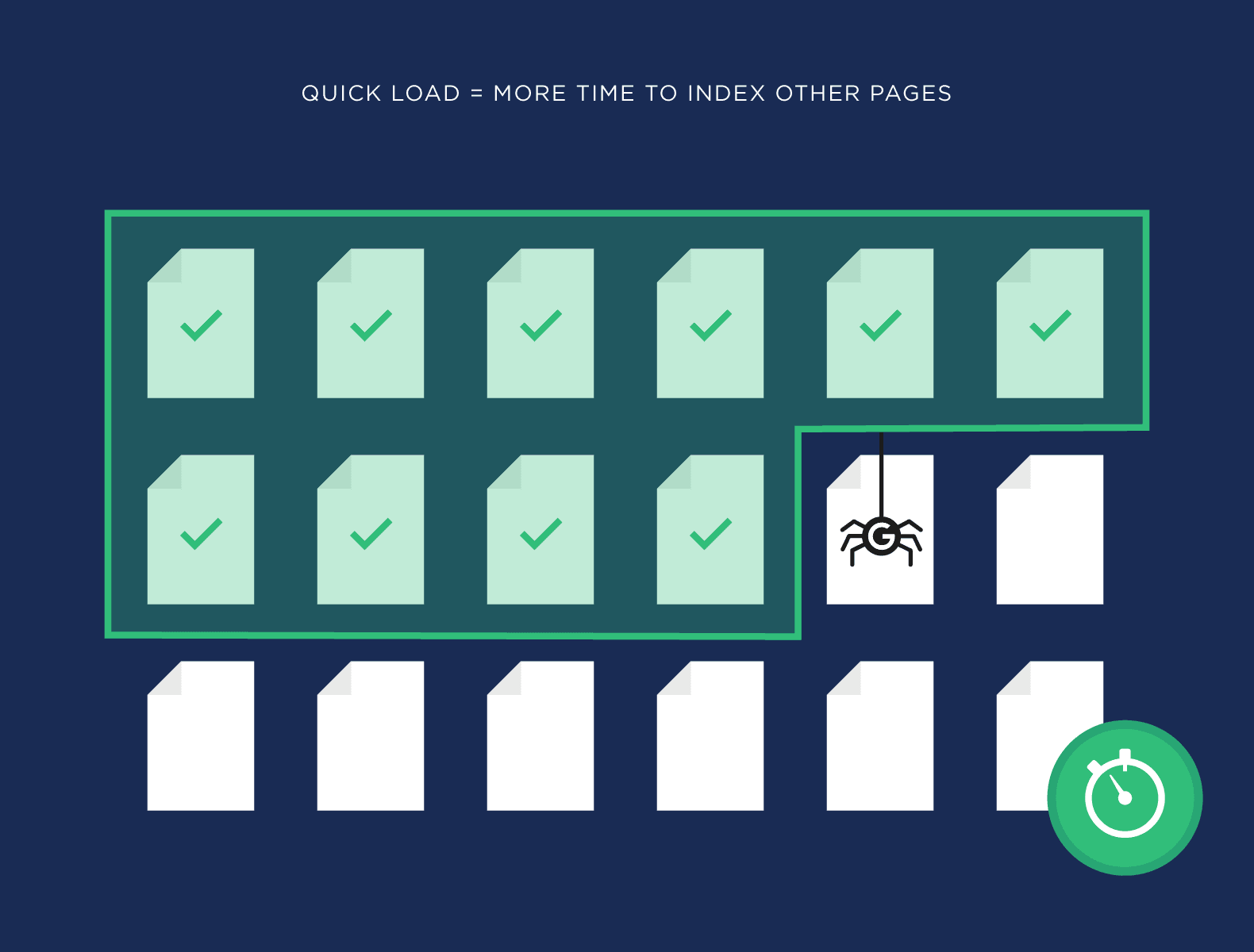

- Speed up TTFB. Reduce server latency with sensible caching and efficient database queries. Compress assets and serve static resources efficiently. Lower latency means bots can crawl more URLs per visit and are less likely to back off. See guidance on page speed and minimizing render-blocking resources. A CDN can help.

- Send honest freshness signals. Keep Last-Modified and ETag headers accurate on key URLs. When content changes, reflect it. Stable 200s with honest dates help drive consistent recrawls; unchanged content can legitimately return a 304 response code.

- Request indexing only for top-priority launches. Use on-demand requests sparingly for new or materially updated pages. These requests are rate-limited and do not guarantee indexing. Submit them through Google Search Console (GSC) and set expectations accordingly.
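The robots.txt check from the first quick win is easy to script. Here is a minimal sketch using Python's standard-library `urllib.robotparser`. The rules and paths are made-up examples, and `blocked_paths` is a hypothetical helper name. Python's parser is simpler than Googlebot's (plain prefix matching, first matching rule wins, no wildcard support), so treat the result as a first-pass sanity check rather than a faithful simulation.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt, paths, user_agent="Googlebot"):
    """Return the paths that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [p for p in paths if not parser.can_fetch(user_agent, p)]


# Example rules (assumed): the first Disallow is the accidental one.
ROBOTS = """\
User-agent: *
Disallow: /services
Disallow: /search
"""

# Hypothetical priority pages that must stay crawlable.
PRIORITY = ["/services/consulting", "/pricing", "/case-studies/acme"]

blocked = blocked_paths(ROBOTS, PRIORITY)
print(blocked)
```

Any path that shows up in `blocked` deserves a manual look before the next deploy.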

What to expect on timing

- Days: indexing improvements for high-authority pages, updated sitemaps, and pages that gained solid internal links.

- 1-3 weeks: broader recrawls across sections, especially if you fixed latency or stability issues.

- Ongoing: once crawlers learn your site is reliable and fresh, recrawl frequency for priority content tends to rise.

what is crawl budget

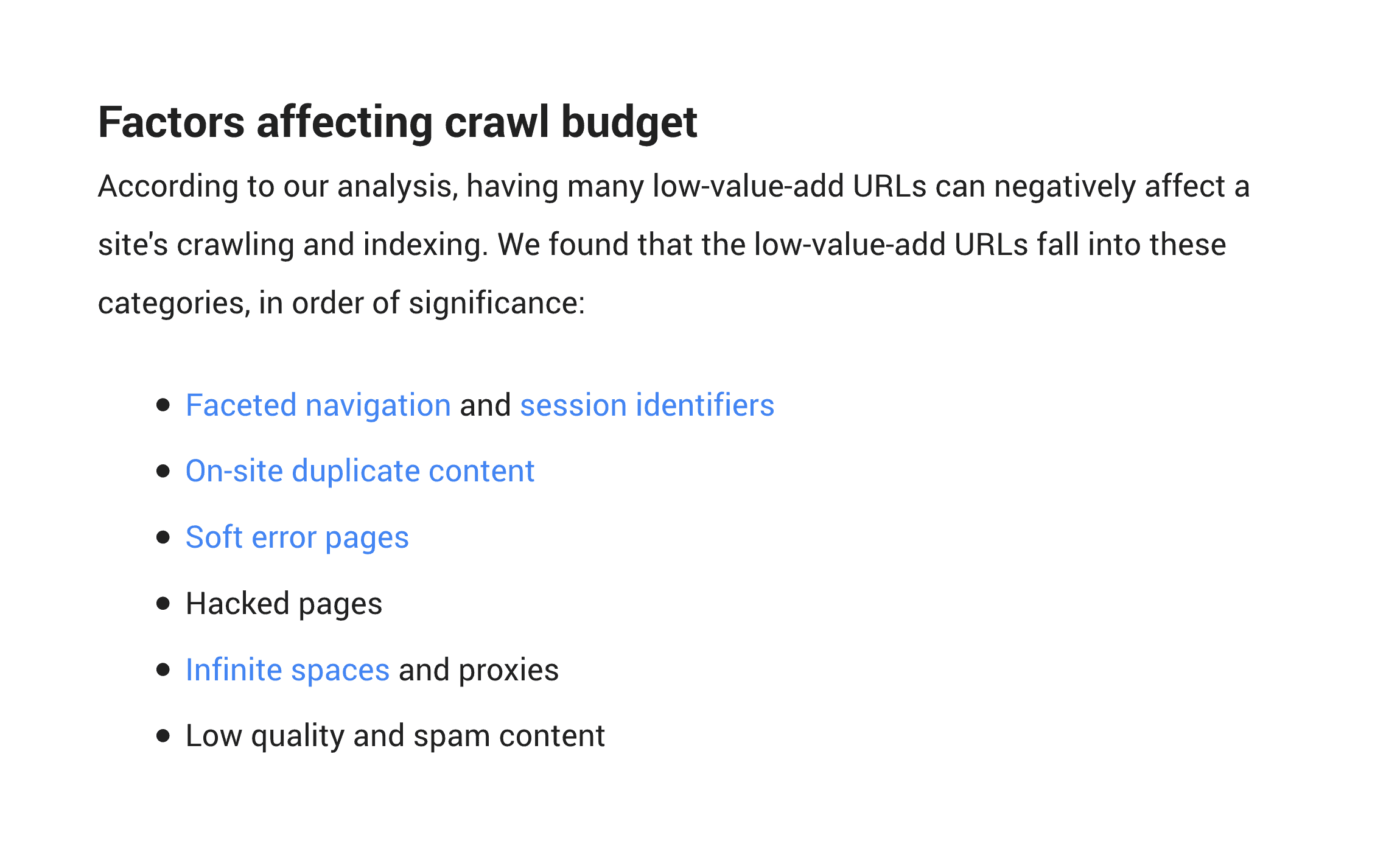

Crawl budget is the amount of crawling a search engine allocates to your site within a window of time. Think of it as attention plus capacity. Google states that crawl budget is shaped by two factors, crawl rate limit and crawl demand, and that the rate limit depends on your server’s health.

- Crawl rate limit (capacity): how fast and how much a crawler can hit your servers without causing strain. If your site times out or throws errors, the crawler slows down.

- Crawl demand (interest): how much the crawler wants to fetch your URLs. Popular, well-linked, and frequently updated pages get more attention.

A quick clarity check on stages:

- Discovery: the crawler finds a URL via links, sitemaps, or prior knowledge.

- Crawl: the crawler fetches the page and critical resources. See how Googlebot operates, including processing only the first 15 MB of each page.

- Indexation: the system decides whether to store and rank the page. Crawling is necessary but does not guarantee inclusion.

Crawl budget changes over time with server health, site stability, content updates, internal linking, backlinks, duplication control, and perceived usefulness. If your site keeps returning fast, consistent 200s on pages users care about, your effective crawl tends to trend up. If the site is slow or chaotic, it can trend down.

how Google calculates crawl budget

Two levers steer crawler behavior: how much it can crawl without hurting your site and how much it wants to crawl because your content seems valuable.

Crawl rate is driven by:

- Server health and latency: faster TTFB and reliable responses mean higher crawl throughput. Consider long-life caching for static assets and avoid unnecessary no-cache patterns.

- Response codes: a clean pattern of 200s builds trust. Timeouts, 5xx spikes, and long redirect chains tell the crawler to slow down. Avoid excessive temporary 302 usage.

- Throttling: if Google senses strain, it backs off and tests again later.

Crawl demand is influenced by:

- Popularity: URLs with strong internal links and credible backlinks earn more visits.

- Freshness and staleness: pages that change more often get recrawled more; stale pages settle into lower frequency.

- Change signals: accurate Last-Modified headers, updating sitemaps, and clear internal link structures help.

Concrete signals I can shape:

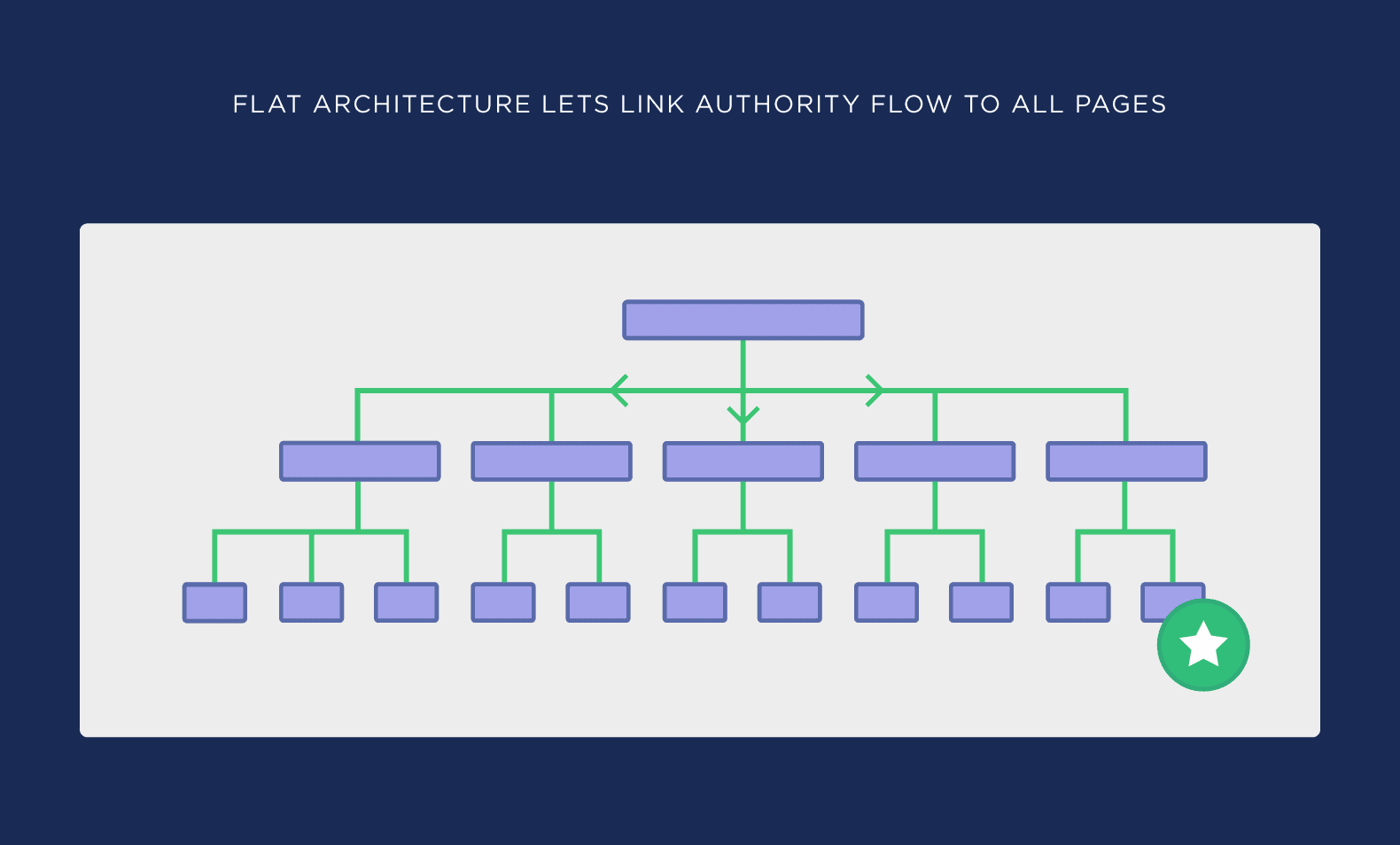

- Internal linking and depth: pages closer to the homepage or included in main navigation are crawled more often. Avoid internal nofollow; it can hinder discovery. See website architecture best practices.

- Canonicalization: clear canonical tags reduce wasted crawling on near duplicates and signal the preferred URL.

- Sitemaps: clean entries that reflect reality help crawlers see what matters most.

- Stable 200s: minimize 3xx churn and 4xx noise around important sections; remove soft 404s and unstable URLs.

In short, steady performance and clear signals boost crawl capacity, while real demand cues raise crawl frequency on the pages that count. For large sites, see Google’s documentation on managing crawl budget.
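Internal link depth is one of the signals above that I can measure directly. The sketch below runs a breadth-first search over an internal link graph to get each page's click depth from the homepage and to spot orphans. The link graph, the `click_depths` helper, and the depth threshold of 3 are all illustrative assumptions; in practice the graph would come from a site crawl export.

```python
from collections import deque


def click_depths(links, start="/"):
    """Breadth-first search: minimum number of clicks from start to each page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
SITE_LINKS = {
    "/": ["/services", "/blog"],
    "/services": ["/services/audit"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/case-studies/acme"],
    "/case-studies/acme": ["/case-studies/acme/results"],
    "/pricing": [],  # nothing links here, so it is an orphan
}

MAX_DEPTH = 3  # assumed threshold for "too deep"

depths = click_depths(SITE_LINKS)
orphans = sorted(set(SITE_LINKS) - set(depths))
too_deep = sorted(p for p, d in depths.items() if d > MAX_DEPTH)
print(orphans, too_deep)
```

Pages in `orphans` need at least one internal link; pages in `too_deep` are candidates for links from hubs or navigation.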

crawl budget importance

Does crawl budget matter for B2B service sites? Yes - and sometimes not as much as it seems. Google has publicly noted that crawl budget is not a concern for most small sites. If you have only a few hundred URLs, content quality, unique value, and internal linking usually come first. Still, even small sites benefit when bots stop wasting time on broken links, duplicates, and slow servers.

For larger B2B sites, national service networks, brands with heavy blogs, or parameterized URLs, crawl budget becomes a lever tied to revenue. Faster indexation of:

- New service pages

- Updated pricing or features

- Case studies and proof assets

- Thought leadership tied to current trends

can bring qualified traffic forward by days or weeks. When you sell to busy decision-makers, that timing shift matters. More of the right pages crawled, indexed, and refreshed tends to deliver steadier inbound demand at a lower marginal cost than simply adding paid spend.

When it is not the bottleneck:

- Small sites with thin or overlapping content

- Sites with heavy noindex usage or weak internal links

- Sites where most new pages add little distinct value

When it is a real lever:

- Thousands of URLs, heavy archives, or frequent updates

- Complex parameter rules and faceted navigation

- Multiple service areas and deep resource libraries

calculate crawl budget

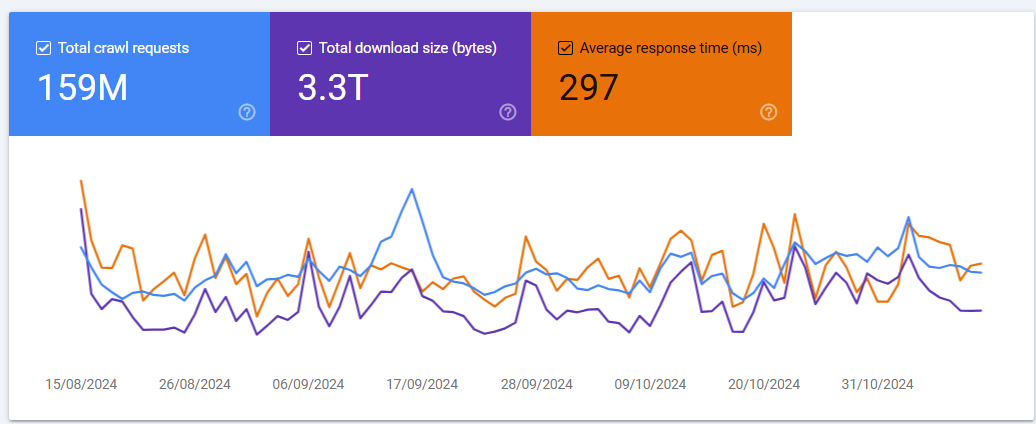

You will not see a single crawl budget number, but you can estimate it and track whether the useful portion is rising. Start with GSC’s Crawl Stats Report and your server logs. If you are new to GSC, here is an overview of Google Search Console.

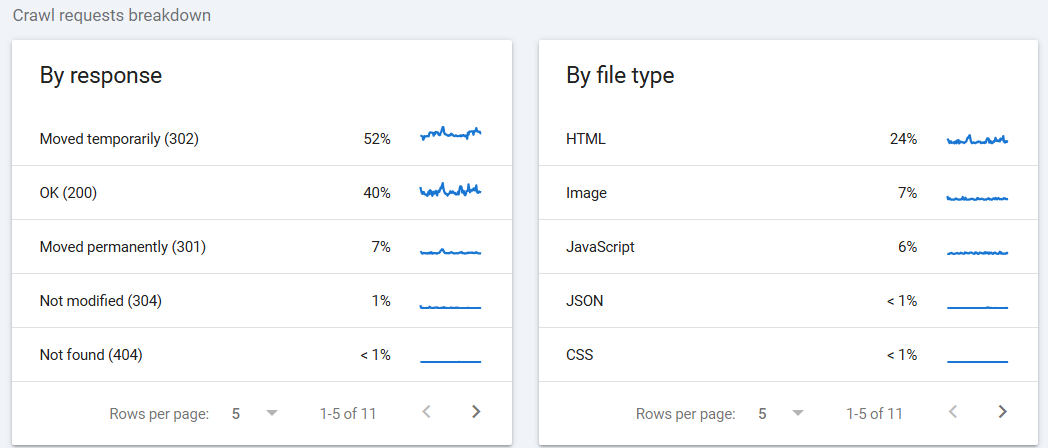

- Pull Crawl stats

Capture total crawl requests, average response time, and total download size over a recent period. Review breakdowns by response code, file type, and crawler type. Look for heavy crawling on 3xx, 4xx, 5xx, or static assets that do not need repeated fetches.

- Baseline daily activity

Divide crawl requests by days in the period for a rough daily average. Treat this as directional rather than exact.

- Analyze server logs

Gather logs for at least two weeks (longer for large sites). Filter for known crawler user agents (for example, Googlebot). Group by directory to see where crawling concentrates. Flag wasted requests on parameters, duplicates, soft 404s, and long redirect chains. Note average response time for key sections. A practical guide to log file data and a helpful tool like Semrush Log File Analyzer can speed this up.

- Estimate the effective portion hitting important sections

Build a URL inventory marking high-value pages: services, pricing, case studies, topic hubs, and conversion helpers. Calculate what share of crawler hits landed on those URLs within the log window.

- Track recrawl timing

Pick a sample of key pages, update content and headers, and watch how long it takes for recrawl, reindexation, and SERP snippets to refresh. This timing trend is gold.

- Measure useful crawl percent

Useful crawl percent = (Crawl requests to important pages ÷ Total crawl requests) × 100. Track weekly. The goal is for it to rise as you cut waste and reinforce signals to your best content.

- Baseline crawl latency

Monitor average response time and timeouts by section. Tie improvements in TTFB and error rates to increases in total URLs crawled per visit.

This gives you a living picture of crawl allocation, not just a snapshot.
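To make the useful crawl percent concrete, here is a rough sketch that computes it from access log lines. It assumes the common "combined" log format, identifies the bot by a user-agent substring only (user agents can be spoofed, so a production version should also verify the crawler's IP), and uses a hypothetical list of important path prefixes. The sample log lines are synthetic.

```python
import re
from collections import Counter

# Matches the request, status, and user agent in a combined-format log line.
# Only GET and HEAD requests are counted in this sketch.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical prefixes for high-value sections.
IMPORTANT_PREFIXES = ("/services", "/pricing", "/case-studies")


def useful_crawl_percent(lines, bot_token="Googlebot"):
    """Return (useful crawl percent, bot hits per top-level section)."""
    total = 0
    useful = 0
    by_section = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match or bot_token not in match["agent"]:
            continue
        total += 1
        path = match["path"].split("?", 1)[0]  # ignore query strings
        section = "/" + path.strip("/").split("/", 1)[0]
        by_section[section] += 1
        if path.startswith(IMPORTANT_PREFIXES):
            useful += 1
    percent = 100 * useful / total if total else 0.0
    return percent, by_section


BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE = [  # synthetic lines for illustration
    f'192.0.2.10 - - [10/Mar/2025:06:25:01 +0000] "GET /services/seo-audit HTTP/1.1" 200 5120 "-" "{BOT}"',
    f'192.0.2.10 - - [10/Mar/2025:06:25:04 +0000] "GET /blog?page=7&sort=asc HTTP/1.1" 200 2048 "-" "{BOT}"',
    f'192.0.2.10 - - [10/Mar/2025:06:25:09 +0000] "GET /pricing HTTP/1.1" 200 3300 "-" "{BOT}"',
    f'192.0.2.10 - - [10/Mar/2025:06:25:15 +0000] "GET /tag/misc HTTP/1.1" 404 512 "-" "{BOT}"',
    '203.0.113.9 - - [10/Mar/2025:06:26:00 +0000] "GET /services HTTP/1.1" 200 4100 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

percent, by_section = useful_crawl_percent(SAMPLE)
print(f"{percent:.1f}% useful", dict(by_section))
```

Run weekly over the same log window length so the trend is comparable from one period to the next.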

crawl budget optimization

Once I know where time is being spent, I run a focused playbook - reducing waste and increasing visibility of conversion pages.

- Internal linking

Promote priority pages from the main nav, footer, hubs, and within relevant articles. Keep important pages within three clicks of the homepage. Use descriptive anchor text aligned to search intent. Avoid orphaning and avoid internal nofollow on important links. Read more on internal links.

- XML sitemaps

Keep them clean and fresh: only indexable 200s. Split by section when big (for example, services, case studies, blog, resources). Reference them via a sitemap index. Regenerate on publish/update and ensure <lastmod> reflects real changes. See Google’s sitemaps guidance.

- robots.txt

Block infinite faceted/filtered URLs that create endless combinations. Allow critical paths and assets needed for rendering. Keep the file simple with comments on changes. Do not use robots.txt to hide duplicates you want deindexed - use canonical or noindex for that. Reference the robots.txt file docs.

- URL parameters

Consolidate where possible. Use canonical tags to the preferred URL. Keep parameter order consistent. Avoid session IDs in URLs. If a parameter creates true duplicates, prevent crawling at scale while preserving index control via canonical or noindex.

- Duplicates and canonicals

Remove thin tag and archive pages that add little search value. Canonicalize near-duplicates to a single strong page. Use self-referencing canonicals on unique pages. Ensure hreflang (if used) aligns with canonicals. Learn about duplicate content and implementing canonical tags.

- Status hygiene

Fix 4xx and 5xx at the template level first, then page level. Avoid redirect chains; use one clean 301 hop. Update internal links to the final destination. Use 410 for permanently removed content to reduce unnecessary recrawls. More on redirect chains.

- Speed and TTFB

Tune server performance and caching. Compress and right-size assets. Serve modern image formats when practical. Aim for low and consistent TTFB, especially on money sections. Check Core Web Vitals and avoid heavy render-blocking resources.

- Freshness signals

Update high-value pages with new proof, stats, and examples. Keep Last-Modified and ETag honest. Use 304 Not Modified for content that truly has not changed to save bandwidth and crawl cycles.

- Pagination

Avoid crawl traps on endless lists. Provide a view all for small categories when practical, or a clear canonical to the main listing. Make early pages of a series fully crawlable with standard links; avoid relying on infinite scroll alone. See Google’s recommendations for pagination.

- External signals

A small number of credible links to service hubs or major guides can raise crawl demand for those sections. Promote pages that actually help buyers decide.
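The URL parameter advice in this playbook (consistent parameter order, no session IDs) can be enforced wherever internal links and canonical URLs are generated. A minimal sketch, assuming the parameter names in `IGNORED_PARAMS` are the session-style parameters on your site; both that list and the `normalize_url` helper are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed names of parameters that never change page content.
IGNORED_PARAMS = {"sessionid", "sid"}


def normalize_url(url):
    """Drop ignored parameters, sort the rest, lowercase the host, strip the fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    ]
    kept.sort()  # one stable parameter order per URL
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


raw = "https://Example.com/services?sort=price&sessionid=abc123&region=emea"
print(normalize_url(raw))
```

Two URLs that normalize to the same string are candidates for a shared canonical target.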

data sources and diagnostics

To understand crawl allocation, I lean on three types of evidence:

- Crawl statistics

Trends in crawl requests, response codes, host status, and indexing outcomes help me see whether fixes are moving the right needles. Start with the GSC Crawl Stats Report.

- Site-level crawls

Whole-site scans reveal broken links, redirect chains, duplicate content, misplaced noindex, and internal link depth to priority pages. Run a quick site audit to surface issues fast.

- Log analysis and performance checks

Server logs show what bots actually hit by directory, status code, and response time - the closest thing to the truth. Lab and field performance data help tie latency and stability improvements to crawl throughput. See also the page indexing report for index coverage insights.

I keep a simple workflow: scan the site, review crawl stats, inspect logs, fix issues, then recheck the same sources. Repeat on a cadence.
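One check from the site-level crawl, finding redirect chains and loops, is simple enough to sketch by hand. The example assumes you have exported a mapping of redirecting URLs to their immediate targets; the mapping, the `resolve_redirects` helper, and the hop limit are illustrative, not the output of any particular tool.

```python
def resolve_redirects(redirects, max_hops=10):
    """Map each redirecting URL to (final_url, hops); final_url is None for loops."""
    resolved = {}
    for source in redirects:
        seen = {source}
        current = source
        hops = 0
        while current in redirects and hops < max_hops:
            current = redirects[current]
            hops += 1
            if current in seen:  # we came back to a URL already visited
                current = None
                break
            seen.add(current)
        resolved[source] = (current, hops)
    return resolved


# Hypothetical crawl export: URL -> URL it redirects to.
REDIRECTS = {
    "/old-services": "/services-2022",
    "/services-2022": "/services",
    "/a": "/b",
    "/b": "/a",
}

resolved = resolve_redirects(REDIRECTS)
chains = {s: r for s, r in resolved.items() if r[0] is not None and r[1] > 1}
loops = sorted(s for s, (final, _) in resolved.items() if final is None)
print(chains, loops)
```

Each entry in `chains` can be collapsed to a single hop by pointing the source straight at its final URL and updating internal links to match.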

working view to track progress

I maintain a lightweight tracking view so stakeholders can align on priorities and see trend lines without jargon. At minimum, I include:

- URL inventory by section

Fields: URL, section, template type, status code, indexable, canonical target, internal links in, priority tier, last updated.

- Crawl stats summary

Weekly totals for crawl requests, average response time, download size, and breakdowns by response code and file type. Add notes on releases or outages.

- Log-derived crawl distribution

Bot hits by directory and status code, with average response time per section. Flag spikes and dips.

- Priority issues

Severity, pages affected, first-seen date, owner, and fix ETA. Examples: duplicate canonicals on service pages, 5xx spikes, sitemaps including redirects.

- KPIs

Useful crawl percent, average recrawl time for key pages, percent of indexable 200s in sitemaps, share of bot hits to money sections, and 4xx/5xx on priority URLs.

This keeps everyone honest and trims rework while giving leadership a clear narrative.
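Two of these KPIs fall straight out of the URL inventory. The sketch below models a trimmed-down inventory row and computes the percent of indexable 200s in sitemaps plus the list of priority URLs returning 4xx/5xx. The `in_sitemap` field, the tier convention (1 is highest), and the sample rows are assumptions added for illustration.

```python
from dataclasses import dataclass


@dataclass
class InventoryRow:
    url: str
    section: str
    status_code: int
    indexable: bool
    in_sitemap: bool    # assumed extra field, not in the list above
    priority_tier: int  # assumed convention: 1 = highest priority


def sitemap_health_percent(rows):
    """Share of sitemap-listed URLs that are indexable and return 200."""
    listed = [r for r in rows if r.in_sitemap]
    if not listed:
        return 0.0
    clean = sum(1 for r in listed if r.status_code == 200 and r.indexable)
    return 100 * clean / len(listed)


def priority_errors(rows, max_tier=1):
    """Priority URLs currently returning a 4xx or 5xx status."""
    return [r.url for r in rows if r.priority_tier <= max_tier and r.status_code >= 400]


ROWS = [  # made-up inventory
    InventoryRow("/services", "services", 200, True, True, 1),
    InventoryRow("/pricing", "pricing", 200, True, True, 1),
    InventoryRow("/old-pricing", "pricing", 301, False, True, 2),
    InventoryRow("/case-studies/acme", "case-studies", 500, True, True, 1),
    InventoryRow("/blog/tag/misc", "blog", 200, False, False, 3),
]

health = sitemap_health_percent(ROWS)
errors = priority_errors(ROWS)
print(health, errors)
```

A sitemap health figure below 100 means the sitemap lists redirects, errors, or non-indexable pages that should be removed.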

FAQs

Q: Does crawl budget matter for small B2B sites?

A: Usually content quality, uniqueness, and internal linking matter more until the site grows. Still, fix obvious waste like broken links, duplicate pages, and slow servers - those help users and make crawling more efficient.

Q: How long until indexation improves after fixes?

A: Often days for high-authority pages and fresh sitemaps. Broader changes can take 1-3 weeks. Server health and site stability can speed things up; severe errors or slow TTFB can delay recrawls.

Q: Can I see my crawl budget as a single number?

A: No. Use crawl trends and server logs to estimate how much crawler attention reaches your important sections and whether that share is improving. Start with the GSC Crawl Stats report.

Q: Do XML sitemaps increase crawl budget?

A: They guide discovery and hint at priority, but they do not create more budget. Keep them clean, accurate, and aligned with reality.

Q: Are backlinks a crawl budget signal?

A: Yes, indirectly. Quality links can raise crawl demand for linked sections, lifting crawl frequency and recrawl speed. Learn more about backlinks.

Q: Should I block parameter URLs with robots.txt or use noindex?

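A: Block true crawl traps via robots.txt to save cycles. For index control, use canonical or noindex (meta robots tag or X-Robots-Tag HTTP header). Pick one approach per case and keep it consistent: a crawler cannot see a noindex on a URL that robots.txt blocks it from fetching.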

Q: Is rel=next/prev still used?

A: Google no longer uses it for indexing. Use strong internal links, a practical view all when suitable, and clear canonicals on listings.

For further depth, see Google’s original article Optimize your crawling & indexing, the GSC Crawl Stats report, and this video walkthrough: Complete Guide to Crawl Budget Optimization.

A final note for practical leaders: crawl budget may not be the loudest lever, but it keeps your pipeline tidy. When bots spend time on the right pages and your server stays quick, the rest of your SEO work pays off sooner. The relief you feel when a new case study lands and shows up in search within days instead of weeks is not luck - it is crawl budget working quietly in your favor.
