My CFO does not care how clever my prompts sound. They care about reliable pipeline growth without surprise bills. If my AI bills have been creeping up while leads are flat, I do not have a strategy problem so much as a cost-control problem. Good news: I can rein things in fast. The trick is to treat tokens like money in the bank, because that is exactly what they are. A few small changes today can shrink spend this week, then set me up for steadier margins next quarter.

Quick wins for AI token optimization

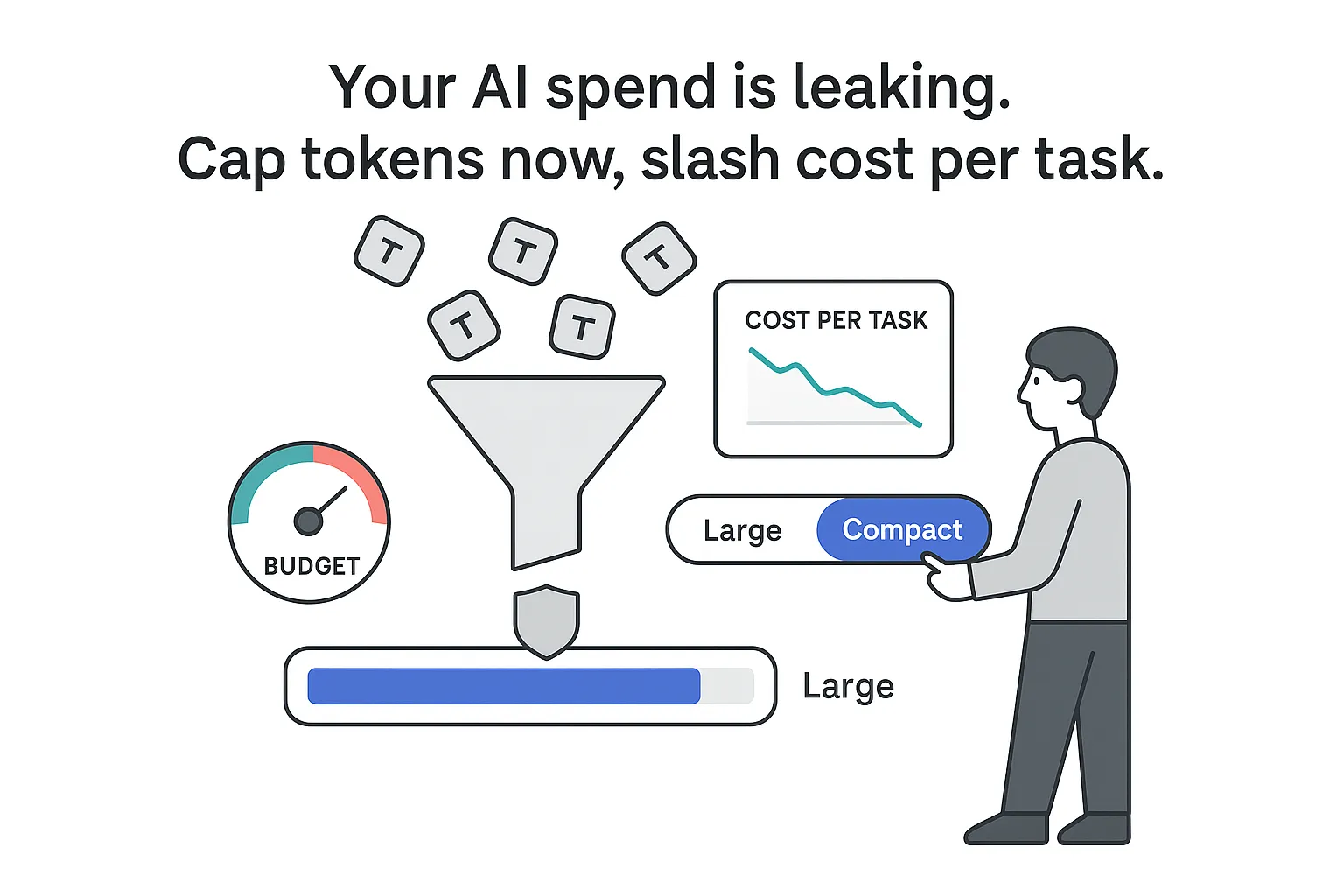

If I want savings in days, not months, I start here. In B2B workflows, these moves typically cut cost per task by 30 to 60 percent once standardized, depending on baseline quality and retry rates.

- I set explicit max_tokens and enforce output constraints. I do not let the model ramble. I cap responses at what the task truly needs - and keep it there.

- I switch non-critical tasks to compact models. Drafts, summaries, and first-pass QA run on a small-footprint option; I reserve my flagship model for high-stakes steps.

- I cache reusable system instructions and retrieved context. If the same role prompts, policies, or FAQs repeat, I store them and, where the provider supports prompt or context caching, reference cached IDs or templates instead of resending the full text at full price.

- I batch similar API calls when latency is flexible. Grouping low-priority tasks trims overhead and idle time, and some providers price asynchronous batch jobs below their real-time rates.

- I standardize concise templates per use case. Short, consistent prompt frames reduce context bloat and make outputs more predictable.

- I throttle retries and set sensible stop sequences. Silent retries and runaway generations torch budgets; guardrails prevent both.
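The retry guardrail can be made concrete. Below is a minimal, provider-agnostic Python sketch of throttled retries: `call_with_backoff` and `RetryBudgetExceeded` are hypothetical names, and `call` stands in for whatever function actually sends the request. Many SDKs ship their own retry settings; if yours does, configure those instead of stacking a second retry layer on top.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RetryBudgetExceeded(Exception):
    """Raised when a call still fails after the hard attempt ceiling."""


def call_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 3,       # hard ceiling on paid attempts per task
    base_delay: float = 1.0,     # seconds to wait before the first retry
    max_delay: float = 8.0,      # cap on any single wait
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Run `call`, retrying failures with capped exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng or random.Random()
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except Exception as exc:  # narrow this to transient errors in real use
            if attempt == max_attempts:
                raise RetryBudgetExceeded(
                    f"gave up after {max_attempts} attempts"
                ) from exc
            # Waits grow 1s, 2s, 4s, ... up to max_delay; "full jitter" picks a
            # random wait below that bound so workers do not retry in lockstep.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(rng.uniform(0, delay))
    raise AssertionError("unreachable")
```

With the defaults, one task can never cost more than three paid attempts, and the jittered waits keep many workers from hammering the API at the same moment.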

A counterintuitive truth: longer prompts can be cheaper - when they are purposeful. Two tight examples can prevent three bad iterations. I spend a few tokens once to save many tokens later.
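That trade-off is plain arithmetic once I count attempts, not just tokens. A minimal Python sketch, assuming hypothetical token counts and acceptance rates, the illustrative $0.50 / $1.50 per-1K rates used later in this article, and independent attempts (so the expected number of attempts is 1 / acceptance rate):

```python
def expected_cost_per_success(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend until the first acceptable output.

    Assumes independent attempts with a constant success rate, so the
    expected number of attempts is 1 / success_rate (a geometric distribution).
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate


# Hypothetical task priced at $0.50 / $1.50 per 1K input / output tokens.
# Lean prompt: 400 input + 500 output tokens, accepted 40% of the time.
lean_attempt = 0.4 * 0.50 + 0.5 * 1.50        # $0.95 per attempt
# Same prompt plus two tight examples: 700 input + 500 output, accepted 90%.
examples_attempt = 0.7 * 0.50 + 0.5 * 1.50    # $1.10 per attempt

lean = expected_cost_per_success(lean_attempt, 0.4)
with_examples = expected_cost_per_success(examples_attempt, 0.9)
print(f"lean: ${lean:.2f} per accepted output")
print(f"two examples: ${with_examples:.2f} per accepted output")
```

Under those assumptions the lean prompt needs 2.5 attempts on average and lands at about $2.38 per accepted output, while the version with two examples lands at about $1.22.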

Token costs explained

A token is a small chunk of text that models read and write. Billing is tied to tokens, not characters or words. I think of tokens as the metered units on my AI utility bill.

Basic equation:

Cost = (input tokens / 1,000 x price_in_per_1K) + (output tokens / 1,000 x price_out_per_1K)

Many providers publish separate rates for input and output. If output is billed higher, limiting verbosity is a direct cost win.

Context windows matter. A model’s context window is the maximum number of tokens it can process in one go. Long policies, full transcripts, or entire email threads fill that window fast and burn money in two ways: more input tokens, and often longer outputs because the model has more to chew on.

A quick B2B example - drafting proposals with AI (illustrative rates; plug in actual provider pricing):

- Prompt: 1,200 tokens of client notes, SOW boilerplate, and three examples

- Output: 900 tokens of proposal draft

- Prices: $0.50 per 1K input tokens and $1.50 per 1K output tokens

Estimated cost per run:

- Input: 1.2 x $0.50 = $0.60

- Output: 0.9 x $1.50 = $1.35

- Total: $1.95

Trim the prompt to 700 tokens by removing unnecessary history, cap the output at 600 tokens, and I get:

- Input: 0.7 x $0.50 = $0.35

- Output: 0.6 x $1.50 = $0.90

- Total: $1.25

That is about 36 percent off every proposal, as long as the shorter, capped draft still clears my quality bar.
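The same calculation as a small, reusable Python function makes it easy to rerun with real provider rates. This is a sketch: `Pricing` and `run_cost` are hypothetical names, and the rates are this example's illustrative numbers, not any provider's price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-1K-token rates (illustrative values, not a real price list)."""
    input_per_1k: float
    output_per_1k: float


def run_cost(input_tokens: int, output_tokens: int, pricing: Pricing) -> float:
    """Cost = input/1,000 x input rate + output/1,000 x output rate."""
    return (input_tokens / 1000) * pricing.input_per_1k + (
        output_tokens / 1000
    ) * pricing.output_per_1k


rates = Pricing(input_per_1k=0.50, output_per_1k=1.50)
before = run_cost(1_200, 900, rates)  # original proposal prompt and draft
after = run_cost(700, 600, rates)     # trimmed prompt, capped output
savings = 1 - after / before
print(f"before ${before:.2f}, after ${after:.2f}, savings {savings:.0%}")
```

Swapping in actual pricing only means changing the `Pricing` values; the token counts can come from the usage figures many APIs return with each response.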

Input and output tokens

Input tokens are everything I send in. Output tokens are everything I get back. The ratio between them depends on the task, and in generation work a short brief can balloon into a long draft. That gap is where budget goes to die.

Typical ratios by task:

- Summarization: input heavy, output light. A 3,000-token meeting note becomes a 150-token summary. It is a great bargain when I cap results properly.

- Generation: input light, output heavy. A 150-token brief turns into an 800-token draft. Here, strict output control matters most.

Why output control often beats shaving a sentence off the prompt:

- Output is frequently billed at a higher rate.

- Every extra paragraph compounds downstream effort: my team reads more, edits more, and waits longer.

Tactics that keep outputs lean and useful:

- Force summary lengths. For example, 5 bullets with a 10-word limit each.

- Prefer bullets over paragraphs for internal workflows.

- Request structured JSON for machine ingestion; compact schemas beat florid prose.

- Set stop sequences that end responses at the right place.

The brevity rule is my default. The efficiency exception applies when a couple of examples make the model hit the mark on the first pass.

Pricing and model selection

Provider pricing varies, sometimes by a lot. Per-1K-token rates, input/output splits, and context window sizes all influence unit economics. Larger models often reduce retries for complex tasks; smaller ones often shine for drafts, QA, extraction, and classification.

A practical pattern I apply:

- Draft with a compact model for ideation, outlines, extraction, and first-pass summaries.

- Finalize with a flagship model for customer-facing copy, proposal language, and anything that reaches a prospect.

- Use structured modes that constrain outputs (for example, JSON) to keep paid output tokens tight.

- Batch jobs when latency is not critical to lower overhead for bulk processing.

- Reuse context. Where supported, reference shared system instructions or policy snippets by ID instead of resending full text; honor limits and expirations.

Different vendors have different strengths. External benchmarks help me compare cost and quality side by side, using sources like Artificial Analysis, including its published methodology and cost-to-run chart. A switch pays off if core tasks run shorter or more accurately on another stack. I let trials guide me and measure cost per task and error rates, not just headline token prices.

For deeper technique work on efficient inference, model distillation can help. See How AI Model Distillation Helps You Build Efficient AI Models.

Hidden costs

The obvious line items show up on the invoice. The less obvious ones bite later.

- Long system prompts. Hidden role instructions count toward tokens; I keep them short and reusable.

- RAG over-retrieval. Pulling ten chunks when three suffice bloats input size; I tune chunking and top_k.

- Too many few-shot examples. Three compact, high-signal examples beat eight long ones.

- Uncontrolled multistep chains. If each step passes the full, growing context to the next, input tokens compound; by step three I am paying for the same text several times.

- Retries and timeouts. Quiet retry storms are budget killers; I add backoff and hard ceilings.

- Latency tax on humans. Slower responses drive idle time and context switching; time has a cost.

- Storage and embeddings. I pay to create and store embeddings; I keep only what drives outcomes.

- Monitoring overhead. Data I never review is just another bill.
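Fixing RAG over-retrieval usually means adding a selection step between the retriever and the prompt. A minimal Python sketch, assuming chunks arrive with relevance scores already attached; `select_chunks` is a hypothetical helper, and the four-characters-per-token estimate is a rough English-text heuristic rather than a real tokenizer.

```python
from typing import List, Tuple


def select_chunks(
    scored_chunks: List[Tuple[float, str]],
    top_k: int = 3,
    token_budget: int = 600,
    chars_per_token: float = 4.0,
) -> List[str]:
    """Keep the highest-scoring chunks, stopping at top_k or the token budget.

    Token counts are approximated as len(text) / chars_per_token; use the
    provider's tokenizer when numbers need to match the bill.
    """
    selected: List[str] = []
    used = 0
    for _score, text in sorted(scored_chunks, key=lambda sc: sc[0], reverse=True):
        if len(selected) >= top_k:
            break
        cost = max(1, round(len(text) / chars_per_token))
        if used + cost > token_budget:
            continue  # too big for what is left; a smaller chunk may still fit
        selected.append(text)
        used += cost
    return selected
```

Both knobs matter: `top_k` bounds how many passages go in, and the token budget stops a few oversized chunks from quietly inflating input size.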

I pull this together through total cost of ownership (TCO): API cost + engineering time + ops and latency + rework from bad outputs. A simple B2B KPI lens helps. If my cost per qualified lead rises because verbose drafts slow sales, I am paying twice: once for tokens and again for slower follow-up and lower connect rates.

A quick sketch. Suppose each proposal review meeting costs a senior account director 30 minutes. If token bloat adds 10 minutes to each read and that director sits in six such meetings a week, that is an hour of senior time every week spent on fluff. Trim output lengths and structure the draft; my cost per opportunity drops because the team moves faster.
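The TCO equation and the meeting example fit in a few lines of Python. Every dollar figure here is a hypothetical placeholder (including the $150 loaded hourly rate), and extra reading time is booked as rework; the point is the structure, not the numbers.

```python
def review_time_cost(extra_minutes_per_meeting: float, meetings_per_week: int,
                     loaded_hourly_rate: float) -> float:
    """Weekly dollar cost of the extra reading time caused by verbose drafts."""
    hours = extra_minutes_per_meeting * meetings_per_week / 60
    return hours * loaded_hourly_rate


def weekly_tco(api_cost: float, engineering_cost: float,
               ops_and_latency_cost: float, rework_cost: float) -> float:
    """TCO = API cost + engineering time + ops and latency + rework."""
    return api_cost + engineering_cost + ops_and_latency_cost + rework_cost


# Hypothetical weekly figures, in dollars.
reading_bloat = review_time_cost(extra_minutes_per_meeting=10, meetings_per_week=6,
                                 loaded_hourly_rate=150.0)
tco = weekly_tco(api_cost=40.0, engineering_cost=300.0,
                 ops_and_latency_cost=50.0, rework_cost=reading_bloat)
print(f"reading bloat: ${reading_bloat:.0f}/week, TCO: ${tco:.0f}/week")
```

In this made-up week, the extra reading time alone costs more than the API line item, which is why output length is worth tracking as a people cost too.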

Prompt engineering that cuts cost

I treat prompt engineering as an SOP for predictable, low-cost results - tight habits, not fluffy rules.

- I write efficient prompts: role, audience, exact task, output format, and constraints in one short block.

- I use the brevity rule by default; inputs stay lean.

- I apply the efficiency exception when two compact examples will prevent reruns.

- I limit output length with exact caps (words, bullets, fields, or characters).

- I minimize context in multi-turn flows by summarizing history into a short state; I do not resend whole transcripts.

- I replace unguided iteration with criteria-driven loops: ask for one version, then refine against specific feedback.

- I use cheaper models for first drafts and experiments and escalate only when shape and structure are right.
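The multi-turn habit above (send a short state, not the whole transcript) can be sketched as a budgeted history builder. This is a minimal Python sketch: `compact_history` and `approx_tokens` are hypothetical helpers, the role/content message dicts mimic the common chat shape rather than a specific SDK, the running summary is assumed to be maintained elsewhere (for example, by a compact model after each turn), and the character-based token estimate is a rough assumption.

```python
from typing import Dict, List


def approx_tokens(text: str) -> int:
    """Rough estimate (about 4 characters per token in English); not a tokenizer."""
    return max(1, len(text) // 4)


def compact_history(turns: List[Dict[str, str]], state_summary: str,
                    budget_tokens: int = 300) -> List[Dict[str, str]]:
    """Resend a short state summary plus only the most recent turns that fit."""
    messages = [{"role": "system", "content": f"Conversation state: {state_summary}"}]
    used = approx_tokens(messages[0]["content"])
    recent: List[Dict[str, str]] = []
    for turn in reversed(turns):  # walk from newest to oldest
        cost = approx_tokens(turn["content"])
        if used + cost > budget_tokens:
            break  # older turns are represented by the summary instead
        recent.append(turn)
        used += cost
    return messages + list(reversed(recent))  # restore chronological order
```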

Side-by-side example with token deltas

Scenario: extract key fields from a customer email and format for a CRM.

Costly, vague prompt

- Prompt text:

Please read this entire email thread and tell me what matters. I need a summary and some key points. Anything you think is useful is fine. Also add recommendations for next steps and anything we might have missed.

- Input tokens: 1,800

- Output tokens: 900

- Cost (assuming $0.50 per 1K input and $1.50 per 1K output):

Input $0.90, output $1.35, total $2.25

- Problems: long, unstructured output; manual editing required

Optimized, constrained prompt

- Prompt text:

Role: Sales ops assistant.

Task: Extract exact fields from the email thread below.

Audience: CRM.

Output: Valid JSON only. Keys: contact_name, company, job_title, intent, requested_demo, timeline, risks, next_action. Limit each string to 12 words.

Return nothing else.

- Input tokens: 1,050

- Output tokens: 250

- Cost:

Input $0.525, output $0.375, total $0.90

- Result: 60 percent lower cost per run and less human review time

The savings come from structure and limits, not magic words. When I keep prompts boring and specific, I get consistent, inexpensive results.
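Because the optimized prompt promises valid JSON with fixed keys and a 12-word limit, that contract is cheap to check in code before anything reaches the CRM. A minimal sketch using only Python's standard library; `validate_crm_record` is a hypothetical helper, and the key list is copied from the prompt above.

```python
import json
from typing import Any, Dict

# Keys and word limit taken from the optimized prompt.
REQUIRED_KEYS = {
    "contact_name", "company", "job_title", "intent",
    "requested_demo", "timeline", "risks", "next_action",
}
MAX_WORDS = 12


def validate_crm_record(raw: str) -> Dict[str, Any]:
    """Parse model output and enforce the prompt's contract.

    Raises ValueError if the output is not a JSON object, has missing or
    extra keys, or contains a string longer than MAX_WORDS words.
    """
    try:
        record = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(record, dict):
        raise ValueError("expected a JSON object")
    keys = set(record)
    if keys != REQUIRED_KEYS:
        raise ValueError(
            f"missing {sorted(REQUIRED_KEYS - keys)}, extra {sorted(keys - REQUIRED_KEYS)}"
        )
    for key, value in record.items():
        if isinstance(value, str) and len(value.split()) > MAX_WORDS:
            raise ValueError(f"{key} exceeds {MAX_WORDS} words")
    return record
```

A failed check is a natural trigger for one capped retry or an escalation to a stronger model, rather than a human edit.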

Model settings that keep bills predictable

Models have dials. I set them thoughtfully once, then avoid micromanaging.

- Temperature and top_p: Lower values make sampling more deterministic, which tends to mean more consistent answers, fewer retries, and fewer off-topic tangents.

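- max_tokens: I set this aggressively. If a task needs 300 tokens, I do not allow 1,500. I pair the cap with explicit length instructions, because hitting the cap truncates the response mid-thought rather than making it more concise.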

- Frequency and presence penalties: I nudge the model away from repetition and filler.

- Structured modes (e.g., JSON) or function/tool calling: I force compact, machine-friendly outputs. Machines do not need adjectives.

- RAG tuning: I right-size chunks to retrieve fewer, richer passages; I tune top_k to the smallest set that keeps accuracy high and score retrieval quality regularly.

- Batch sizes: When speed is not critical, I batch requests to reduce overhead and stabilize costs across volume.

- Model cascading: I route simple tasks to a cheaper model and escalate only if a validation step fails or confidence drops. Average spend stays low while quality holds for the hard cases.

- Mixture-of-experts concepts: For specialized tasks, I borrow the idea loosely and split the work by skill (classifier → summarizer → formatter); a chain of focused steps can beat a single giant model on cost and consistency.

- Fine-tuning: For repetitive formats, fine-tuning can reduce prompt length and shrink outputs. It carries an upfront bill and some MLOps care. Learn more about Fine-tuning.
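Model cascading reduces to a small router plus a cost estimate. In this minimal Python sketch, `cheap_model`, `strong_model`, and `is_acceptable` are hypothetical stand-ins for real model calls and a real validation step (such as a schema check), not any SDK's API. The cost helper assumes the cheap call is always paid and the strong call is paid only on escalation.

```python
from typing import Callable, Tuple


def cascade(prompt: str,
            cheap_model: Callable[[str], str],
            strong_model: Callable[[str], str],
            is_acceptable: Callable[[str], bool]) -> Tuple[str, str]:
    """Try the cheap model first and escalate only if validation fails.

    Returns (output, tier) so spend can be reported per tier.
    """
    draft = cheap_model(prompt)
    if is_acceptable(draft):
        return draft, "cheap"
    return strong_model(prompt), "strong"


def expected_cascade_cost(cheap_cost: float, strong_cost: float,
                          cheap_pass_rate: float) -> float:
    """Average cost per task: cheap call always, strong call only on escalation."""
    if not 0 <= cheap_pass_rate <= 1:
        raise ValueError("cheap_pass_rate must be in [0, 1]")
    return cheap_cost + (1 - cheap_pass_rate) * strong_cost
```

With a hypothetical $0.10 cheap call, a $1.00 strong call, and an 80 percent pass rate, the expected cost is about $0.30 per task instead of $1.00 for sending everything to the flagship model.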

When fine-tuning beats prompt tweaks in B2B workflows:

- The task format is stable for months (e.g., intake fields, FAQ answers, proposal boilerplate).

- I currently resend large examples or instructions to get tone or structure right.

- Output errors still pop up after heavy prompt refinement.

- Volume is high enough that small per-task savings add up weekly.

If three or more match my use case, I run a short trial and measure tokens per task and error rates before and after. If a compact fine-tuned model hits the mark with a much shorter prompt and fewer retries, I get durable savings without constant tinkering.
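The volume criterion is really a break-even question. A minimal Python sketch; the upfront cost and per-task costs are hypothetical placeholders to replace with trial measurements, and the calculation ignores ongoing MLOps effort, which would push the break-even point later.

```python
import math


def breakeven_tasks(upfront_cost: float, cost_per_task_before: float,
                    cost_per_task_after: float) -> int:
    """Number of tasks before per-task savings repay the upfront fine-tuning bill."""
    saving = cost_per_task_before - cost_per_task_after
    if saving <= 0:
        raise ValueError("the fine-tuned path must be cheaper per task to break even")
    return math.ceil(upfront_cost / saving)


# Hypothetical: $500 upfront, per-task cost falls from $0.90 to $0.35.
tasks = breakeven_tasks(500.0, 0.90, 0.35)
print(f"break-even after {tasks} tasks")
```

Under those made-up numbers it takes 910 tasks to break even, which is a few weeks at a few hundred tasks per week.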

Monitoring and governance that stick

If I cannot see it, I cannot steer it. I put simple monitoring in place and make it part of my weekly rhythm. For token counting and instrumentation, I use libraries like OpenAI's tiktoken, practical guides to counting tokens for GPT models, and provider docs on counting tokens for Gemini.

What I instrument:

- Spend visibility at the account and feature level

- Request tracing with token metrics per run

- Experiment tracking for model/prompt variants

- Production observability with alerts on errors, latency, and cost spikes

KPIs that actually matter:

- Tokens per request and tokens per output word (both should trend down as prompts mature)

- Cost per request and cost per task (tracked by feature, not just globally)

- Cache hit rate for reusable prompts and context

- Retry rate and average latency (persistent elevation signals hidden costs)

- Cost per lead and cost per opportunity (tie model choices to revenue metrics)

- Automatic rollback on sudden token spikes (if a new prompt doubles output tokens, I roll back fast)
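The rollback rule in the last bullet can be a very small check over logged per-request output tokens. A minimal Python sketch: `should_roll_back` is a hypothetical helper, the 2x threshold mirrors the bullet's "doubles output tokens" example, and the minimum sample size is an assumption meant to avoid reacting to a handful of outliers.

```python
from statistics import mean
from typing import Sequence


def should_roll_back(baseline_output_tokens: Sequence[int],
                     candidate_output_tokens: Sequence[int],
                     max_ratio: float = 2.0,
                     min_samples: int = 20) -> bool:
    """Flag a prompt version whose mean output tokens exceed max_ratio x baseline."""
    if len(candidate_output_tokens) < min_samples or not baseline_output_tokens:
        return False  # not enough evidence yet
    return mean(candidate_output_tokens) > max_ratio * mean(baseline_output_tokens)
```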

Governance without friction:

- I set per-feature quotas and weekly budgets with soft and hard limits.

- I send lightweight nightly reports highlighting spend and the noisiest prompts.

- I A/B test prompts and models with clear win criteria like cost per task and human edit time.

- I run monthly reviews to retire underperforming templates and promote winners.
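Per-feature soft and hard limits need little more than a comparison that runs before each call or in the nightly report. A minimal Python sketch; the feature names and dollar limits are hypothetical, and what happens at each status (alert, downgrade to a compact model, or block) is a policy choice.

```python
from enum import Enum
from typing import Dict, Tuple


class BudgetStatus(Enum):
    OK = "ok"
    SOFT_LIMIT = "soft_limit"  # alert the owner, keep serving
    HARD_LIMIT = "hard_limit"  # stop or downgrade non-critical calls


def check_budget(spent: float, soft_limit: float, hard_limit: float) -> BudgetStatus:
    """Classify spend-to-date against a feature's weekly soft and hard limits."""
    if not 0 < soft_limit <= hard_limit:
        raise ValueError("expected 0 < soft_limit <= hard_limit")
    if spent >= hard_limit:
        return BudgetStatus.HARD_LIMIT
    if spent >= soft_limit:
        return BudgetStatus.SOFT_LIMIT
    return BudgetStatus.OK


# Hypothetical weekly limits (soft, hard) and spend so far, in dollars.
weekly_limits: Dict[str, Tuple[float, float]] = {
    "proposal_drafts": (80.0, 100.0),
    "crm_extraction": (40.0, 50.0),
}
spend_this_week = {"proposal_drafts": 85.0, "crm_extraction": 12.5}

for feature, (soft, hard) in weekly_limits.items():
    status = check_budget(spend_this_week[feature], soft, hard)
    print(f"{feature}: {status.value}")
```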

Here is the mindset shift that makes this stick: I treat AI token optimization like paid media. I would not run search ads without budget caps, conversion tracking, and creative tests. Tokens deserve the same discipline.

Final thought: simpler systems usually beat clever ones. Short prompts, strict outputs, compact models for drafts, premium models for finals, and steady monitoring. Do this, and my AI stack gets cheaper, faster, and easier to manage. I stop arguing about prompts and start hitting revenue targets with less friction. And my CFO finally smiles when the monthly invoice lands.
