U.S. adults want clear AI labels but lack confidence in spotting AI-made content. New Pew Research Center data quantifies the gap between demand for transparency and users' ability to detect AI.

AI content labeling: U.S. attitudes - Pew 2025

Executive snapshot

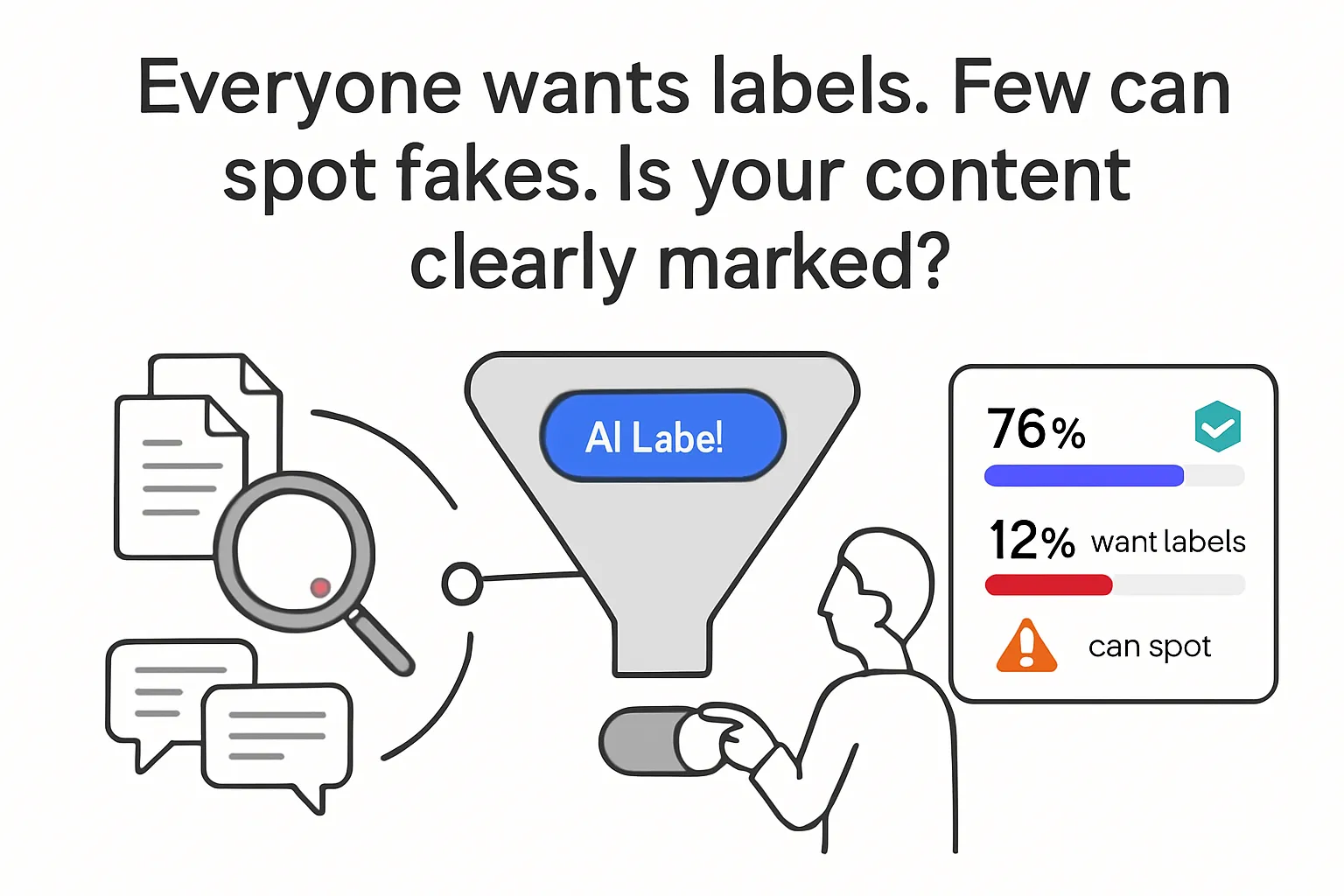

- 76% say it is extremely or very important to know whether pictures, video, or text were made by AI or by people, but only 12% feel confident they could tell the difference.

- About 60% want more control over how AI shows up in their lives, up from 55% a year earlier.

- Roughly half say AI in daily life raises more concerns than excitement; about 10% are more excited than worried.

- Awareness is higher among younger adults: 62% of those under 30 have heard "a lot" about AI, vs 32% of those 65+.

- 53% believe AI will reduce creative thinking and 50% say it will hinder people's ability to connect with others.

Implication for marketers: Visible, plain-language AI disclosure is likely necessary for trust. Do not assume audiences can self-detect AI use.

Method and source notes

- What was measured: U.S. adults' attitudes toward AI in content and daily life, including the importance of AI labeling, self-rated detection confidence, overall sentiment, desired control, acceptance of AI across domains, and perceived impacts on creativity and relationships.

- Who/when: Pew Research Center, "How Americans view AI and its impact on people and society," published September 17, 2025.

- Sample/method: Survey of roughly 5,000 U.S. adults on Pew's nationally representative American Trends Panel; results are weighted to U.S. population benchmarks.

Key limitations and caveats

- Self-reported detection confidence is not the same as actual detection accuracy; the survey did not test recognition performance.

- Acceptance of AI uses is reported at a high level (for example, "data-intensive tasks" vs "personal domains") without detailed sub-use percentages in the summary.

- Margin of error and field dates are not specified in the summary; see Pew's methodology for technical details.
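
Until the official figure is checked, a rough sense of sampling precision can be sketched from the sample size alone. The snippet below is a minimal illustration, not Pew's method: it applies the textbook margin-of-error formula for a proportion, assumes the approximate n of 5,000 from the summary, and uses a hypothetical design effect of 2.0 to show how weighting can widen the interval. The function name `margin_of_error` and the design effect value are assumptions made for illustration.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96,
                    design_effect: float = 1.0) -> float:
    """Approximate margin of error for a proportion, in percentage points.

    n: number of respondents
    p: observed proportion (0.5 gives the widest interval)
    z: critical value (1.96 for roughly 95% confidence)
    design_effect: variance inflation from weighting (1.0 = simple random sample)
    """
    if n <= 0:
        raise ValueError("n must be positive")
    standard_error = math.sqrt(design_effect * p * (1 - p) / n)
    return 100 * z * standard_error


# Simple-random-sample approximation for roughly 5,000 respondents.
srs_moe = margin_of_error(5000)

# Same sample with a HYPOTHETICAL design effect of 2.0 (not Pew's figure).
weighted_moe = margin_of_error(5000, design_effect=2.0)

print(f"SRS approximation: +/- {srs_moe:.1f} points")
print(f"With assumed design effect 2.0: +/- {weighted_moe:.1f} points")
```

Subgroup estimates (for example, adults under 30) rest on fewer respondents and carry wider margins, so Pew's published methodology remains the authoritative source.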

Findings on AI labeling, detection confidence, and sentiment

- Labeling vs detection: 76% rate it as extremely or very important to know whether content was made by AI or people, but only 12% feel confident they could tell AI-generated content from human-made content. That 64-percentage-point difference points to a large transparency-capability gap that disclosure must bridge.

- Desire for control and overall sentiment: About 60% want more control over AI in their lives, up from 55% the prior year. About half say AI's growing presence brings more concern than excitement, while about 10% report more excitement than worry.

- Acceptance by domain: Majorities support AI in data-intensive areas such as weather prediction, financial crime detection, fraud investigation, and drug development. About two-thirds oppose AI in personal domains like religious guidance and matchmaking where human judgment is expected.

- Age differences: Awareness is higher among younger adults (62% of under-30s say they have heard a lot about AI vs 32% of those 65+). Higher awareness does not translate to optimism; younger adults are more likely to say AI will harm creative thinking and meaningful relationships.

- Creativity and social connection concerns: 53% say AI will reduce creative thinking; 50% say it will hinder people's ability to connect with others. Few expect improvements, signaling that content which highlights human input may matter for perceived quality and authenticity.

Interpretation and implications for marketing and product teams

- Likely: Prominent, plain-language AI labels at the point of consumption will align with user expectations and reduce uncertainty. Given that only 12% feel confident detecting AI, do not rely on users to infer AI involvement from context or style cues.

- Likely: Human-in-the-loop messaging can mitigate perceived creativity and connection risks. Where AI assists content or service delivery, disclose the human role (editing, oversight, final approval) to address concerns about reduced creativity and social connection.

- Likely: Match AI usage to domain acceptance. For data-heavy back-office tasks (for example, fraud analytics), user resistance is lower. For personal or values-laden services (for example, spiritual advice, matchmaking), expect pushback and consider human-led experiences.

- Likely: Younger audiences may require more reassurance, not less. Despite higher awareness, younger adults are more likely to anticipate negative effects on creativity and relationships, so emphasize human authorship and craft for youth-oriented creative.

- Tentative: Placement and wording of AI disclosures may affect trust and engagement. Test short, non-technical labels (for example, "This image includes AI-generated elements; reviewed by our team") versus detailed provenance notes for comprehension and willingness to proceed.

- Tentative: Provenance standards (for example, visible watermarks plus metadata) could outperform invisible, detector-based approaches for user assurance, given low self-confidence in detection skills.

- Speculative: Over-labeling low-stakes assistive uses may add friction without improving trust. Calibrate disclosure depth to context risk - more detail in health, finance, and legal; concise notices in routine creative support - then measure outcomes.
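
The idea of calibrating disclosure depth to context risk can be sketched as a small lookup. This is an illustrative sketch only: the function name `disclosure_label`, the context strings, and the label wording are hypothetical, and the high-stakes set simply mirrors the health, finance, and legal examples above. Any real wording should come out of user testing.

```python
# Contexts treated as high-stakes, mirroring the examples in the text.
HIGH_STAKES_CONTEXTS = {"health", "finance", "legal"}


def disclosure_label(context: str, human_reviewed: bool) -> str:
    """Return a plain-language AI disclosure sized to the context's risk.

    context: a short tag for where the content appears (hypothetical values)
    human_reviewed: whether a person edited or approved the content
    """
    base = "This content includes AI-generated elements"
    review = "; reviewed by our team" if human_reviewed else ""
    if context.lower() in HIGH_STAKES_CONTEXTS:
        # Higher-risk contexts get a pointer to fuller provenance detail.
        return f"{base}{review}. See details on how AI was used."
    # Routine contexts get the concise notice only.
    return f"{base}{review}."


print(disclosure_label("finance", human_reviewed=True))
print(disclosure_label("creative", human_reviewed=False))
```

Keeping the rule in one place makes it straightforward to swap label variants in and out when testing which wording users understand.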

Contradictions and gaps

- Confidence vs capability: The survey measures self-confidence, not actual ability to detect AI. The 12% figure is the share who feel confident, not an accuracy rate, so real-world detection performance could be better or worse than it suggests.

- Use-case granularity: The report groups "data-intensive" and "personal" domains; detailed percentages by specific application are not included in the summary provided here.

- Behavioral effects: The data shows label demand but does not quantify how labels influence engagement, conversion, or brand trust in practice. Controlled experiments are needed to estimate performance trade-offs.

- Temporal dynamics: The increase in desire for control (60% vs 55% last year) suggests a trend, but the cadence and drivers of change are not fully explained.
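
One way to close the behavioral-effects gap is an A/B test comparing an outcome rate (for example, click-through or conversion) between a labeled and an unlabeled variant. The sketch below uses a standard two-proportion z-test. The function name `two_proportion_z_test` is an assumption, and the counts in the usage lines are made up for illustration; they are not from the Pew report.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two proportions.

    Returns (z, p_value), where z > 0 means variant B's rate is higher.
    """
    rate_a = success_a / n_a
    rate_b = success_b / n_b
    # Pooled rate under the null hypothesis of no difference.
    pooled = (success_a + success_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        raise ValueError("no variation in outcomes; test is undefined")
    z = (rate_b - rate_a) / standard_error
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up counts: variant A = no label, variant B = plain-language AI label.
z, p_value = two_proportion_z_test(success_a=100, n_a=1000,
                                   success_b=150, n_b=1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A significant difference in such a test indicates only that the variants perform differently on the chosen metric; trust and comprehension need their own measures.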

Sources

Pew Research Center, "How Americans view AI and its impact on people and society," September 17, 2025.
