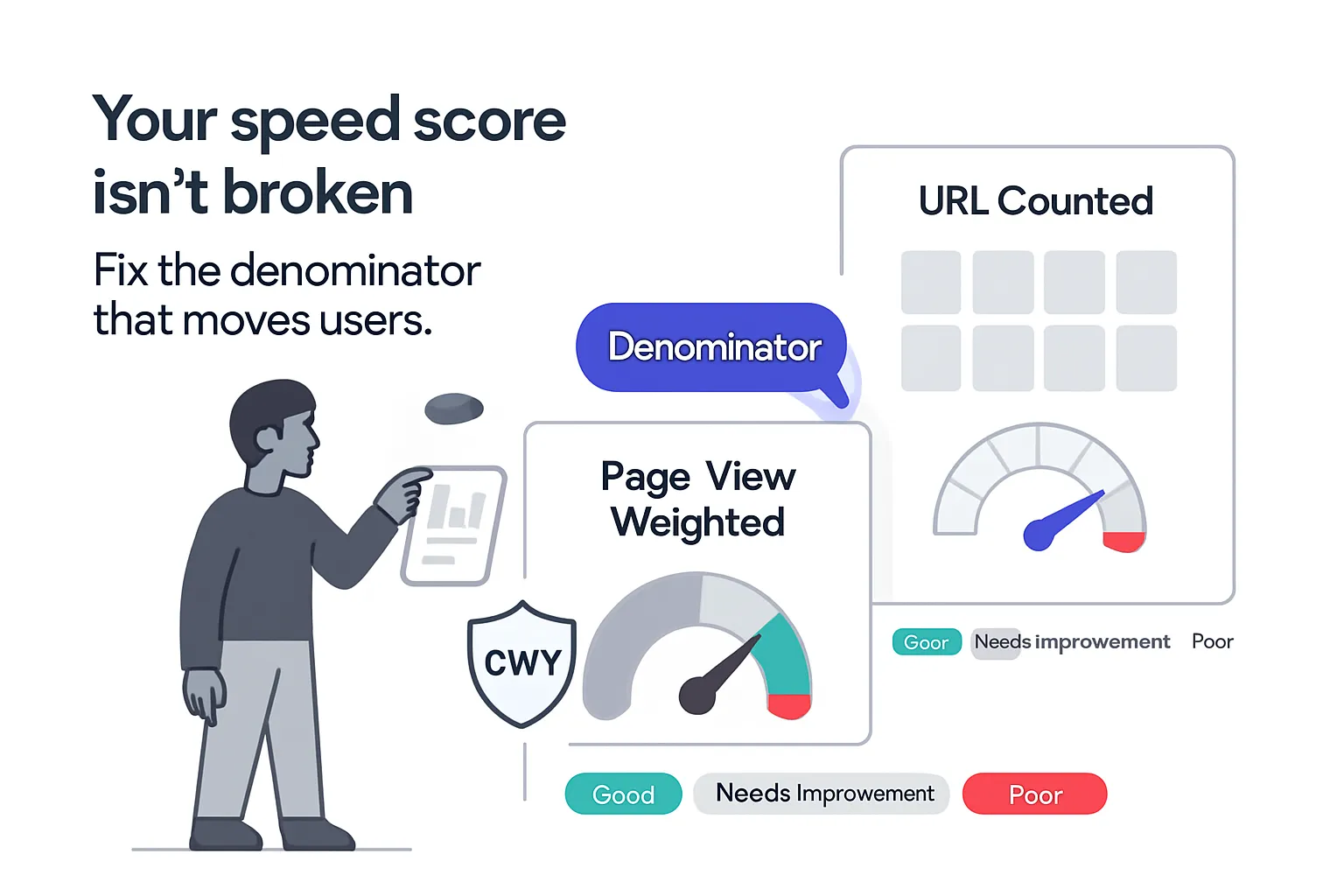

Mismatches between CrUX and Search Console on Core Web Vitals are largely a denominator problem: CrUX weights by page views, while Search Console counts URLs or URL groups. That difference changes which pages you prioritize, how you report progress, and how you connect performance to SEO and paid outcomes.

Core Web Vitals mismatch and what it means for marketers

Google's explanation is straightforward: the Chrome UX Report (CrUX) aggregates real-user field data by page views, while Search Console's Core Web Vitals report aggregates by URLs, often grouped by template. Both can be correct at the same time because they answer different questions. For marketers the distinction is material: optimizing for page-view-weighted user experience and optimizing for template coverage lead to different targets, timelines, and success metrics. Google documents the mechanics in its Core Web Vitals report documentation, and Barry Pollard addressed the issue on Bluesky.

Key Takeaways

- Expect systematic gaps, driven by traffic concentration. If 80% of page views land on the 20% of pages that pass CWV, and the remaining 80% of pages fail, CrUX can show about 80% good page views while Search Console shows about 20% good URLs. Action: set dual KPIs - % good page views in CrUX for impact now, and % good URL groups in Search Console for site coverage progress.

- Prioritize by revenue and session weight first, then raise template coverage. Action: fix top landing, home, and catalog pages to move real UX and paid outcomes, then improve long-tail templates to lift Search Console's coverage signal.

- Reporting needs denominator clarity. Action: label every chart as either page-view weighted or URL/group counted, and note the 28-day window. Do not blend the two into one metric without context.

- INP is in scope, and all thresholds apply at the 75th percentile. Action: watch INP ≤200 ms, LCP ≤2.5 s, and CLS ≤0.1 at p75 in both tools. Small regressions on high-traffic pages can swing CrUX more than Search Console.

- PPC and SEO readouts should differ. Action: Ads and CRO dashboards should anchor on CrUX for session-weighted UX, while SEO technical backlogs should anchor on Search Console URL groups for good-URL coverage.
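To make the first takeaway concrete, here is a minimal Python sketch that computes both KPIs from per-URL rows and bakes the denominator and window into each label. It assumes you already have page-view counts and a pass/fail status per URL (the `UrlStats` rows and `dual_kpis` helper are hypothetical). Like the takeaway, it treats every view of a passing URL as a good view, which simplifies how CrUX counts individual experiences.

```python
from dataclasses import dataclass


@dataclass
class UrlStats:
    url: str
    page_views: int  # page views in the 28-day window (hypothetical input)
    passes: bool     # True if the URL is "good" on LCP, INP, and CLS at p75


def dual_kpis(rows):
    """Return both KPIs, keyed by labels that name their denominator and window."""
    total_views = sum(r.page_views for r in rows)
    good_views = sum(r.page_views for r in rows if r.passes)
    good_urls = sum(1 for r in rows if r.passes)
    return {
        # Denominator: page views (CrUX-style weighting)
        "% good page views (page-view weighted, 28-day)":
            round(100 * good_views / total_views, 1),
        # Denominator: URLs (Search Console-style counting)
        "% good URLs (URL count, 28-day)":
            round(100 * good_urls / len(rows), 1),
    }
```

With 20 passing URLs at 400 views each and 80 failing URLs at 25 views each, `dual_kpis` returns 80.0 for the page-view-weighted KPI and 20.0 for the URL-count KPI, the same split as the 80/20 takeaway.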

Situation Snapshot

- Trigger: Barry Pollard addressed the issue on Bluesky, explaining why a site can see 90% good in CrUX but only about 50% good URLs in Search Console: each tool aggregates the same field data differently.

- Facts:

- CrUX is the official field dataset powering PageSpeed Insights and Search Console. It aggregates data over a rolling 28-day window and evaluates the 75th percentile of user experiences. See PageSpeed Insights and the Chrome UX Report methodology.

- Search Console's Core Web Vitals report groups similar URLs and classifies them by CWV thresholds using CrUX field data, split by device. See the official Core Web Vitals report.

- INP replaced FID as a Core Web Vital in 2024. See INP guidance.
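To inspect the same field data yourself, here is a minimal sketch that assumes the public CrUX API `records:queryRecord` endpoint and an API key you supply (`api_key`, the example URL, and the `query_crux` and `summarize` helpers are placeholders, not an official client). For each metric, the first histogram bin holds the share of page views in the "good" range, which is the page-view-weighted view, and `percentiles.p75` is the value the thresholds are applied to. CLS values arrive as strings, so the sketch converts everything to `float`. A URL without enough data returns an HTTP error rather than a record.

```python
import json
import urllib.request

CRUX_ENDPOINT = "https://chromeuxreport.googleapis.com/v1/records:queryRecord"
METRICS = ["largest_contentful_paint", "interaction_to_next_paint", "cumulative_layout_shift"]


def query_crux(url, api_key, form_factor="PHONE"):
    """POST a URL-level query to the CrUX API and return the decoded JSON."""
    body = json.dumps({"url": url, "formFactor": form_factor, "metrics": METRICS}).encode()
    req = urllib.request.Request(
        f"{CRUX_ENDPOINT}?key={api_key}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:  # raises HTTPError if no data
        return json.load(resp)


def summarize(record_json):
    """Per metric: p75 value and share of page views in the first ("good") bin."""
    out = {}
    for name, data in record_json["record"]["metrics"].items():
        out[name] = {
            "p75": float(data["percentiles"]["p75"]),  # CLS p75 is a string
            "good_share": float(data["histogram"][0].get("density", 0.0)),
        }
    return out

# Usage (requires a real key and network access):
# print(summarize(query_crux("https://example.com/", api_key="YOUR_KEY")))
```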

Breakdown and mechanics

- Two denominators:

- CrUX: good page views divided by total page views - a user experience share.

- Search Console: good URLs divided by URLs with enough data, with status often assigned at the URL-group level - a template coverage share.

- Why gaps emerge:

- Traffic concentration: a few URLs capture most visits.

- Grouping: Search Console clusters similar URLs. One slow component in a template can suppress an entire group.

- Sampling thresholds: low-traffic URLs may lack enough data to appear in URL-level CrUX API results, while Search Console may still assign them to a group or mark them as "Not enough data".

- Cause-effect chain:

- Heavy traffic on fast pages -> CrUX good share rises -> affects Ads and CRO outcomes more than Search Console coverage.

- Many low-traffic templates underperform -> Search Console's good-URL share lags despite strong CrUX.

- Example (illustrative assumptions):

- Site has 1,000 URLs. Top 50 pages = 80% of page views and pass CWV. The remaining 950 pages fail on INP or LCP.

- CrUX ≈ 80% good page views. Search Console ≈ 5% good URLs (50 of 1,000). A p75 improvement on one high-traffic page can move CrUX by 1 to 2 percentage points, while fixing one long-tail template that covers 50 to 100 URLs would lift Search Console by 5 to 10 points.

- Shared rules across both tools:

- Metrics judged at p75: LCP ≤2.5 s, INP ≤200 ms, CLS ≤0.1. See CrUX methodology and INP guidance.

- Rolling window ≈ 28 days. Improvements may take weeks to be fully reflected. See PageSpeed Insights.
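The shared p75 rules can be sketched as follows. This is an approximation under stated assumptions: it takes raw per-view samples and uses a nearest-rank 75th percentile, whereas CrUX estimates p75 from its own aggregated data. The "poor" boundaries (LCP 4 s, INP 500 ms, CLS 0.25) are Google's published thresholds. Taking a page's overall status as its worst metric is a simplified version of how Search Console rates a URL. The helper names are illustrative.

```python
import math

# (good upper bound, needs-improvement upper bound); above the second is "poor"
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "INP": (200, 500),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}
SEVERITY = {"good": 0, "needs improvement": 1, "poor": 2}


def p75(samples):
    """Nearest-rank 75th percentile of raw per-page-view samples (assumption)."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def classify(metric, value):
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def overall(samples_by_metric):
    """Classify each metric at p75, then rate the page by its worst metric."""
    statuses = {m: classify(m, p75(s)) for m, s in samples_by_metric.items()}
    return max(statuses.values(), key=SEVERITY.__getitem__), statuses
```

For example, a page whose p75 LCP is 2,400 ms and p75 CLS is 0.05 but whose p75 INP is 240 ms rates "needs improvement" overall, because a single metric missing its threshold is enough.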

Impact Assessment

Paid Search

- Effect: medium to high on landing page experience and conversion. Limited direct effect on Google Ads Quality Score, but page performance can influence the landing page experience rating as well as bounce and engagement. See landing page experience.

- Winners: advertisers with fast, stable top landing pages. CrUX gains tend to align with better CPC efficiency and CVR.

- Actions:

- Track CrUX % good page views for paid landing pages. Alert when p75 INP rises above 200 ms.

- Use device-split CrUX for mobile-first Ads and tie to session-weighted revenue.

- Expect limited movement in Search Console when only a few high-volume pages are optimized.
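The first two actions can be sketched as a small monitor. It assumes you already have, per paid landing page and device, a page-view count and p75 INP, for example from the CrUX API (the `LandingPage` record and both helpers are hypothetical). It returns the pages over the 200 ms INP threshold, busiest first, and the page-view-weighted share of paid landing views on passing pages, split by device.

```python
from collections import defaultdict
from dataclasses import dataclass

INP_GOOD_MS = 200  # p75 INP "good" threshold


@dataclass
class LandingPage:
    url: str
    device: str        # e.g. "PHONE" or "DESKTOP", mirroring CrUX form factors
    page_views: int    # paid landing views in the window (hypothetical source)
    p75_inp_ms: float  # p75 INP for this URL and device
    passes_cwv: bool   # True if LCP, INP, and CLS are all good at p75


def inp_alerts(pages):
    """Pages whose p75 INP is above 200 ms, highest traffic first."""
    flagged = [p for p in pages if p.p75_inp_ms > INP_GOOD_MS]
    return sorted(flagged, key=lambda p: p.page_views, reverse=True)


def good_view_share_by_device(pages):
    """Page-view-weighted % of paid landing views on passing pages, per device."""
    totals, good = defaultdict(int), defaultdict(int)
    for p in pages:
        totals[p.device] += p.page_views
        if p.passes_cwv:
            good[p.device] += p.page_views
    return {d: round(100 * good[d] / totals[d], 1) for d in totals}
```

Swapping `page_views` for revenue per page would give the revenue-weighted version suggested for mobile-first Ads.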

Organic Search (SEO)

- Effect: low to moderate direct ranking influence. Meaningful for crawl efficiency and user signals. Google treats CWV as part of page experience signals, not a standalone ranking system. See page experience in Search.

- Winners: sites that improve long-tail template performance. Gains in Search Console good URL groups help demonstrate technical quality.

- Actions:

- Maintain dual KPIs: % good page views in CrUX and % good URL groups in Search Console.

- Prioritize templates like product detail, article, category, and filtered listing pages. Fix shared bottlenecks such as server TTFB, image payloads, and JavaScript bloat to uplift many URLs at once.

- Plan for lag: with a 28-day window, expect 4 to 6 weeks from deploy to stable Search Console improvements.
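The lag follows from the window itself, as this minimal simulation shows. It uses the simplest possible model, which is an assumption: constant daily traffic, every pre-deploy view at `before_ms`, and every post-deploy view at `after_ms`. p75 is computed by nearest rank over the pooled 28 days. Even with an instant, complete fix, the rolling p75 only turns good once post-deploy views make up at least 75% of the window. Overlapping distributions and any reporting delay push that later. The function names and traffic figures are illustrative.

```python
import math

WINDOW_DAYS = 28    # rolling field-data window
LCP_GOOD_MS = 2500  # p75 LCP "good" threshold


def rolling_p75(daily_samples, day):
    """Nearest-rank p75 over all samples in the 28 days ending on `day`."""
    start = max(0, day - WINDOW_DAYS + 1)
    pooled = sorted(v for d in range(start, day + 1) for v in daily_samples[d])
    return pooled[math.ceil(0.75 * len(pooled)) - 1]


def days_until_good(before_ms, after_ms, deploy_day, views_per_day=100, horizon=120):
    """Model a step change in LCP on `deploy_day` (hypothetical traffic model).

    Returns how many days of post-deploy data it takes before the rolling p75
    first reaches "good", or None if it never does within `horizon` days.
    """
    daily = [[before_ms if d < deploy_day else after_ms] * views_per_day
             for d in range(horizon)]
    for day in range(deploy_day, horizon):
        if rolling_p75(daily, day) <= LCP_GOOD_MS:
            return day - deploy_day + 1
    return None


print(days_until_good(before_ms=3000, after_ms=2000, deploy_day=30))  # → 21
```

Three weeks is the best case under this model. Real distributions overlap, which is one reason the 4-to-6-week planning horizon is a sensible default.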

Creative and UX

- Effect: medium via layout shifts and interaction responsiveness. CLS and INP often stem from design choices.

- Winners: teams that standardize components like fonts, image ratios, and carousels, and prevent late layout shifts.

- Actions:

- Gate component releases on CLS and INP budgets. Monitor p75 in staging and early rollout cohorts.
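A release gate along these lines might look like the sketch below. It assumes you collect raw CLS and INP samples from staging or an early rollout cohort and compare them with a baseline cohort. The budgets match the "good" thresholds, but the regression tolerances in `MAX_REGRESSION` are hypothetical values a team would tune. The p75 here uses interpolation via `statistics.quantiles`, so treat it as a staging approximation rather than a CrUX-equivalent number.

```python
import statistics

# Budgets aligned with the p75 "good" thresholds; stricter budgets are possible.
BUDGETS = {"CLS": 0.1, "INP": 200.0}
# Hypothetical tolerated p75 increase vs. the baseline cohort.
MAX_REGRESSION = {"CLS": 0.02, "INP": 25.0}


def p75(samples):
    """Interpolated 75th percentile (third quartile) of raw samples."""
    return statistics.quantiles(samples, n=4, method="inclusive")[2]


def gate(candidate, baseline):
    """Return failure messages; an empty list means the release may proceed."""
    failures = []
    for metric, budget in BUDGETS.items():
        cand = p75(candidate[metric])
        base = p75(baseline[metric])
        if cand > budget:
            failures.append(f"{metric} p75 {cand:g} exceeds budget {budget:g}")
        if cand - base > MAX_REGRESSION[metric]:
            failures.append(f"{metric} p75 regressed by {cand - base:g}")
    return failures
```

Checking both an absolute budget and a regression delta catches components that stay under the threshold but quietly erode the headroom on high-traffic pages.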

Operations and Engineering

- Effect: high for platforms with many templates or multi-tenant stacks.

- Winners: organizations that adopt template-level performance SLOs aligned to CWV thresholds and enforce budgets in CI.

- Actions:

- Create a template coverage matrix that tracks URL count, traffic share, revenue share, and current CWV status.

- Schedule work in two tracks: high-impact pages driven by CrUX and a coverage backlog driven by Search Console.

- Monitor TTFB and edge caching on top pages. Profile long-tail pages for JavaScript execution and image delivery.
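The coverage matrix and the two tracks can be sketched together. The rows below are hypothetical and loosely follow the 1,000-URL example. Group status is simplified to pass/fail, and every URL is assumed to have enough data. Track 1 orders failing templates by revenue and traffic share, the CrUX-driven impact backlog. Track 2 orders them by the points of "% good URLs" a fix would add, the Search Console-driven coverage backlog.

```python
from dataclasses import dataclass


@dataclass
class TemplateRow:
    template: str
    url_count: int
    traffic_share: float  # share of page views, 0-1
    revenue_share: float  # share of revenue, 0-1
    passes_cwv: bool      # current group status, simplified to pass/fail


def two_tracks(matrix):
    """Split failing templates into an impact backlog and a coverage backlog."""
    failing = [r for r in matrix if not r.passes_cwv]
    total_urls = sum(r.url_count for r in matrix)
    # Track 1 (CrUX impact): highest revenue, then traffic share, first.
    impact = sorted(failing, key=lambda r: (r.revenue_share, r.traffic_share),
                    reverse=True)
    # Track 2 (Search Console coverage): % good URLs gained if the template passes.
    coverage = sorted(
        ((r.template, round(100 * r.url_count / total_urls, 1)) for r in failing),
        key=lambda item: item[1],
        reverse=True,
    )
    return [r.template for r in impact], coverage
```

With a passing 50-URL landing template, a failing 40-URL category template (25% of revenue), a 360-URL product template (14%), and a 550-URL article template (1%), the impact track reads category, product, article, while the coverage track reads article (+55.0 points), product (+36.0), category (+4.0). The two backlogs invert, which is exactly why the work is scheduled in two tracks.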

Scenarios and probabilities

- Base (likely): persistent gaps between CrUX and Search Console for sites with concentrated traffic. Teams adopt dual metrics and template-based remediation. Expect a 10 to 40 point difference on many sites, narrowing as templates are fixed.

- Upside (possible): standardized components and CDN or image optimization uplift multiple templates. The Search Console good-URL share rises by 20 to 50 points over 1 to 2 quarters while CrUX remains stable or improves modestly.

- Downside (edge): regressions on high-traffic pages, such as a new JavaScript bundle, depress CrUX by 10 to 20 points quickly while Search Console barely changes, raising paid costs via worse landing experience and lowering CVR until reverted.

Risks, unknowns, limitations

- Data gaps: low-traffic sections may lack sufficient field data. Both CrUX and Search Console can report "Not enough data", masking true performance.

- Browser bias: CrUX collects data from Chrome only, so Safari and Firefox users are not represented. How much this skews results depends on your audience's browser mix.

- Grouping opacity: Search Console's URL grouping heuristics are not fully transparent. One component regression can suppress large groups.

- Time windows: rolling 28-day aggregation delays visibility of improvements or regressions. Short tests may be underrepresented.

- What could falsify this analysis: Google changes Search Console to page-view weighting or exposes per-URL p75 without grouping, Ads decouples landing experience from performance, or CWV thresholds or metrics change again.

Sources

- Search Engine Journal, 2025-08, News article - Google: Why CrUX and Search Console do not match on Core Web Vitals.

- Google Search Console Help - Core Web Vitals report: support.google.com/webmasters/answer/9205520

- Barry Pollard, 2025-08, Bluesky - page-view vs URL aggregation: bsky.app/profile/tunetheweb.com/post/3lwqpjx76ys27

- Google Search Central - Page experience in Google Search results: developers.google.com/search/docs/appearance/page-experience

- Deloitte Digital, 2020, Report - Milliseconds Make Millions.

- Google Ads Help - About landing page experience: support.google.com/google-ads/answer/6163142

- PageSpeed Insights - Field data window and p75 methodology: pagespeed.web.dev

- Chrome UX Report methodology and coverage: developer.chrome.com/docs/crux/

- web.dev - Interaction to Next Paint (INP) as a Core Web Vital: web.dev/inp
