Google Cloud researchers introduced DS-STAR, a data science agent, on November 6, 2025, via the Google Research blog. The agent automates data science tasks across heterogeneous data files and iteratively verifies each plan. According to the accompanying paper, DS-STAR achieves higher accuracy than the best alternative agents on several public benchmarks.

DS-STAR announced

Jinsung Yoon and Jaehyun Nam outlined DS-STAR in an official blog post, describing an agent that performs statistical analysis, visualization, and data wrangling, with a preprint available on arXiv.

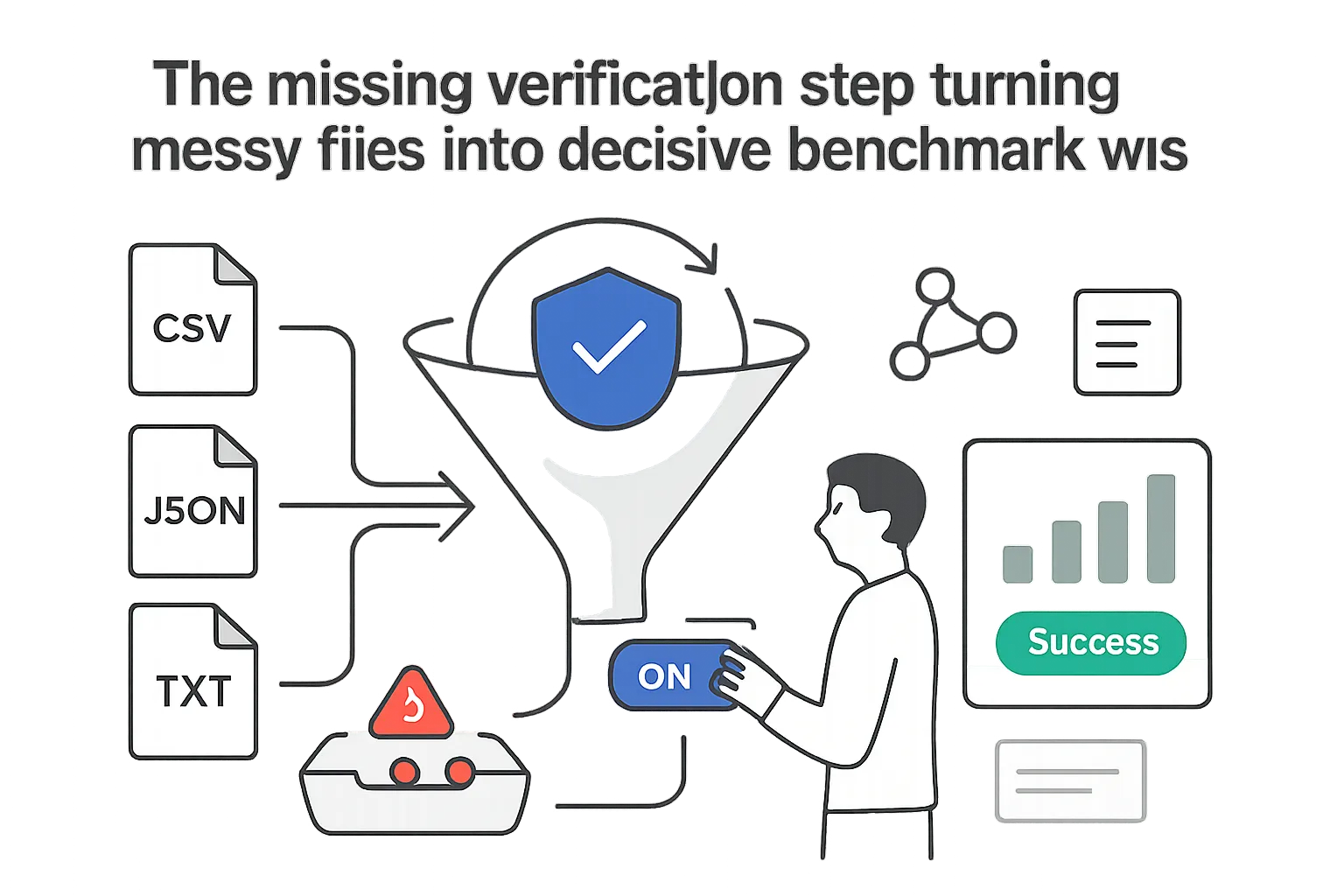

DS-STAR combines a data file analysis module, an LLM-based verification stage, and sequential planning. It summarizes directories containing CSV, JSON, markdown, and unstructured text files, then plans, generates code, runs it, and verifies the results. This design targets the mix of data formats common in real projects.
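To make the file analysis step concrete, here is a minimal sketch of what "summarizing a directory" could look like. It is not DS-STAR's implementation: the paper describes an LLM writing and running a description script per file, whereas this stand-in uses fixed, hand-written rules. The function names `summarize_file` and `summarize_directory` are hypothetical.

```python
import csv
import json
from pathlib import Path

# Hypothetical stand-in for DS-STAR's file analysis stage. The real agent has an
# LLM generate description code per file; here simple fixed rules do the job.


def summarize_file(path: Path, max_chars: int = 200) -> str:
    """Return a short text description of one file, chosen by its extension."""
    suffix = path.suffix.lower()
    if suffix == ".csv":
        with path.open(newline="", encoding="utf-8") as f:
            rows = list(csv.reader(f))
        header = rows[0] if rows else []
        return f"{path.name}: CSV, columns={header}, data_rows={max(len(rows) - 1, 0)}"
    if suffix == ".json":
        data = json.loads(path.read_text(encoding="utf-8"))
        if isinstance(data, dict):
            return f"{path.name}: JSON object, keys={sorted(data)}"
        if isinstance(data, list):
            return f"{path.name}: JSON array, length={len(data)}"
        return f"{path.name}: JSON scalar ({type(data).__name__})"
    # Markdown and other unstructured text: record length plus a short preview.
    text = path.read_text(encoding="utf-8", errors="replace")
    preview = " ".join(text.split())[:max_chars]
    return f"{path.name}: text, {len(text)} chars, preview={preview!r}"


def summarize_directory(root: str) -> str:
    """Concatenate per-file summaries into one context string for a planner."""
    files = sorted(p for p in Path(root).rglob("*") if p.is_file())
    return "\n".join(summarize_file(p) for p in files)
```

Calling `summarize_directory("data/")` on a folder with a CSV, a JSON file, and a markdown note yields one line per file; the resulting string is the kind of context a planning agent could read before writing any analysis code.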

Prior agents often assume well-structured tabular inputs. Recent research highlights both the promise and the limitations of LLM-based data science agents, and subsequent work underscores remaining gaps when tasks are open-ended or lack a single ground truth, as KramaBench illustrates.

Key details and performance

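The framework operates in two stages. It first analyzes the data files, then iterates a loop of planning, coding, and verification until the Verifier judges the output sufficient. Planner, Coder, Verifier, and Router agents coordinate execution and refinement. The loop stops once the Verifier approves the result or after a maximum of 10 rounds.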
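The control flow of the iterative stage can be sketched as a small loop. This is a hypothetical illustration, not the authors' code: each agent is a plain Python callable rather than an LLM call, and the names `run_loop` and `LoopResult` are invented here. The Router rule (return `None` to add a step, or the index of a faulty step to backtrack to) is an assumption about how refinement might work; only the 10-round cap comes from the description above.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical sketch of DS-STAR's second stage. Agents are plain functions
# here; in the real system they are LLM calls. Signatures are assumptions.


@dataclass
class LoopResult:
    plan: List[str]
    output: str
    rounds: int
    approved: bool


def run_loop(
    summaries: str,
    planner: Callable[[str, List[str]], str],           # proposes the next plan step
    coder: Callable[[List[str]], str],                  # implements the plan, returns run output
    verifier: Callable[[List[str], str], bool],         # True if the output is sufficient
    router: Callable[[List[str], str], Optional[int]],  # None = add a step, int = faulty step index
    max_rounds: int = 10,
) -> LoopResult:
    plan = [planner(summaries, [])]
    output = coder(plan)
    for round_no in range(1, max_rounds + 1):
        if verifier(plan, output):
            return LoopResult(plan, output, round_no, True)
        if round_no == max_rounds:
            break  # round budget exhausted without approval
        wrong = router(plan, output)
        if wrong is not None:
            # Assumed backtracking rule: drop the faulty step and everything after it.
            plan = plan[:wrong]
        plan.append(planner(summaries, plan))
        output = coder(plan)
    return LoopResult(plan, output, max_rounds, False)
```

Keeping the agents as injected callables makes the loop easy to exercise with stubs, for example a verifier that approves once the plan has three steps, before wiring in real model calls.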

- Outputs can be a trained model, a processed dataset or database, a visualization, or a text answer.

- The paper reports 45.2% accuracy on DABStep versus 41.0% for the best alternative.

- It reports 44.7% on KramaBench versus 39.8% for the best alternative.

- It reports 38.5% on DA-Code versus 37.0% for the best alternative.

- DS-STAR ranked first on the DABStep public leaderboard as of September 18, 2025.

- Without the data file analyzer, accuracy on hard DABStep tasks dropped to 26.98%.

- Removing the Router reduced performance on both easy and hard tasks.

- Hard DABStep tasks averaged 5.6 rounds; easy tasks averaged 3.0 rounds.

- Over half of easy tasks completed in a single round.
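Because the benchmark figures above are accuracies, the gaps are easiest to compare as absolute percentage-point differences. The short calculation below uses only the numbers quoted in this article; the names `REPORTED` and `point_gains` are illustrative.

```python
# Reported accuracies (%) quoted in this article: (DS-STAR, best alternative).
REPORTED = {
    "DABStep": (45.2, 41.0),
    "KramaBench": (44.7, 39.8),
    "DA-Code": (38.5, 37.0),
}


def point_gains(results: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Absolute improvement in percentage points, rounded to one decimal."""
    return {name: round(ours - best, 1) for name, (ours, best) in results.items()}


if __name__ == "__main__":
    for name, gain in point_gains(REPORTED).items():
        print(f"{name}: +{gain} points")
```

The margins work out to 4.2 points on DABStep, 4.9 on KramaBench, and 1.5 on DA-Code, so the largest gain appears on the multi-file KramaBench setting and the smallest on DA-Code.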

The authors evaluated DS-STAR against AutoGen and the DA-Agent baseline from DA-Code on both single-file and multi-file tasks, and report higher accuracy in each setting.

Source citations

- Google Research blog announcement

- DS-STAR paper (arXiv)

- DABStep benchmark (arXiv)

- KramaBench benchmark (arXiv)

- DA-Code benchmark and DA-Agent baseline (arXiv)

- DABStep public leaderboard

- AutoGen baseline (arXiv)

- Background on LLM-based data science agents (arXiv)

- Background on data-driven insights

- Progress in data science agents
