Google announced a visual search upgrade for AI Mode that blends images and natural language in a single conversation. The feature starts rolling out this week in U.S. English. The company shared the update on its official Search blog.

Google AI Mode gets visual and conversational image search

Users can start with text or an image, then refine results with follow-up questions without restarting the query. Each image result links to its source page.

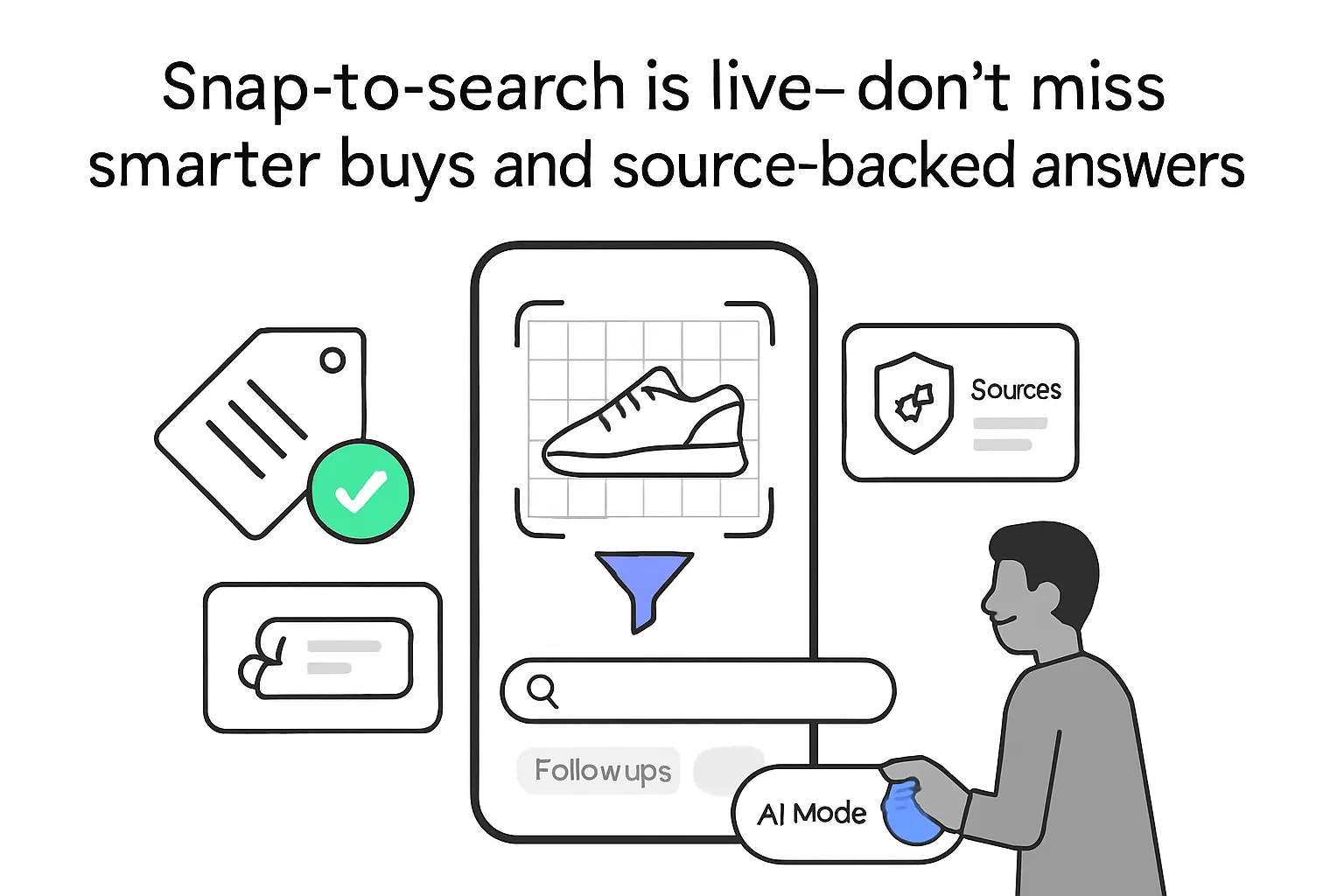

Google highlighted shopping use cases where natural descriptions replace manual filters. On mobile, users can search within a selected image and ask conversational follow-ups about objects they see.

> We’ve all been there: staring at a screen, searching for something you can’t quite put into words.

The announcement was authored by Google VPs Robby Stein and Lilian Rincon.

Key details

- Availability - rolling out this week in U.S. English.

- Input modes - start with text or an image and continue conversationally.

- Results - each image links to its source page.

- Query examples - "maximalist bedroom inspiration" refined with "dark tones and bold prints."

- Shopping - describe products conversationally instead of using traditional filters.

- Product query example - "barrel jeans that aren’t too baggy" and "show me ankle length."

- Shopping Graph - more than 50 billion product listings, with more than 2 billion refreshed hourly.

- Product details - listings include reviews, deals, available colors, and stock status.

- Technology - built on Lens and Image Search, using Gemini 2.5 for multimodal and language understanding.

- Technique - visual search fan-out runs related queries in the background.

- Mobile - supports searching within a selected image and conversational follow-ups.

- Conversation continuity - follow-up questions refine results without resubmitting the image.

- Authors - announcement authored by Google VPs Robby Stein and Lilian Rincon.

- Regions - no additional availability beyond U.S. English was announced.
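Google has not published how visual search fan-out is implemented. The general idea named in the list above, running several related queries in the background and combining their results, can be illustrated with a minimal sketch. Everything here is hypothetical: the `FAKE_INDEX` data, the `expand_query`, `search`, and `fan_out` helpers, and the merge rule are assumptions made for illustration, not Google's method or API.

```python
import asyncio

# Hypothetical in-memory index standing in for a real image search backend.
FAKE_INDEX = {
    "maximalist bedroom inspiration": ["img1", "img2"],
    "maximalist bedroom inspiration dark tones": ["img2", "img3"],
    "maximalist bedroom inspiration bold prints": ["img3", "img4"],
}


def expand_query(base: str, refinements: list[str]) -> list[str]:
    """Build the base query plus one related query per refinement (assumed rule)."""
    return [base] + [f"{base} {refinement}" for refinement in refinements]


async def search(query: str) -> list[str]:
    """Stand-in for a backend call; looks the query up in FAKE_INDEX."""
    await asyncio.sleep(0)  # yield to the event loop, as real I/O would
    return FAKE_INDEX.get(query, [])


async def fan_out(base: str, refinements: list[str]) -> list[str]:
    """Run all related queries concurrently and merge results without duplicates."""
    queries = expand_query(base, refinements)
    # gather() returns results in the same order as the queries were passed in.
    result_lists = await asyncio.gather(*(search(q) for q in queries))
    merged: list[str] = []
    seen: set[str] = set()
    for results in result_lists:
        for image_id in results:
            if image_id not in seen:
                seen.add(image_id)
                merged.append(image_id)
    return merged


results = asyncio.run(
    fan_out("maximalist bedroom inspiration", ["dark tones", "bold prints"])
)
print(results)
```

The sketch keeps first-seen order when merging. A production system would presumably rank the combined results instead, but the announcement does not describe that step.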

Background context

Google Lens introduced visual search through cameras and images in Search. AI Mode now combines those visual capabilities with conversational follow-ups in a single experience. Google says Gemini 2.5 provides the multimodal foundation for interpreting text and images together.

The Shopping Graph powers product discovery within this experience. Google reports that the index spans more than 50 billion product listings from major retailers and local shops. More than 2 billion of those listings are refreshed every hour, which keeps reviews, deals, color options, and stock status current.

Source

Google - Search AI updates, September 2025: update
