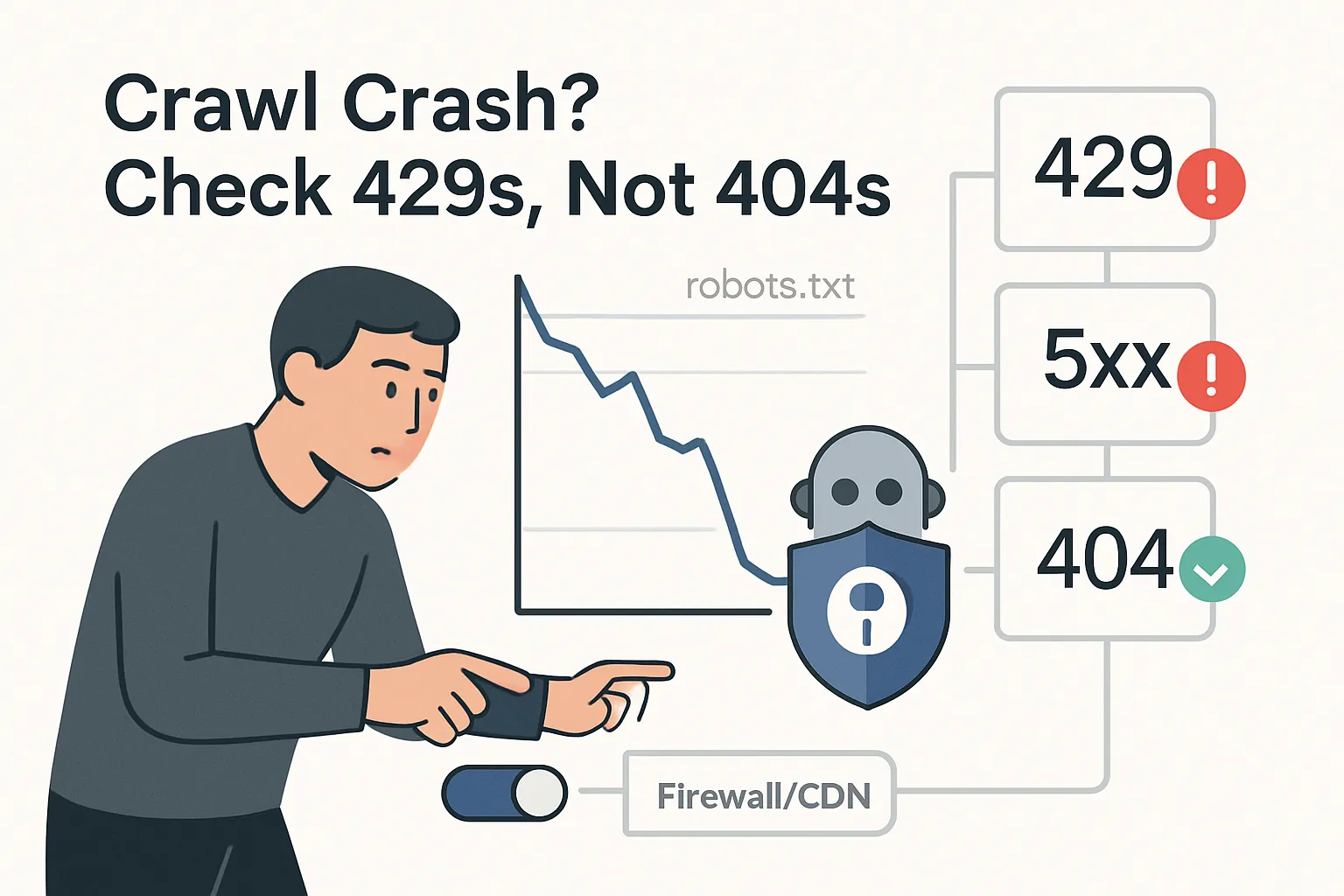

A sudden 80-90% drop in Googlebot crawl volume is more consistent with server availability errors (429/500/503/timeouts) or bot gating than with 404s from broken hreflang links. The practical question for marketers: how to diagnose root cause, estimate recovery windows, and mitigate business impact across SEO and paid.

Googlebot crawl slump: server errors, not 404s, drive sudden drops

The sharp, near-instant decline described in recent coverage and commentary is best explained by Googlebot’s adaptive rate control responding to perceived server stress or access blocks - not a penalty and not a crawl budget loss caused by 404s. Googlebot reduces concurrency quickly when it encounters 5xx/429/timeouts and increases it slowly after stability returns. For marketers, the levers are operational: verify what your servers and CDN returned, confirm no WAF or rate limiter gated Googlebot or AdsBot, and monitor crawl recovery in Search Console.

Key takeaways

- Treat sharp crawl drops as an availability problem, not a content problem. Prioritize log analysis for 429/500/503/timeouts and CDN/WAF blocks. 404s alone rarely trigger a 24-hour, 90% crawl slump.

- Expect fast cut, slow recovery. Googlebot slows quickly on errors and ramps back cautiously. Plan for multi-day to multi-week normalization depending on stability and scale, with no fixed SLA.

- Hreflang 404s are likely a red herring. Broken alternate URLs waste fetches but rarely collapse crawl rate. Look for hidden rate limiting or bot blocking that coincided with the deployment.

- Paid search risk is real if infrastructure is the cause. WAF/CDN rules that block Googlebot often also block AdsBot, leading to ad disapprovals or reduced delivery. Validate both bots’ access.

- Define error thresholds and alerts. Set internal triggers when 5xx/429 share rises materially above baseline to avoid prolonged crawl throttling and freshness lag.

Situation snapshot

A case highlighted by Search Engine Journal (SEJ) described a ~90% crawl drop within 24 hours of deploying broken hreflang URLs in Link HTTP headers. In a related Reddit thread, John Mueller noted that 404s are unlikely to cause such a rapid drop and pointed instead to 429/500/503/timeouts or CDN blocks.

Facts

- Google recommends returning 500, 503, or 429 to urgently slow crawling for a short period (hours to a day or two), not 403 or 404 - see guidance on reducing crawl rate.

- Googlebot adapts crawl rate based on server health. It slows quickly on errors and ramps up cautiously after stability returns.

- Recovery has no fixed SLA. Crawl generally normalizes once server issues are resolved.

Breakdown and mechanics

- Control loop: server instability or blocks → higher share of 5xx/429/timeouts → Googlebot lowers parallel fetches and/or increases backoff → measured daily crawl drops → once stability returns, Googlebot gradually increases concurrency and crawl recovers.

- Why 404s do not fit: 404 indicates a valid terminal state at the application layer and does not signal server distress. A sudden 90% crawl drop within 24 hours is more consistent with 429/5xx/timeouts or being gated by CDN/WAF.

- Hreflang angle: Broken Link header alternates can trigger many extra fetches. If a rate limiter or bot defense reacted to that pattern, it could throttle or block Google IPs, turning a content error into an availability issue that then triggered crawl throttling.
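To see exactly which alternates a Link header advertises (and therefore which extra URLs Googlebot may fetch), a minimal sketch like the following pulls hreflang entries out of a raw `Link` header value so each URL can then be checked for a 200. It assumes the common `<url>; rel="alternate"; hreflang="xx"` shape and uses a simplified regex rather than a full RFC 8288 parser; the function name and example.com URLs are illustrative.

```python
import re

# One link-value: <URI> followed by its ;-separated parameters.
# Simplified assumption: URIs contain no '>' and parameter values contain no ','.
LINK_VALUE = re.compile(r'<([^>]*)>((?:\s*;\s*[^,;]+)*)')
PARAM = re.compile(r';\s*([A-Za-z-]+)\s*=\s*"?([^";]*)"?')


def hreflang_alternates(link_header: str) -> dict[str, str]:
    """Return {hreflang: url} for rel="alternate" entries in a Link header value."""
    alternates = {}
    for url, params in LINK_VALUE.findall(link_header):
        attrs = {k.lower(): v.strip() for k, v in PARAM.findall(params)}
        if attrs.get("rel", "").lower() == "alternate" and "hreflang" in attrs:
            alternates[attrs["hreflang"]] = url
    return alternates


header = ('<https://example.com/de/>; rel="alternate"; hreflang="de", '
          '<https://example.com/fr/>; rel="alternate"; hreflang="fr"')
print(hreflang_alternates(header))
# {'de': 'https://example.com/de/', 'fr': 'https://example.com/fr/'}
```

Multiplying the number of alternates per page by the number of pages gives a rough sense of how many additional fetches a deployment introduced, which is the burst a rate limiter might have reacted to.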

Diagnostic workflow

- Extract GSC Crawl Stats by response, host, and file type. Look for spikes in 429/500/503/timeouts in the affected window.

- Compare with origin and CDN logs. Filter by Googlebot and AdsBot user agents, then verify the IPs with a reverse DNS lookup followed by a forward lookup that resolves back to the same IP.

- Review CDN and WAF rules, rate limits, and bot protections for actions against Google IPs. Inspect event logs during the incident window.

- Rule out robots.txt, DNS, and SSL issues. The Host status section of GSC Crawl Stats reports robots.txt fetch, DNS resolution, and server connectivity problems.

- If throttling is intentional during maintenance, prefer 503 or 429 with Retry-After where supported, and keep sitemaps and robots accessible.
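The log comparison described above can be sketched in a few lines of Python. This assumes access logs in the common Apache/Nginx "combined" format and groups Google-bot requests by day and status class, keeping 429 separate from other 4xx. The regex and function name are illustrative; user-agent matching alone does not prove a request came from Google, and timeouts often never reach the origin log (or may show up as stack-specific codes such as Nginx's 499), so CDN logs matter too.

```python
import re
from collections import Counter, defaultdict

# Combined log format, e.g.
# 66.249.66.1 - - [10/Aug/2025:13:55:36 +0000] "GET / HTTP/1.1" 503 0 "-" "...Googlebot/2.1..."
COMBINED = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<day>[^:]+):[^\]]*\] "[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def google_status_share(lines, bots=("Googlebot", "AdsBot-Google")):
    """Return {day: {status_class: share}} for lines whose user agent names a Google bot."""
    counts = defaultdict(Counter)
    for line in lines:
        m = COMBINED.match(line)
        if not m or not any(bot in m["ua"] for bot in bots):
            continue
        status = m["status"]
        # 429 is a throttling signal, so keep it apart from other client errors.
        bucket = "429" if status == "429" else status[0] + "xx"
        counts[m["day"]][bucket] += 1
    return {
        day: {k: v / sum(c.values()) for k, v in c.items()}
        for day, c in counts.items()
    }
```

A day where the 5xx plus 429 share jumps from near zero to a double-digit percentage is the pattern to line up against the drop in the GSC Crawl Stats series.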

Quantifying - planning assumptions

Use these models for planning, not as Google policy.

- Assume a baseline of 100k fetches per day across 10 parallel connections. If error share exceeds roughly 5% or timeouts persist, effective concurrency may drop to about 2 - an 80% decline, or roughly 20k fetches per day if fetches scale with concurrency.

- If recovery increases concurrency by roughly 50% per day after stability (2 → 3 → 4.5 → 6.75 → ~10, i.e. four daily steps), expect a 4-6 day return to baseline. Noisy stability can extend recovery to weeks.
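The arithmetic in these two assumptions can be reproduced with a small planning model. It is not Google's algorithm: the 10-connection baseline, the cut to 2, and the 50% daily growth are the planning assumptions stated above, and daily fetches are assumed to scale linearly with concurrency.

```python
def daily_fetches(concurrency: float, baseline_conc: float = 10,
                  baseline_fetches: int = 100_000) -> float:
    """Planning assumption: fetches per day scale linearly with parallel connections."""
    return baseline_fetches * concurrency / baseline_conc


def recovery_path(start: float = 2, target: float = 10,
                  daily_growth: float = 0.5) -> list[float]:
    """Concurrency per day from `start` until `target`, under steady multiplicative growth."""
    path = [start]
    while path[-1] < target:
        path.append(min(path[-1] * (1 + daily_growth), target))
    return path


path = recovery_path()
print(path)                                        # [2, 3.0, 4.5, 6.75, 10]
print(len(path) - 1)                               # 4 days of stable operation
print(daily_fetches(2))                            # 20000.0 fetches/day while throttled
print(len(recovery_path(daily_growth=0.25)) - 1)   # 8 days if growth is only 25%/day
```

Halving the assumed growth rate doubles the recovery window, which is why the range above widens quickly when stability is noisy.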

Impact assessment

Organic search

- Short-term reduction in recrawl frequency and slower reflection of updates. New pages discovered slower if sitemaps and internal links are fetched less often.

- Effect size: moderate to high for frequently updated sites; lower for evergreen content.

- Actions: stabilize infrastructure; keep sitemaps lean and 200-OK; monitor Crawl Stats by response and purpose; prioritize critical sections in internal linking.
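A minimal sketch of the "lean and 200-OK" sitemap check, using Python's standard-library XML parser. It counts `<loc>` entries, flags a file above the sitemaps.org limit of 50,000 URLs, and collects URLs whose status is not 200. The `fetch_status` callable is a hypothetical hook you would back with your own HTTP client (ideally without following redirects, since redirected URLs in a sitemap are also worth cleaning up); it is injected so the check can run against a fixture.

```python
import xml.etree.ElementTree as ET
from typing import Callable

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS_PER_FILE = 50_000  # per-file limit in the sitemaps.org protocol


def audit_sitemap(xml_text: str, fetch_status: Callable[[str], int]) -> dict:
    """Count <loc> entries, flag an oversized file, and collect non-200 URLs."""
    root = ET.fromstring(xml_text)
    locs = [el.text.strip() for el in root.findall("sm:url/sm:loc", SITEMAP_NS) if el.text]
    non_200 = {}
    for url in locs:
        status = fetch_status(url)  # hypothetical hook: wire to your own HTTP client
        if status != 200:
            non_200[url] = status
    return {
        "count": len(locs),
        "over_limit": len(locs) > MAX_URLS_PER_FILE,
        "non_200": non_200,
    }
```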

Paid search

- If infrastructure blocks or returns errors to requests from Google IPs, AdsBot fetches can fail, causing ad disapprovals or reduced delivery under "destination not working."

- Effect size: high during active throttling; negligible if only alternate hreflang URLs 404.

- Actions: test AdsBot access to top landing pages; allowlist Google's published crawler IP ranges; watch for spikes in destination errors; stage changes outside peak campaigns.

Analytics and measurement

- Indexing and traffic impacts can lag the crawl drop itself. Expect stale SERP snippets and slower reflection of page updates.

- Actions: track log-based Googlebot requests vs GSC series; alert when 5xx/429 exceeds 1% or 10x the seven-day baseline to trigger engineering escalation.
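The escalation rule above (5xx/429 share over 1%, or over 10x the seven-day baseline) is simple enough to express directly. A minimal sketch, assuming daily error shares are already computed from logs as fractions; the function name and the choice to skip the ratio test when the baseline is zero are illustrative.

```python
from statistics import mean


def should_escalate(today_share: float, last_7_days: list[float],
                    abs_threshold: float = 0.01, ratio: float = 10.0) -> bool:
    """Escalate when today's 5xx+429 share exceeds 1% or 10x the 7-day mean."""
    if today_share > abs_threshold:
        return True
    baseline = mean(last_7_days[-7:]) if last_7_days else 0.0
    # A zero baseline makes any ratio test trivially true, so rely on the absolute threshold.
    return baseline > 0 and today_share > ratio * baseline
```

The ratio test catches sites with a very clean baseline (for example 0.04% rising to 0.5%) well before the absolute 1% threshold would fire.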

Engineering and ops

- Require reproducible bot verification, clear WAF and CDN rules for Googlebot and AdsBot, and rate limits that prefer 503 or 429 over silent timeouts.

- Actions: implement canary routes for bot traffic; serve 503 with short Retry-After for planned throttling; keep robots.txt and sitemaps fast and available; load-test bot patterns created by hreflang or faceted links.
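For planned throttling, the point is to answer with an explicit 503 and a short Retry-After instead of letting requests time out, while keeping robots.txt reachable. A minimal sketch using Python's standard-library `http.server`; the 120-second value and the placeholder robots.txt body are illustrative assumptions, and in production this logic usually lives in the CDN or load balancer rather than an app server.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

RETRY_AFTER_SECONDS = 120  # illustrative; keep planned throttling short
# Paths that stay available during maintenance (placeholder robots.txt body).
ALWAYS_AVAILABLE = {"/robots.txt": b"User-agent: *\nAllow: /\n"}


class MaintenanceHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = ALWAYS_AVAILABLE.get(self.path)
        if body is not None:
            # Keep robots.txt reachable so crawlers do not see it as failing.
            self.send_response(200)
        else:
            # Explicit "temporarily unavailable" instead of a silent timeout.
            body = b"Down for maintenance\n"
            self.send_response(503)
            self.send_header("Retry-After", str(RETRY_AFTER_SECONDS))
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

To try it locally, run `ThreadingHTTPServer(("127.0.0.1", 8080), MaintenanceHandler).serve_forever()` and request `/robots.txt` and any other path.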

Scenarios and probabilities

- Base - likely: Root cause is 429/5xx/timeouts or CDN/WAF gating coincident with the hreflang deployment. After fixes and 3-14 days of stability, crawl recovers progressively with a temporary freshness lag.

- Upside - possible: Clear fix plus explicit 503 or 429 with Retry-After during short maintenance windows and strong allow-rules for Google IPs. Crawl normalizes within 3-7 days with minimal impact.

- Downside - edge: Intermittent gating persists due to aggressive bot defenses, shared hosting saturation, or misidentified IPs. Crawl remains depressed for weeks, delaying discovery and risking AdsBot issues.

Risks, unknowns, limitations

- No public thresholds for Googlebot backoff or recovery. The timeframes above are planning assumptions, not guarantees.

- Confounding factors such as robots.txt changes, DNS or SSL renewals, or IP migrations can mimic availability issues.

- User agents can be spoofed. Verify Google IPs with a reverse DNS lookup plus a forward lookup that confirms the same IP, to avoid misattribution.

- Falsification: if logs show near-zero 429/5xx/timeouts and no CDN or WAF actions while crawl still dropped 90% within 24 hours, investigate DNS failures, connection resets, or robots.txt fetch errors.
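Google documents a two-step check for its crawlers: reverse-resolve the requesting IP, confirm the hostname is in a Google domain, then forward-resolve that hostname and confirm it maps back to the same IP. A minimal sketch with Python's `socket` module follows. The resolver functions are injectable (an illustrative design choice) so the logic can be tested offline; the suffixes cover googlebot.com and google.com, which is what Google's documentation lists for Googlebot and special crawlers such as AdsBot at the time of writing, so recheck them against the current page. `gethostbyname_ex` is IPv4-only; IPv6 would need `socket.getaddrinfo`.

```python
import socket
from typing import Callable, Iterable

GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def is_google_crawler(
    ip: str,
    reverse: Callable[[str], str] = lambda ip: socket.gethostbyaddr(ip)[0],
    forward: Callable[[str], Iterable[str]] = lambda host: socket.gethostbyname_ex(host)[2],
) -> bool:
    """Reverse-resolve ip, check the domain, then confirm the forward lookup matches."""
    try:
        host = reverse(ip).rstrip(".").lower()
    except OSError:  # no PTR record or resolver failure (herror/gaierror are OSErrors)
        return False
    if not host.endswith(GOOGLE_SUFFIXES):
        return False
    try:
        return ip in forward(host)
    except OSError:
        return False
```

The forward step matters: anyone controlling reverse DNS for their own IP block can publish a PTR record ending in googlebot.com, but they cannot make Google's DNS resolve that name back to their IP.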

Sources

- Search Engine Journal, Aug 2025 - Googlebot crawl slump coverage.

- Reddit thread featuring John Mueller’s comment.

- Google Developers documentation on reducing crawl rate.
