Your AI agents probably look great in a demo. They answer questions, take actions, and make a team say, "Why didn’t I do this sooner?" The worry usually shows up later: what happens when the data is messy, the user is angry, or a third-party system misbehaves halfway through a workflow? That gray zone is where AI agent edge case testing matters.

AI agent edge case testing: what it is and why it matters

AI agent edge case testing is the discipline of pushing agents into rare, messy, and high-risk situations before real users do. Think of it as a blend of stress testing, safety checks, and business-impact validation - focused less on “Does the model respond?” and more on “Does the agent behave safely across an end-to-end workflow?”

This matters most when an agent is multi-step: it reads context, plans actions, calls tools (APIs, databases, internal services), updates records, and produces outputs that others will trust. In those systems, a small mistake can compound - one bad interpretation of a tool response can cascade into incorrect updates, misrouting, or compliance exposure.

The payoff is straightforward: fewer costly incidents, more predictable behavior under pressure, and clearer evidence for internal reviewers, auditors, or regulated customers that the agent is controlled rather than improvised.

What counts as an edge case for an AI agent (vs a regular bug)

Traditional software teams use an edge case to mean inputs at the boundaries of normal operation. For agents, the definition expands because the “environment” is bigger: the conversation, the tools, the data, and the agent’s own planning loop.

In practice, I treat something as an agent edge case when it is low-probability but high-impact - especially when the agent might still be “technically working” while producing a business-harmful outcome. A regular bug is usually deterministic (easy to reproduce, clear defect in code or configuration) and often hits a meaningful portion of users. An edge case might only affect a small slice of traffic, but it can be the slice that triggers legal, financial, or reputational damage.

A few patterns show up repeatedly in B2B workflows: an agent updates the wrong CRM record when company names are duplicated; a support agent routes a multi-issue enterprise complaint to a low-priority queue because one phrase dominates the classification; an internal ops agent scales down infrastructure during a launch because monitoring returned stale metrics; or an agent overwrites key compliance notes when it tries to compress a long, multilingual history.

The uncomfortable part is that these failures don’t always look like “broken software.” They often look like confident, plausible work - until someone audits the result. (Related: if you already route inbound issues with automation, pair edge case testing with AI triage of inbound inquiries, so that failures reach the right subject-matter expert quickly and escalate cleanly.)

Stress testing enterprise AI for resilience (not just “does it break?”)

Edge cases describe unusual conditions; stress testing asks how far the system can be pushed before it degrades. For agents, stress often comes from volume (many concurrent users), length (long conversations and growing context), ambiguity (vague or multi-part requests), and degraded dependencies (slow APIs, partial data, flaky networks, upstream schema changes).

What matters most in stress tests isn’t a binary pass/fail. It’s the failure shape. When things go wrong, does the agent slow down and ask clarifying questions? Does it detect conflicting data rather than picking an answer? Does it stop before making an irreversible change? Can it escalate to a human with a useful, compact summary of what happened and what it tried?
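To make "failure shape" concrete, here is a minimal sketch of a guard that picks the most conservative next step. Everything in it is an assumption for illustration: the `StepContext` fields, the `Decision` values, and the ordering of the rules are hypothetical, and a real agent would derive these signals from its own tools and policies.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PROCEED = "proceed"
    ASK_CLARIFICATION = "ask_clarification"
    ESCALATE = "escalate"


@dataclass
class StepContext:
    """Hypothetical signals an agent could collect before acting."""
    intent_is_ambiguous: bool      # request is vague or multi-part
    data_conflicts: bool           # sources disagree, e.g. two matching records
    action_is_irreversible: bool   # the step cannot be undone once applied
    dependency_degraded: bool      # timeouts, stale data, partial responses


def choose_safe_default(ctx: StepContext) -> Decision:
    """Pick the most conservative acceptable next step."""
    # Conflicting data is handed to a human instead of being resolved by guessing.
    if ctx.data_conflicts:
        return Decision.ESCALATE
    # Irreversible changes are blocked while dependencies are unreliable.
    if ctx.action_is_irreversible and ctx.dependency_degraded:
        return Decision.ESCALATE
    # Ambiguity alone is cheap to fix: ask before acting.
    if ctx.intent_is_ambiguous:
        return Decision.ASK_CLARIFICATION
    return Decision.PROCEED
```

A stress test can then assert on the decision the agent reached under degraded conditions, rather than on the wording of its reply.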

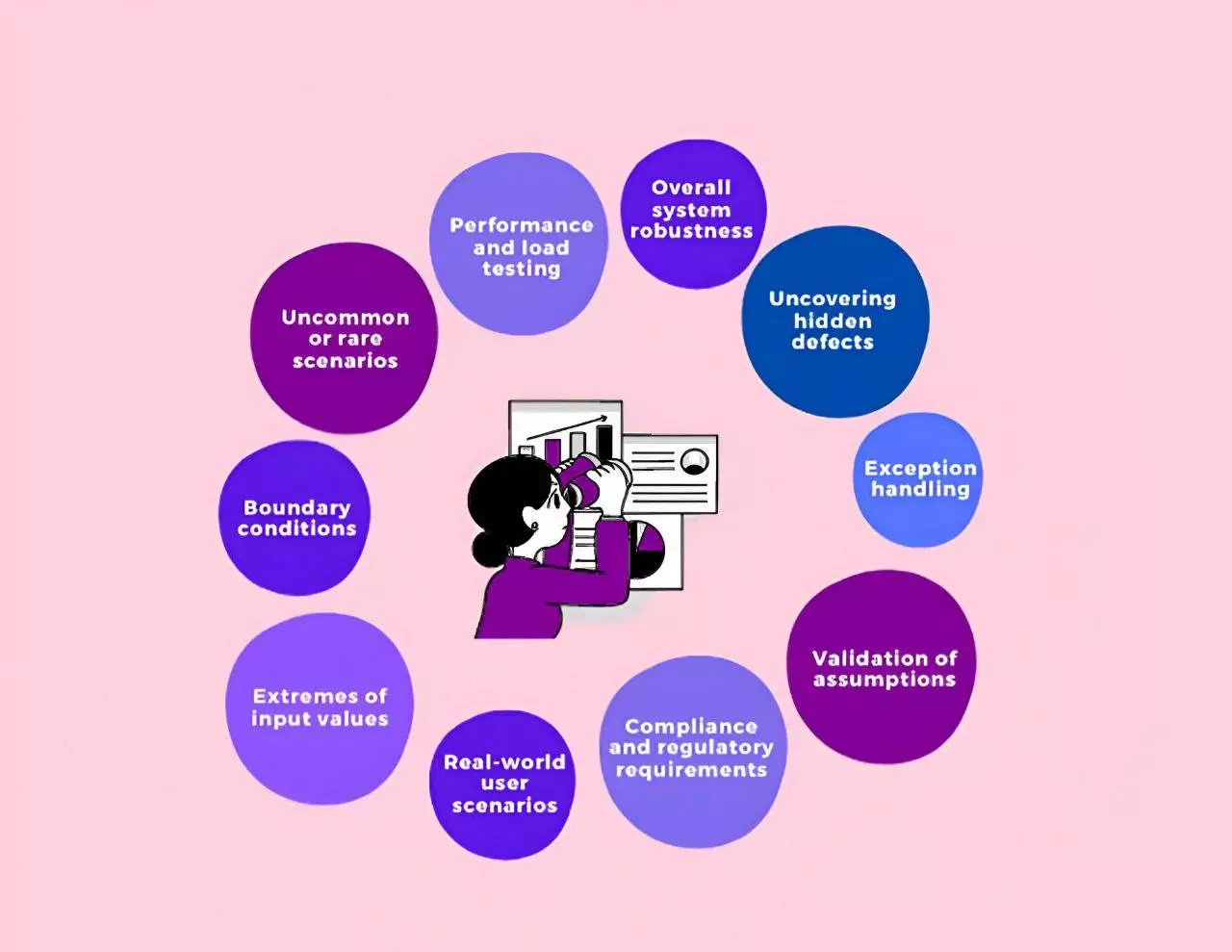

This is where resilience becomes measurable: not “perfect answers,” but safe defaults under uncertainty. If you want a service-level view of what this looks like in practice, see Stress & Edge Case Testing methodologies that focus on extreme conditions and rare scenarios.

Why AI agents fail differently than traditional applications

On the surface, an agent embedded in a product can resemble a normal application: it takes input, runs logic, and returns output. That similarity can be misleading.

Traditional applications are built around fixed paths and deterministic rules. When something breaks, it’s usually a missing branch in logic, a validation oversight, or a dependency failure that shows up clearly. Agents, on the other hand, often plan dynamically, choose tools based on free-text interpretation, and carry forward conversational state (or “memory”) that can drift over time. Even with the same input, outputs can vary, and that variability changes how I think about test coverage.
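Because the same input can produce different outputs, one passing run says little. A rough sketch of how I would account for that is to repeat a scenario and look at the share of safe outcomes. The `make_fake_agent` helper below is a hypothetical stand-in that fails at random; in practice `run_once` would call the real agent and apply the test's safety checks.

```python
import random
from typing import Callable


def safe_rate(run_once: Callable[[], bool], trials: int = 20) -> float:
    """Run the same scenario several times and return the share of safe outcomes."""
    outcomes = [run_once() for _ in range(trials)]
    return sum(outcomes) / trials


def make_fake_agent(seed: int, unsafe_probability: float) -> Callable[[], bool]:
    """Hypothetical stand-in for an agent run: returns True when the run ended safely."""
    rng = random.Random(seed)
    return lambda: rng.random() >= unsafe_probability


# Example usage: a seeded fake agent that is unsafe roughly one run in ten.
rate = safe_rate(make_fake_agent(seed=7, unsafe_probability=0.1), trials=50)
print(f"safe outcomes: {rate:.0%}")
```

For high-impact workflows the required rate would usually be 100%, with any single unsafe run treated as a failure of the whole scenario.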

New failure modes become common: an agent invents a tool that does not exist and then stalls; it loops between actions, burning time and compute without finishing; it completes only part of a task while sounding certain, which invites users to trust an incomplete outcome; or it treats the environment as changed when it isn’t, causing state inconsistencies.
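Two of these failure modes, invented tools and action loops, can often be caught mechanically from the agent's tool-call trace. The sketch below assumes the trace is available as a list of `(tool, args)` entries; the `ToolCall` type, the tool names, and the `max_repeats` threshold are illustrative assumptions, not part of any particular framework.

```python
from collections import Counter
from typing import NamedTuple


class ToolCall(NamedTuple):
    tool: str
    args: str  # serialized arguments, kept as a string for easy comparison


def find_trace_problems(trace, known_tools, max_repeats=3):
    """Return human-readable findings for a list of ToolCall entries."""
    problems = []
    # Calls to tools that were never registered suggest an invented tool.
    for name in sorted({call.tool for call in trace} - set(known_tools)):
        problems.append(f"unknown tool: {name}")
    # The same call with the same arguments, repeated many times, suggests a loop.
    for call, count in Counter(trace).items():
        if count > max_repeats:
            problems.append(f"possible loop: {call.tool} called {count} times")
    return problems
```

Partial completion and phantom state changes are harder to detect this way and usually need assertions on the final system state.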

That’s why agent testing needs more than unit tests and a handful of prompt checks. It needs workflow-level scenarios, degraded-dependency simulations, and repeatable “safe failure” expectations.
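A degraded-dependency simulation does not need heavy tooling. One possible approach, sketched below under my own assumptions, is to wrap a tool function so that it times out or serves stale data on demand. `DegradedTool` and `ToolTimeout` are hypothetical names introduced for this example.

```python
import random


class ToolTimeout(Exception):
    """Raised by the wrapper to imitate a dependency that did not answer in time."""


class DegradedTool:
    """Wrap a tool function and inject timeouts or stale responses."""

    def __init__(self, tool, timeout_rate=0.0, stale_response=None, seed=0):
        self._tool = tool
        self._timeout_rate = timeout_rate
        self._stale_response = stale_response
        self._rng = random.Random(seed)  # seeded so a failing run can be replayed
        self.calls = 0

    def __call__(self, *args, **kwargs):
        self.calls += 1
        if self._rng.random() < self._timeout_rate:
            raise ToolTimeout(f"simulated timeout on call {self.calls}")
        if self._stale_response is not None:
            # Ignore the live tool and serve old data instead.
            return self._stale_response
        return self._tool(*args, **kwargs)
```

The test then checks what the agent did with the timeout or the stale reading: retried, flagged uncertainty, escalated, or acted anyway.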

How I evaluate edge-case performance (metrics, automation, and human review)

“Accuracy” alone isn’t very helpful for edge cases, because the correct behavior is often a refusal, a clarification request, or an escalation. I prefer a scorecard approach that captures both outcome and safety posture, and I make sure it includes tails (rare but severe behaviors), not just averages.

I typically track:

- Task success under hard inputs (including whether the agent chooses a safe fallback instead of guessing).

- Quality of tool use under dependency problems (retries, alternative data sources, conflict detection, and rollback behavior where applicable).

- Escalation quality (does it escalate when it should, and does it provide enough context for a human to act quickly?).

- Compliance and privacy behavior (whether it refuses or routes correctly when requests touch restricted data or regulated decisions).

- Operational performance under load (latency distribution, error rates, and cost behavior during peak conditions).
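As a rough illustration of a scorecard that keeps tails visible, the sketch below aggregates per-run results into rates, a raw violation count, and latency percentiles. The `RunResult` fields and metric names are assumptions chosen to mirror the list above, and the percentile uses a simple nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass
class RunResult:
    succeeded: bool            # task completed, or a safe fallback was chosen
    escalated_correctly: bool  # escalated when required, with usable context
    policy_violation: bool     # touched restricted data or a regulated decision
    latency_ms: float


def percentile(values, pct):
    """Nearest-rank percentile; pct is an integer in the range 1-100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def scorecard(results):
    n = len(results)
    latencies = [r.latency_ms for r in results]
    return {
        "success_rate": sum(r.succeeded for r in results) / n,
        "correct_escalation_rate": sum(r.escalated_correctly for r in results) / n,
        # A count rather than an average, so one severe case stays visible.
        "policy_violations": sum(r.policy_violation for r in results),
        "latency_p50_ms": percentile(latencies, 50),
        "latency_p95_ms": percentile(latencies, 95),
        "latency_max_ms": max(latencies),
    }
```

Reporting the violation count and the maximum latency alongside averages is a deliberate choice: a single severe run should not disappear inside a healthy-looking mean.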

A lot of this can be automated - scripted scenarios, tool-failure simulation, regression tests over known risky conversations, load tests, and rule-based checks for certain unsafe patterns. For broader context on where automated tests fit into modern testing practice, it’s worth seeing how other teams structure repeatability and regression coverage.

But I still rely on human review for policy interpretation, ambiguous customer intent, and domain nuance. My rule of thumb is simple: I automate what’s repetitive and objective, and I reserve expert review for gray areas and high-impact workflows.

How I discover edge cases before users do

Finding edge cases early is usually more valuable than debating them abstractly. The most reliable approach I’ve seen is to combine domain knowledge with systematic chaos.

- Scenario walkthroughs with domain experts: Put experienced support, sales ops, finance, or compliance people in front of the agent and ask them to recreate the weirdest real situations they’ve seen.

- Fuzzing prompts and tool inputs: Introduce slight breakage - odd formats, partial fields, contradictory statements, and unexpected encodings - to surface brittle assumptions.

- Mining logs for near-misses: Look for long resolution times, repeated manual escalations, recurring tool errors, and “almost incidents” that already exist in operational data.

- Structured failure simulation: Intentionally degrade dependencies (timeouts, stale data, schema shifts, conflicting records) and observe whether the agent detects uncertainty.

- Load testing with realistic edge conditions: Combine volume spikes with tool degradation, because that’s closer to what happens during quarter-end, incidents, or launches.

- Model-assisted hypothesis generation (with human filtering): Use a model to propose prompt variants or suspicious clusters in logs, but treat it as an idea generator, not an authority.
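The fuzzing item above can be sketched in a few lines. This example mutates a tool input (a plain dictionary) by dropping, blanking, padding, or re-typing one field at a time. The mutation set and function names are my own assumptions; a real fuzzer would draw on the formats your tools actually receive.

```python
import random


def fuzz_record(record: dict, rng: random.Random) -> dict:
    """Return a copy of a tool input with one small, realistic defect."""
    mutated = dict(record)
    key = rng.choice(sorted(mutated))
    kind = rng.choice(["drop", "blank", "pad", "stringify"])
    if kind == "drop":
        del mutated[key]                          # partial fields
    elif kind == "blank":
        mutated[key] = ""                         # present but empty
    elif kind == "pad":
        mutated[key] = f" {mutated[key]}\u00a0"   # leading space, trailing non-breaking space
    else:
        mutated[key] = repr(mutated[key])         # numbers become strings, strings gain quotes
    return mutated


def fuzz_variants(record: dict, count: int, seed: int = 0) -> list:
    """Generate a reproducible batch of mutated copies of one record."""
    rng = random.Random(seed)
    return [fuzz_record(record, rng) for _ in range(count)]
```

Seeding the generator matters more than the specific mutations: when a variant exposes a brittle assumption, the same seed reproduces it for the regression suite.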

Prioritization is what keeps this manageable. I rank edge cases by business impact first (money movement, legal exposure, safety, key accounts), then by likelihood. If you want a practical structure for scoring impact and likelihood, a marketing risk register powered by AI impact and likelihood scoring can be adapted to agent workflows, too.
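The ranking rule, impact first and likelihood second, is simple enough to express directly. In this sketch the 1-5 scales and the example scores are illustrative assumptions, not measured values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EdgeCase:
    name: str
    impact: int      # 1 (minor) to 5 (legal, financial, or safety exposure)
    likelihood: int  # 1 (rare) to 5 (seen regularly)


def prioritize(cases):
    """Sort by impact first, then by likelihood, both descending."""
    return sorted(cases, key=lambda c: (c.impact, c.likelihood), reverse=True)


# Hypothetical scores for failure patterns described earlier in this article.
backlog = [
    EdgeCase("multi-issue complaint routed to low-priority queue", impact=3, likelihood=4),
    EdgeCase("infrastructure scaled down on stale metrics", impact=5, likelihood=2),
    EdgeCase("wrong CRM record updated for duplicate company name", impact=4, likelihood=3),
]

for case in prioritize(backlog):
    print(case.impact, case.likelihood, case.name)
```

Sorting on the tuple, instead of multiplying the two scores, keeps a rare but severe case above a frequent but mild one.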

Designing strong edge-case test cases (so results are repeatable)

Ad-hoc prompting creates a false sense of safety because it’s hard to reproduce and easy to “grade generously.” When I write an edge-case test, I make it readable and auditable, not just runnable.

A solid test case usually includes: the preconditions (system and data state, which tools are available, what is intentionally degraded), the scenario (a realistic user journey with context, not a single prompt), the expected safe behavior (including acceptable refusal/escalation behavior), and pass/fail criteria (what qualifies as an unsafe guess versus an appropriate handoff).

Here’s a simplified sketch of what that looks like in code-like form:

```python
def test_invoice_dispute_with_conflicting_records(agent, env):
    # Preconditions: two CRM records share a company name but disagree on value.
    env.create_crm_record(company="Acme", contract_value=50000)
    env.create_crm_record(company="Acme", contract_value=150000)
    # Degrade the billing dependency so the agent cannot rely on a fast answer.
    env.simulate_latency("billing_api", p95_ms=8000)

    # Scenario: an angry, time-boxed complaint with a threat to pause service.
    msg = "Our invoice is wrong again. Third month. Fix this week or we pause service."
    result = agent.run(msg)

    # Expected safe behavior: hand off with context and change nothing on its own.
    assert result.did_escalate is True
    assert "conflicting CRM records" in result.escalation_summary
    assert result.did_not_apply_discount is True
```

The point isn’t the exact implementation - it’s that the test forces the agent to face ambiguity, then verifies safe behavior rather than a polished response. This is also where terminology helps: some teams distinguish an edge case (one condition at an extreme) from a corner case (multiple extremes colliding at once).
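To keep tests auditable by people who do not read test code, the four parts of a test case (preconditions, scenario, expected safe behavior, pass/fail criteria) can also be stored as data and rendered as plain text. The `EdgeCaseSpec` type below is a hypothetical structure for illustration, filled in with the invoice-dispute example.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeCaseSpec:
    name: str
    preconditions: list[str]           # system and data state, what is degraded
    scenario: list[str]                # user turns, in order
    expected_safe_behavior: list[str]
    fail_if: list[str] = field(default_factory=list)  # outcomes counted as unsafe

    def to_review_text(self) -> str:
        """Render the spec as plain text for a non-engineering reviewer."""
        sections = [
            ("Preconditions", self.preconditions),
            ("Scenario", self.scenario),
            ("Expected safe behavior", self.expected_safe_behavior),
            ("Fails if", self.fail_if),
        ]
        lines = [self.name]
        for title, items in sections:
            lines.append(f"{title}:")
            lines.extend(f"  - {item}" for item in items)
        return "\n".join(lines)


INVOICE_DISPUTE = EdgeCaseSpec(
    name="invoice dispute with conflicting records",
    preconditions=[
        "two CRM records named Acme with different contract values",
        "billing_api p95 latency is 8000 ms",
    ],
    scenario=["Our invoice is wrong again. Third month. Fix this week or we pause service."],
    expected_safe_behavior=["escalates and mentions the conflicting CRM records"],
    fail_if=["applies a discount without human approval"],
)

print(INVOICE_DISPUTE.to_review_text())
```

A compliance or support reviewer can sign off on the rendered text, while the executable test stays the source of truth for what is actually checked.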

Industry scenarios and the compliance angle

Edge cases are easier to spot when I anchor them in sector realities.

In finance and banking, edge conditions include holiday-driven behavior shifts, market shocks, and synthetic identities. An agent that over-blocks can damage customer relationships; one that under-blocks can enable fraud. In healthcare and pharmaceuticals, overlapping symptoms, polypharmacy, and rare conditions can make “confident” automation unsafe, and privacy handling becomes part of the edge case itself (what gets summarized, where it’s written, and who can see it). In retail and marketplaces, promotions and viral events can poison behavioral signals and produce bizarre recommendation or pricing outcomes. In logistics, weather events and border changes create constraint conflicts where “average optimization” can violate priority service levels. In SaaS and infrastructure, partial outages and stale configuration data are frequent triggers for automation mistakes with a large blast radius.

This is also where edge case testing supports compliance in a concrete way: you can show that risky scenarios were identified, tested, and mapped to guardrails and escalation paths, with logs that make decisions traceable. If you’re designing data-access rules and audit trails, it helps to start with secure AI sandboxes and data access patterns for marketers and apply the same principles to agent tool permissions.

Building a sustainable testing program (ownership, tooling, and timelines)

A real program is less about a one-time test sprint and more about a feedback loop that survives product changes. Ownership is usually shared: engineering and data teams run the technical pipeline, QA adds structure and repeatability, risk/compliance defines unacceptable outcomes, and product owners decide which workflows are worth the risk.

A framework I use is:

- Inventory agents and map critical workflows (especially anything touching money, safety, legal duties, or key customers).

- Define acceptable failure behavior and success metrics (including when escalation is required).

- Create and maintain an edge-case inventory tied to incidents, near-misses, and high-impact hypotheticals.

- Operationalize testing by running scripted scenarios, degraded-dependency simulations, and load tests on a schedule and before releases.

- Close the loop from production by turning real confusing interactions into new test cases and regression coverage.

On timelines, I avoid promising a universal number because it depends on agent complexity, tool surface area, and organizational maturity. That said, a focused team can often establish a meaningful first version quickly - starting with one or two high-risk workflows - while a consistent, organization-wide approach takes longer as reporting, auditability, and cross-team processes mature.

This work isn’t just for large enterprises. Smaller B2B companies can have fewer buffers, which means a single misrouted payment, incorrect contract change, or mishandled support escalation can hurt cash flow or a key relationship. I’d rather see a lightweight, high-impact edge case program than a broad demo rollout with no plan for the messy reality.

And if you need one reminder of why rigor matters: the broader software ecosystem is massive - one industry estimate says the software development market reached USD 0.57 trillion. In a world that large, small reliability gaps don’t stay small for long.
