When I talk to B2B founders, I rarely meet anyone who is not already spending real money to get visitors to their site. Google Ads, LinkedIn, cold outbound, maybe a growing stream from SEO and content. Yet the pipeline still feels fragile. One month looks great, the next your demo calendar is half empty and your cost per opportunity creeps up again.

That is where A/B testing starts to pull its weight for a B2B service business. It does not bring more traffic. It quietly makes the traffic you already have work harder for you.

A/B testing for B2B services

Most B2B founders I speak with do not want more clicks. They want more qualified conversations, better fit deals, and revenue growth without blowing up ad spend. A/B testing is one of the few levers that directly supports that goal. Instead of guessing which headline, form, or offer will convert, you run a controlled experiment and let real users tell you what works.

Because you already pay to get people to the site, any lift in conversion goes straight to your unit economics. If your core service page turns 2 percent of visitors into demo requests and you move that to 3 percent, you just increased leads by 50 percent with no extra traffic. That is not just a nicer dashboard. That is more pipeline for sales to work.
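
The arithmetic fits in a few lines of Python. The 5,000 monthly visitors below are a hypothetical figure chosen only to keep the numbers round.

```python
def relative_lift(baseline_rate: float, new_rate: float) -> float:
    """Relative change in conversion rate: 0.02 -> 0.03 is a 50 percent lift."""
    return (new_rate - baseline_rate) / baseline_rate


def monthly_leads(visitors: int, conversion_rate: float) -> float:
    """Leads a page produces for a given traffic level and conversion rate."""
    return visitors * conversion_rate


visitors = 5_000  # hypothetical monthly visitors to the core service page
before = monthly_leads(visitors, 0.02)
after = monthly_leads(visitors, 0.03)
print(f"{before:.0f} -> {after:.0f} demo requests per month "
      f"({relative_lift(0.02, 0.03):.0%} more, same traffic)")
```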

To see the contrast, think about paid channels. Click costs keep rising, targeting is getting noisier, and many accounts reach a strange plateau where no matter how much you tweak bids, the needle barely moves. A/B testing flips that. You keep your traffic steady and improve what happens after the click.

A quick example from a service firm: a B2B consultancy changed the hero headline on its main service page from “Full service growth consulting” to “We add 7+ sales qualified opportunities a month for B2B teams.” Nothing else changed, and traffic volume stayed the same. Over six weeks, demo bookings moved from 1.1 percent of visitors to 2.3 percent. Same ad spend, more than double the conversations.

You can think about it with a simple funnel:

Impression → Click → Lead → Opportunity → Deal

A/B testing can move numbers at several points here. It can increase clicks on a call to action, raise form submission rate, or improve how many leads turn into real opportunities because the copy sets better expectations. If you have not mapped how each step behaves yet, this guide on how to audit your sales pipeline is a useful starting point.

How A/B testing fits into a scalable growth system

A/B testing is not a magic switch. It is one part of a larger growth engine. In my experience, that engine rests on three pillars: SEO that brings the right people to you, content that builds trust and intent, and conversion work that turns that intent into pipeline. A/B testing sits inside that third pillar, alongside broader website optimization and Conversion Rate Optimization (CRO).

If you only test button colors or email subject lines, you hit a ceiling fast. When tests are tied to revenue metrics, things change. Instead of chasing “more page views” or “higher time on site”, you track demo requests, qualified opportunities, and revenue per visitor. Your tests become small bets connected to actual money.

This matters even more for high ticket B2B services. When a new client is worth 20k, 50k, or more per year, you do not need massive traffic numbers for A/B testing to be worthwhile. A lift of even 0.3 percentage points in conversion rate on a high intent page can pay for a year of serious experimentation.
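
To see why, here is a rough back of the envelope sketch. Every input is a hypothetical assumption: 2,000 monthly visitors to the page, 5 percent of new leads becoming clients, and a client worth 20k per year. It also reads the 0.3 as percentage points of conversion rate (for example, 2.0 to 2.3 percent).

```python
def extra_annual_revenue(monthly_visitors: int, lift_points: float,
                         lead_to_client_rate: float, client_value: float) -> float:
    """Yearly revenue added by a conversion lift measured in percentage points."""
    extra_leads_per_year = monthly_visitors * 12 * (lift_points / 100)
    extra_clients = extra_leads_per_year * lead_to_client_rate
    return extra_clients * client_value


# Hypothetical inputs: 2,000 visits/month, +0.3 points, 5% lead-to-client, 20k clients
value = extra_annual_revenue(2_000, 0.3, 0.05, 20_000)
print(f"Extra revenue per year: {value:,.0f}")
```

Under these assumptions that is roughly 72 extra leads and three to four extra clients a year, which is why high ticket services do not need huge traffic to justify testing.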

What is A/B testing?

A/B testing is a simple idea. You show two versions of a page or element to different visitors at the same time and see which version gets more people to take a specific action, such as booking a call, filling out a form, or starting a trial.

- Version A is your control (what you are running now).

- Version B is the variation (your new idea).

Your testing tool splits traffic between them and measures which one wins.

At a high level, I care about three things: conversion rate (out of 100 people who see each version, how many take the action you care about), sample size (enough visitors so that results are not random noise), and statistical significance (a signal that the result is very likely real, not luck). If you want to sanity check results, a simple calculator for statistical significance can help.
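
If you want to see what such a calculator does under the hood, one common method is the two-proportion z-test. The sketch below is a minimal Python version using only the standard library. The visitor and conversion counts are hypothetical, and your testing tool may use a different method (some use Bayesian or sequential statistics).

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z, p_value). The standard error uses the pooled rate, the usual
    choice under the null hypothesis that A and B convert equally well.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # No conversions at all (or all converted): nothing to compare.
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical test: 2,000 visitors per version, 2.0% vs 3.0% demo request rate
z, p = two_proportion_z_test(conv_a=40, n_a=2_000, conv_b=60, n_b=2_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A p-value below 0.05 matches the common 95 percent confidence bar. That reading only holds if you fix the sample size before the test starts, rather than stopping the first time the number dips below 0.05.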

You do not need to do the math yourself. Good tools handle the statistics; your job is to define the right question and keep the test running long enough.

A simple diagram:

Control (A) vs Variation (B) → Compare conversions → Keep the winner

A/B testing is a good fit when you have a clear goal such as “demo requests from this page”, that page gets regular traffic every week, and you can make a change that a visitor would clearly notice. It is less suitable when traffic is tiny, when the outcome takes many months to show up, or when the change is so subtle that even strong data will not tell you much.

Key terms in A/B testing explained simply

Conversion. The action you want someone to take, such as “Book a demo” or “Download the whitepaper”.

Control. Your current version, the baseline you compare against.

Variation. The new version you want to test against the control.

Confidence level. How sure you can be that the winning version is truly better and not a fluke. Many teams treat 95 percent as the bar for calling a winner.

Test duration. How long you run the experiment before you trust the result.

In my experience, if a CEO can speak to these five ideas, they can have sharp conversations with any marketing or growth partner without getting lost in jargon.

What you can A/B test on a B2B website

Almost any part of your funnel can be tested, yet not every test has the same upside. For B2B services, you care most about the paths that move visitors toward conversations and qualified pipeline.

Homepage and hero section

Your homepage sets the first impression. On this page, I often focus on the main headline and subheadline, the first call to action (for example, “Book a call” versus “See how it works”), above the fold proof such as logos, short results, or a client quote, and navigation labels that guide people toward your core services. For a deeper walkthrough of structure and messaging, see this guide on homepage copy that converts visitors.

Service and solution pages

These pages usually convert better than the homepage because intent is higher. Useful test ideas include shifting from generic taglines to concrete outcome focused headlines, presenting your service process in different ways, bringing industry specific proof or case stories closer to the top, and adding comparison blocks that explain “Why choose this vs doing nothing”.

Lead generation landing pages

Campaign or PPC landing pages are perfect for A/B testing. I look at whether one clear offer outperforms a page with multiple secondary links, whether simple benefit led copy beats long form explanations, how different CTAs such as “Talk to sales” versus “Get a strategy session” perform, and whether short or more detailed descriptions of who the offer is for convert better. For high ticket B2B, pairing focused pages with lead magnets that work for high-ticket services often gives tests more upside.

Blog to lead journeys

Blogs often attract traffic but do little for pipeline. Testing can change that by comparing in article calls to action with exit intent prompts, offering different types of content upgrades (for example, a more detailed guide or template), or moving signup forms between the top, middle, and end of articles to see where they perform best.

Pricing and ROI pages

Pricing feels sensitive, which makes it good ground for experiments. I often test adding example packages or indicative ranges instead of a bare “Contact us for pricing”, including an ROI explainer or simple calculator, clarifying “what is included” in each option, and adding friction reducers such as guarantees or clear cancellation terms. If pricing is a constant headache in your funnel, this overview on pricing pages that reduce tire-kickers is worth a look.

Demo and contact forms

Form changes can move your pipeline more than almost any other test. Typical experiments include changing the number of fields and which ones are required, comparing a single step form with a multi step flow, rewriting a plain “Submit” button into something more specific like “Schedule my call”, and placing short trust copy near the button, such as “I reply within one business day”. If form spam or junk leads are a problem, this guide on lead quality control will help you separate good experiments from bad leads.

To put this into a simple view, here are some high impact first tests for B2B:

| Area | High impact first test idea |
|---|---|
| Homepage hero | Outcome focused headline vs generic positioning line |
| Core service page | Proof (logos, metrics) above the fold vs lower on the page |
| Demo / contact form | Short form (name, email, company) vs longer qualification |
| Pricing / ROI page | “Contact us” CTA vs “Talk to a pricing specialist” |
| Blog posts | Inline CTA banner vs simple text link at the end |

Start with these kinds of changes before spending time on minor design tweaks.

Prioritizing A/B testing ideas for maximum ROI

Your team will always have more test ideas than capacity. I keep prioritization simple by scoring each idea on three factors, each on a 1 to 5 scale: potential impact (if this works, how much money could it move), ease (how easy it is to design, build, and launch), and traffic (whether the page gets enough visitors to reach a clear result in a realistic time). Multiplying those three numbers gives a rough score, and higher scores move to the front of the queue.
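
Here is a minimal sketch of that scoring in Python. The three test ideas and their scores are hypothetical examples, not recommendations.

```python
from dataclasses import dataclass


@dataclass
class ExperimentIdea:
    name: str
    impact: int   # 1-5: how much money could this move if it works
    ease: int     # 1-5: how easy it is to design, build, and launch
    traffic: int  # 1-5: can the page reach a clear result in realistic time

    def score(self) -> int:
        for value in (self.impact, self.ease, self.traffic):
            if not 1 <= value <= 5:
                raise ValueError("each factor must be scored from 1 to 5")
        return self.impact * self.ease * self.traffic


ideas = [
    ExperimentIdea("Demo form length", impact=5, ease=4, traffic=4),
    ExperimentIdea("Blog sidebar CTA color", impact=1, ease=5, traffic=2),
    ExperimentIdea("Service page headline", impact=4, ease=5, traffic=3),
]

# Highest score first: this becomes the front of the testing queue.
ranked = sorted(ideas, key=lambda idea: idea.score(), reverse=True)
for idea in ranked:
    print(f"{idea.score():>3}  {idea.name}")
```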

That scoring usually pushes high intent pages to the top. A small change on the “Book a demo” page is almost always worth more than a test on a low traffic blog post. For a skeptical CEO who needs quick, visible wins, I have seen this kind of focus build trust in the whole process.

Good ideas rarely come from brainstorming alone. Structured voice-of-customer interviews, sales feedback, and analytics patterns usually surface better hypotheses than internal opinions.

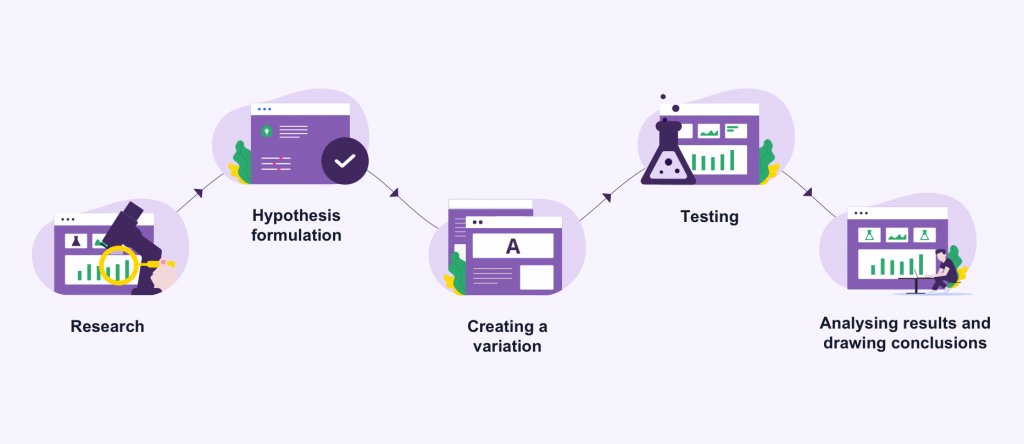

How to run an A/B test

Running an A/B test does not need to be complex. I treat it as a simple flow.

- Define the business goal. Decide what this test is meant to improve. For example: “Increase qualified demo requests from our main service page.”

- Pick one key metric. Choose the main number you will judge the test on, such as demo form submissions per visitor, not just clicks on the page.

- Form a clear hypothesis. Write one sentence: “If I change X for audience Y, I expect Z result.” For example: “If I change the CTA from ‘Request a proposal’ to ‘Book a strategy call’, more visitors will feel comfortable taking the next step, increasing demo requests.”

- Choose the page and variation. Decide what you will change. It might be a headline, layout, or form. Keep each test focused on one key change so that you know what caused the difference.

- Implement in a testing tool. Your team sets up the variation inside an A/B testing platform and ensures traffic is split fairly between A and B. Analytics and CRM tracking should be in place so that later you can see not just leads, but pipeline value. If you do not have this wiring yet, this walkthrough on how to track leads without a developer is a good reference.

- Run the test long enough. Resist the urge to check results every day and call a winner the moment you see a spike. Let it run across full weeks and normal traffic cycles, until the tool signals that the result is stable.

- Analyze and choose a winner. Look at your main KPI first, then check second layer numbers such as lead quality and downstream conversion to opportunities.

- Roll out and log learnings. Ship the winning version as the new default, record the result in a simple log, and note what you learned so that future tests get smarter.
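
Your testing platform handles the traffic split, but it helps to know what "fair" means. One common approach is deterministic hashing: the same visitor always lands in the same version, and the overall split stays close to 50/50. This Python sketch is a hypothetical illustration of that idea, not how any particular tool implements it, and it assumes you have a stable visitor ID, such as one stored in a first party cookie.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, share_b: float = 0.5) -> str:
    """Deterministically assign a visitor to version 'A' or 'B'.

    Hashing the experiment name together with the visitor ID keeps each
    visitor's version stable across visits, while letting different
    experiments bucket the same visitor independently.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # The first 8 hex digits give a 32-bit integer; scale it into [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "B" if bucket < share_b else "A"


# A returning visitor always gets the same answer.
print(assign_variant("visitor-123", "service-hero-headline"))

# Over many visitors the split lands close to the requested share.
ids = [f"visitor-{n}" for n in range(10_000)]
share = sum(assign_variant(v, "service-hero-headline") == "B" for v in ids) / len(ids)
print(f"Share in B: {share:.1%}")
```

Including the experiment name (the hypothetical service-hero-headline here) in the hash matters: reusing one hash for every experiment would put the same people in version B every time.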

I usually talk about three test types: simple A/B testing (one page, two versions, same URL), split URL testing (traffic split between two different URLs, often used for bigger layout changes), and multivariate testing (several elements changed at once to test combinations). For most B2B service websites, simple A/B tests are more than enough. They are easier to run, easier to read, and better suited to moderate traffic.

A short walkthrough: imagine you run a consulting firm and your main CTA says “Request a proposal”, which feels heavy for early visitors. You create a variation where the button says “Book a strategy call” and you add a line under it: “30 minutes, no sales pitch”. Your hypothesis is that this will lower friction and increase form submissions. You set up the test, split traffic 50/50, and run it for four weeks. If variation B improves form submissions by, say, 28 percent and the percentage of leads that turn into sales opportunities stays flat or slightly better, that is a clear win worth rolling out.
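
The decision rule in that walkthrough, a lift on the main KPI plus a lead quality guardrail, can be written down before the test starts so nobody argues about it afterwards. This is a hypothetical Python sketch with made up counts. It assumes the main KPI has already cleared your tool's significance check, which this function does not do.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    visitors: int
    form_submissions: int
    opportunities: int  # leads that became sales opportunities in the CRM


def evaluate(control: VariantResult, variation: VariantResult,
             max_quality_drop: float = 0.0) -> dict:
    """Compare submission rate (main KPI) and lead-to-opportunity rate (guardrail)."""
    rate_a = control.form_submissions / control.visitors
    rate_b = variation.form_submissions / variation.visitors
    opp_rate_a = control.opportunities / control.form_submissions
    opp_rate_b = variation.opportunities / variation.form_submissions
    submission_lift = (rate_b - rate_a) / rate_a
    quality_change = (opp_rate_b - opp_rate_a) / opp_rate_a
    # Roll out only with more submissions AND lead quality no worse than allowed.
    roll_out = submission_lift > 0 and quality_change >= -max_quality_drop
    return {"submission_lift": submission_lift,
            "quality_change": quality_change,
            "roll_out": roll_out}


# Hypothetical four week test with an even split
a = VariantResult(visitors=4_000, form_submissions=100, opportunities=30)
b = VariantResult(visitors=4_000, form_submissions=128, opportunities=40)
print(evaluate(a, b))
```

The default max_quality_drop of 0.0 encodes "flat or better"; a team that accepts a small quality dip in exchange for volume could raise it, as long as that is agreed upfront.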

Planning an A/B testing calendar

To keep A/B testing from turning into random “experiments when I feel like it”, manage it with a simple calendar.

For many B2B sites, planning one to three meaningful tests per month is realistic. You can keep this in a spreadsheet or project tool with columns such as:

| Test name | Hypothesis | KPI | Start | End | Owner | Result / learning |
|---|---|---|---|---|---|---|
| Service hero headline | Outcome headline will increase demo requests | Demo submissions per visit | Mar 1 | Mar 28 | Sara | +22 percent, keep new headline |
| Demo form length | Shorter form will increase submissions without hurting the sales qualified opportunity (SQO) rate | Submissions, SQO rate | Apr 3 | Apr 30 | Alex | +40 percent submissions, SQO rate flat |
| Pricing page CTA wording | “Talk to sales” vs “Talk to an expert” | Clicks and demos from page | May 5 | Jun 2 | Jordan | No clear lift, revisit messaging next |

From my perspective, the key piece for a CEO audience is clear ownership. Someone is responsible for launching the test on time, closing it on time, and sharing the result. That is often what was missing in the past agency relationships founders tell me about.

Common A/B testing mistakes and challenges

Many A/B programs in B2B environments stall for the same reasons. Here are common traps I see, and what to do instead.

1. Testing the wrong pages

Effort often goes into low intent content where even big lifts barely move revenue. Focus first on high intent pages such as service pages, pricing, and demo forms.

2. Running tests on very low traffic

After weeks of testing, the result is still unclear, so people lose faith and stop. Use A/B tests on pages with steady traffic. For thin traffic pages, rely more on qualitative research and larger design changes reviewed over longer periods.

3. Changing too many elements in one go

A version wins, but nobody knows why. Was it the headline, the proof, or the layout? Group related changes, yet keep each test focused on a single core idea.

4. Ending tests too soon

It is tempting to stop the test in the first week when variation B looks good, then traffic normalizes and the effect disappears. Agree upfront on minimum duration and minimum sample size, and stick to it unless something is clearly broken.

5. Chasing tiny uplifts that do not matter

You might celebrate a small lift in click through rate that has no visible effect on pipeline or revenue. Tie every test to a metric your CFO and sales leader care about, such as qualified opportunities or proposal volume.

6. Ignoring lead quality and sales feedback

It is easy to boost form fills by making the offer vague, then hear from sales that leads are unqualified. Track stage progression in the CRM and involve sales early when you change messaging and offers.

7. Not implementing winners properly

Sometimes a test shows a clear winner, yet the change never makes it to production, or gets overwritten in a redesign. Give someone clear responsibility for deploying winners and guarding them as the new standard.

8. Forgetting about long sales cycles

Judging tests only on form submissions can be misleading when your sales cycle is long. Use micro conversions for quicker feedback, yet spot check downstream data after a few months to see if lead quality changed.

A/B testing and SEO

Many B2B leaders worry that A/B testing might hurt organic rankings. Done badly, it can. Done well, it usually helps, because search engines aim to reward pages that serve users well. Google addressed this in its blog post “Website Testing And Google Search”, and its recommendations align with what follows.

I pay attention to a few practical points:

Client side vs server side changes. Most tools run client side, meaning they change the page in the browser with JavaScript. That is usually fine, but test pages should still load fast and not flicker between versions.

Avoid cloaking. Search engines should see the same content that human visitors see. Do not create a special “clean” version just for crawlers.

Use 302 redirects for split URL tests. When you test two different URLs, keep redirects temporary (302) so that search engines know you are experimenting.

Add canonical tags where needed. In split URL tests, use rel="canonical" to point variants back to the main page, so authority is not split for the long term.

Keep tests time bound and do not stuff keywords. Once you find a winner, stop the test and clean up. Avoid variants that cram in extra keywords for SEO at the cost of clarity; both users and search engines respond better to clear, useful copy.
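
For split URL tests, the two technical points above, a temporary 302 redirect and a canonical tag on the variant, look roughly like this. It is a framework neutral Python sketch: the URLs are hypothetical placeholders, and in practice your testing tool or web server would handle this. Note that it never inspects the user agent, so crawlers are bucketed exactly like people, which keeps you clear of cloaking.

```python
import hashlib

# Hypothetical URLs for illustration only.
VARIANT_PATH = "/services/growth-consulting-b"
CANONICAL_URL = "https://www.example.com/services/growth-consulting"


def handle_control_request(visitor_id: str) -> tuple[int, dict[str, str]]:
    """Return (status, headers) for a request to the control URL.

    Visitors hashed into the variant get a temporary 302 redirect, which
    signals an experiment rather than a permanent (301) move.
    """
    first_byte = hashlib.sha256(visitor_id.encode("utf-8")).digest()[0]
    if first_byte % 2 == 1:
        return 302, {"Location": VARIANT_PATH}
    return 200, {}


def variant_head_tag() -> str:
    """Tag for the variant page's <head>, pointing search engines at the control."""
    return f'<link rel="canonical" href="{CANONICAL_URL}">'


print(handle_control_request("visitor-42"))
print(variant_head_tag())
```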

When you run thoughtful tests on pages that already get organic traffic and you improve engagement and conversions, SEO usually benefits rather than suffers.

B2B A/B testing examples

To make this concrete, I like to use a few short stories based on typical B2B service scenarios.

Example 1: Consulting firm boosts demo requests with a sharper headline

A mid size consulting firm had a service page with a vague headline and a low demo request rate. The hypothesis was that making the outcome and audience explicit in the headline would lift conversions. The team changed “Consulting for growing companies” to “We help B2B founders add 1 to 3 extra sales qualified deals per month.” Demo request rate rose from 1.4 percent to 2.6 percent across eight weeks while lead quality stayed steady. The takeaway: clear, specific outcomes usually beat generic positioning.

Example 2: IT services company simplifies a heavy form

An IT services provider had a 14 field contact form on its “Talk to us” page. Many visitors started the form then dropped off. The hypothesis was that asking only for the information needed for the first call would increase completions without hurting qualification. The form was reduced to six fields, with a short note explaining why certain details were requested. Submissions increased by 38 percent, and the rate of leads becoming qualified opportunities declined only slightly. Net, there was more qualified pipeline. The takeaway: shorter, clearer forms are often worth testing, even in B2B.
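
The claim that a slight drop in qualification still produced more qualified pipeline is easy to check. Let N be monthly form submissions before the change and q the share that became qualified opportunities. After the change, submissions rose 38 percent and the share became q′:

```latex
\begin{aligned}
\text{qualified}_{\text{before}} &= N\,q \\
\text{qualified}_{\text{after}}  &= 1.38\,N\,q' \\
\text{qualified}_{\text{after}} > \text{qualified}_{\text{before}}
  &\iff q' > \frac{q}{1.38} \approx 0.725\,q
\end{aligned}
```

In other words, with 38 percent more submissions, the qualification rate could fall by up to about 27.5 percent (relative) before net qualified pipeline shrank. A slight decline sits well inside that margin.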

Example 3: Agency pricing page adds a simple ROI explainer

A marketing agency had solid traffic to its pricing page, yet many visitors left without engaging. The hypothesis was that explaining how typical clients see payback would give buyers confidence to start a conversation. The team added a one screen ROI explainer with ranges, simple math, and two short client outcomes. Clicks from the pricing page to the “Book a call” form increased by 24 percent, and average deal size ticked up over the next quarter. The takeaway: good pricing pages reduce anxiety, and A/B testing can show what kind of reassurance your buyers need.

Example 4: A test that did not work, yet still helped

A SaaS company serving B2B clients wanted to increase free trial starts. The hypothesis was that a brighter CTA color and animated button would draw more clicks. The “Start trial” button changed color and included a subtle pulse animation. Clicks increased a little, but trial starts and paid conversions did not. Some users even mentioned in interviews that the animation felt “pushy”. The takeaway: not every test wins on the metrics that matter, and a “failed” test can still give you guidance about what your audience dislikes.

Quick A/B testing ideas you can launch this quarter

Here are practical ideas I often suggest when a CEO wants to get started without heavy development work:

- Test an outcome focused hero headline vs a generic “We help companies grow” line.

- Try “Book a strategy call” vs “Request a proposal” vs “Talk to an expert” as your main CTA.

- Add client logos above the fold vs lower on the page.

- Compare a short demo form vs a more detailed form that asks about deal size or timeline.

- Show a brief guarantee or simple “how we work” summary near the CTA vs no reassurance.

- Add a one line result under a client quote, such as “+32 percent qualified pipeline in six months”.

- Test different subject lines for outbound sequences that send people to your calendar. Benchmark against typical open rates for your industry so you know whether a result is truly strong.

- Experiment with a sticky “Talk to us” bar on mobile vs relying on buttons in the content.

- Add a simple “What happens next” section below forms vs leaving next steps vague.

- On blog posts with traffic, compare an in text CTA paragraph vs a graphic banner.

These are all tests that can run with standard tools and a bit of copy and design time.

Conclusion

For B2B service companies, A/B testing is a quiet compounding lever. It does not shout like a new ad campaign, yet it steadily raises the share of visitors who turn into real pipeline and revenue. Instead of pushing for more traffic at higher prices, you make every current visit more productive.

The path is clear. Start with high intent pages, pick metrics that match your sales targets, avoid common testing traps, and treat experimentation as a regular habit rather than a side project. Over a few quarters, those small percentage gains stack up into serious growth.

Some companies handle all of this in house; others bring in specialist partners. If you choose to involve external help, look for people who understand B2B sales cycles, connect A/B tests directly to CRM and revenue data, and take real ownership of planning, running, and closing experiments. That kind of setup lets you stay focused on strategy while your site gets sharper, month after month. If you are rebuilding other parts of the funnel at the same time, resources on content refresh strategy and setting quarterly marketing goals that map to P&L can help keep experiments aligned with the bigger picture.

Frequently asked questions on A/B testing

How much traffic do I need for A/B tests to be useful?

There is no single magic number, but as a rough guide, a page with a few thousand visits per month can often support meaningful tests. What matters more is how many conversions you get. If a page gets only a handful of leads per month, tests will take a long time to show a clear result, so it may be better to focus tests on higher volume, higher intent pages or first work on driving more qualified traffic.
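
To turn "how many conversions" into a number, a standard approach is the sample size formula for comparing two proportions. The Python sketch below applies it with conventional defaults (95 percent confidence, 80 percent power). The 2 percent baseline, 3 percent target, and 500 weekly visitors are hypothetical inputs to replace with your own.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline: float, target: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors each version needs to detect baseline -> target (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (baseline + target) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(baseline * (1 - baseline)
                                      + target * (1 - target))) ** 2
    return math.ceil(numerator / (target - baseline) ** 2)


def weeks_needed(n_per_variant: int, weekly_visitors: int, variants: int = 2) -> int:
    """Round up to full weeks so the test covers complete weekly cycles."""
    return math.ceil(n_per_variant * variants / weekly_visitors)


n = visitors_per_variant(baseline=0.02, target=0.03)
print(f"{n} visitors per version, about {weeks_needed(n, weekly_visitors=500)} weeks")
```

With these inputs the answer is roughly 3,800 visitors per version. Detecting a smaller lift takes far longer, because the required sample grows with the inverse square of the difference you want to detect.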

How long until I see results from A/B testing?

On a healthy B2B site, early wins often show up within four to eight weeks. That includes running at least one solid test and then rolling out the winner. Larger design or copy shifts can take longer to plan but can pay off more too. The real power of A/B testing shows over quarters, not days.

What if my sales cycle is very long?

If it takes months to close a deal, you do not need to wait that long for every test. Use earlier funnel stages as proxy metrics, such as qualified demo requests or opportunities created. Then, on a slower cadence, check back to see whether certain changes also affected revenue and deal quality.

Can A/B testing replace paid acquisition?

No. A/B testing does not create demand. It improves how well you convert the demand you already have. Many strong B2B companies use both: paid channels to control volume and A/B testing with SEO and content to raise conversion rates and lower cost per opportunity over time.

Do I need a developer, or can marketing own this?

Many tools let marketing teams launch A/B tests on copy, layout, and simple forms without heavy engineering support. For deeper experiments, such as pricing logic or app level flows, a developer will help. What matters is that someone in the organization clearly owns the testing roadmap and has the access needed to implement changes.

If I bring in an A/B testing or SEO partner, how can I tell they are truly accountable?

I would ask which metrics they will report to you each month, how they connect tests to CRM data, and who signs off on stopping or extending a test. Partners who talk mainly about clicks and “engagement” are easier to replace than those who speak fluently about qualified pipeline, win rate, and revenue per visitor. The right partner will be comfortable owning both the experiments and the business outcomes linked to them.
