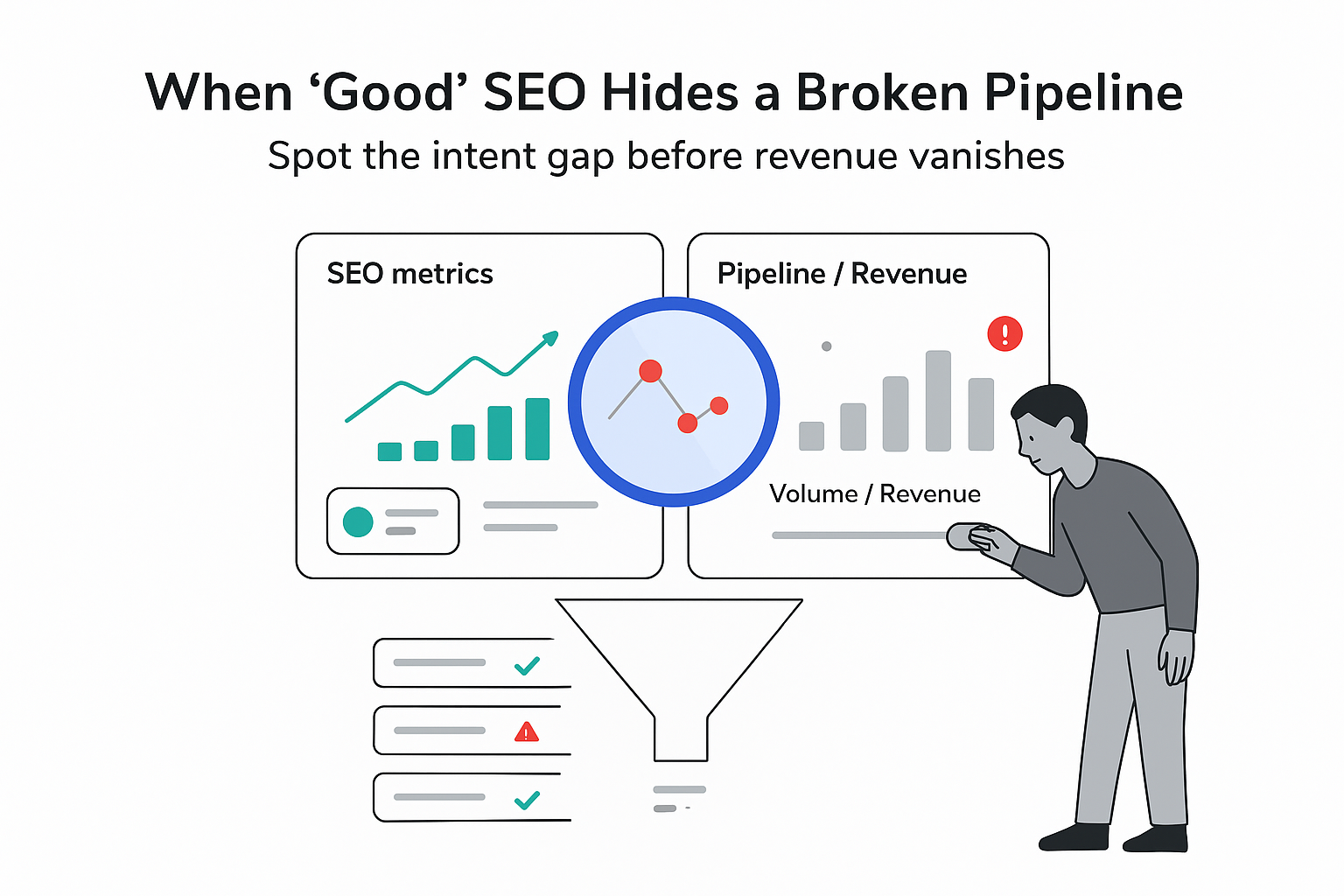

Your search traffic numbers may look decent on paper, yet your pipeline tells a different story. Lead quality is inconsistent, CAC keeps creeping up, and the sales team starts treating "SEO leads" as a punchline. I’ve seen plenty of monthly reports full of charts that never answer the only question that matters: which pages helped create qualified opportunities and closed revenue?

That gap is where AI search intent optimization becomes useful for B2B companies. It’s the practice of aligning (1) what buyers are actually trying to accomplish, (2) how modern search systems interpret that intent, and (3) what your content proves - so the right organic visits turn into real pipeline.

The revenue gap between traffic and pipeline

For B2B services, the game is rarely “more sessions.” It’s revenue predictability: getting in front of the right people at the right buying stage, with content that matches their decision criteria.

If organic growth feels stuck, it’s often because the content strategy is built around keyword volume and rankings rather than purchase intent and sales-cycle reality. If you’re still optimizing primarily for search engine positioning, it’s easy to win visibility while losing the deal.

AI-driven search surfaces (overviews, summaries, chat-style answers) amplify this problem. They can satisfy basic queries without a click, while sending fewer - but potentially more qualified - visits to sources that are clearly structured and credible.

| Approach | What it focuses on | Typical outcome for B2B services |
|---|---|---|
| Keyword-first SEO | Search volume and broad rankings | Traffic that rarely turns into opportunities |
| Intent-led SEO | Buyer stage, objections, decision criteria | Fewer visits that convert at a higher rate |
| AI search intent optimization | Human intent + how AI systems extract answers | Higher chance of being cited/quoted and trusted in AI surfaces |

I don’t treat this as “SEO vs. AI.” I treat it as a shift in how visibility is earned - and how quickly irrelevant content gets filtered out. If you’re building a pipeline-first program, this pairs naturally with a pipeline-first B2B SEO growth approach.

What “intent” means in modern, AI-shaped search

Search intent optimization means aligning content with the reason behind a query, not just the words. When someone searches, they’re trying to do something: learn, compare, validate, shortlist, or take an action.

Older SEO could win by matching phrasing. Modern systems infer meaning using signals such as query context, related entities/topics, and aggregate behavior patterns across similar searches. In other words, the model is trying to predict: what would satisfy this person right now? And in many cases, it tries to answer directly in the results.

That changes what “good content” looks like. It’s not only relevant - it’s also easy to extract, hard to misquote, and scoped to one primary job-to-be-done. If I want a page to show up in AI-generated summaries, I need it to read like a dependable source, not a vague opinion piece or a thin lead-gen wrapper.

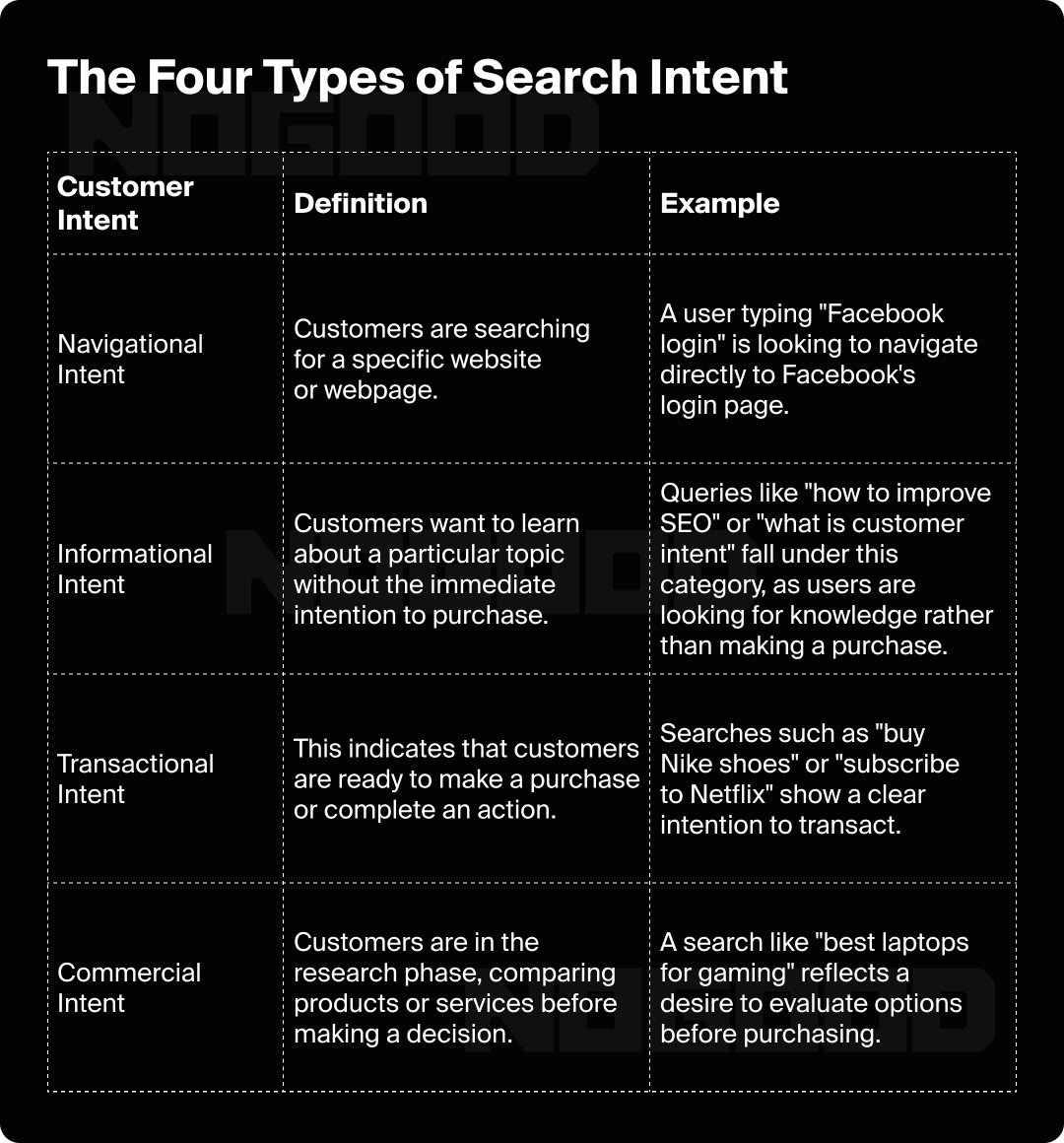

Intent types in B2B and what content actually fits

The classic intent buckets still hold up in B2B; what changes is that many queries are blended (learning + comparing + edging toward action). I use the four core types as an organizing layer, then design pages to support the natural “next question” buyers ask.

- Informational intent (top of funnel): the buyer is learning language, risks, and options. Content that performs here is practical and specific - guides, frameworks, and “how it works” explanations that don’t jump straight to a pitch.

- Navigational intent (mid to bottom): the buyer is looking for a specific company, person, or page. This is where clear brand and credibility pages matter: case evidence, process explanations, and proof points that reduce perceived risk.

- Commercial investigation (mid funnel): the buyer is comparing approaches or providers. This intent rewards content structured around decision criteria: pricing ranges, what’s included vs. excluded, tradeoffs, timelines, and common failure modes. (If you’re building these assets, see how B2B comparison pages can act as pipeline drivers.)

- Transactional intent (bottom): the buyer wants to take a step (contact sales, request a proposal, start an evaluation). These pages should be precise and frictionless: what happens next, who it’s for, how pricing works, and what outcomes are realistic.

A useful B2B nuance: “transactional” doesn’t always mean “ready to buy.” Often it means “ready to reduce uncertainty with a conversation.” If the page is written as if the buyer is already convinced, it tends to underperform.

Building an intent map around your buyers (and your best deals)

When I design an intent-led roadmap, I start from revenue - not from a massive keyword export.

I anchor on the buyers and deal types I want more of, then work backwards: who searches before a deal becomes active (CFO, CMO, Head of RevOps, IT/security leader), what triggers the search (churn spike, pipeline drop, compliance change, tool migration), what stalls deals (pricing risk, resourcing, time-to-value, integration complexity), and what proof closes the gap (case evidence, methodology, benchmarks, risk mitigation).

From there, I translate those into search-like language and cluster them by intent. The important part is not the labels - it’s the alignment: the page needs to satisfy the query’s main job, and it needs to set up the next step the buyer naturally takes. This is where a B2B high-intent keyword strategy beats “publish more” every time.

This is also where I bring sales reality into the loop. If the CRM shows that closed-won deals often started from “comparison” or “pricing model” research, then “definition” content can’t dominate the roadmap just because it’s easier to publish.
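
To make that CRM check concrete, here is a minimal Python sketch of how I might rank a content backlog by the share of closed-won deals each intent type touched first. The field names, the sample deals, and the backlog titles are all hypothetical placeholders, not data from a real CRM.

```python
from collections import Counter

# Hypothetical CRM export: intent type of the first organic page
# each closed-won deal touched. Replace with your own data.
closed_won_first_touch = [
    "commercial", "commercial", "transactional",
    "informational", "commercial", "transactional",
]

# Hypothetical content backlog, tagged by the intent each page would serve.
backlog = [
    {"title": "What is RevOps?", "intent": "informational"},
    {"title": "Agency vs in-house: cost and tradeoffs", "intent": "commercial"},
    {"title": "How our pricing model works", "intent": "commercial"},
    {"title": "Request a scoped proposal", "intent": "transactional"},
]


def prioritize(backlog, closed_won_intents):
    """Sort backlog items by the share of closed-won deals tied to their intent."""
    counts = Counter(closed_won_intents)
    total = sum(counts.values())
    scored = [
        {**item, "deal_share": counts[item["intent"]] / total if total else 0.0}
        for item in backlog
    ]
    # sorted() is stable, so items with equal share keep their backlog order.
    return sorted(scored, key=lambda item: item["deal_share"], reverse=True)


if __name__ == "__main__":
    for item in prioritize(backlog, closed_won_first_touch):
        print(f'{item["deal_share"]:.0%}  {item["intent"]:<14} {item["title"]}')
```

The share is only a starting signal. I would still adjust the order by hand for deal size and for how hard each page is to produce.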

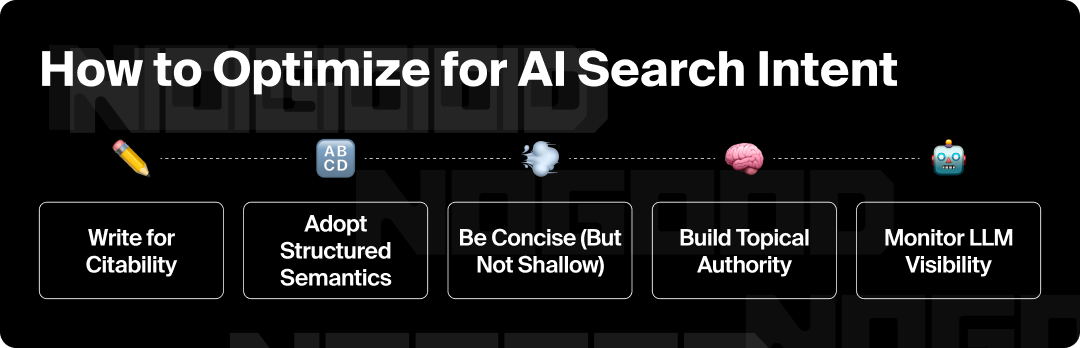

What changed with AI overviews and chat-style answers

AI summaries can reduce clicks for some query types, especially simple definitions and basic “what is X” questions. That’s not automatically bad for B2B. In many categories, the win is not maximum traffic - it’s becoming a trusted, cited source during evaluation. This shift is closely tied to zero-click search behavior, where the buyer’s journey still moves forward even when no click happens.

What I optimize for now is answer-worthiness and extractability:

- Can a model pull a clean, accurate explanation from the page without rewriting it into something misleading?

- Does the content present decision-grade specifics (scope, constraints, numbers, tradeoffs), or is it generic?

- Is the page clearly organized so the system can identify what question each section answers?

If a page is the clearest source on a narrow commercial topic (for example, a specific regulated use case or a well-defined service model), it has a better chance of being surfaced repeatedly across related prompts - even when the buyer never clicks a traditional blue link.

How intent gets inferred (in plain English)

B2B leaders don’t need to become data scientists to benefit from intent optimization, but the basics help you make better content decisions.

Most search and AI systems infer intent using a combination of query meaning, patterns from similar searches, behavioral signals (did users bounce back or engage deeply?), and context (device, location, timing, follow-up searches). Under the hood, many systems represent language as vectors (“embeddings”), where meaning is captured by proximity: related phrases sit closer together even if the wording differs.

The practical implication I care about: I can’t rely on exact-match phrasing anymore. I have to cover the concept thoroughly, answer adjacent questions, and structure the page so both humans and machines can navigate it. In practice, this also pairs well with building durable clusters - not isolated posts - like the ones outlined in a B2B topic cluster strategy.
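
To illustrate the “proximity” idea, here is a small Python sketch using cosine similarity, one common way to compare embedding vectors. The three-dimensional vectors are made-up toy values for illustration only; real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: closer to 1.0 means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Avoid dividing by zero for empty/zero vectors.
    return dot / (norm_a * norm_b)


# Toy vectors (assumed values, not produced by any real model).
toy_embeddings = {
    "crm pricing models": [0.9, 0.1, 0.2],
    "how much does a crm cost": [0.8, 0.2, 0.3],
    "what is a crm": [0.1, 0.9, 0.2],
}

if __name__ == "__main__":
    anchor = "crm pricing models"
    for phrase, vector in toy_embeddings.items():
        if phrase != anchor:
            score = cosine_similarity(toy_embeddings[anchor], vector)
            print(f"{anchor!r} vs {phrase!r}: {score:.2f}")
```

In this toy setup, the two cost-related phrases score much closer to each other than either does to the definition query, even though they share few exact words. That is the behavior that makes exact-match phrasing less important than covering the concept.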

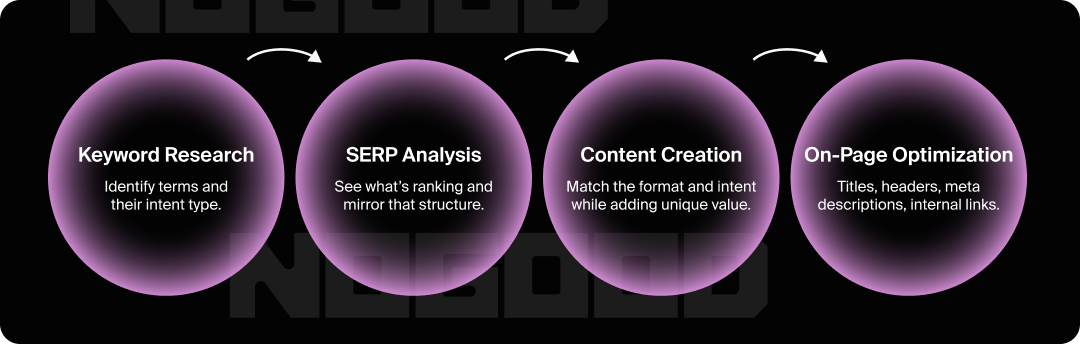

A practical workflow to realign existing content

When I want to move from theory to execution without turning it into an endless project, I use a tight workflow.

- Audit what already performs. Start with top queries and top landing pages, then map each page to the intent it actually satisfies versus the intent the query implies.

- Find mismatches. Common issues: an early-stage query landing on a hard-sell page, or a high-intent comparison query landing on vague thought leadership. These mismatches typically show up as high impressions with low CTR and weak downstream conversion quality.

- Decide: rewrite, merge, or split. If one URL is trying to satisfy multiple intents, sharpen it to one primary intent or split the content so each page has a clear job. If two pages compete for the same cluster, consolidate.

- Rewrite for clarity and extractability. Move direct answers closer to the top, use question-shaped subheads where appropriate, define terms once (cleanly), and add comparisons or constraints that show expertise. Structured data can help in some cases, but it never replaces clear writing.

- Connect clusters with internal links. Link informational content to commercial comparisons, and comparisons to proof (case evidence, methodology, scoped examples). The goal is to guide a buyer through a natural evaluation path, not force a conversion.
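
The audit and mismatch steps above can be roughed out in a few lines of Python. This is a sketch under loud assumptions: the keyword hints are a crude heuristic I made up for illustration (not how search engines classify queries), and the rows stand in for a hypothetical export of queries, landing pages, and a manually assigned page intent.

```python
# Crude, hypothetical keyword hints per intent. Checked in this order.
INTENT_HINTS = {
    "transactional": ("request a proposal", "contact sales", "get a quote", "book a demo"),
    "commercial": (" vs ", "compare", "pricing", "alternatives", "best "),
    "informational": ("what is", "how to", "how does", "guide"),
}


def infer_query_intent(query):
    """Guess the intent a query implies from simple keyword hints."""
    padded = f" {query.lower()} "  # Padding lets ' vs ' match at word boundaries.
    for intent, hints in INTENT_HINTS.items():
        if any(hint in padded for hint in hints):
            return intent
    return "unknown"


def find_mismatches(rows):
    """Return rows where the implied query intent differs from the page's intent."""
    mismatches = []
    for row in rows:
        implied = infer_query_intent(row["query"])
        if implied != "unknown" and implied != row["page_intent"]:
            ctr = row["clicks"] / row["impressions"] if row["impressions"] else 0.0
            mismatches.append({**row, "implied_intent": implied, "ctr": ctr})
    # Highest-impression mismatches first: they waste the most visibility.
    return sorted(mismatches, key=lambda r: r["impressions"], reverse=True)


# Hypothetical sample rows; page_intent is assigned by hand during the audit.
rows = [
    {"query": "what is revenue operations", "page": "/contact-sales",
     "page_intent": "transactional", "impressions": 4000, "clicks": 20},
    {"query": "revops agency vs in-house team", "page": "/blog/future-of-revops",
     "page_intent": "informational", "impressions": 1200, "clicks": 12},
    {"query": "revops pricing models", "page": "/pricing-explained",
     "page_intent": "commercial", "impressions": 900, "clicks": 63},
]

if __name__ == "__main__":
    for m in find_mismatches(rows):
        print(f'{m["page"]}: query implies {m["implied_intent"]}, '
              f'page serves {m["page_intent"]} (CTR {m["ctr"]:.1%})')
```

I would treat the output as a review queue, not a verdict. Blended queries will be misclassified by rules this simple, so each flagged page still needs a human read before deciding to rewrite, merge, or split.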

This is also where I’m careful about tone. If the query is “compare X vs Y,” I write like an analyst. If it’s “pricing model,” I write like someone reducing risk and ambiguity. If I write everything like a sales page, intent alignment breaks. For teams struggling to translate this into pipeline impact, the patterns in fixing a fragile B2B SEO pipeline often show the fastest wins.

How I measure success: leading indicators to revenue

If I’m accountable to a business outcome, I don’t measure intent optimization by traffic alone. I track a chain of proof - from visibility, to engagement quality, to pipeline signals - because B2B revenue often lags by months.

Leading indicators (weeks to a few months): higher CTR on target clusters, improved engagement on reworked pages, and more searches landing on the “right” page type (comparison queries hitting comparison pages, pricing queries hitting pricing explanations).

Business indicators (months, sometimes longer): higher share of qualified inbound conversations from organic, better conversion to opportunity for high-intent clusters, and stronger win rate or shorter sales cycles when prospects arrive pre-educated.

On timelines, I stay realistic. I often see early movement within 4-8 weeks on CTR and engagement after meaningful rewrites. Pipeline influence tends to show up in the 3-6 month range, while revenue impact can take 6-12+ months depending on deal cycle length and competitive pressure. That’s why I like tracking “opportunity created” and stage progression, not only closed-won.
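
As a sketch of that chain of proof, the Python below rolls up page-level numbers into per-cluster CTR (a leading indicator) and click-to-opportunity rate (a business indicator). The cluster names, field names, and figures are hypothetical; in practice the opportunity counts would come from your CRM's attribution, which this example simply assumes exists.

```python
def cluster_metrics(rows):
    """Aggregate page rows by cluster and compute CTR and click-to-opportunity rate."""
    totals = {}
    for row in rows:
        t = totals.setdefault(
            row["cluster"], {"impressions": 0, "clicks": 0, "opportunities": 0}
        )
        t["impressions"] += row["impressions"]
        t["clicks"] += row["clicks"]
        t["opportunities"] += row["opportunities"]

    for t in totals.values():
        # Guard against division by zero for clusters with no visibility or clicks.
        t["ctr"] = t["clicks"] / t["impressions"] if t["impressions"] else 0.0
        t["click_to_opp"] = t["opportunities"] / t["clicks"] if t["clicks"] else 0.0
    return totals


# Hypothetical sample data: one row per landing page.
pages = [
    {"cluster": "pricing", "impressions": 1000, "clicks": 40, "opportunities": 3},
    {"cluster": "pricing", "impressions": 1000, "clicks": 60, "opportunities": 2},
    {"cluster": "definitions", "impressions": 8000, "clicks": 160, "opportunities": 1},
]

if __name__ == "__main__":
    for name, m in cluster_metrics(pages).items():
        print(f'{name}: CTR {m["ctr"]:.1%}, click-to-opportunity {m["click_to_opp"]:.1%}')
```

Reporting both rates side by side makes it harder for a high-traffic, low-pipeline cluster to look like a success.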

Two practical add-ons help here: (1) keep reporting board-ready so it survives scrutiny (see board-ready dashboards for SEO and pipeline), and (2) track whether your brand shows up in AI answers using a tool built for LLM visibility monitoring.

It’s also fair to run paid and outbound alongside this work. Those channels can produce demand quickly, while intent-led organic visibility compounds over time and reduces dependency on continuously rising acquisition costs. (If you’re auditing readiness before scaling spend, use a marketing operations checklist to avoid expensive leaks.)

Advanced considerations: volatility, multimodal, and pitfalls

In fast-changing categories (cybersecurity, fintech, AI infrastructure, compliance-heavy industries), intent shifts quickly. If regulations change or a category narrative flips, query patterns can move in weeks. I handle that by reviewing priority clusters regularly and updating high-value pages before they decay into outdated answers.

I also plan for multimodal behavior. Decision makers increasingly consume content through video clips, transcripts, diagrams, and voice queries. Clean summaries, descriptive headings, and properly described visuals make content easier to reuse and cite - both by people and by AI systems. If you want concrete tactics here, multimodal search optimization is where a lot of B2B teams are under-invested.

Common pitfalls are usually not complicated: optimizing for traffic volume instead of pipeline value; writing for the wrong stage (pushing “talk to sales” language at early learners); publishing thin content across too many unrelated topics; avoiding specificity (especially on pricing logic, scope boundaries, and tradeoffs); and ignoring sales feedback on which searches produce serious opportunities.

The core strategy stays simple: pick a small set of profitable intent clusters, build the most decision-useful pages in the category, and keep improving them based on what buyers and search behavior reveal over time.
