Traffic is climbing, form fills look fine, ads are busy, and yet the pipeline report is awkwardly quiet. Revenue is flat. The sales team keeps saying:

“These leads are junk.”

When I see that pattern, the real question is not how to get more leads - it’s how to measure lead quality so I stop paying for noise and start fueling real deals.

For B2B service companies with long sales cycles and high annual contract value (ACV), knowing how to measure lead quality is often the difference between a marketing engine that burns cash and one that builds predictable pipeline and profit.

Why measure lead quality

In B2B services, a “lead” can be cheap to generate. Sales capacity and delivery capacity are not. That’s why I treat lead quality measurement as an operating discipline, not a reporting nice-to-have.

Lead quality isn’t about form fills - or even MQLs. It’s about the probability that a contact will turn into real pipeline and then closed revenue, at a cost that keeps customer acquisition costs under control.

A simple way I frame it is:

Lead Quality = Fit × Intent × Validity × Reachability

Here’s what I mean by each part:

- Fit: the right company and the right person (industry, revenue band, role/seniority, region).

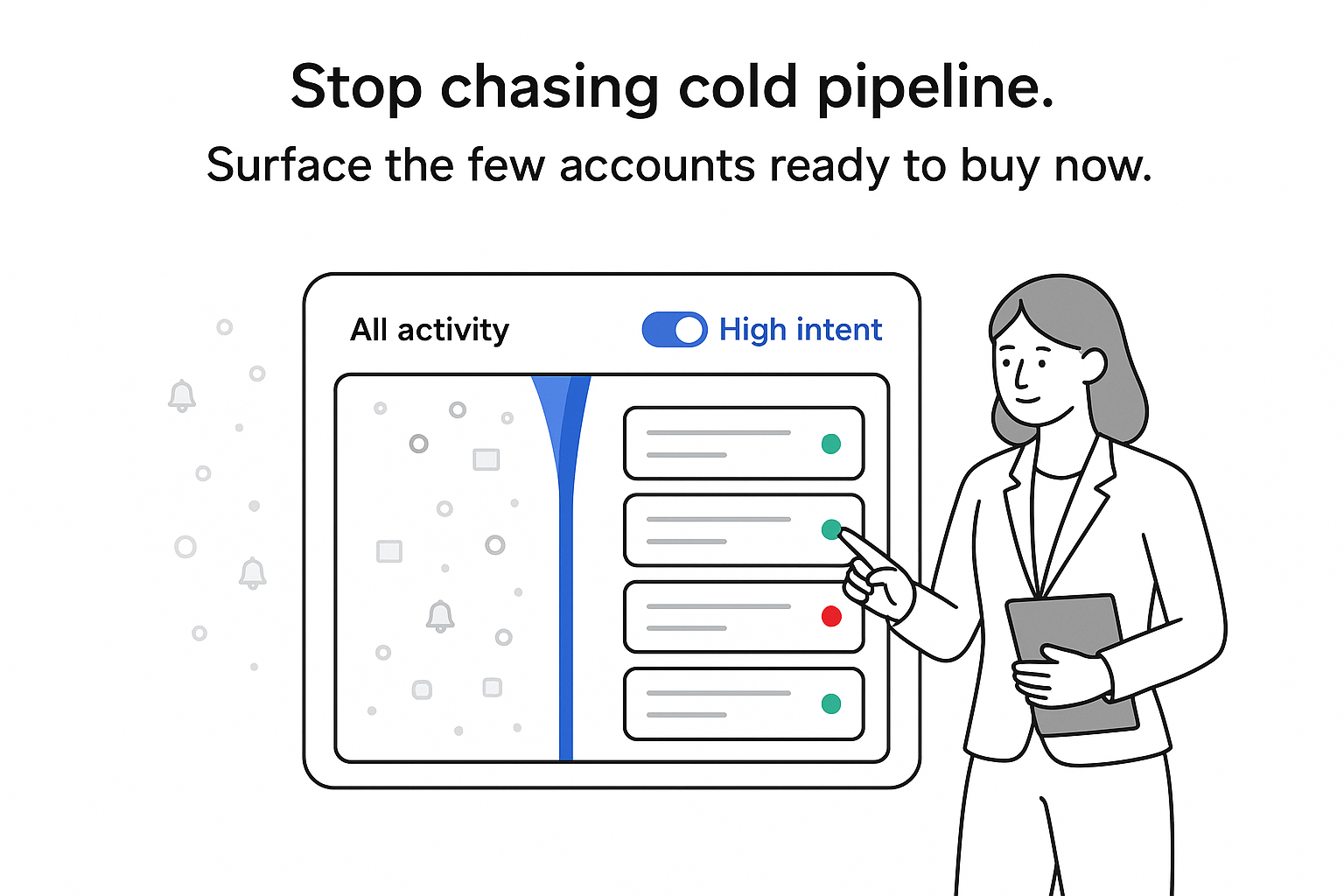

- Intent: clear pain and urgency (they engage with pricing, request a consultation, or take actions that signal an active evaluation - not casual browsing).

- Validity: the data is real (accurate email/phone, real company details, no obvious spam, and appropriate consent captured where relevant). If you’re seeing a lot of bounces or junk records, it often comes down to invalid leads.

- Reachability: sales can actually contact them and move them into a real conversation in a reasonable time window.

If any one of these goes to zero, the practical “quality” of that lead is close to zero. Measuring lead quality means scoring and tracking these components instead of counting raw volume - and using those insights to protect sales time, keep acquisition economics sane, and focus effort on sources that reliably create meetings, opportunities, and revenue.
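To make the multiplicative framing concrete, here is a minimal Python sketch. It assumes each component has already been scored on a 0-to-1 scale. That scale, the `LeadScores` class, and the `lead_quality` function are hypothetical illustrations, not a standard scoring model.

```python
from dataclasses import dataclass


@dataclass
class LeadScores:
    """Component scores, each assumed (hypothetically) to be on a 0.0-1.0 scale."""
    fit: float
    intent: float
    validity: float
    reachability: float


def lead_quality(s: LeadScores) -> float:
    """Multiply the four components, so any zero component zeroes the whole score."""
    for name, value in vars(s).items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return s.fit * s.intent * s.validity * s.reachability


strong = LeadScores(fit=0.9, intent=0.8, validity=1.0, reachability=0.9)
unreachable = LeadScores(fit=0.9, intent=0.8, validity=1.0, reachability=0.0)
print(round(lead_quality(strong), 3))  # 0.648
print(lead_quality(unreachable))       # 0.0
```

Multiplying rather than adding is what makes a zero in any single component collapse the overall score; an additive score would still give the unreachable lead credit for its fit and intent.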

The risks of not measuring lead quality

When no one is clearly measuring lead quality, dashboards can look healthy while the business feels off. I’ll see “record lead volume” next to a forecast that barely moves.

The failure mode is usually the same: budget keeps flowing to channels that create cheap leads on paper, while the downstream numbers (contact rate, meetings, opportunities, and closed won) quietly deteriorate. Sales time gets burned on conversations that were never likely to convert, and attribution becomes misleading - fast, low-quality sources look “best” at the top of the funnel, while slower, higher-value sources look weak if I only judge them by early-stage volume. If you’re dealing with long cycles, it helps to use an attribution approach built for reality (see Attribution for Long B2B Cycles: A Practical Model for Reality).

There’s also a real hygiene and compliance angle. If validity and consent aren’t tracked consistently, it becomes easier to accumulate duplicates, spam records, or contacts that shouldn’t be approached - none of which helps revenue, and all of which can create avoidable risk.

Day to day, this typically shows up as high form fills but low contact rates, lots of “leads created” but few meetings, long lead-to-sale timelines as deals leak out of the funnel, and growing pushback on “marketing-sourced” numbers because sales doesn’t trust the inputs.

Main lead quality metrics to track

There are many ways to describe “good” leads, but I can usually keep a tight core set of lead quality metrics as long as the funnel stages are clear. A simple funnel model is:

Lead → Qualified Lead → Meeting → Opportunity → Closed won

Once those stages are defined consistently, the goal is to track the metrics that connect marketing activity to revenue outcomes across the lead lifecycle.

| Metric | What it tells me | Where it usually lives |
|---|---|---|
| Conversion rate | How well leads move between stages (for example, Lead → Meeting) | CRM stage history and activity notes |
| Cost per qualified lead | What it costs to generate leads that meet the agreed “qualified” bar | Channel spend + CRM counts |
| Contact rate | Whether sales can reach leads and start a real conversation | Call logs, email replies, CRM outcomes |
| Lead-to-sale velocity | How long it takes leads to become closed won | CRM + revenue reporting |
| Revenue per lead | Average revenue generated per lead, by source/campaign | CRM + invoicing/revenue reporting |
| Compliance/validity indicators | Data quality signals (duplicates, bounces, spam patterns, missing consent fields) | Form submissions + CRM data hygiene |

If I had to pick only two metrics to anchor a lead-quality conversation for B2B services, they would be conversion rate (downstream, not top-of-funnel) and cost per qualified lead, because together they force the conversation back to unit economics.

Conversion rate

Not every conversion rate is equally useful. For service businesses, I care most about the stage transitions that mirror how selling actually happens: Lead → Meeting held, Meeting → Opportunity, and Opportunity → Closed won (and sometimes Lead → Opportunity if that’s tracked cleanly).

Three practices keep conversion rates from becoming misleading:

- Break it down by source and campaign, and slice by the ICP segments that matter (industry, company size, region, seniority).

- Respect sample size. A conversion rate based on a handful of leads is mostly noise. Wait for a stable base before making major budget calls.

- Track cohorts over time for longer sales cycles. Follow “leads generated in January” forward at 30, 60, and 90+ days to separate early movement from real outcomes.
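Here is a minimal sketch of cohort checkpoints in Python, assuming a hypothetical export of January leads with a creation date and an optional meeting date. The `meeting_rate_by_checkpoint` function and the record layout are illustrative. The key idea is that a lead only counts toward the N-day rate once it is at least N days old, so recent leads don't drag the rate down before they've had time to move.

```python
from datetime import date

# Hypothetical January cohort: (lead_id, created_on, meeting_held_on or None).
JANUARY_LEADS = [
    ("a", date(2024, 1, 5), date(2024, 1, 20)),
    ("b", date(2024, 1, 9), date(2024, 3, 1)),
    ("c", date(2024, 1, 15), None),
    ("d", date(2024, 1, 28), date(2024, 4, 10)),
]


def meeting_rate_by_checkpoint(leads, as_of: date, checkpoints=(30, 60, 90)) -> dict:
    """Share of leads with a meeting held within N days of creation, per checkpoint N."""
    rates = {}
    for n in checkpoints:
        # Only leads at least n days old have had a full n days to convert.
        eligible = [lead for lead in leads if (as_of - lead[1]).days >= n]
        if not eligible:
            rates[n] = None
            continue
        hits = sum(
            1
            for _, created, met in eligible
            if met is not None and (met - created).days <= n
        )
        rates[n] = hits / len(eligible)
    return rates


print(meeting_rate_by_checkpoint(JANUARY_LEADS, as_of=date(2024, 5, 1)))
# {30: 0.25, 60: 0.5, 90: 0.75}
```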

This is also where velocity matters: a source with slightly lower close rates but faster movement can still be attractive because it improves cash-flow timing and shortens payback. If you want a clean way to spot where deals leak, pair this with Pipeline Analytics: Reading Stage Drop-Off Like a Diagnostic.
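To compare velocity across sources, one simple option is the median number of days from lead creation to closed won. The sketch below assumes a hypothetical list of closed-won deals. Using the median rather than the mean is a choice made here so a single very slow deal doesn't dominate.

```python
from datetime import date
from statistics import median

# Hypothetical closed-won deals: (source, lead_created_on, closed_won_on).
DEALS = [
    ("referral", date(2024, 1, 10), date(2024, 3, 10)),
    ("referral", date(2024, 2, 1), date(2024, 3, 15)),
    ("paid_search", date(2024, 1, 5), date(2024, 5, 20)),
    ("paid_search", date(2024, 1, 20), date(2024, 4, 30)),
    ("paid_search", date(2024, 2, 2), date(2024, 6, 1)),
]


def median_days_to_close(deals) -> dict:
    """Median lead-to-closed-won time in days, grouped by source."""
    by_source = {}
    for source, created, closed in deals:
        by_source.setdefault(source, []).append((closed - created).days)
    return {source: median(days) for source, days in by_source.items()}


print(median_days_to_close(DEALS))  # {'referral': 51.5, 'paid_search': 120}
```

Because only closed deals are included, a young source can look faster than it really is; reading it alongside the cohort tracking described above helps.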

Cost per qualified lead

Cost per lead (CPL) alone is a trap. A cheap lead that never turns into a meeting - or never becomes a real opportunity - is still expensive in the only currency that matters: time and pipeline.

Cost per qualified lead (CPQL) forces an agreement on the point where sales says, “Yes, this is worth real follow-up.” Depending on the business, I’ll define “qualified” as something like an SQL created, a meeting held, or an opportunity created - but I pick one definition and keep it consistent long enough to learn from it.

The basic formula is:

CPQL = Total channel cost / Number of qualified leads from that channel

When I think about “channel cost,” I include the direct spend and any clearly attributable operating costs required to generate those leads (not vague overhead). The key is consistency: if I include a cost type for one channel, I include it for the others in the same way.
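Here is a minimal sketch of the CPQL formula with the consistency rule built in: every channel is summed over the same list of cost types, and an unexpected cost type raises an error instead of being silently included. The cost categories, channel names, and figures are hypothetical.

```python
# Hypothetical cost categories, applied identically to every channel.
COST_TYPES = ("media_spend", "agency_fees", "tooling")

CHANNELS = {
    "paid_search": {"costs": {"media_spend": 12000, "agency_fees": 3000, "tooling": 500}, "qualified": 31},
    "linkedin": {"costs": {"media_spend": 8000, "agency_fees": 2000, "tooling": 500}, "qualified": 14},
}


def cpql(costs: dict, qualified: int) -> float:
    """Total channel cost divided by the number of qualified leads from that channel."""
    unknown = set(costs) - set(COST_TYPES)
    if unknown:
        # Refuse cost types that are not applied to every channel the same way.
        raise ValueError(f"unexpected cost types: {sorted(unknown)}")
    total = sum(costs.get(t, 0) for t in COST_TYPES)
    if qualified == 0:
        return float("inf")  # spend with no qualified leads: CPQL is unbounded
    return total / qualified


for name, data in CHANNELS.items():
    print(name, cpql(data["costs"], data["qualified"]))
# paid_search 500.0
# linkedin 750.0
```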

Then I connect CPQL back to expected revenue with simple math. For example, if CPQL is $500, the close rate from qualified lead to customer is 20%, and average first-year revenue is $15,000, then expected revenue per qualified lead is 0.2 × 15,000 = $3,000. In that case, paying $500 for a $3,000 expected value can make sense - assuming delivery capacity and margins support it.
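The worked example translates directly into a few lines of Python. The function name and the value-to-cost ratio are illustrative additions; the inputs are the figures from the paragraph above.

```python
def expected_value_per_qualified_lead(close_rate: float, avg_first_year_revenue: float) -> float:
    """Expected first-year revenue from one qualified lead."""
    return close_rate * avg_first_year_revenue


cpql = 500.0
ev = expected_value_per_qualified_lead(close_rate=0.20, avg_first_year_revenue=15_000)
print(round(ev, 2))         # 3000.0
print(round(ev / cpql, 2))  # 6.0 (expected first-year revenue per dollar of CPQL)
```

The ratio compares revenue to acquisition cost only; it says nothing about margin or delivery capacity, which is why those caveats still apply.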

This is also where higher CPQL from a high-intent source can outperform low CPL from a low-intent source. CPQL brings lead quality back to economics instead of volume.

How to measure lead quality

To move from theory to a system I can use weekly (not just in an annual audit), I think in four moves: definitions, tracking, reporting, and decisions.

- Align definitions with sales: I start by agreeing - plainly - on what “Lead,” “Qualified Lead,” “Meeting,” “Opportunity,” and “Closed won” mean. I write the definitions down and keep them stable so the metrics remain comparable over time.

- Instrument tracking: I make sure stages are set up and actually used consistently, and that source tracking is reliable (for example, campaign parameters are captured, and inbound sources aren’t getting overwritten). I also ensure the minimum context fields exist to judge fit - industry, size, region, role/seniority - and that the team can record contact attempts and outcomes without turning it into busywork.

- Report by cohort: I view leads in groups based on when they came in and from which source, then follow those cohorts forward into meetings, opportunities, and revenue.

- Make decisions from the numbers: once the same scorecard repeats every month, I can shift budget and attention toward sources with strong conversion, sensible CPQL, and good revenue per lead - and away from sources that look good only at the top.
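For the tracking step, one common safeguard against overwritten sources is to store the first campaign touch once and only update a separate last-touch field afterward. This sketch parses standard UTM parameters with Python's `urllib.parse`. The `first_touch` and `last_touch` field names are hypothetical; a real CRM will have its own fields and rules.

```python
from urllib.parse import parse_qs, urlparse

UTM_KEYS = ("utm_source", "utm_medium", "utm_campaign")


def capture_source(lead: dict, landing_url: str) -> dict:
    """Record campaign parameters without overwriting the original source."""
    params = parse_qs(urlparse(landing_url).query)
    touch = {k: params[k][0] for k in UTM_KEYS if k in params}
    if touch:
        lead.setdefault("first_touch", touch)  # set once, never replaced
        lead["last_touch"] = touch             # always reflects the latest tagged visit
    return lead


lead = {}
capture_source(lead, "https://example.com/?utm_source=google&utm_medium=cpc&utm_campaign=q1_brand")
capture_source(lead, "https://example.com/pricing?utm_source=linkedin&utm_medium=paid_social")
print(lead["first_touch"]["utm_source"], lead["last_touch"]["utm_source"])  # google linkedin
```

A visit with no UTM parameters leaves both fields untouched, so untagged traffic can't erase a known source.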

To keep the system honest, I also rely on a simple measurement agreement between marketing and sales. In practice, that means setting expectations for response time to new inbound leads, requiring stage updates after meaningful interactions, and using consistent disqualification reasons (for example, not ICP, no budget, no clear timeline). The specific rules matter less than the consistency; without it, even a clean-looking dashboard can tell the wrong story.

A practical way to run this is funnel-based measurement: I group leads by source and time period (for example, “paid search in Q1”), map them to downstream outcomes in the CRM (meetings, opportunities, closed won, revenue), calculate conversion and velocity, and then compare cohorts over time. When I layer in CPQL and revenue per lead, I get a simple scorecard that shows not just what generated leads, but what generated business.
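Here is a minimal sketch of that scorecard in Python. It assumes a hypothetical lead export with source, period, stage flags, and closed-won revenue, plus spend per source and period. "Qualified" is assumed to mean "meeting held" purely for illustration.

```python
from collections import defaultdict

# Hypothetical leads: (source, period, meeting_held, opportunity_created, closed_won_revenue).
LEADS = [
    ("paid_search", "Q1", True, True, 20000),
    ("paid_search", "Q1", True, False, 0),
    ("paid_search", "Q1", False, False, 0),
    ("paid_search", "Q1", False, False, 0),
    ("referral", "Q1", True, True, 30000),
    ("referral", "Q1", True, True, 0),
]
SPEND = {("paid_search", "Q1"): 4000, ("referral", "Q1"): 1000}


def scorecard(leads, spend) -> dict:
    """Per (source, period) cohort: stage conversion, revenue per lead, and CPQL."""
    groups = defaultdict(list)
    for source, period, met, opp, revenue in leads:
        groups[(source, period)].append((met, opp, revenue))
    rows = {}
    for key, items in groups.items():
        n = len(items)
        meetings = sum(1 for met, _, _ in items if met)
        opps = sum(1 for _, opp, _ in items if opp)
        revenue = sum(r for _, _, r in items)
        rows[key] = {
            "leads": n,
            "meeting_rate": meetings / n,
            "opportunity_rate": opps / n,
            "revenue_per_lead": revenue / n,
            # Assumption: a "qualified lead" here means a meeting was held.
            "cpql": spend.get(key, 0) / meetings if meetings else None,
        }
    return rows


for (source, period), row in scorecard(LEADS, SPEND).items():
    print(source, period, row)
```

In this toy data, paid search produces twice the leads but a CPQL four times higher than referrals, which is exactly the kind of gap a volume-only report hides.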

For longer sales cycles, I don’t expect new cohorts to show full revenue quickly. Instead, I use leading indicators (like meeting rate and opportunity rate) for recent cohorts, and I lock in final conversion and revenue outcomes for older cohorts once enough time has passed to be meaningful.
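One way to encode this rule is to decide, per cohort, which metrics are ready to report. The 120-day cycle length and the metric names below are hypothetical placeholders; in practice the cutoff would come from the firm's own lead-to-sale velocity.

```python
from datetime import date

LEADING = ("meeting_rate", "opportunity_rate")
FINAL = LEADING + ("close_rate", "revenue_per_lead")


def metrics_to_report(cohort_start: date, as_of: date, cycle_days: int = 120) -> tuple:
    """Leading indicators for young cohorts; full outcomes once a typical cycle has elapsed."""
    age_days = (as_of - cohort_start).days
    return FINAL if age_days >= cycle_days else LEADING


as_of = date(2024, 6, 1)
print(metrics_to_report(date(2024, 1, 1), as_of))  # 152 days old: full outcomes
print(metrics_to_report(date(2024, 5, 1), as_of))  # 31 days old: leading indicators only
```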

How to improve lead quality

Once I can measure lead quality, the next step is to raise it - fewer junk leads and more ready buyers entering the pipeline.

I usually start with ICP and messaging clarity. If the ideal client profile is broad, targeting and content tend to invite “maybe” buyers. Tightening the ICP (industry, size, constraints, deal profile) and reflecting it directly in ads and landing pages can filter out poor-fit traffic before it becomes a sales task. For practical ways to pressure-test positioning before you overhaul anything, see The B2B Messaging Test: How to Validate Positioning Before a Redesign.

Next, I add qualification friction in the right places. Ultra-short forms can inflate lead counts while stripping away context. For higher-ticket services, asking for a few additional fields - company name, role/seniority, basic size band, and the primary challenge - often improves both fit and sales efficiency. The goal isn’t to interrogate; it’s to collect enough signal to route and prioritize intelligently. If you want a tighter bridge between intent and follow-up, see From MQL to SQL: Fixing Lead Quality With Intent-Based Forms and B2B Lead Gen Landing Pages: The 7 Blocks That Move Demo Requests.

Then I focus on speed-to-lead and routing. High-intent inquiries lose momentum quickly. When routing is slow or ambiguous, contact rates drop and “quality” looks worse than it really is. Clear ownership, fast first touch, and consistent logging help separate true low-quality leads from operational delays (see Lead Routing Speed: Why 15 Minutes Changes CAC).
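To check whether routing, rather than lead quality, is dragging contact rates down, one simple measurement is time to first touch. In this sketch the 15-minute threshold echoes the referenced article's title but is an assumption, as is the record layout. Leads that were never touched count against the threshold share.

```python
from datetime import datetime
from statistics import median

# Hypothetical inbound leads: (created_at, first_touch_at or None if never contacted).
INBOUND = [
    (datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 9, 7)),
    (datetime(2024, 3, 4, 10, 30), datetime(2024, 3, 4, 12, 0)),
    (datetime(2024, 3, 4, 14, 0), None),
    (datetime(2024, 3, 5, 8, 45), datetime(2024, 3, 5, 8, 55)),
]


def speed_to_lead(leads, threshold_minutes: float = 15) -> dict:
    """Minutes from lead creation to first touch, summarized."""
    delays = [
        (touched - created).total_seconds() / 60
        for created, touched in leads
        if touched is not None
    ]
    within = sum(1 for d in delays if d <= threshold_minutes)
    return {
        "touched": len(delays),
        # Denominator is all leads, so untouched leads count as misses.
        "within_threshold_share": within / len(leads) if leads else 0.0,
        "median_minutes": median(delays) if delays else None,
    }


print(speed_to_lead(INBOUND))
# {'touched': 3, 'within_threshold_share': 0.5, 'median_minutes': 10.0}
```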

I also pay attention to data validity and spam protection. Basic steps - like reducing duplicate creation, filtering obvious bot patterns, and ensuring key fields are captured reliably - can clean up the lead pool and make quality metrics more trustworthy. If bot traffic is a recurring issue in your inbound mix, start by learning how to spot bots before they hit your CRM.
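Below is a minimal sketch of basic validity checks on form submissions: duplicates by normalized email, a loose email format check, missing required fields, and a honeypot field. The required fields, the `website_hp` honeypot name, and the regex are assumptions. A loose regex like this only catches obviously malformed addresses; it does not verify that an address can receive mail.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # loose format check only
REQUIRED = ("email", "company", "role")               # hypothetical required fields

SUBMISSIONS = [
    {"email": "Ana@Example.com", "company": "Acme", "role": "CFO"},
    {"email": "ana@example.com ", "company": "Acme", "role": "CFO"},
    {"email": "not-an-email", "company": "", "role": "Ops"},
    {"email": "bot@spam.io", "company": "X", "role": "Y", "website_hp": "http://x"},
]


def validity_flags(submissions) -> list:
    """Return a list of flags per submission; an empty list means no issues found."""
    seen = set()
    results = []
    for sub in submissions:
        flags = []
        email = sub.get("email", "").strip().lower()  # normalize before comparing
        if not EMAIL_RE.match(email):
            flags.append("bad_email")
        if email in seen:
            flags.append("duplicate")
        seen.add(email)
        missing = [f for f in REQUIRED if not sub.get(f, "").strip()]
        if missing:
            flags.append("missing:" + ",".join(missing))
        if sub.get("website_hp"):  # hidden field that humans never see or fill
            flags.append("honeypot")
        results.append(flags)
    return results


print(validity_flags(SUBMISSIONS))
# [[], ['duplicate'], ['bad_email', 'missing:company'], ['honeypot']]
```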

Finally, I build sales feedback loops into the process: not just “good/bad lead,” but structured reasons that marketing can act on. If “not ICP” spikes from a channel, targeting and messaging need adjustment. If “good fit, no urgency” is common, follow-up strategy and content may be the issue.
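Structured reasons make this loop measurable. The sketch assumes a hypothetical log of (channel, disqualification reason) pairs and flags channels where "not ICP" makes up at least half of disqualifications. The reason codes and the 50% threshold are illustrative.

```python
from collections import Counter, defaultdict

# Hypothetical disqualification log: (channel, reason_code).
DQ_LOG = [
    ("paid_social", "not_icp"), ("paid_social", "not_icp"),
    ("paid_social", "no_budget"), ("paid_social", "not_icp"),
    ("paid_search", "no_timeline"), ("paid_search", "not_icp"),
    ("paid_search", "good_fit_no_urgency"),
]


def reason_shares(records) -> dict:
    """Share of each disqualification reason within each channel."""
    counts = defaultdict(Counter)
    for channel, reason in records:
        counts[channel][reason] += 1
    return {
        channel: {reason: n / sum(c.values()) for reason, n in c.items()}
        for channel, c in counts.items()
    }


def channels_to_review(records, reason: str = "not_icp", threshold: float = 0.5) -> list:
    """Channels where the given reason meets or exceeds the threshold share."""
    shares = reason_shares(records)
    return sorted(ch for ch, s in shares.items() if s.get(reason, 0.0) >= threshold)


print(channels_to_review(DQ_LOG))  # ['paid_social']
```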

Over time, this loop can raise average lead quality without needing more traffic - because the system gets better at attracting, identifying, and prioritizing the leads that are most likely to become revenue.

Continuously optimize based on outcomes

Lead quality isn’t static. Channels change, audiences shift, and the ICP evolves - especially as a firm moves upmarket. That’s why I treat lead quality measurement as a habit.

On a monthly cadence, I review cohort performance (conversion, CPQL, revenue per lead, and velocity), then make small, deliberate reallocations rather than dramatic swings. I adjust scoring and routing rules based on sales outcomes, and I keep an eye on validity indicators so the data stays reliable enough to support real decisions. Instead of running many simultaneous experiments, I prefer one or two controlled changes per cycle so I can read results without confounding variables.

I can also add a lightweight forecasting layer using the same funnel math. If I know a source typically produces a certain lead volume and predictable stage-to-stage conversion rates, I can estimate expected revenue over the full cycle. The forecast won’t be perfect, but it creates a shared language for leadership to discuss how today’s lead quality likely becomes future pipeline and revenue.
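The funnel math can be sketched as a product of stage-to-stage rates along the Lead → Qualified Lead → Meeting → Opportunity → Closed won model. The volume, rates, and revenue below are hypothetical inputs, and the function ignores timing (when in the cycle revenue actually lands), so it estimates totals rather than a month-by-month forecast.

```python
def forecast(monthly_leads: float, stage_rates: dict, avg_first_year_revenue: float, months: int = 1) -> dict:
    """Expected wins and revenue from a lead volume and stage-to-stage conversion rates."""
    lead_to_won = 1.0
    for rate in stage_rates.values():
        lead_to_won *= rate  # chain each stage transition into one lead-to-won rate
    wins = monthly_leads * months * lead_to_won
    return {"expected_wins": wins, "expected_revenue": wins * avg_first_year_revenue}


result = forecast(
    monthly_leads=80,
    stage_rates={
        "lead_to_qualified": 0.5,
        "qualified_to_meeting": 0.5,
        "meeting_to_opportunity": 0.5,
        "opportunity_to_won": 0.3,
    },
    avg_first_year_revenue=15_000,
)
print(round(result["expected_wins"], 2), round(result["expected_revenue"]))  # 3.0 45000
```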

Final thoughts: lead quality measurement is a growth strategy

When I strip away the jargon, measuring lead quality is about one thing: creating a simple, honest link between marketing activity and revenue, so growth doesn’t require burning cash - or burning out the sales team.

In practice, I can get surprisingly far quickly by doing four things: locking stage definitions, making sure every lead has a reliable source and basic fit data, reporting conversion and CPQL by cohort, and then making one or two focused changes that should improve quality (tighter ICP filters, clearer messaging, faster routing). Over a couple of cycles, the impact shows up where it matters: fewer wasted records, more pipeline from the same spend, and a clearer performance story that doesn’t depend on vanity metrics.

Lead quality measurement turns marketing from a black box into a controlled growth engine. Once the system is in place, every new campaign plugs into the same clear picture - and I can scale with much more confidence.
