Agentic AI and Brand Differentiation Economics

How AI agents flatten brand signals for many sites while raising the strategic value of structured data, on-site experiences, and first-party agents.

Agentic AI systems that read, summarize, and act across the web threaten to compress many brands into "just another data source." The central question is whether this shift permanently erodes brand differentiation and traffic value, or instead pushes differentiation into new technical and experiential layers that most marketers are not yet equipped to compete in.

Key Takeaways

- Expect AI agents to absorb a growing share of informational queries and simple tasks, treating many sites as interchangeable data sources. Model scenarios where 10-30% of current organic sessions never reach your pages and pressure-test your acquisition mix against that loss.

- For transactional brands, agents will favor whoever has the cleanest structured data, competitive pricing, and clear policies. SEO and PPC roadmaps should shift from "more content" toward feed quality, machine-readable product and service data, and explicit trust signals.

- For publishers and informational sites, ad-supported page views are at direct risk. Survivable models likely involve gated expertise, subscriptions, communities, or on-site agents that turn "readers" into "participants" rather than anonymous search traffic.

- Brand and voice will still matter, but primarily in the human-visible experience layer and in how your own agent negotiates with user agents. Plan for UX, interaction design, and agent design to take budget from generic content marketing.

- WordPress's AI roadmap moving in this direction signals that protocols like MCP and Agent2Agent are not fringe experiments. Marketers should treat agent-to-agent interaction as an emerging distribution channel on the same strategic level as search and social.

Situation Snapshot

- Trigger: A Search Engine Journal piece by Roger Montti, owner of Martinibuster.com, summarizes James LePage's essay on the "Agentic AI Web." LePage is Director of Engineering AI at Automattic and co-leads the WordPress AI Team, which coordinates AI integration in WordPress core [S1].

- LePage outlines a progression from today's AI search (Perplexity-style: gather → synthesize → present) toward agents that execute tasks and eventually act semi-autonomously based on user guidelines [S1].

- In this model, AI agents:

  - Visit sites primarily to extract data.

  - Synthesize content according to their own logic.

  - Present results in their own interface, often via summary rather than click-throughs.

- Result: LePage argues that "your website becomes a data source rather than an experience," and that for media and services, "your brand, your voice, your perspective...get flattened when an agent summarizes your content alongside everyone else's" [S1].

- As a counter-move, LePage describes sites deploying their own agents to represent their capabilities, constraints, and preferences to visiting user agents, enabled by emerging protocols like Model Context Protocol (MCP, now under the Linux Foundation) and Agent2Agent [S1][S2].

These points are broadly uncontested: agent frameworks are being built, WordPress is investing in them, and AI-mediated experiences are already live in search and assistants.

Breakdown & Mechanics

1. Why agentic AI flattens brand presentation

Traditional web flow:

User query → search results page → user chooses result → on-site UX and brand voice → conversion or bounce.

Brand differentiation has typically happened in:

- How you appear in the SERP (snippet, brand recognition).

- What happens once the user lands (design, tone, tools, guarantees).

Agentic flow:

User goal ("book a plumber," "summarize best project management tools") → user agent breaks the goal into tasks → agent crawls or queries multiple sites → synthesizes an answer → presents a single or small set of actions or results.

In this agentic model:

- The agent, not the brand, chooses which attributes to show (price, rating, features).

- Styling, narrative, and voice are removed or rewritten.

- Multiple brands are normalized into comparable attributes; differences shrink to numbers and short labels.

Mechanically, this is similar to comparison engines, but now it is:

- The default for many intent types, not a specific destination.

- Embedded directly at the assistant layer (chatbots, operating-system-level assistants, search AI overviews).
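The normalization step can be illustrated with a minimal Python sketch. It assumes a user agent has already pulled offers from three hypothetical plumbing sites; the field names, brands, and the price-then-rating ranking rule are illustrative assumptions, not how any particular agent works. Each brand's tagline is discarded before ranking, which is the flattening described above.

```python
from dataclasses import dataclass


@dataclass
class RawOffer:
    """What a hypothetical site exposes: structured fields plus brand copy."""
    brand: str
    tagline: str        # brand voice; dropped during normalization
    price: float
    rating: float       # 0-5 stars
    regions: set[str]


@dataclass(frozen=True)
class Attributes:
    """The comparable attributes the agent keeps after normalization."""
    brand: str
    price: float
    rating: float


def normalize(offer: RawOffer) -> Attributes:
    # Styling, narrative, and tagline are dropped; only numbers and labels remain.
    return Attributes(offer.brand, offer.price, offer.rating)


def answer(goal_region: str, offers: list[RawOffer], top_n: int = 1) -> list[Attributes]:
    # Filter by the user's constraint, normalize, then rank: cheapest first,
    # higher rating breaks ties (an assumed ranking rule).
    eligible = [normalize(o) for o in offers if goal_region in o.regions]
    ranked = sorted(eligible, key=lambda a: (a.price, -a.rating))
    return ranked[:top_n]


offers = [
    RawOffer("PipeCo", "Family-run since 1962", 120.0, 4.8, {"north", "south"}),
    RawOffer("FlowFix", "Fast, friendly, fair", 95.0, 4.1, {"north"}),
    RawOffer("DrainPro", "The premium choice", 95.0, 4.6, {"north"}),
]
print(answer("north", offers))  # → [Attributes(brand='DrainPro', price=95.0, rating=4.6)]
```

Nothing in the taglines can influence the result; a brand wins or loses on the attributes the agent chose to keep.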

2. Why control shifts away from site owners

In LePage's framing, control over presentation moves from:

Publisher: content + UX → AI agent: logic + interface → user.

Publisher influence is weakest at the point of synthesis because:

- Agents rely on their own ranking and summarization models.

- Legal and safety concerns push them toward risk-averse, consensus-style outputs, which further flatten differentiation.

- Unless a site exposes a machine interface that expresses its own rules and priorities, it is just content in a larger pool.
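A machine interface of this kind could look like the tool descriptor below. MCP servers advertise tools with a `name`, a `description`, and an `inputSchema` written in JSON Schema; the tool itself (`check_service_availability`), its parameters, and the policy wording are hypothetical examples for a plumbing business, and a real server would normally be built with an MCP SDK rather than hand-written dictionaries.

```python
import json

# Hypothetical tool a site could expose over MCP. The three top-level keys
# (name, description, inputSchema) follow the shape MCP uses for tool listings;
# everything inside them is an illustrative assumption.
check_availability_tool = {
    "name": "check_service_availability",
    "description": (
        "Returns open appointment slots for emergency plumbing. "
        "Service area: postcodes 1000-1999 only. Quotes are binding for 24 hours."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "postcode": {"type": "string", "description": "Customer postcode"},
            "date": {"type": "string", "format": "date"},
        },
        "required": ["postcode"],
    },
}


def missing_required(tool: dict, arguments: dict) -> list[str]:
    """Return required argument names that a visiting agent failed to supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


print(json.dumps(check_availability_tool, indent=2))
print(missing_required(check_availability_tool, {"date": "2026-02-01"}))  # → ['postcode']
```

The site's rules (service area, quote validity) sit in the description and schema an agent reads directly, rather than in page copy the agent may rewrite or ignore.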

3. The proposed counter: site-side agents and protocols

LePage's counterproposal is that if user agents represent users, sites need agents that represent entities.

A site agent:

- Ingests and understands the site's content, products, and policies.

- Negotiates with visiting user agents to:

  - Answer questions.

  - Provide structured offers.

  - Signal constraints (for example, stock limits, regions served).

  - Potentially negotiate terms or bundles.

Conceptually:

User → user agent → site agent or agents via protocol (MCP or Agent2Agent) → agreement (purchase, booking, answer).
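The exchange can be sketched as a request/response pair. This minimal Python example uses a hypothetical message format (`AgentRequest`, `SiteResponse`), not the actual MCP or Agent2Agent wire formats. It shows a site agent answering with either a structured offer or an explicit constraint (region not served, insufficient stock), which is the information a user agent needs to reach an agreement.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AgentRequest:
    """What a visiting user agent asks for (hypothetical schema)."""
    sku: str
    quantity: int
    region: str


@dataclass
class SiteResponse:
    """Either a structured offer or a machine-readable refusal reason."""
    accepted: bool
    unit_price: Optional[float] = None
    delivery_days: Optional[int] = None
    constraint: Optional[str] = None


# Site-side state only this agent can see (live inventory, regions served).
INVENTORY = {"desk-oak": {"price": 349.0, "stock": 4, "delivery_days": 5}}
REGIONS_SERVED = {"US", "CA"}


def site_agent(req: AgentRequest) -> SiteResponse:
    if req.region not in REGIONS_SERVED:
        return SiteResponse(False, constraint=f"region {req.region} not served")
    item = INVENTORY.get(req.sku)
    if item is None:
        return SiteResponse(False, constraint=f"unknown sku {req.sku}")
    if req.quantity > item["stock"]:
        return SiteResponse(False, constraint=f"only {item['stock']} in stock")
    return SiteResponse(True, unit_price=item["price"], delivery_days=item["delivery_days"])


print(site_agent(AgentRequest("desk-oak", 2, "US")))
print(site_agent(AgentRequest("desk-oak", 10, "US")))
```

Because a refusal carries a reason, the user agent can adjust the request (fewer units, a different region) instead of simply moving on to a competitor's data.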

Under this model, differentiation moves into:

- The fidelity and richness of what your agent can express.

- Your policies, service levels, and pricing.

- Any proprietary data or tools only your agent can access (for example, live capacity, custom configurations).

This looks closer to API-led commerce and B2B integrations than traditional SEO, but applied to consumer journeys.

4. Why informational sites are structurally exposed

LePage notes that transactional flows fit this model well, while for media and services it is more complex [S1]. Mechanically:

- Transactional brands still have a clear value exchange: an agent completes a purchase or booking, the brand delivers the product or service, and the margin on that sale funds the business.

- Informational sites often trade content for ad views or lead capture. If the AI agent surfaces the information without a click, that trade breaks: no page view, no ad, no email sign-up.

- Agents tend to compress competing viewpoints into one neutralized answer, exactly where media and expert voices aim to stand out.

The result is that informational content becomes substrate for AI outputs rather than a destination, and differentiation on style or editorial slant is heavily discounted.

Impact Assessment

Organic search and content

Direction: Downward pressure on informational organic traffic; mixed impact on high-intent brand and product queries.

Likely effect: Over a 3-5 year horizon, it is reasonable to scenario-plan for 10-30% of search-driven informational sessions to be intercepted by AI overviews or agents. That range is a modeling assumption, but it is consistent with early evidence from AI overview experiments and the logic of one synthesized answer instead of many blue links.
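The 10-30% range translates into a simple what-if model. The baseline sessions, pages per session, and RPM below are hypothetical placeholders to be replaced with your own analytics; the sketch only shows the arithmetic of applying an interception rate to ad-supported informational traffic.

```python
def scenario(
    monthly_sessions: int,
    pages_per_session: float,
    rpm: float,  # ad revenue per 1,000 page views
    interception_rate: float,
) -> dict[str, float]:
    """Estimate sessions and ad revenue left after agents intercept a share of visits."""
    if not 0.0 <= interception_rate <= 1.0:
        raise ValueError("interception_rate must be between 0 and 1")
    baseline = monthly_sessions * pages_per_session / 1000.0 * rpm
    remaining = monthly_sessions * (1.0 - interception_rate)
    revenue = remaining * pages_per_session / 1000.0 * rpm
    return {
        "sessions": remaining,
        "ad_revenue": revenue,
        "revenue_lost": baseline - revenue,
    }


# Hypothetical site: 500,000 informational sessions/month, 1.6 pages each, $12 RPM.
for rate in (0.10, 0.20, 0.30):
    result = scenario(500_000, 1.6, 12.0, rate)
    print(f"{rate:.0%}: {result['sessions']:,.0f} sessions, "
          f"${result['ad_revenue']:,.0f} revenue, ${result['revenue_lost']:,.0f} lost")
```

In this simplified model, lost revenue scales linearly with the interception rate; a fuller model would also vary RPM, since the remaining, higher-intent visitors may monetize differently.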

Winners:

- Sites with strong structured data (Schema.org, product or service feeds, clear metadata) that agents can parse reliably.

- Brands with distinctive, machine-readable signals of authority (citations, well-structured references, clear author identity).

Losers:

- Thin affiliates and generic informational blogs that depend heavily on ad impressions from commodity queries.

- Mid-tier comparison and review properties whose main function, synthesizing options, is directly replicated by agents.

Actions and watchpoints:

- Reclassify content by "agent risk": which topics are easily answered by an AI summary versus which require proprietary tools, data, or opinion.

- Shift new content investment toward assets that either:

  - Are inherently experiential (for example, calculators, configurators, interactive demos).

  - Contain proprietary or hard-to-replicate data.
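One way to operationalize the "agent risk" audit is a rough scoring rule over a content inventory. The three signals and their weights below are assumptions chosen for illustration, not an established method; the useful output is the ordering, which flags the pages an AI summary could most easily replace.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    answerable_in_summary: bool   # can a short factual answer satisfy the query?
    has_proprietary_data: bool    # original data, pricing, or research
    is_interactive: bool          # calculator, configurator, demo


def agent_risk(page: Page) -> int:
    """Higher score = easier for an agent to replace (0-3, assumed weights)."""
    score = 0
    if page.answerable_in_summary:
        score += 2
    if not page.has_proprietary_data:
        score += 1
    if page.is_interactive:
        score -= 1
    return max(score, 0)


# Hypothetical inventory for a home-energy site.
inventory = [
    Page("/what-is-a-heat-pump", True, False, False),
    Page("/heat-pump-savings-calculator", False, False, True),
    Page("/2025-install-cost-survey", True, True, False),
]
for page in sorted(inventory, key=agent_risk, reverse=True):
    print(agent_risk(page), page.url)
```

The generic explainer scores highest, while the calculator and the original survey score lower, matching the two investment categories listed above.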

Paid search and performance media

Direction: Mixed. Cost-per-click on some generic queries could soften as agents satisfy those intents, while auctions around agent-integrated placements and sponsored options may emerge.

For product and service marketers:

- Agents will likely behave like hyper-rational meta-shoppers, focusing on price, service levels, and trust signals.

- Feed completeness, live inventory, and policy clarity (returns, warranties, SLAs) will be as important as ad copy.
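Feed completeness and policy clarity can be expressed directly in Schema.org markup. The sketch below builds a JSON-LD `Product` with an `Offer` carrying price, availability, and a `MerchantReturnPolicy`. The product, price, brand, and 30-day window are invented, the single-country policy is a simplifying assumption, and the properties a given search engine or agent actually reads should be checked against Schema.org and each platform's documentation.

```python
import json


def product_jsonld(name: str, sku: str, brand: str, price: float,
                   currency: str, in_stock: bool, return_days: int) -> str:
    """Build Schema.org Product markup that crawlers and agents can parse."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": ("https://schema.org/InStock" if in_stock
                             else "https://schema.org/OutOfStock"),
            "hasMerchantReturnPolicy": {
                "@type": "MerchantReturnPolicy",
                "applicableCountry": "US",  # assumed single-country policy
                "returnPolicyCategory": "https://schema.org/MerchantReturnFiniteReturnWindow",
                "merchantReturnDays": return_days,
                "returnFees": "https://schema.org/FreeReturn",
            },
        },
    }
    return json.dumps(data, indent=2)


# The output would be embedded in a <script type="application/ld+json"> tag on the page.
print(product_jsonld("Oak Standing Desk", "desk-oak", "ExampleCo", 349, "USD", True, 30))
```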

For lead generation:

- Agents can pre-qualify prospects and book directly, which shortens or bypasses lead forms and reduces time spent on landing pages.

- In mature categories, expect more API-based handoffs and fewer educational landing pages.

Actions and watchpoints:

- Treat product feeds, business profiles, and structured policies as primary PPC assets, not back-office afterthoughts.

- Track new ad formats inside assistant or agent environments (for example, sponsored providers in agent recommendations) and model their cannibalization of existing search and shopping spend.

Brand, creative, and UX

Direction: Experience becomes the main differentiator for humans who still visit; brand voice migrates from article copy to interaction flows.

Implications:

- Expect fewer but higher-intent human visits; these users will expect richer experiences than a static article.

- On-site AI (chat, configurators, personalized content flows) shifts from nice-to-have to core brand expression.

Actions:

- Prioritize a small number of high-value journeys (for example, complex purchase, onboarding, diagnosis) and design them as interactive experiences, not just content pages.

- Define brand guidelines not only for text and visuals, but for how your own agent should speak, prioritize trade-offs, and handle negotiation.
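Brand guidelines for an agent can be partly encoded as configuration the agent consults before it answers. In this hypothetical sketch, the guideline fields (tone, maximum discount, fallback perk) are assumptions, and the negotiation rule is deliberately simple: concede down to a price floor, then offer a non-price trade-off instead of undercutting further.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentGuidelines:
    tone: str             # style instruction for generated replies (not used by the pricing rule below)
    max_discount: float   # largest price concession allowed (0.10 = 10%)
    fallback_perk: str    # non-price trade-off offered once the floor is reached


def respond_to_discount(list_price: float, requested_price: float,
                        rules: AgentGuidelines) -> tuple[float, str]:
    """Return (price offered, message) for a user agent's counter-offer."""
    floor = list_price * (1 - rules.max_discount)
    if requested_price >= floor:
        return requested_price, "Accepted."
    # Hold the floor and offer the brand's preferred trade-off instead.
    return round(floor, 2), f"Best price is {floor:.2f}; we can add {rules.fallback_perk}."


rules = AgentGuidelines(tone="plain, warm, no hype", max_discount=0.10,
                        fallback_perk="free installation")
print(respond_to_discount(349.0, 330.0, rules))
print(respond_to_discount(349.0, 290.0, rules))
```

Encoding the floor and the preferred trade-off makes the brand's priorities explicit, so the agent defends margin in a way the brand has chosen rather than one it improvises.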

Operations, data, and governance

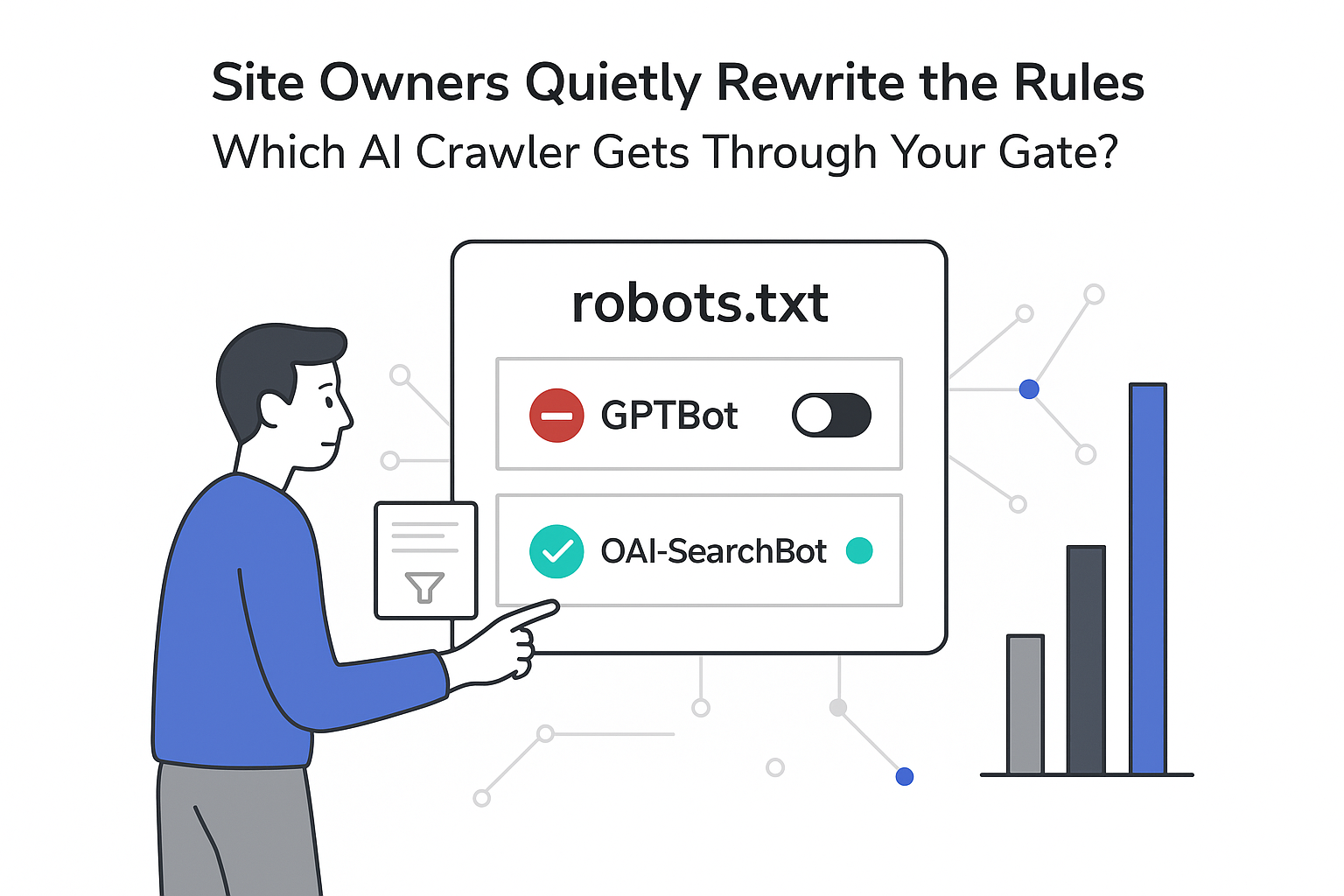

Direction: More emphasis on machine-to-machine interfaces and policy definition; less reliance on page-level analytics alone.

Actions:

- Inventory where your data lives and how an agent would access it, including APIs, feeds, structured markup, and documentation.

- Start logging and analyzing non-human traffic (AI crawlers and agent calls) separately, and treat it as its own channel with its own KPIs, such as the number of agent queries answered and tasks completed.
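Separating non-human traffic can start with user-agent matching in server logs. The tokens below are published crawler or agent identifiers from several AI providers, but the list is incomplete and changes over time, user-agent strings can be spoofed, and the tab-separated log format and sample lines are simplified, made-up assumptions. Treat this as a starting point for a separate channel report, not a verified detector.

```python
from collections import Counter

# Illustrative, non-exhaustive user-agent tokens; verify against each vendor's documentation.
AI_AGENT_TOKENS = {
    "GPTBot": "openai-crawler",
    "OAI-SearchBot": "openai-search",
    "ChatGPT-User": "openai-user-agent",
    "ClaudeBot": "anthropic-crawler",
    "PerplexityBot": "perplexity-crawler",
    "CCBot": "commoncrawl-crawler",
}


def classify(user_agent: str) -> str:
    """Map a user-agent string to a channel label (case-insensitive substring match)."""
    ua = user_agent.lower()
    for token, label in AI_AGENT_TOKENS.items():
        if token.lower() in ua:
            return label
    return "human-or-other"


def channel_kpis(log_lines: list[str]) -> Counter:
    """Count requests per channel; assumes the user agent is the last tab-separated field."""
    return Counter(classify(line.rsplit("\t", 1)[-1]) for line in log_lines)


# Made-up, simplified log lines: "<request>\t<user agent>".
logs = [
    "GET /pricing\tMozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/128.0",
    "GET /pricing\tMozilla/5.0 (compatible; GPTBot/1.1)",
    "GET /docs\tMozilla/5.0 (compatible; ChatGPT-User/1.0)",
    "GET /docs\tMozilla/5.0 (compatible; ClaudeBot/1.0)",
]
print(channel_kpis(logs))
```

Distinguishing bulk crawlers from user-triggered fetches (such as `ChatGPT-User`) matters for KPIs, because only the latter corresponds to a live user task.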

Scenarios & Probabilities

These are scenario models, not forecasts; the labels indicate relative likelihood based on current signals.

Base scenario - partial agentic shift (likely)

- Timeframe: 3-5 years.

- AI agents become a standard option inside major search engines, assistants, and some vertical tools, but do not replace traditional search entirely.

- 20-40% of informational and comparison intents see significant agent mediation; high-intent product and brand queries are less affected.

- Marketers manage a mixed environment: some journeys dominated by pages, others by agents.

- Outcome: Noticeable but uneven organic-traffic decline for informational content; transactional brands see more API and agent-driven conversions.

Upside scenario - managed integration (possible)

- Strong norms and regulation emerge around attribution, traffic sharing, and monetization for content used by agents.

- Major agents integrate with site-side agents and structured APIs by default, allowing brands to influence representation and negotiate terms.

- New monetization formats, such as paid premium data feeds and agent plug-ins, give both brands and publishers replacement revenue streams.

- Outcome: Traffic volume is lower, but revenue per exposure rises for brands that build solid agent and data infrastructure.

Downside scenario - agent gatekeepers dominate (edge)

- A small number of agent platforms concentrate user attention and transactions, tightly controlling which sources they use and how they are paid, if at all.

- Opting out of agent access sharply reduces visibility; opting in yields data extraction with weak economic returns for most publishers.

- Informational and mid-market media properties see sustained double-digit traffic and revenue declines with limited substitutes.

- Outcome: Consolidation around a few large brands and platforms; many independent publishers exit or downsize.

Risks, Unknowns, Limitations

- Adoption speed: It is unclear how fast mainstream users will trust agents with high-stakes tasks (for example, finances, health, legal), which affects how much of the funnel moves into agent environments.

- Platform behavior: The economics for publishers depend heavily on how OpenAI, Google, Microsoft, and others design revenue sharing, attribution, and opt-out mechanisms; those policies are still fluid.

- Technical standards: Protocols like MCP and Agent2Agent are early; real-world interoperability, security guarantees, and governance are still being tested [S2].

- Data and numbers: There is limited public, long-term data on how AI overviews and agents change click behavior across categories. Any percentage figures above are scenario assumptions, not measured outcomes.

- Model behavior: Agent summarization quality, bias toward large brands, and susceptibility to manipulation are open questions; any of these could shift which players benefit.

This analysis would be weakened or falsified if:

- Empirical data show stable or rising click-through rates to a broad diversity of sites even as AI overviews and agents scale.

- User studies reveal strong, lasting preference for direct site browsing over agent-mediated answers, keeping agents niche.

- Agent protocols evolve in a way that gives publishers more control over representation and monetization than current trajectories suggest.

Sources

- [S1] Roger Montti, Search Engine Journal, January 2026, article: "Why Agentic AI May Flatten Brand Differentiators."

- [S2] Linux Foundation, 2025, announcement: "Model Context Protocol (MCP) joins the Linux Foundation as a neutral standard for connecting AI models to tools and data."
